Surface Haptics: A Safer Way for Drivers to Operate Smooth-Surface Controls

Deep changes in technology capability and consumer expectations are leading experts in automotive interior design and user interaction to reimagine the cabin of tomorrow’s car. Dramatic improvements in data processing, network bandwidth, wireless communication, digitization, display size and resolution, and multi-sensor awareness of the environment in and around the car are bringing about a new conception of functions inside the car. In the future, the cabin will likely become simultaneously a cockpit, workstation, communications hub, and entertainment center.

In this automobile of the future, the user will continually interact with the car’s technologies for driving, comfort, navigation, communication, and entertainment. To simplify and enhance the interface for controlling these features, designers are experimenting with layouts in which a large touchscreen display dominates the dashboard, including a “pillar-to-pillar” concept in which display surfaces stretch across the entire width of the cabin. Everywhere, smooth surfaces are in vogue, free of mechanical knobs, buttons, or switches that would break the smooth lines of glass, plastic, and wood (Fig. 1).

Automotive designers favor this sleek design style in part because it results in a more streamlined interior. The absence of mechanical actuators suits automotive OEMs because it cuts down on the number of parts to be assembled, which lowers assembly and materials costs, shrinks inventory, and reduces exposure to failure modes such as mechanical wear and tear over time.

However, this new, larger, featureless user interface presents two novel problems for the car’s interaction designer to solve:

- Allow the driver to operate control functions via the touchscreen display while keeping their eyes on the road.

- Make the user experience feel intuitive and engaging when the main vector for sensory engagement with cabin controls is between a finger and a flat plate of glass.

These are the problems that a new solid-state technology for touchscreen surface haptics addresses. The innovation reduces the need for visual guidance and turns interaction with a flat, lifeless touchscreen into a richly tactile experience.

How to Remove Buttons and Enable Software Programmability—and Keep the Driver Safe?

The driver’s primary responsibility is safety, which means that the periods in which their eyes are off the road must be both brief and infrequent. A 2015 study published in the Journal of Transportation Safety and Security found that when a driver looks away from the road for longer than two seconds, the risk of a crash increases by a factor of between four and 24. Recently, a German court suspended the license of a Tesla driver who hit some trees while trying to adjust the windshield-wiper speed using the touchscreen.

The operation of traditional, mechanical buttons and switches has helped to reduce this risk by providing primarily tactile rather than visual forms of guidance and feedback to the driver.

However, in cabin designs based on large, multiple, or curved pillar-to-pillar displays, many if not all user controls are implemented as virtual dials or switches on a touchscreen. Today’s touchscreen displays require primarily visual rather than tactile navigation, forcing the driver’s eyes to leave the road and thus compromising safety.

Where tactile feedback is given to the user, it comes in the form of vibrotactile haptics, which typically confirm that, for instance, a button press has been registered by the system. Because the vibration is typically felt across the panel rather than at a specific spot under the finger, it would be very difficult for vibration-based haptics to let users navigate the surface or sort through a menu by feel at their fingertips.

As mechanical buttons and switches demonstrate, user controls and menu navigation in the car are best operated by feel, supported by audio feedback, rather than by vision. A better principle for controlling functions on an automotive touchscreen isn’t “look, press, and lift,” but “feel, hold, and swipe/turn.”

Yet how can the featureless glass surface of a display provide the required range of tactile cues, matched to the touch-sensing and audio systems? Further, how can this surface be configured for any type of user input, enabling on/off control, adjustment on a sliding scale, and menu navigation by feel and sound?

Surface-haptic technology offers precisely this solution. It can render an almost infinite palette of tactile textures on a standard display’s cover glass, as well as on other materials, and the textures can be easily and precisely coordinated in time and place with the display’s touch sensor, graphics, and audio content. Because it’s a solid-state haptic technology, it has no moving parts, and the display assembly itself stays motionless.

Electroadhesion and Software-Defined Texture

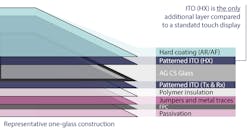

The technology works on the principle of electroadhesion. One way to implement it is by controlling the voltage on a set of conductive electrodes, which can be made transparent for display applications by using ITO (indium tin oxide, the same material used in a touchscreen sensor). These haptic electrodes are positioned on the surface of the touch-panel glass (Fig. 2). The applied voltage creates an electrostatic attraction that draws the fingertip toward the surface, so the friction that naturally occurs when a finger moves across smooth glass can be amplified and modulated to create the feel of a range of textures.
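As a rough illustration of why voltage changes friction, a common textbook simplification treats the fingertip and the electrode as the two plates of a parallel-plate capacitor: the electrostatic attraction adds to the finger’s own pressing force, and Coulomb friction scales with the total. The Python sketch below models that assumption only. The function names and all parameter values (contact area, dielectric gap, permittivity, friction coefficient, press force) are hypothetical and are not taken from Tanvas or any product.

```python
import math

EPSILON_0 = 8.854e-12  # vacuum permittivity, in F/m


def electrostatic_force(voltage_v: float, area_m2: float, gap_m: float,
                        rel_permittivity: float) -> float:
    """Parallel-plate estimate of finger-to-electrode attraction, in newtons.

    Assumption: skin and insulating layer act as one uniform dielectric gap.
    Real finger contact is far less ideal; this only shows the scaling.
    """
    return EPSILON_0 * rel_permittivity * area_m2 * voltage_v ** 2 / (2.0 * gap_m ** 2)


def friction_force(normal_force_n: float, voltage_v: float, mu: float = 0.5,
                   area_m2: float = 1e-4, gap_m: float = 10e-6,
                   rel_permittivity: float = 4.0) -> float:
    """Coulomb friction with the electrostatic force added to the pressing force.

    Default parameter values are hypothetical, chosen only for illustration.
    """
    extra = electrostatic_force(voltage_v, area_m2, gap_m, rel_permittivity)
    return mu * (normal_force_n + extra)


def friction_under_ac_drive(normal_force_n: float, amplitude_v: float,
                            freq_hz: float, t_s: float) -> float:
    """Friction at time t for a sinusoidal drive voltage.

    Force depends on voltage squared, so the voltage's sign does not matter
    and the friction ripple repeats at twice the drive frequency.
    """
    v = amplitude_v * math.sin(2.0 * math.pi * freq_hz * t_s)
    return friction_force(normal_force_n, v)


if __name__ == "__main__":
    press = 0.5  # N, a light fingertip press (hypothetical)
    for volts in (0.0, 50.0, 100.0):
        print(f"{volts:5.0f} V -> friction {friction_force(press, volts):.3f} N")
```

The quadratic dependence on voltage is the main takeaway: doubling the drive voltage roughly quadruples the added attraction in this model, which is why a controller can produce large changes in friction from modest changes in drive amplitude.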

The electroadhesion-based technology provides fine control over the feel of the touch surface. Software can program the visual appearance, the feel, and even the sound the finger makes as it glides across the surface, including the feel of flipping a toggle switch, the click of a dial, and a range of textures from grainy to fine.
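To make “the click of a dial” concrete, one simple approach (an illustrative assumption, not a description of Tanvas’s software) is to compute the finger’s angle around a virtual dial’s center and raise the friction level briefly whenever that angle passes a detent. The function names, detent spacing, window width, and intensity levels below are all hypothetical.

```python
import math


def finger_angle_deg(x: float, y: float, center_x: float, center_y: float) -> float:
    """Angle of the touch point around the dial center, normalized to 0-360 degrees.

    Note: with screen coordinates (y pointing down) the angle grows clockwise.
    """
    return math.degrees(math.atan2(y - center_y, x - center_x)) % 360.0


def detent_intensity(angle_deg: float, spacing_deg: float = 30.0,
                     width_deg: float = 3.0, base: float = 0.1,
                     peak: float = 1.0) -> float:
    """Normalized friction level (0..1) for a dial with evenly spaced detents.

    Friction stays at a low base level between detents and jumps to the peak
    level inside a narrow window centered on each detent, which a moving
    fingertip would feel as a series of clicks.
    """
    offset = angle_deg % spacing_deg               # position within the current step
    distance = min(offset, spacing_deg - offset)   # angular distance to nearest detent
    return peak if distance <= width_deg / 2.0 else base


if __name__ == "__main__":
    # Sweep a fingertip a quarter turn around a dial centered at (100, 100).
    for step in range(0, 91, 5):
        theta = math.radians(step)
        x, y = 100 + 40 * math.cos(theta), 100 + 40 * math.sin(theta)
        angle = finger_angle_deg(x, y, 100, 100)
        print(f"{angle:6.1f} deg -> intensity {detent_intensity(angle):.1f}")
```

A hard step between two levels is the simplest choice; a designer could just as well shape each detent as a short ramp, or vary the spacing to signal the end of a range.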

Textures and effects are conceived as images that may be linked to a display graphic (Fig. 3). Software automatically converts the designer’s image into code executed by the surface touch controller, so that the image is rendered as a texture on the screen surface.
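A minimal sketch of the image-to-texture idea, assuming a grayscale image in which 0 means “smooth glass” and 255 means maximum added friction: the controller looks up the pixel under the fingertip and sets the drive amplitude accordingly. Because the electrostatic force in electroadhesion grows with the square of the applied voltage, the sketch takes a square root so that the added friction force scales linearly with gray level. The image format, the `max_voltage` value, and every function name here are hypothetical; the article does not describe how Tanvas’s software actually performs the conversion.

```python
import math
from typing import List

Image = List[List[int]]  # rows of 8-bit gray levels: 0 = smooth, 255 = maximum friction


def make_stripes(width: int, height: int, period_px: int) -> Image:
    """Hypothetical texture: vertical stripes that feel like a ribbed surface."""
    row = [255 if (col % period_px) < period_px / 2 else 0 for col in range(width)]
    return [list(row) for _ in range(height)]


def sample_texture(image: Image, x: float, y: float) -> int:
    """Gray level of the pixel under the touch point, clamped to the image bounds."""
    height, width = len(image), len(image[0])
    col = min(max(math.floor(x), 0), width - 1)
    row = min(max(math.floor(y), 0), height - 1)
    return image[row][col]


def drive_amplitude(image: Image, x: float, y: float, max_voltage: float = 100.0) -> float:
    """Voltage amplitude for the touch point.

    Electroadhesive force grows with voltage squared, so the square root makes
    the added friction force proportional to the gray level.
    """
    gray = sample_texture(image, x, y)
    return max_voltage * math.sqrt(gray / 255.0)


if __name__ == "__main__":
    texture = make_stripes(width=16, height=4, period_px=4)
    # A fingertip swiping left to right across the middle of the texture.
    for x in range(16):
        print(x, round(drive_amplitude(texture, x + 0.5, 2.0), 1))
```

In practice a friction change like this is generally felt only while the finger slides, which fits the “feel, hold, and swipe/turn” model: the texture reveals itself as the finger moves across the graphic.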

A New World of Opportunity in Interaction Design

Because surface-haptic technology is almost infinitely configurable, user experience and interaction designers can rethink the user interface in the car’s cabin by making the fingers, rather than the eyes, the primary vector of control, input, and feedback. This frees the driver’s eyes to focus on the road ahead.

Unlike vibrotactile haptics, surface haptics can be implemented on an automotive display of any size or shape and a variety of surfaces including wood, plastics and ceramics. Effects are easily customized and configured to provide a tactile experience that can reflect the unique characteristics of the brand and be combined with vibration and audio to create a more holistic experience.

Car designers now have the creative freedom to implement haptic branding, in which textures and tactile effects on multiple surfaces in the cabin, from the display to the door handles, carry the brand’s own haptic signature. With such a highly programmable platform, the manufacturer can deliver new effects through over-the-air updates and even allow end users to configure haptic effects.

Now, the designer’s vision for a sleek, touchscreen-display-based user interface to everything in the car can be implemented without introducing a safety risk, and in a way that’s unique to each brand, engaging the human’s primal response to touch and texture.

Phillip LoPresti is CEO of Tanvas Inc.