This article is part of our GTC Fall 2021 coverage.

What you'll learn:

- The technologies behind NVIDIA's Drive Hyperion 8 development platform.

- How AI not only improves safety, but also boosts performance.

- How the AI-driven "Drive Concierge" simplifies parking.

A safe, dependable foundation must be at the core of autonomous vehicles. For NVIDIA's new Drive Hyperion 8, an end-to-end development platform for self-driving system design, that foundation is a functionally safe AI compute platform built on two NVIDIA Drive Orin systems-on-a-chip (SoCs) for redundancy, with enough computing power for level 4 self-driving and intelligent cockpit capabilities.

The Drive Hyperion 8 technology is further supported by sensors from a wide range of suppliers, including Continental, Hella, Luminar, Sony, and Valeo. The production platform, which is available today for 2024 vehicle models, is designed to be open and modular, so customers can adopt only what they need, from core compute and middleware all the way to level 3 driving, level 4 parking, and AI cockpit capabilities.

Automaker Lotus, autonomous-driving solutions provider QCraft, and EV startups Human Horizons and WM Motor are using the platform in their next-generation software-defined vehicles. Baidu, a Chinese self-driving technology developer, is integrating Drive Orin into Sanxian, the third generation of its Apollo Computing Unit autonomous-driving platform. Drive Orin packs 17 billion transistors and is the result of four years of R&D investment; integrating it into Sanxian helps improve the platform's driving performance and safety while accelerating mass production.

In addition, autonomous trucking company Plus announced plans to transition to Drive Orin beginning next year. Its self-driving system, known as PlusDrive, can be retrofitted to existing trucks. These companies join global automakers such as Mercedes-Benz and Volvo Cars, as well as EV startups like NIO, that had previously committed to Drive Hyperion 8.

254-TOPS Performance

The Orin SoC achieves 254 trillion operations per second (TOPS) and is designed to handle the large number of applications and deep neural networks (DNNs) that run simultaneously in AVs, while meeting systematic safety standards such as ISO 26262 ASIL-D.

While AI is a key enabler of autonomous safety and convenience, it can also amp up driving. For those who prefer to stay behind the wheel, Lotus is using Drive Orin to develop intelligent driving technology designed for the track. With the centralized computing capabilities of Drive Orin and redundant, diverse DNNs, Lotus vehicles can reach their peak driving performance.

As proof of concept during his GTC keynote, NVIDIA CEO Jensen Huang showed a Drive Hyperion 8 vehicle driving autonomously from the company’s headquarters in Santa Clara, Calif., to Rivermark Plaza in San Jose (for more on the keynote, see the video GTC November 2021 Keynote with Jensen Huang).

By including a complete sensor setup on top of centralized compute and AI software, Drive Hyperion 8 provides what's needed to validate an intelligent vehicle's hardware on the road. Its sensor suite comprises 12 cameras, nine radars, 12 ultrasonic sensors, and one front-facing LiDAR sensor. The long-range Luminar Iris sensor will provide the front-facing LiDAR capability, using a custom architecture to meet stringent performance, safety, and automotive-grade requirements.

The radar suite includes Hella short-range and Continental long-range and imaging radars for redundant sensing. Sony and Valeo cameras provide visual sensing. In addition, ultrasonic sensors from Valeo measure object distance.
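For teams building test harnesses or inventory checks around a kit like this, it can help to capture the sensor suite as plain data. The sketch below is hypothetical: `SensorGroup`, `HYPERION8_SENSORS`, and the helper functions are illustrative names, not an NVIDIA configuration format. Only the counts and suppliers come from the description above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorGroup:
    kind: str         # sensor modality
    count: int        # number of units in the kit
    suppliers: tuple  # suppliers named for this modality


# Hypothetical inventory mirroring the counts and suppliers described
# for the Drive Hyperion 8 sensor suite (not an official config file).
HYPERION8_SENSORS = (
    SensorGroup("camera", 12, ("Sony", "Valeo")),
    SensorGroup("radar", 9, ("Hella", "Continental")),
    SensorGroup("ultrasonic", 12, ("Valeo",)),
    SensorGroup("lidar", 1, ("Luminar",)),
)


def total_sensors(groups):
    """Total number of sensor units across all modalities."""
    return sum(g.count for g in groups)


def modalities_from(groups, supplier):
    """Modalities to which a given supplier contributes."""
    return [g.kind for g in groups if supplier in g.suppliers]


print(total_sensors(HYPERION8_SENSORS))             # 34
print(modalities_from(HYPERION8_SENSORS, "Valeo"))  # ['camera', 'ultrasonic']
```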

The Drive Hyperion 8 developer kit also includes NVIDIA Ampere architecture GPUs, which, according to the company, deliver enough headroom for developers to test and validate new software capabilities.

Parking and Voice Assistance

NVIDIA also unveiled two AI platforms aimed at removing the stress and hassle of everyday driving. Drive Concierge simplifies parking, whether it's parallel, perpendicular, or angled, and Drive Chauffeur offers autonomous driving.

Drive Concierge is an AI-driven software assistant that combines conversational voice assistance with driver monitoring and autonomous parking technology. Drive AGX Orin is the processor behind both Drive Concierge and Drive Chauffeur.

Drive Concierge can alert the user if the cabin cameras detect someone has left a bag or purse in the backseat. While driving, those same cameras monitor the driver's attention level to encourage a drowsy driver to take a break or nudge a distracted driver's eyes back to the road.
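NVIDIA doesn't describe how attention level is scored, but a common approach in driver-monitoring research is a PERCLOS-style metric: the fraction of recent frames in which the driver's eyes are closed. The sketch below assumes a per-frame "eyes closed" signal from some cabin-camera model (not shown), and its window length and threshold are illustrative values, not NVIDIA parameters.

```python
from collections import deque


class DrowsinessMonitor:
    """Sliding-window eye-closure ratio (a PERCLOS-style metric).

    Hypothetical sketch: each frame, a cabin-camera model (not shown)
    reports whether the driver's eyes are closed. When the fraction of
    closed-eye frames in the window exceeds a threshold, suggest a break.
    """

    def __init__(self, window_frames=30, threshold=0.4):
        self.window = deque(maxlen=window_frames)  # oldest frames drop out
        self.threshold = threshold                  # illustrative value

    def update(self, eyes_closed: bool) -> bool:
        """Add one frame; return True when a take-a-break alert should fire."""
        self.window.append(eyes_closed)
        if len(self.window) < self.window.maxlen:
            return False  # not enough history yet
        closed_ratio = sum(self.window) / len(self.window)
        return closed_ratio > self.threshold


monitor = DrowsinessMonitor(window_frames=10, threshold=0.4)
for closed in [False] * 6 + [True] * 5:
    alert = monitor.update(closed)
print(alert)  # True: 5 of the last 10 frames had eyes closed
```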

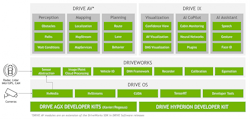

Drive Concierge software runs on top of Drive Orin and the NVIDIA Drive SDK. The SDK includes the DriveWorks compute-graph middleware framework and the Drive OS safe operating system, which together let a wide range of DNNs run simultaneously with improved runtime latency.
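The general idea of a compute graph is that each processing node declares which nodes it depends on, so a scheduler can run independent nodes (for example, several DNNs consuming the same camera frames) concurrently. The sketch below uses Python's standard `graphlib` to group nodes into stages; it is not the DriveWorks API, and every node name is hypothetical.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical perception/planning graph: node -> set of upstream nodes.
# Node names are illustrative, not DriveWorks identifiers.
GRAPH = {
    "camera_preprocess": set(),
    "ultrasonic_preprocess": set(),
    "evidence_grid_dnn": {"camera_preprocess", "ultrasonic_preprocess"},
    "parknet_dnn": {"camera_preprocess"},
    "driver_monitor_dnn": {"camera_preprocess"},
    "parking_spot_fusion": {"evidence_grid_dnn", "parknet_dnn"},
    "planner": {"parking_spot_fusion"},
}


def schedule_stages(graph):
    """Group nodes into stages; nodes in the same stage have no
    dependencies on each other and could run concurrently."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)  # mark the whole stage finished
    return stages


for i, stage in enumerate(schedule_stages(GRAPH)):
    print(i, stage)
```

In this toy graph the three DNNs land in the same stage because each depends only on preprocessing, which is the property a real runtime would exploit to run them in parallel.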

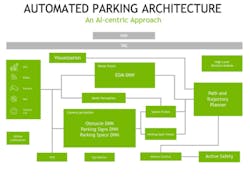

Drive Concierge fuses data from ultrasonic sensors and fish-eye cameras. Its Evidence Grid Map DNN uses this sensor data to generate a real-time dense grid map of the vehicle's immediate surroundings.

This dense fusion determines whether the space surrounding the vehicle is free and how likely any given space is to be occupied by a dynamic or stationary obstacle. Next, the ParkNet DNN fuses images from multiple cameras to produce a list of candidate parking spaces. Finally, parking spot perception fuses data from multiple cameras to associate parking spaces with parking signs and determine which space to use.
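NVIDIA's Evidence Grid Map is a DNN, and its internals aren't described here. To show what a dense grid of occupancy evidence represents, the sketch below swaps in the classic (non-learned) log-odds occupancy grid: each cell accumulates evidence from independent sensor readings, and the result is a probability that the cell is occupied. The class name, grid size, and "free" threshold are all illustrative assumptions, and the sketch doesn't separate dynamic from stationary obstacles.

```python
import math


def logit(p):
    """Log-odds of probability p, for 0 < p < 1."""
    return math.log(p / (1.0 - p))


def probability(log_odds):
    """Inverse of logit."""
    return 1.0 / (1.0 + math.exp(-log_odds))


class EvidenceGrid:
    """Classic log-odds occupancy grid (a stand-in, not NVIDIA's DNN).

    Each cell stores the log-odds that it is occupied. A 0.5 prior maps
    to log-odds 0.0, so an untouched cell means "unknown".
    """

    def __init__(self, width, height):
        self.cells = [[0.0] * width for _ in range(height)]

    def update(self, row, col, p_occupied):
        """Fuse one sensor's estimate for a cell, assuming independent
        evidence and a 0.5 prior (so the prior term drops out)."""
        self.cells[row][col] += logit(p_occupied)

    def occupancy(self, row, col):
        return probability(self.cells[row][col])

    def is_free(self, row, col, max_p=0.2):
        return self.occupancy(row, col) < max_p


grid = EvidenceGrid(4, 4)
grid.update(1, 2, 0.7)  # e.g., camera-derived evidence
grid.update(1, 2, 0.8)  # e.g., ultrasonic evidence for the same cell
print(round(grid.occupancy(1, 2), 3))  # 0.903
```

Working in log-odds turns fusion into addition, which is why this representation is a common baseline for dense occupancy mapping.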

In addition to dense perception, the parking function includes sparse perception, which detects far-range objects using both camera and radar data.

The vehicle's path and trajectory planner module then uses this data to plan parking maneuvers that avoid collisions with both nearby objects and higher-speed far-range obstacles.
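One basic check a planner like this must perform is whether a candidate trajectory comes too close to any obstacle over time, both stationary nearby objects and moving far-range ones. The sketch below is a simplified, hypothetical version: obstacles are circles predicted forward with a constant-velocity model, and the vehicle radius and safety margin are illustrative values, not NVIDIA parameters.

```python
import math
from dataclasses import dataclass


@dataclass
class Obstacle:
    x: float             # position at t = 0 (m)
    y: float
    vx: float = 0.0      # velocity (m/s); 0 for stationary objects
    vy: float = 0.0
    radius: float = 0.5  # footprint radius (m)


def first_conflict(trajectory, obstacles, vehicle_radius=1.2, margin=0.3):
    """Return the first (t, obstacle_index) where the path comes too close,
    or None if the trajectory is clear.

    trajectory: list of (t, x, y) waypoints in seconds and meters.
    Obstacles are predicted forward with a constant-velocity model.
    """
    for t, x, y in trajectory:
        for i, ob in enumerate(obstacles):
            ox = ob.x + ob.vx * t
            oy = ob.y + ob.vy * t
            if math.hypot(x - ox, y - oy) < vehicle_radius + ob.radius + margin:
                return t, i
    return None


# Ego vehicle creeps forward along x at 1 m/s.
path = [(t, 1.0 * t, 0.0) for t in range(6)]
obstacles = [
    Obstacle(0.0, 3.0, radius=0.2),              # nearby stationary pole
    Obstacle(12.0, 0.0, vx=-1.5, radius=1.0),    # oncoming car, far range
]
print(first_conflict(path, obstacles))  # (4, 1): conflict with the car at t = 4 s
```

A real planner would then replan or slow down rather than just report the conflict; this sketch only shows the collision test itself.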

Maps that are accurate to within a few meters are good enough when providing turn-by-turn directions for humans. Autonomous vehicles, however, require much greater precision. They need centimeter-level accuracy for localization, the ability of an AV to determine its own position in the world.

To that end, this past August, NVIDIA completed its acquisition of DeepMap, a startup dedicated to building high-definition maps for autonomous vehicles.

Combining NVIDIA Drive and DeepMap technology yields a high-definition mapping solution that enables crowd-sourced Drive Mapping, with both camera and radar localization layers in every region mapped for AI-assisted driving. The radar layer provides redundancy for localizing and driving in poor weather and lighting conditions, where cameras may be blinded.
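The article doesn't say how Drive Mapping combines its camera and radar localization layers. To illustrate why a second layer adds redundancy, the sketch below uses a generic technique, inverse-variance weighting of independent position estimates: when both sources are available, the fused estimate is tighter than either one, and when the camera drops out, the radar estimate alone still yields a position. The function name and all numbers are hypothetical.

```python
def fuse_position(estimates):
    """Inverse-variance weighted fusion of independent 1-D position estimates.

    estimates: list of (position_m, std_dev_m) pairs; entries that are None
    (e.g., a camera layer blinded by glare or fog) are skipped.
    Returns (fused_position_m, fused_std_dev_m).
    """
    valid = [e for e in estimates if e is not None]
    if not valid:
        raise ValueError("no localization source available")
    weights = [1.0 / (sd * sd) for _, sd in valid]  # more precise -> more weight
    total = sum(weights)
    position = sum(w * p for w, (p, _) in zip(weights, valid)) / total
    return position, (1.0 / total) ** 0.5


camera = (10.00, 0.05)  # hypothetical lateral position and std dev (m)
radar = (10.10, 0.10)
print(fuse_position([camera, radar]))  # roughly 10.02 m, std ~0.045 m
print(fuse_position([None, radar]))    # camera blinded: radar-only estimate
```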