Occupancy Status Technology: Is It the Future of Vehicle Safety?

What you’ll learn:

- Why occupancy status technology is critical to the future of vehicle safety.

- How machine-learning and neural-processing advances in computer vision are raising the quality and performance of OS to new levels.

- How a single-camera solution, running on custom convolutional neural networks and an RGB-IR/IR sensor, enables simultaneous driver and passenger sensing.

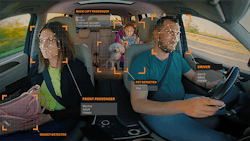

Occupancy-status (OS) technology is the future of vehicle safety systems. OS is already part of many commercial fleets, and using it to detect passengers such as children is a logical next step into a new frontier.

In fact, it’s already gaining regulatory traction and industry-wide adoption. Euro NCAP (New Car Assessment Programme) will incentivize automakers that offer the system in 2022, and members of the Alliance of Automobile Manufacturers and the Association of Global Automakers are willing to make occupancy monitoring a standard feature by 2025.

What’s driving this change? Why is occupant status so critical to the future of mobility? The simple answer is the emergence of autonomous vehicles and increasing automation in non-autonomous vehicles. Detection technology like OS supports both by identifying everything from occupant behavior and state to emergencies, attentiveness, and more.

Pause here to reflect on your first car’s advanced tech, and the pencil gauge you kept in the glovebox for tire air pressure.

The point is that technology helps make vehicle travel safer and will continue to evolve with changing needs. As such, the application of OS, while far removed from that old tire air-pressure gauge, represents a future where passenger safety—including pets—must account for more automated, as well as self-driven and autonomous, experiences. Xperi’s DTS AutoSense was developed and designed to do just this, for the following reasons:

- Safety during changing environments: OS technology is needed to handle a variety of changes at any time—passenger control, autonomous environment, partial autonomous, emergency passenger control, and so on. To that end, it should provide an overview of the full cabin interior with the ability to analyze with frame accuracy.

- Occupant positioning: While we can acknowledge that passengers should sit and behave in a safe manner, they often don’t, especially children. OS technology can analyze and issue a warning, and airbags can be deployed based on each passenger’s pose and exposure to an accident. This technology, which can deploy the airbags in a controlled manner aligned with passenger ID, also provides customized traveling experiences (load-specific setup, temperature, lighting, sound, etc.).

- Occupancy behavior: In a more autonomous future, safety regulation organizations will need to understand far more than simple seat occupancy. By developing a rear-view mirror camera stream, analyzed by various neural-network-based algorithms, we have enabled a holistic in-cabin sensing system that allows for more behavioral context that can be scored by any regulatory institute.

- Occupancy experience: It’s not just about safety. OS monitoring will enable a better journey experience through the personalization of music, lighting schemes, seat adjustments, and more—all based on passenger ID/location/behavior and activity. Imagine having a playlist selected for you based on your mood, as detected through activity and analysis.

Accomplishing this was a significant challenge. Industry sentiment was, at the time, leaning toward the impossibility of performing both driver and passenger simultaneous sensing from a single camera. The biggest identified problem was the rearview mirror angle: Mounted in this position, the camera wasn’t facing the driver frontally, which directly affected the efficacy of the driver sensing technology.

How the Technology was Developed

The core technology behind the DTS AutoSense Occupancy Monitoring System (OMS) leverages machine-learning and neural-processing advances in computer vision, raising quality and performance to new levels. With careful use of data, KPIs for detection, classification, and recognition reach the upper-90% range.

The availability of relevant data with high-quality annotations is key to performance, which is why DTS invested heavily in all aspects of data infrastructure, from acquisition and generation, validation, evaluation, storage, retrieval, and compute power to a skilled workforce dedicated to using and managing it. With the stream of data secured, DTS AutoSense adopts AI advances as they are prototyped to keep development moving forward.

The result? Our OS solution is fully neural, based on custom convolutional neural networks (CNNs) that combine cascading detectors for body, face, and generic objects to generate in-cabin context and act on it. We also deploy a complex lens distortion correction process, followed by a customized image-processing sequence with an in-cabin 3D space positioning of occupants.

The solution is camera agnostic, so we have the capability of correcting the feed of any camera and lens system in any existing in-cabin space. The solution runs on an RGB-IR (red-green-blue infrared)/IR sensor, be it on a single RGB stream, a cumulated RGB-IR, or only on a pure IR one.
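To make the lens-correction step concrete, here is a minimal sketch of one common approach: a two-coefficient radial distortion model inverted by fixed-point iteration. This is an illustrative assumption, not the DTS AutoSense process, which the article describes only as "complex." The function names and the coefficients `k1` and `k2` are hypothetical, and points are assumed to be in normalized image coordinates.

```python
# Illustrative sketch only: a simple radial lens-distortion model.
# Function names and the coefficients k1, k2 are hypothetical.

def distort(x, y, k1, k2):
    """Apply radial distortion to an undistorted normalized point."""
    r2 = x * x + y * y
    factor = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * factor, y * factor


def undistort(xd, yd, k1, k2, iterations=20):
    """Invert the radial model by fixed-point iteration.

    Starts from the distorted point and repeatedly divides by the
    distortion factor evaluated at the current estimate. Converges
    for moderate distortion; strong fisheye lenses need other models.
    """
    xu, yu = xd, yd
    for _ in range(iterations):
        r2 = xu * xu + yu * yu
        factor = 1.0 + k1 * r2 + k2 * r2 * r2
        xu, yu = xd / factor, yd / factor
    return xu, yu


if __name__ == "__main__":
    # Round trip: distort a point, then recover it.
    xd, yd = distort(0.3, 0.2, k1=-0.2, k2=0.05)
    print(undistort(xd, yd, k1=-0.2, k2=0.05))
```

A camera-agnostic system would need a separate set of calibrated coefficients, or a different model entirely, for each camera and lens combination.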

The OS solution runs in real-time in any vehicle cabin and can offer feedback and/or directly report on passenger status. If a seat in the vehicle is occupied, this position will be detected, recorded, reported, and actualized in real-time.

Development Challenges

Developing nascent technology and creating novel, undefined use cases presented our team with some major challenges. As we built one of the core technologies required to enable a key feature, we encountered unforeseen side effects. Each of these had to be addressed, solved, cataloged, and classified to advance.

Ultimately, it demanded a new level of mastery, including the development of new acquisition systems, data marking, additional notation requirements and processes, and a complete refining of our neural training approach. Here are a few of the challenges and how we solved them:

Keeping driver monitoring system (DMS) accuracy as high as existing solutions

Some might assume that a single-camera solution (running DMS and OMS from a rear mirror) versus only a frontal camera could affect accuracy. However, a much broader context is available from the rear mirror, meaning the same or even higher accuracy is achieved by analyzing the extended landscape of the driver, i.e., body pose analysis, behavior, activity detection, etc.

Backseat obstruction rate

Another big challenge involved handling the obstruction rate of backseat passengers (the front seat always occludes the rear subjects to some degree). We overcame these issues by applying a temporal analysis of the activities and behaviors of the passengers. This analysis relies on an algorithm that tracks activities (sleeping, talking, using gadgets, body movement, pose, appearing/disappearing objects, etc.) over a period of time, for each occupant independently, and then saves a history of it.

Based on this information, we calculate probabilities for the objects (occluded or not) and the resulting specific behaviors (observable or not). Valid decisions/warnings are issued only after analyzing the history of these activities and behaviors as a whole.
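The idea of deciding from a history rather than a single frame can be sketched as follows. This is a simplified illustration under stated assumptions, not the production algorithm: the `OccupantHistory` class, the window length, and the thresholds are all hypothetical, and the "probability" here is just the share of visible frames showing a given activity.

```python
from collections import deque


class OccupantHistory:
    """Hypothetical per-occupant sliding window of activity observations."""

    def __init__(self, window=30):
        # Each entry is (activity, visible); old frames drop off automatically.
        self.frames = deque(maxlen=window)

    def add(self, activity, visible):
        """Record one frame; activity may be None when the occupant is occluded."""
        self.frames.append((activity, visible))

    def probability(self, activity):
        """Share of visible frames in the window that show this activity."""
        seen = [a for a, visible in self.frames if visible]
        if not seen:
            return 0.0
        return sum(1 for a in seen if a == activity) / len(seen)

    def should_warn(self, activity, threshold=0.7, min_visible=10):
        """Warn only with enough visible evidence across the history."""
        visible_count = sum(1 for _, visible in self.frames if visible)
        return visible_count >= min_visible and self.probability(activity) >= threshold


if __name__ == "__main__":
    rear_left = OccupantHistory(window=30)
    for _ in range(12):
        rear_left.add("sleeping", visible=True)
    for _ in range(3):
        rear_left.add("talking", visible=True)
    for _ in range(5):
        rear_left.add(None, visible=False)  # occluded by the front seat
    print(rear_left.probability("sleeping"), rear_left.should_warn("sleeping"))
```

Keeping one history object per occupant mirrors the independent, per-passenger tracking described above, and the `min_visible` guard keeps a brief glimpse of a heavily occluded passenger from triggering a warning.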

Detection of occupants

Detecting occupants outside the in-cabin space area presented a unique challenge. Our OMS enabled in-cabin occupancy detection solely with computer vision and RGB-IR or IR sensors. However, we needed to go beyond the limitations of current in-market seat pressure sensors so that the system could always detect the number of people, and their positions, in the car.

A side effect of that detection technology was that it detected people outside, as well as inside, the car, impacting the solution’s effectiveness. So, we came up with specific geometrical calculation techniques to detect only what was in the cabin.
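One simple way to picture such a geometric filter is to keep only detections whose estimated 3D position falls inside a box that approximates the cabin. The sketch below assumes this, which the article does not confirm: the `CabinBox` type, the `filter_in_cabin` function, the coordinate frame (meters, vehicle-centered), and the dimensions are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CabinBox:
    """Hypothetical axis-aligned box approximating the cabin interior (meters)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    z_min: float
    z_max: float

    def contains(self, point):
        """Return True if the (x, y, z) point lies inside the box."""
        x, y, z = point
        return (self.x_min <= x <= self.x_max
                and self.y_min <= y <= self.y_max
                and self.z_min <= z <= self.z_max)


def filter_in_cabin(detections, cabin):
    """Keep only detections whose estimated position is inside the cabin."""
    return [d for d in detections if cabin.contains(d["position"])]


if __name__ == "__main__":
    # Assumed dimensions, for illustration only.
    cabin = CabinBox(-0.8, 0.8, 0.0, 1.3, 0.3, 2.6)
    detections = [
        {"label": "driver", "position": (-0.4, 0.6, 1.0)},
        {"label": "pedestrian", "position": (2.5, 0.5, 1.0)},  # seen through a window
    ]
    print([d["label"] for d in filter_in_cabin(detections, cabin)])
```

A real cabin is not a box, so a production system would likely need a tighter volume and a reliable depth estimate for each detection.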

Overall, as we navigated through these challenges, we developed specific data-acquisition scenarios and perfected a custom infrastructure that was able to design solutions for issues particular to this technology.

How Testing was Conducted

The complexity of these challenges required rigorous analysis and testing, including evaluation contexts, specific scenarios, poses, behaviors, and occlusions. We addressed a variety of factors, from cabin size and form, to day vs. night light, to high occlusion rates. We then collected real traveling scenarios from different vehicles with a variety of passengers (up to five), and at multiple traveling lengths and landscapes.

Sensor and image quality were critically important. We had to tackle problems caused mainly by noise, overexposure (extreme sunlight), underexposure (low-light conditions), issues caused by the mixed IR and visible domains, fast ambient light transitions (entering a tunnel), shadows in-cabin and on passengers, as well as varying ambient lighting, color, and intensity.

During these different lighting scenarios, passengers were engaged in a variety of activities and poses, which were validated/annotated by specific hardware systems and further analyzed by a skilled data team.

Summary

Overall, it took the team years of ideation, development, testing, and more testing to create a truly accurate OS system for passenger vehicles. We’re proud of the work we’ve done and excited to see how it’s applied in the future and how it evolves as vehicle users’ needs multiply and change.

Clearly, the number of in-cabin features provided by car makers will increase, and, as such, they will need a corresponding number of safety elements and use cases, which will be critical. DTS AutoSense is designed, developed, tested, and ready for the global market challenge.