AMD Equips Automotive-Grade SoC with Advanced AI Engine

Check out Electronic Design's coverage of CES 2024.

AMD ups the ante in the autonomous-driving market with its most advanced automotive-grade SoC yet. At CES, the Santa Clara, Calif.-based vendor launched its latest Versal AI Edge “XA” family of SoCs, which bring up to 171 TOPS of AI performance, plus high-bandwidth programmable logic for signal processing, into vehicles.

According to the company, the chips can safely handle everything from fusing data from LiDAR, radar, and front-facing and surround-view cameras to the heavy-duty computations used to map the surroundings and detect objects and other obstacles on the road ahead. So, while the SoCs can power advanced driver-assistance features such as lane-change assist, AMD said they bring more than enough performance to the table to enable up to “Level 4” autonomous driving.

While it remains a relatively small player in the market, AMD is already established in the auto sector with its Xilinx-designed Artix FPGAs and Zynq UltraScale SoCs (Fig. 1). The U.S. semiconductor giant also sells its embedded processors to run dashboard displays and other “infotainment” systems, most notably for Tesla.

But now it’s endeavoring to take a bigger bite out of the autonomous driving market that has been dominated by NVIDIA (with its current “Orin” SoC and next-generation “Thor” platform), Mobileye (with its EyeQ processors and upcoming EyeQ Ultra SoC), and Qualcomm (with its Snapdragon Ride series, including the “Flex” SoC due out early this year). Though bound to face an uphill battle, AMD is trying to become more competitive with the Versal XA SoC.

Versal XA is a series of heterogeneous SoCs, built on a 7-nm process, that combine general-purpose CPU cores, DSP engines, and programmable logic with advanced AI compute engines and other hardened IP.

While it’s based on the same blueprint as the other members of Xilinx’s Versal AI family, AMD said the Versal XA is purposely designed to tolerate the severe temperatures, harsh vibrations, and other rigors of the road.

Inside AMD’s New Automotive-Grade AI SoC

The hardware under the hood of autonomous cars is becoming as important as the software that runs on top of it. Heterogeneous SoCs with potent and power-efficient AI computing like AMD’s Versal XA are playing a key role.

The SoC integrates general-purpose Arm CPU cores: a pair of high-performance Cortex-A72s and a pair of real-time Cortex-R5 cores. These cores are supplemented by the same type of programmable logic at the heart of Xilinx’s FPGAs, giving the chip a degree of flexibility that’s generally lacking in rival processors.

The chips have 20,000 to 521,000 LUTs that can be used to program the digital logic inside the SoC. They’re also equipped with up to 1,312 DSP engines for signal processing and sensor fusion.

But at the heart of the automotive-grade SoC is the AI Engine. At the high end, the chips incorporate more than 300 of these AI processor tiles. Combined with the programmable logic and other parts of the SoC, they deliver 5 to 171 trillion operations per second (TOPS) for AI workloads, depending on the device. AMD said that’s more than enough performance to run AI inference on large volumes of sensor data and build accurate, real-time views of the road ahead (Fig. 2).

By processing these vast amounts of data in real time, the Versal XA can detect obstacles in the surrounding area within a fraction of a second, which can be the difference between safe driving and a collision.

Featuring a workload-adaptable memory hierarchy, the Xilinx-designed AI Engines can run a wide range of workloads, from pinpointing the car’s location in space (“localization”) to scoping out the surrounding area (“perception”). As the company pointed out, they can also run “pattern recognition” on signs and other objects and identify obstacles in a scene so that the system can plot the safest route for the vehicle. By combining the AI Engine with the programmable logic, the SoC can run computer-vision and AI workloads based on convolutional neural networks (CNNs).

Moreover, the programmable logic can be used separately to pre-process the data traveling through Ethernet PHYs from the car’s cameras, radar, and other sensors, while the AI engine is used to identify and classify obstacles in a scene.
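The division of labor described above, with programmable logic cleaning up raw sensor data and the AI Engine classifying it, can be modeled as a two-stage pipeline. The Python sketch below is a conceptual illustration only, not AMD's toolflow or any Versal API: `Frame`, `preprocess`, `classify`, and `run_pipeline` are hypothetical names, the "pre-processing" is plain min-max normalization, and the "classifier" is a toy threshold rule standing in for a CNN.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Frame:
    sensor: str            # hypothetical label, e.g. "camera" or "radar"
    samples: List[float]   # raw readings from that sensor


def preprocess(frame: Frame) -> Frame:
    """Stand-in for the programmable-logic stage: min-max normalize samples to [0, 1]."""
    if not frame.samples:
        return frame
    lo, hi = min(frame.samples), max(frame.samples)
    span = (hi - lo) or 1.0  # avoid dividing by zero on a flat signal
    return Frame(frame.sensor, [(s - lo) / span for s in frame.samples])


def classify(frame: Frame, threshold: float = 0.8, min_fraction: float = 0.25) -> str:
    """Stand-in for the AI Engine stage: a toy threshold rule, not a CNN."""
    if not frame.samples:
        return "clear"
    strong = sum(1 for s in frame.samples if s > threshold)
    return "obstacle" if strong / len(frame.samples) > min_fraction else "clear"


def run_pipeline(frames: List[Frame]) -> List[Tuple[str, str]]:
    """Pre-process every frame, then classify it, returning (sensor, label) pairs."""
    return [(f.sensor, classify(preprocess(f))) for f in frames]


if __name__ == "__main__":
    frames = [Frame("radar", [0.0, 9.0, 10.0, 10.0]), Frame("camera", [5.0, 5.0, 5.0, 5.0])]
    print(run_pipeline(frames))  # [('radar', 'obstacle'), ('camera', 'clear')]
```

Keeping the two stages as separate functions mirrors the hardware split: the deterministic, per-sample clean-up maps naturally onto programmable logic, while the decision step is what a trained network on the AI Engine would replace.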

Binding all of the building blocks together in the SoC is a high-bandwidth network-on-chip (NoC). The chip also features hardened IP for connectivity, including:

- MIPI PHY and other I/O to interact with cameras, radar, or other sensors.

- PCIe/CCIX so the chip can serve as an accelerator for a host CPU or other high-end central processor.

- DDR4/LPDDR4 interfaces for attaching external memory.

Security is also built in.

One of the central requirements for AI chips used in autonomous driving is power efficiency. The new chips consume relatively little power for the performance they deliver, ranging from less than 10 W up to 75 W.

AMD said the programmable SoC is automotive-grade, which means it has been painstakingly “qualified” against a long list of industry standards to ensure it’s durable and reliable enough for use in cars.

A New SoC for a New Hardware Architecture

As software takes over more responsibilities in cars, the underlying hardware architecture is evolving, too.

Today, more than 100 electronic control units (ECUs) are typically distributed throughout the average vehicle, and each box generally has only enough computing power to handle a single task.

However, automakers are moving to “domain-based” architectures that use high-performance SoCs to consolidate these single-use modules, and the MCUs inside them, into larger “domain controllers” that can safely run several distinct functions at once. Such an architecture reduces not only the amount of hardware in the vehicle, but also the bulky, heavy, and costly wiring that connects it.

The alternative is a “zonal” architecture, in which most of the vehicle’s computing power is located in a central supercomputer. The high-end CPU or SoC at its core links to the sensors and other systems spread around the car through gateways, each containing a PHY to communicate over Ethernet. This approach also reduces the cost and weight of wiring by keeping the gateways close to the devices they’re connected to.
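To see why zonal gateways cut wiring, it helps to count cable runs. The Python sketch below is a deliberately simplified model, not a vehicle-wiring tool: the sensor list and zone names are hypothetical, the baseline assumes every sensor is cabled directly to the central computer, and it counts links rather than modeling cable length, weight, or bandwidth.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical sensor layout: (sensor name, physical zone of the vehicle)
SENSORS: List[Tuple[str, str]] = [
    ("front_camera", "front"), ("front_radar", "front"), ("lidar", "front"),
    ("left_camera", "left"), ("left_radar", "left"),
    ("right_camera", "right"), ("right_radar", "right"),
    ("rear_camera", "rear"), ("rear_radar", "rear"),
]


def direct_wiring(sensors: List[Tuple[str, str]]) -> Dict[str, int]:
    """Baseline: every sensor gets its own long cable run to the central computer."""
    return {"runs_to_central": len(sensors), "local_runs": 0}


def zonal_wiring(sensors: List[Tuple[str, str]]) -> Dict[str, int]:
    """Sensors attach to the gateway in their zone; each gateway has one
    Ethernet link back to the central computer."""
    zones: Dict[str, List[str]] = defaultdict(list)
    for name, zone in sensors:
        zones[zone].append(name)
    return {"runs_to_central": len(zones), "local_runs": len(sensors)}


if __name__ == "__main__":
    print("direct:", direct_wiring(SENSORS))
    print("zonal: ", zonal_wiring(SENSORS))
```

With the nine hypothetical sensors above, direct wiring needs nine long runs to the central computer, while the zonal layout needs four backbone links plus nine short local connections. Shortening most of the cabling in this way is where the savings in cost and weight come from.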

AMD plans to roll out several variants of the Versal XA SoC, from entry-level to high-end offerings, giving customers the flexibility to choose the one best suited to the car’s underlying architecture.

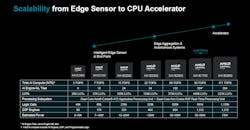

The Versal XA can be used in a fully distributed architecture, where a single chip is attached to a camera, radar, or other sensor (or a group of sensors) to pre-process the data before passing it to a central control unit.

Another possibility is to place a high-performance variant of the SoC inside the domain controller in charge of the car’s autonomous-driving modes. There, it can safely handle most of the sensor processing from a central location (Fig. 3).

The additional computing power doesn’t come at the cost of safety and reliability. AMD said the Versal XA is rated for the ISO 26262 functional-safety standard as well as other safety certifications (specifically, IEC 61508 and ISO 13849).

The first members of the new automotive-grade family will be released in early 2024.