Cars Will Enlist Thermal Infrared and AI to Shield Pedestrians

What you’ll learn:

- Why governments, regulators, insurers, and industry associations are recommending that all new cars include automatic emergency braking (AEB) ADAS technology that can see in total darkness.

- How the new LWIR thermal camera technology works and what advantages it has over other automotive safety systems based on LiDAR, radar, VGA cameras, etc.

- How AI can identify what kind of warm object is in the road ahead and its distance.

Deployment of new technology often can’t occur until public outcry and government regulation converge to create a “time has come” moment. We’re now heading into that juncture for nighttime pedestrian safety—and the automotive industry is just starting to grapple with the ramifications.

We’ve seen this phenomenon before. Over the last 100 years, passenger vehicles have been equipped with safety systems only when the underlying technologies have proven effective and reliable, and the public has insisted on benefiting from their capabilities. Soon, two new technologies will appear in cars to meet this latest “time has come” moment, which is defined by a steadily worsening problem: accidents involving pedestrians.

The facts are stark (Fig. 1):

- Pedestrian deaths from vehicle crashes are at a 40-year high.

- Over 75% of these deaths occur at night.

- Existing automatic braking systems work poorly in darkness.

What Is the NHTSA’s PAEB Mandate?

After examining the latest crash and test data, the U.S. National Highway Traffic Safety Administration (NHTSA) proposed a mandate, expected to be finalized shortly, to require ALL new cars to include pedestrian automatic emergency braking (PAEB) systems that see and classify pedestrians in the roadway day or night, then safely stop. This new requirement to protect pedestrians will likely be mandatory for vehicle speeds up to 37 mph in the daytime, in total darkness, and in challenging visual environments, day or night.

Ever since the introduction of the automobile, various mandates have been established and implemented to improve safety. Over the years, these have increasingly involved both manual and automatic features. For example, seat belts are manual while airbags are automatic. Collision warnings advise the driver, while anti-collision braking, lane-keeping technology, and backup protection automatically engage.

This new requirement for PAEB systems that operate day and night differs from earlier mandates because it’s intended to protect those around the cars rather than those inside. Many existing auto safety features help pedestrians by improving control of the vehicles, and some, like prohibiting impaling hood ornaments, reduce pedestrian risk. The new mandate, though, is the first major requirement to add equipment to cars solely to reduce pedestrian injury and death.

For several years, automatic emergency braking systems have been available on some models because the New Car Assessment Program (NCAP), which tests and rates cars for safety, has included automatic braking in its scoring system. However, until now, this function has been optional, meaning that the equipment needed to detect potential collisions and determine appropriate action was also optional. With the new mandate, this function, along with its associated equipment, will no longer be optional.

Sensors and Software Behind PAEB Tech

This mandate is precisely what’s needed to jumpstart implementation of effective PAEB systems. To begin, PAEB systems must accept images from sensors (currently radar and cameras), align their data in time and space, then classify significant objects and determine their positions relative to the vehicle.
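The time half of that alignment step can be pictured with a small sketch. It pairs each camera frame with the radar sample closest in time and drops pairs whose timestamps differ too much. The function names and the 20-ms skew limit are hypothetical choices for illustration, and spatial alignment (mapping both sensors into one vehicle coordinate frame) is not covered here.

```python
from bisect import bisect_left

def nearest_index(timestamps, t):
    """Return the index of the value in sorted `timestamps` closest to t."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Choose whichever neighbour is closer in time.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1

def pair_frames(camera_ts, radar_ts, max_skew_s=0.02):
    """Pair each camera frame with the nearest radar sample (times in seconds).

    max_skew_s is an assumed tolerance; pairs further apart are discarded.
    """
    if not radar_ts:
        return []
    pairs = []
    for cam_idx, t in enumerate(camera_ts):
        radar_idx = nearest_index(radar_ts, t)
        if abs(radar_ts[radar_idx] - t) <= max_skew_s:
            pairs.append((cam_idx, radar_idx))
    return pairs

if __name__ == "__main__":
    camera = [0.000, 0.033, 0.066, 0.100]   # made-up capture times
    radar = [0.005, 0.055, 0.105]
    print(pair_frames(camera, radar))
```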

The information derived from this analysis can be displayed as a perceived hazard alert for the driver and sent to another program that figures out what the car should do to avoid a collision. The result is a series of commands sent to the brake actuators to stop the car, or to the steering controls to avoid the hazard.
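As a rough illustration of that decision step, the sketch below turns one classified track into a warn-or-brake choice using stopping distance and time to collision. The `Track` fields, the 7 m/s² deceleration, the 0.3-s system latency, the 2-m margin, and the 3-s warning threshold are all illustrative assumptions, not values from NHTSA or any production system.

```python
from dataclasses import dataclass

MPH_TO_MPS = 0.44704  # exact conversion factor

@dataclass
class Track:
    label: str          # class from the perception stage, e.g. "pedestrian"
    range_m: float      # distance ahead of the vehicle, in metres
    closing_mps: float  # closing speed in m/s (positive means approaching)

def time_to_collision_s(track: Track) -> float:
    """Seconds until impact if neither party changes speed."""
    if track.closing_mps <= 0:
        return float("inf")
    return track.range_m / track.closing_mps

def stopping_distance_m(speed_mps, decel_mps2=7.0, latency_s=0.3):
    """Distance covered during system latency plus constant-deceleration braking."""
    return speed_mps * latency_s + speed_mps ** 2 / (2 * decel_mps2)

def decide(track: Track, speed_mps, margin_m=2.0, warn_ttc_s=3.0) -> str:
    """Return "brake", "warn", or "none" for a single track (pedestrians only)."""
    if track.label != "pedestrian" or track.closing_mps <= 0:
        return "none"
    if track.range_m <= stopping_distance_m(speed_mps) + margin_m:
        return "brake"
    if time_to_collision_s(track) <= warn_ttc_s:
        return "warn"
    return "none"

if __name__ == "__main__":
    v = 37 * MPH_TO_MPS
    for rng in (20.0, 40.0, 80.0):
        print(rng, decide(Track("pedestrian", rng, v), v))
```

At the proposed 37-mph ceiling (about 16.5 m/s), these assumed numbers give a stopping distance of roughly 24.5 m, so a pedestrian has to be detected and classified well beyond that range for braking to help.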

There are some software subtleties in this process, such as figuring out whether to brake for a pedestrian who is only walking in the direction of the road, or whether the system should respond like a Grand Prix driver or a grandparent. But at least the image-acquisition hardware seems to have been figured out—well, not quite.

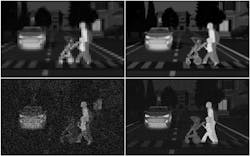

Crash tests performed by the Insurance Institute for Highway Safety (IIHS) last year clearly demonstrated that cameras and radar perform admirably in PAEB systems when the sun is up, but miserably when it’s not. Even in daytime, though, conditions such as fog, rain, snow, dust, smoke, or a setting sun in the background can hide pedestrians enough to render cameras useless (Fig. 2).

Clear, brightly lit nights can also be unsafe when chaotic urban traffic and pedestrian environments swamp existing PAEB systems, rendering them ineffective. In those situations, when camera images are compromised, radar alone can’t provide enough data to run a braking system (Fig. 3).

So, if the NHTSA mandates 24/7 PAEB systems, what can be done to comply in those challenging environments?

In preparing to respond to the “time has come” moment, the auto industry is critically examining the technologies it has used in the past, such as RGB visible light cameras, to make a strategic decision: Can we use what we have? Or do we need to search for a new solution that truly solves the problem?

Making the Move to LWIR Cameras

Fortunately, physics provides the answer: use the natural infrared radiation emitted by every object above absolute zero. Objects at everyday temperatures, including living bodies, emit most strongly in the 8- to 14-µm long-wave infrared (LWIR) band, where thermal cameras can easily see them.
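Wien’s displacement law makes the point concrete: the wavelength at which a blackbody emits most strongly is inversely proportional to its absolute temperature. The short sketch below applies it to a few temperatures. The temperatures themselves (including a skin temperature of about 33°C) are illustrative assumptions, and real surfaces are not perfect blackbodies.

```python
WIEN_B_UM_K = 2897.77  # Wien displacement constant in micrometre-kelvins

def peak_wavelength_um(temp_c: float) -> float:
    """Blackbody peak-emission wavelength (micrometres) at a Celsius temperature."""
    return WIEN_B_UM_K / (temp_c + 273.15)

if __name__ == "__main__":
    # Assumed example temperatures, not measurements from the article.
    examples = [
        ("exposed skin, about 33 C", 33.0),
        ("road surface at 20 C", 20.0),
        ("cold night air, -10 C", -10.0),
    ]
    for name, temp_c in examples:
        peak = peak_wavelength_um(temp_c)
        in_band = 8.0 <= peak <= 14.0
        print(f"{name}: peak near {peak:.2f} um, inside 8-14 um band: {in_band}")
```

All three examples peak between roughly 9.5 and 11 µm, comfortably inside the LWIR band, and none of them depends on headlights or ambient light.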

The first LWIR cameras were made almost 100 years ago, and today they’re available as handheld thermal leak monitors and imaging thermometers. Right now, they’re still expensive and lack resolution, but the volume inherent in automotive production is forecast to solve that problem and bring the costs into the hundreds of dollars versus $1,000 and more.

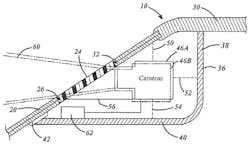

A thermal camera mounted in the vicinity of the rear-view mirror coupled with the capability of AI in the form of convolutional neural networks (CNNs) produces a system that can find warm objects, classify them, and then compute their distance from the vehicle—exactly the data needed to inform PAEB control software. Conveniently, the upcoming automobile computer architecture supports moving the image-processing software to a central processor, so that the camera only needs to supply digital image data (Fig. 4).

LWIR Sensor Demands

Beyond driving costs down, “next-generation” thermal imaging faces another critical challenge before it can be widely deployed in automotive applications. The focal plane array of the LWIR image sensor must meet these stringent performance benchmarks:

- 1280 × 800 HD resolution: Puts more points on targets for wider coverage, longer-distance object classification, better ranging accuracy, and an improved ability to separate important objects from clutter.

- <50 mK thermal sensitivity: Resolves temperature differences as small as 0.05°C to provide better thermal object discrimination.

- Shutterless non-uniformity correction: Eliminates the mechanical shutter that older thermal cameras use for scene calibration, avoiding the unacceptable interruptions in image flow that the shutter causes.

- 14-bit digital output: Ideal for intelligent machine-vision operations like classification.

- <0.3 µW per pixel: For best ratio of power to camera image quality—15X better than today’s best thermal cameras.

- 60- to 120-Hz frame rate: Reduces motion blur in acquired images.

- Ability to leverage centralized ECU architecture: Enables low-cost cameras connected via various high-speed serial links for economic advantage.

All at a cost 100X lower than today’s top-of-the-line sensors.
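Two of these benchmarks lend themselves to quick back-of-the-envelope checks: the raw data rate a camera would send to a central ECU, and how many pixels land on a pedestrian at a given distance. The sketch below uses a simple pinhole model. The 30° horizontal field of view, the 0.5-m pedestrian width, and the 640-pixel comparison sensor are assumptions chosen for illustration; the article doesn’t specify optics.

```python
import math

def raw_data_rate_gbps(width_px, height_px, bits_per_pixel, frame_hz):
    """Uncompressed video data rate in gigabits per second."""
    return width_px * height_px * bits_per_pixel * frame_hz / 1e9

def pixels_on_target(sensor_width_px, hfov_deg, target_width_m, distance_m):
    """Horizontal pixels covering a target, using a pinhole camera model."""
    focal_px = (sensor_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)
    return focal_px * target_width_m / distance_m

if __name__ == "__main__":
    for hz in (60, 120):
        rate = raw_data_rate_gbps(1280, 800, 14, hz)
        print(f"{hz} Hz: {rate:.2f} Gbit/s uncompressed")

    # Assumed optics and target size (hypothetical values).
    for width_px in (640, 1280):
        px = pixels_on_target(width_px, hfov_deg=30.0,
                              target_width_m=0.5, distance_m=100.0)
        print(f"{width_px}-px-wide sensor: about {px:.1f} px across a pedestrian at 100 m")
```

Under these assumptions, an uncompressed 1280 × 800, 14-bit stream runs at about 0.86 Gbit/s at 60 Hz and 1.72 Gbit/s at 120 Hz, which is why high-speed serial links matter, and a pedestrian 100 m ahead spans roughly 12 pixels instead of 6.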

The Need for AI-Enabled HD Thermal Imaging

Integration into a vehicle’s perception framework is also critical. LWIR thermal images are inherently different from RGB images, so CNNs must be trained specifically on thermal imagery to classify accurately. Significantly, when the AI software is properly designed, it can provide range information from a single LWIR thermal camera, so a second LWIR camera isn’t needed to measure distance.
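The article doesn’t describe how the network derives range, and a trained CNN may draw on many cues. One classical geometric baseline, shown here only to illustrate why a single camera can carry range information, assumes a typical pedestrian height and inverts the pinhole projection of the detected bounding box. The function name, the 1.7-m assumed height, and the 2,400-pixel focal length are hypothetical.

```python
def estimate_range_m(bbox_height_px: float, focal_px: float,
                     assumed_height_m: float = 1.7) -> float:
    """Monocular range estimate from bounding-box height (pinhole model).

    range = focal length (pixels) * real height (m) / image height (pixels).
    assumed_height_m is a guess at the pedestrian's true height, so the
    result inherits whatever error that guess carries.
    """
    if bbox_height_px <= 0:
        raise ValueError("bounding-box height must be positive")
    return focal_px * assumed_height_m / bbox_height_px

if __name__ == "__main__":
    focal_px = 2400.0  # hypothetical lens and sensor combination
    for height_px in (40, 41, 80, 160):
        rng = estimate_range_m(height_px, focal_px)
        print(f"box {height_px} px tall: about {rng:.1f} m away")
```

With these numbers, a 40-pixel box maps to about 102 m and a 41-pixel box to about 99.5 m, so a one-pixel error shifts the estimate by roughly 2.5 m at that distance. That sensitivity is one reason more pixels on target improve ranging accuracy.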

Owl AI believes that to solve this challenge, we need a new class of thermal-sensing and machine-learning tools tuned specifically to address next-generation requirements for use in automotive nighttime and degraded visual environments. We refer to this new architecture as AI-enabled HD thermal imaging (Fig. 5).

Within five years, all new cars—not just autonomous vehicles—must be able to see at night to fully protect pedestrians. The new technologies enabling these automotive safety systems will be an essential component of next-gen ADAS solutions.

It’s fortunate that just as the public and regulatory agencies are beginning to emphasize the need to solve the pedestrian fatality problem, a technology has been demonstrated that promises a comprehensive solution. AI-enabled HD thermal imaging applied day or night, in all weather and in chaotic situations, can separate pedestrians from the clutter. Owl has begun to offer this unique capability to automakers as the company prepares to improve pedestrian safety.