
The prospect of autonomous vehicles can spark lively conversation well beyond the gearhead fraternity, who tend to cherish the idea of an engaged driving experience. In contrast, other vehicle users look forward to the day when they can travel from A to B without any human input, a prospect that promises greater safety and much more.

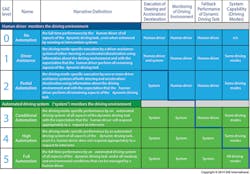

However, recent accidents involving semi-autonomous vehicles have brought to consumers' attention what those of us in the industry have known for some time: autonomous driving isn't as easy as some first thought. Major OEMs are backing off earlier predictions for the rollout of Level 3 and Level 4 vehicles, and the first high-end production vehicles at these levels are now expected around 2021. The table summarizes SAE International's definitions of the levels of driving automation.
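The level definitions can also be captured compactly in code. The sketch below encodes the six J3016 levels as a Python enum, with two helper predicates for the main dividing lines: who must monitor the road, and who serves as the fallback when the system cannot continue. The member names, helper functions, and one-line descriptions are our own simplified illustration, not text from the standard.

```python
from enum import IntEnum


class SaeLevel(IntEnum):
    """SAE J3016 levels of driving automation (member names are illustrative)."""

    NO_AUTOMATION = 0           # human performs the entire driving task
    DRIVER_ASSISTANCE = 1       # system sustains steering OR speed control
    PARTIAL_AUTOMATION = 2      # system sustains steering AND speed; human supervises
    CONDITIONAL_AUTOMATION = 3  # system drives; human must respond to takeover requests
    HIGH_AUTOMATION = 4         # system drives and handles fallback within its domain
    FULL_AUTOMATION = 5         # as Level 4, but with no operating-domain limits


def human_monitors_environment(level: SaeLevel) -> bool:
    """True at Levels 0-2, where the human driver must keep watching the road."""
    return level <= SaeLevel.PARTIAL_AUTOMATION


def human_is_fallback(level: SaeLevel) -> bool:
    """True at Levels 0-3, where a human is expected to take over if the system cannot."""
    return level <= SaeLevel.CONDITIONAL_AUTOMATION
```

For example, `human_monitors_environment(SaeLevel.PARTIAL_AUTOMATION)` is `True`, while for `SaeLevel.CONDITIONAL_AUTOMATION` it is `False`, which marks the Level 2/Level 3 boundary.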

The race to bring autonomous vehicles to market is heating up, and so is the cultural divide between Silicon Valley and the traditional automotive OEMs. Automotive OEMs are known for taking long, methodical approaches to solving problems, whereas Silicon Valley favors going fast, staying agile, and not missing a market window. Together, these two very different approaches can bring the best of both worlds to the design of autonomous vehicles.

A summary of the SAE International “Levels of Driving Automation for On-Road Vehicles,” as defined in its J3016 standard.

To date, this collaborative approach has provided evidence of success. However, as we enter the final stretch of producing these vehicles for real consumers—rather than for testing and research—will this marriage continue to hold together?

Add to this the still-emerging component- and system-level technologies, infrastructure roadblocks (~30% of U.S. roads are unpaved), government bureaucracy, security concerns, and the cost of making self-driving vehicles mainstream, and it quickly becomes clear that developing an intelligent, safe, and robust light vehicle is no small challenge.

Getting to Level 2

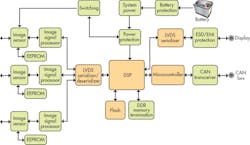

At ON Semiconductor, we’re working to address the component and system challenges as we collaborate directly with the OEMs and Tier 1s. For example, we’re actively working with automotive designers to help them understand today’s requirements for a Level 2 autonomous vehicle and implement systems such as a Surround View System (see figure).

However, enabling vehicles to navigate complex settings such as city centers, construction zones, snow, fog, heavy rain, and remote areas without human input requires them to "see" and "understand" situations more like humans do. That will take not only currently available, commercially viable, automotive-qualified products, but also technologies, products, and safety standards that aren't yet mainstream in the industry. And all of this must be implemented with ZERO accidents, 100% reliability, and continuous learning.

Much of this technology is currently in trials or low-volume production. Every major OEM is testing vehicles somewhere in the world, and a few high-end manufacturers already have viable "Level 2.5" vehicles on the road (an informal term, not an SAE level, generally used for Level 2 systems with some higher-level features). One such example is the new Mercedes E-Class, which offers numerous advanced semi-autonomous features.

Given these stringent requirements, new automotive-capable technologies must all work seamlessly with the vehicle's "deep learning" central safety processing unit to fully manage the driving experience. Examples include global- and rolling-shutter CMOS image sensors for advanced driver-assistance system (ADAS) applications (eyelid detection, lane departure, and sign and object detection), LiDAR (short-range object detection), and radar (long-range object detection).

Sensor fusion, in which the right combination of sensors is applied to the task at hand, lets the central processor complete that task safely rather than relying on a single sensor input. The complexity of semi-autonomous and autonomous driving will demand a wide variety of sensors to drive safely at Levels 3 through 5.
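As a minimal illustration of the idea, the sketch below fuses independent distance estimates of the same object from two sensors by inverse-variance weighting, the simplest static form of a Kalman-filter update. It is one textbook fusion technique chosen for illustration, not the method any particular central processor uses; the `RangeEstimate` type, the sensor names, and all readings are hypothetical.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class RangeEstimate:
    """One sensor's distance to an object, with its 1-sigma uncertainty (meters)."""

    sensor: str
    distance_m: float
    sigma_m: float


def fuse(estimates: list[RangeEstimate]) -> tuple[float, float]:
    """Inverse-variance weighted fusion of independent range estimates.

    Each estimate is weighted by 1 / sigma^2, so more precise sensors count more.
    Returns the fused distance and its 1-sigma uncertainty, which is never
    larger than that of the best single sensor.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(e.sigma_m <= 0 for e in estimates):
        raise ValueError("sigma must be positive")
    weights = [1.0 / e.sigma_m**2 for e in estimates]
    total = sum(weights)
    fused = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total
    return fused, sqrt(1.0 / total)


# Hypothetical readings of the same object from two sensors.
distance, sigma = fuse([
    RangeEstimate("radar", 50.0, 0.5),
    RangeEstimate("camera", 52.0, 2.0),
])
```

Here the fused distance is about 50.12 m with a sigma of about 0.49 m: the result leans toward the more precise sensor and is more certain than either input alone.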

These are the peripheral components required to implement a complete Surround View System in a Level 2 autonomous vehicle.

Addressing Software Safety

Beyond the sensor technology and sensor fusion lies the complexity of reliable, mission-critical hardware and software. We must also address the safety implications of vehicles that are no longer 100% controlled by humans.

The airline industry has been dealing with "electronically controlled" systems for years, and it has met this challenge with a redundant-systems approach. The cost of that redundancy can be absorbed into the price of a multimillion-dollar aircraft. Multiple redundant systems in a light vehicle, however, while technically an answer, are far too costly for a production car.
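To make the cost trade-off concrete, the sketch below shows triple modular redundancy (TMR), one common redundant-systems pattern: three independent channels compute the same output, and a two-out-of-three voter picks the majority. It also computes the textbook reliability of such a system, assuming independent channel failures and a perfect voter. TMR is used here as a representative example; real avionics architectures vary, and the function names are illustrative.

```python
from collections import Counter
from typing import Hashable, Optional, Sequence


def vote_2oo3(outputs: Sequence[Hashable]) -> Optional[Hashable]:
    """Two-out-of-three majority voter for triple modular redundancy (TMR).

    Returns the value at least two channels agree on, or None if all three differ
    (a detected but unresolvable disagreement).
    """
    if len(outputs) != 3:
        raise ValueError("TMR needs exactly three channel outputs")
    value, count = Counter(outputs).most_common(1)[0]
    return value if count >= 2 else None


def tmr_reliability(r: float) -> float:
    """Reliability of a 2oo3 system with independent channels of reliability r
    and a perfect voter: all three channels work, or exactly two do."""
    return r**3 + 3 * r**2 * (1 - r)  # algebraically equal to 3r^2 - 2r^3
```

For example, `vote_2oo3(["brake", "brake", "coast"])` returns `"brake"`. With a per-channel reliability of 0.99, `tmr_reliability(0.99)` gives 0.999702, cutting the failure probability from 1% to about 0.03%, but only by triplicating the hardware, which is the expense that is hard to justify in a light vehicle.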

Functional safety standards have become an integral part of every automotive product-development flow, spanning specification, design, implementation, integration, verification, validation, and production release. The ISO 26262 standard, an adaptation of the functional safety standard IEC 61508, defines functional safety acceptance criteria for automotive equipment and applies throughout the product lifecycle. Its scope covers automotive electrical and electronic (E/E) safety-related systems.

Specifically, the standard sets out recommended and highly recommended requirements that apply to all safety-critical components throughout their lifecycle. When developing a safety-critical item or component, whether a given requirement must be applied is tied directly to a risk-based approach that lets the developer determine risk classes known as Automotive Safety Integrity Levels (ASILs). ASILs range from A (lowest) to D (highest) and are derived from the severity, exposure, and controllability of each hazard.
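ISO 26262-3 assigns an ASIL from three hazard ratings: severity (S0 to S3), probability of exposure (E0 to E4), and controllability (C0 to C3). The sketch below encodes that risk table using the well-known observation that, for classes 1 and above, the table's result depends only on the sum S + E + C. It is a study aid under that assumption, not a substitute for the table in the standard; the function name is hypothetical, and treating any class-0 rating as QM (no ASIL required) is a simplification.

```python
def determine_asil(severity: int, exposure: int, controllability: int) -> str:
    """Map ISO 26262-3 hazard classes to an ASIL (pass the digit of S, E, C).

    Assumption: for S1-S3, E1-E4, C1-C3 the standard's table follows
    S + E + C: 10 -> D, 9 -> C, 8 -> B, 7 -> A, anything lower -> QM.
    Any class of 0 is treated here as QM (no ASIL assigned).
    """
    if not (0 <= severity <= 3 and 0 <= exposure <= 4 and 0 <= controllability <= 3):
        raise ValueError("hazard class out of range")
    if 0 in (severity, exposure, controllability):
        return "QM"
    total = severity + exposure + controllability
    return {10: "ASIL D", 9: "ASIL C", 8: "ASIL B", 7: "ASIL A"}.get(total, "QM")
```

A hazard rated S3/E4/C3, the most severe combination, maps to ASIL D; lowering controllability to C2 gives ASIL C.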

Determining the risk level of an application or element defines the requirements needed to reduce risk to an acceptable residual level, from the standpoint of both systematic failures and random hardware failures. These include a complete set of requirements for validation, as well as for verification through timely confirmation reviews.
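On the random-hardware-failure side, ISO 26262-5 quantifies how well safety mechanisms cover hardware faults with two architectural metrics: the single-point fault metric (SPFM) and the latent fault metric (LFM). The sketch below computes both from failure rates in FIT (failures per 10^9 hours) and checks them against the target values the standard gives for ASIL B, C, and D. The function names and failure-rate figures are hypothetical, and the targets should be checked against the edition of the standard in use.

```python
def spfm(total_fit: float, spf_fit: float, rf_fit: float) -> float:
    """Single-point fault metric: 1 - (single-point + residual) / total.

    total_fit is the failure rate of all safety-related hardware elements.
    """
    if total_fit <= 0:
        raise ValueError("total failure rate must be positive")
    return 1.0 - (spf_fit + rf_fit) / total_fit


def lfm(total_fit: float, spf_fit: float, rf_fit: float, latent_fit: float) -> float:
    """Latent fault metric: 1 - latent multiple-point / (total - single-point - residual)."""
    remaining = total_fit - spf_fit - rf_fit
    if remaining <= 0:
        raise ValueError("no multiple-point failure rate left to evaluate")
    return 1.0 - latent_fit / remaining


# Target values given in ISO 26262-5: ASIL -> (minimum SPFM, minimum LFM).
TARGETS = {"B": (0.90, 0.60), "C": (0.97, 0.80), "D": (0.99, 0.90)}


def meets_targets(asil: str, spfm_value: float, lfm_value: float) -> bool:
    """True if both metrics reach the targets for the given ASIL."""
    spfm_min, lfm_min = TARGETS[asil]
    return spfm_value >= spfm_min and lfm_value >= lfm_min


# Hypothetical element: 1,000 FIT total, no single-point faults,
# 5 FIT residual faults, 50 FIT latent multiple-point faults.
example_spfm = spfm(1000, 0, 5)
example_lfm = lfm(1000, 0, 5, 50)
```

For this hypothetical element, SPFM is 99.5% and LFM is about 95.0%, so it meets the ASIL D targets. Raising the residual failure rate to 20 FIT drops SPFM to 98%, which still satisfies ASIL C but no longer ASIL D.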

ON Semiconductor, for example, designs key safety features directly into its solutions with both ISO 26262 requirements and system-level technical safety requirements in mind. As a result, system and software developers can implement these safety standards in their products cost-efficiently, including requirements derived from safety goals up to ASIL D.

Self-driving vehicles are coming; there's no stopping them. However, they're not coming as fast as most of us once thought. The delay will likely stem not from semiconductors but from infrastructure, regulation, and cost. Yes, we will see high-end vehicles with highly advanced semi-autonomous capabilities in 2021, and yes, many semi-autonomous options will be available before then. Fully autonomous vehicles, however, won't become mainstream for quite some time: not until they can navigate the orange barrels along the long, winding road to autonomous-vehicle reality.

Reference:

SAE International, summary of the "Levels of Driving Automation for On-Road Vehicles," as defined in its J3016 standard.