Fully autonomous vehicles require sensors that provide accurate detection of objects around the car. Many technologies are being used for this purpose, including video imaging, radar, and LiDAR.

I talked with Yakov Shaharabani, CEO of AdaSky, an Israeli startup in the autonomous vehicle space, about the company’s thermal-sensing solution and how it enables fully autonomous vehicles.

Yakov Shaharabani, CEO, AdaSky

Could you provide a brief overview of who AdaSky is?

AdaSky aims to disrupt the autonomous-vehicle market, delivering the smallest, best-resolution thermal sensor to give self-driving cars the gift of sight and perception, in any lighting or weather condition. We’re headquartered in Yokneam, Israel, and our team is made up of experienced industry vets from the Israeli image-processing and thermal-sensing high-tech market, automotive experts, and machine-learning and computer-vision specialists. Our team of experienced engineers has further innovated and adapted the far-infrared (FIR) technology to the specific needs of self-driving cars, making AdaSky’s solution a critical addition to cars to eliminate vision and perception weaknesses for fully autonomous vehicles.

What inspired AdaSky to enter the automotive industry?

AdaSky was founded with the core vision of advancing the autonomous-vehicle market with a perception solution that increases not only performance, but also the safety of autonomous vehicles. We saw a powerful opportunity in the market. The most basic need for autonomous vehicles is to be able to see and interpret all objects and surroundings, in all conditions. Existing sensors and cameras available today can’t meet this need on their own.

To address this, we engineered a state-of-the-art sensing and perception solution that allows autonomous vehicles to reliably detect, segment, and analyze pedestrians, animals, objects, and road conditions in day or night, regardless of the weather condition.

The timing is now. FIR technology has been used for decades in other vertical industries, making it a mature and proven concept with demonstrated scalable technology priced for the mass market. AdaSky leveraged this mature technology and adapted it specifically for autonomous vehicles to create a solution that complements other sensing technology, such as LiDAR, radar, and standard cameras, while providing a crucial additional layer of vision and brains.

In fact, AdaSky’s solution performs at its best in the use cases where other sensors in the car have no perception and are unable to see. When combined with other sensors, AdaSky’s solution gives autonomous vehicles perception of their surroundings in any condition.

What’s different about AdaSky’s sensing solution for autonomous vehicles?

FIR and thermal sensing have been used in military and aviation for years, so it’s a proven, mature technology that’s scalable for the mass market. It allows autonomous vehicles to detect and analyze pedestrians, animals, and other objects in all driving conditions.

The FIR sensor passively collects the signal in the 8-12 µm band, which carries not only temperature readings but also the emissivity of the material, or how effectively it emits heat. Plus, because each material has different characteristics, FIR can also "see" the lines painted on the road, as well as cracks, potholes, and debris such as tires and rocks.

AdaSky’s Viper is the first of its kind—a thermal sensor with shutterless technology. This means that the vehicle’s vision is never mechanically blinded—not even for a millisecond. AdaSky Viper passively collects the FIR signal that radiates from objects and materials and converts it to VGA video. It then applies proprietary deep-learning computer-vision algorithms to provide accurate object detection, classification, and scene analysis.

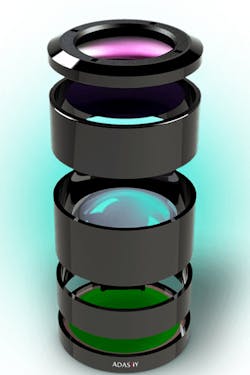

Here's a sectioned view of AdaSky's Viper thermal sensor. (Source: AdaSky)

Viper simply senses signals from objects radiating heat. Most importantly, a FIR thermal camera allows AVs to see and understand the road ahead in almost any lighting or weather condition and can distinguish between living and nonliving objects, and between vehicles, road surfaces, traffic signs, and roadside vegetation.

Moreover, a FIR camera can capture not only the thermal radiation or temperature of an object or material, but also its emissivity, or how effectively it emits heat. Since each material has a different emissivity, the thermal signature of each object is different, which contributes to the contrast in the images seen through AdaSky’s Viper. This enables Viper to see a great deal of detail in an object. The camera then uses this information to create a visual painting of a roadway, including not only other cars, pedestrians, animals, and other objects, but also potholes, debris, traffic signs, and more.
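To make the emissivity point concrete, here is a minimal sketch based on the Stefan-Boltzmann law for a gray body, M = εσT⁴. It is not AdaSky's processing pipeline. The emissivity values are rough illustrative assumptions, and the law gives total radiated power over all wavelengths rather than only the band a FIR sensor observes. The function name `radiant_exitance` is made up for this example.

```python
# Stefan-Boltzmann constant in W / (m^2 K^4)
SIGMA = 5.670374419e-8


def radiant_exitance(emissivity: float, temp_celsius: float) -> float:
    """Total power radiated per unit area by a gray body, in W/m^2."""
    temp_kelvin = temp_celsius + 273.15
    return emissivity * SIGMA * temp_kelvin ** 4


# Illustrative (assumed) emissivities; real values vary with surface finish.
materials = {"asphalt": 0.93, "road paint": 0.85, "bare metal": 0.10}

# All three surfaces sit at the same 20 degrees C, yet radiate differently.
for name, eps in materials.items():
    print(f"{name}: {radiant_exitance(eps, 20.0):.0f} W/m^2")
```

Even at an identical temperature, the three surfaces radiate different amounts of power, which is the kind of difference that shows up as contrast in a thermal image.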

With a sensitivity of 0.05°C that allows for high-contrast imaging, AdaSky Viper can precisely detect vehicles, pedestrians, cyclists, animals, debris, and other objects more than 200 meters away, allowing more distance for an AV to react.
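As a rough illustration of what a 200-meter detection range means in reaction time, the sketch below computes how long a vehicle at constant speed takes to cover that distance. The speeds are assumed examples, not figures from AdaSky, and the calculation ignores braking distance and processing latency.

```python
def seconds_to_reach(distance_m: float, speed_kmh: float) -> float:
    """Time for a vehicle at constant speed to cover distance_m."""
    speed_ms = speed_kmh / 3.6  # convert km/h to m/s
    return distance_m / speed_ms


DETECTION_RANGE_M = 200.0  # detection range quoted in the interview

for speed_kmh in (50, 100, 130):  # assumed example speeds
    t = seconds_to_reach(DETECTION_RANGE_M, speed_kmh)
    print(f"{speed_kmh} km/h: {t:.1f} s to cover {DETECTION_RANGE_M:.0f} m")
```

At 100 km/h, for example, 200 meters corresponds to about 7.2 seconds of travel.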

Unlike other FIR cameras, Viper’s application-specific integrated circuit (ASIC), combined with state-of-the-art image-processing algorithms, delivers high-resolution video. Other FIR cameras are affected by physical artifacts when pointing directly into the sun, creating a shadow or ghosting effect on the image known as a “sunburn” that can take anywhere from minutes to hours to disappear. To compensate, AdaSky Viper uses an algorithm called “sunburn protection” that presents a crystal-clear image for processing.

Viper is also the best in resolution and in battery life, and, because it’s significantly smaller than other camera solutions at only 2.6 cm in diameter by 4.3 cm in length with very low power consumption, Viper is the ideal sensing solution for the complex autonomous vehicle system. As the smallest sensing solution of its kind, it is incredibly easy to integrate into an autonomous vehicle.

What kind of challenges are there for the technologies currently available for autonomous vehicles?

To meet safety requirements, autonomous vehicles need to be able to fully see and understand the roads around them. And they must be able to do this no matter the weather conditions; their sight can’t be compromised in rain, snow, or fog. Vehicles’ sight also needs to be able to withstand dynamic lighting, like direct sunlight, or even drastic changes in lighting, like entering or exiting a tunnel. The sensing technologies that are available today simply can’t do all of this. They experience perception problems far too often, and, to date, no single solution has been able to enable fully autonomous driving.

Accurate image detection is a major challenge for today’s sensing solutions. Examples of use cases where today’s sensing solutions fail include mistaking images of humans, animals, or bicycles in advertisements on buildings or buses for real objects, and inaccurate perception at night or in inclement weather such as fog, snow, or rain. For successful detection, the autonomous vehicle will need either a high-confidence detection from one of the sensing modalities, or a consensus between two or more of the sensing modalities.
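The either/or detection rule described here can be sketched as a small decision function. This is only an illustration of the rule as stated, not AdaSky's or any OEM's fusion logic; the thresholds, the modality names, and the function `object_confirmed` are all assumptions.

```python
from typing import Mapping

HIGH_CONFIDENCE = 0.9   # assumed threshold for a single-sensor detection
AGREE_CONFIDENCE = 0.5  # assumed threshold for counting a sensor as agreeing
MIN_CONSENSUS = 2       # "two or more" modalities, per the interview


def object_confirmed(confidences: Mapping[str, float]) -> bool:
    """Apply the either/or rule to per-modality confidence scores in [0, 1]."""
    scores = list(confidences.values())
    # Path 1: one modality alone is confident enough.
    if any(score >= HIGH_CONFIDENCE for score in scores):
        return True
    # Path 2: at least MIN_CONSENSUS modalities moderately agree.
    agreeing = sum(score >= AGREE_CONFIDENCE for score in scores)
    return agreeing >= MIN_CONSENSUS


# Hypothetical night scene: camera and LiDAR are unsure, FIR is confident.
print(object_confirmed({"camera": 0.2, "lidar": 0.4, "fir": 0.95}))
# Hypothetical billboard image: only the camera "sees" a person.
print(object_confirmed({"camera": 0.7, "lidar": 0.1, "fir": 0.05}))
```

Under these assumed thresholds, the night scene is confirmed by the single confident FIR reading, while the billboard case is rejected because no second modality backs up the camera.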

Most sensors are also confounded by sudden changes in lighting. For instance, consider a vehicle entering or exiting a tunnel. For a human driver, it takes the eyes a few seconds to adjust to the sudden darkness or bright lighting, but cameras and LiDAR are no better—they are also momentarily blinded by the lighting change.

A car driving into the bright sun is blinded to the objects in front of it.

When the light suddenly goes from bright to dark, or vice versa, it takes a human driver a moment to adjust, and, in that moment, they are blinded. The same is true for the cameras integrated in autonomous vehicles.

Do you have any insight to what happened with the Uber accident? Could AdaSky’s technology have played a role in preventing the accident?

The engineering team at AdaSky has created simulations of the driving scenario in which the accident took place, and they have determined that if the car had been equipped with thermal sensors in addition to LiDAR and radar, the car could have detected the pedestrian five seconds sooner than the system in place allowed. For successful detection, the autonomous vehicle will need either a high-confidence detection from one of the sensor modalities, or it will need a consensus between two of the sensing modalities.

Our team believes that the addition of thermal-sensing solutions will fill the current gaps of sensor-fusion modality and that FIR cameras will end up being one of the most important sensors in AVs and an essential part of a sensor suite for full autonomy. In fact, policy-makers are going to require redundancy for critical AV systems, and most OEMs are already gearing up to use multiple sensors and other components in a sensor-fusion solution as fail-safe measures.

But until that happens, testing will continue to be limited, and, ultimately, the progress of self-driving cars could be stopped if more incidents like the recent tragedy occur.

Where does AdaSky see the future of autonomous vehicles going?

It is not a question of if, but when FIR will be used in autonomous vehicles. In the wake of the self-driving Uber accident, the focus should be on who will introduce the safest solution to prevent further accidents like this one. Unlike other current solutions, a FIR thermal camera can function at night with very clear detection of its surroundings, and it can produce segmentation of a pedestrian with a bicycle.

The future mass-market deployment of fully autonomous vehicles relies on FIR technology. One major automotive OEM, BMW, is using thermal-imaging cameras as part of its sensor suites for all self-driving prototypes, and many automakers favor using multiple FIR sensors for the highest level of safety.

Automakers’ goal of deploying autonomous vehicles on public roads by the beginning of the next decade cannot be achieved with the limited capabilities of today’s sensing solutions. Their persistent perception problems mean that vehicles cannot operate safely and reliably without the monitored control of a human driver, making true Level-5 autonomy impossible. FIR cameras are the only technology that can deliver complete classification, identification, and detection of a vehicle’s surroundings in any environment or weather condition and are, thus, the only sensing technology that can make the mass-market adoption of fully autonomous vehicles a reality.