Add Security Best Practices to Automotive Software Development Without Disruption

With the growing complexity of automotive systems, car manufacturers and suppliers increasingly expect software developers to reduce the risks associated with security vulnerabilities and system failures. The broadly implemented safety standard ISO 26262 “Road vehicles – Functional safety” requires any threats to functional safety to be adequately addressed, implicitly including those relating to security, but the standard doesn’t provide explicit guidance on cybersecurity.

At the time of ISO 26262’s publication, that was perhaps to be expected. Automotive embedded applications have traditionally been isolated, static, fixed-function, device-specific implementations, and practices and processes have relied on that status.

But the rate of change in the industry has been such that by the time of its publication in January 2016, SAE International’s Surface Vehicle Recommended Practice SAE J3061 was much anticipated. Created to be complementary to initiatives from other security-related standards groups and organizations like AUTOSAR, SAE J3061 describes a process framework for cybersecurity that an organization can tailor along with its other development processes. This systematic framework supports developers’ efforts to design and build cybersecurity into vehicle systems, as well as to monitor and respond to incidents in the field, and to address vulnerabilities in service and operation.

Beyond Functional Safety

SAE J3061 argues that cybersecurity is likely to be even more challenging than functional safety, stating that, “Since potential threats involve intentional, malicious, and planned actions, they are more difficult to address than potential hazards. Addressing potential threats fully, requires the analysts to think like the attackers, but it can be difficult to anticipate the exact moves an attacker may make.”

Perhaps less obviously, introducing cybersecurity into an ISO 26262-like formal development process implies applying similarly rigorous techniques to applications that are not safety critical, and perhaps within organizations with no previous obligation to adhere to them. The document discusses privacy in general and personally identifiable information (PII) in particular, and highlights PII as a key target for a bad actor, of no less significance than the potential compromise of safety systems.

In practical terms, it therefore demands ISO 26262-like rigor in the defense of all manner of personal details potentially accessed via a connected car, including personal contact details, credit-card and other financial information, and browser histories. It could be argued that this is an extreme example of the general case cited, which states “…there is no direct correspondence between an ASIL rating and the potential risk associated with a safety-related threat.”

Not only does SAE J3061 bring formal development to less safety-critical domains, it also extends the scope of that development far beyond the traditional project-development lifecycle. Examples include the need to establish an incident response process to address vulnerabilities that become apparent when the product is in the field, consideration for over-the-air (OTA) updates, and cybersecurity considerations when a vehicle changes ownership.

To this end, J3061 provides guidance on best development practices from a cybersecurity perspective and calls for a development process as sound as that of the familiar ISO 26262. For example, hazard analyses are performed to assess risks associated with safety, whereas threat analyses identify risks associated with security. The considerations for the resulting security requirements for a system can be incorporated in the process described by ISO 26262, part 8, section 6: “Specification and management of safety requirements.”

Another parallel can be found in the use of static analysis, which is used in safety-critical system development to identify constructs, errors, and faults that could directly affect primary functionality. In cybersecurity-critical system development, static code analysis is used instead to identify potential vulnerabilities in the code.

When applying J3061 in a development team that’s already following ISO 26262, the processes are complementary enough that they lend themselves to integration at each stage of the product lifecycle—to the extent that the same test team could be deployed to fulfill both safety and cybersecurity roles. This allows development team managers to breathe a sigh of relief, knowing that they can begin to implement cybersecurity processes immediately without significant impacts to budgets or schedules.

What is J3061?

J3061 establishes a set of high-level guiding principles on cybersecurity for automotive systems and a defined framework for a lifecycle process that incorporates cybersecurity into automotive cyber-physical systems. It also provides information on common tools and methods for designing and validating cyber-physical automotive systems and establishes a foundation for further standards development activities in vehicle cybersecurity.

J3061 recommends a cybersecurity process for all automotive systems responsible for functions that are Automotive Safety Integrity Level (ASIL) rated per ISO 26262, or that are responsible for functions associated with safety-critical systems, including propulsion, braking, and steering, as well as security and safety. Consumers now expect many automotive applications to be essentially extensions of smartphone capabilities, which opens up entirely new security risks.

For those reasons, J3061 also recommends that a cybersecurity process be applied for all automotive systems that handle PII—even those that may seem trivial today (such as driver preferences) but could present a risk in the future.

The development of J3061 is based on a few guiding principles for developers, including understanding an automotive system’s cybersecurity risks as well as understanding key cybersecurity principles. Security risks include whether a system under development stores or transmits sensitive data or PII, whether it has a role in safety-critical vehicle functions, and whether it will have communication or connection to entities outside of the vehicle’s architecture.

Guiding principles include protecting PII and sensitive data, using the principle of “least-privilege” so that all components run with the fewest possible permissions, and applying defense-in-depth—especially for the highest-risk threats. Developers must also prevent unauthorized changes that could reduce vehicle security after it has been sold, including accessory systems such as Bluetooth-enabled phones.

J3061 offers extensive recommendations on the overall management of cybersecurity within a development organization, from creating and sustaining a cybersecurity culture through incident-response processes. But for today’s development managers, it’s perhaps best-understood through examples of how its engineering processes can be effectively integrated into the current ISO 26262 development lifecycle.

Automating Combined Cybersecurity and Functional Safety Processes

The cybersecurity lifecycle begins with the development of the Cybersecurity Program Plan. Threat analysis and risk assessments (TARA) identify and assess potential threats to the system and determine associated risks. TARA results drive subsequent activities by focusing analysis on the highest-risk cybersecurity threats.
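
As a rough illustration of how TARA results can focus later work on the highest-risk threats, the sketch below ranks threats by a simple severity-times-likelihood score. The `Threat` class, the 1-to-4 scales, the scoring rule, and the example threats are all hypothetical; the text above does not prescribe a particular formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    name: str
    severity: int    # hypothetical scale: 1 (negligible) to 4 (severe)
    likelihood: int  # hypothetical scale: 1 (unlikely) to 4 (very likely)

    @property
    def risk(self) -> int:
        # Assumed scoring rule, for illustration only.
        return self.severity * self.likelihood


def rank_threats(threats):
    """Return threats ordered from highest to lowest risk score."""
    return sorted(threats, key=lambda t: t.risk, reverse=True)


threats = [
    Threat("Spoofed brake command on in-vehicle network", severity=4, likelihood=2),
    Threat("PII leak through infotainment pairing", severity=3, likelihood=3),
    Threat("Tampered driver-preference profile", severity=1, likelihood=3),
]

ranked = rank_threats(threats)
for t in ranked:
    print(f"{t.risk:>2}  {t.name}")
```

A real assessment would typically use a richer scheme, but even this ordering shows how a ranked list can decide where vulnerability analysis and testing effort is spent first.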

1. An ISO 26262-compliant cybersecurity process.

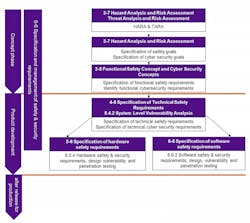

Figure 1 outlines the principles of an ISO 26262-compliant cybersecurity process. It shows the relationship between the concept and product-development phases, and the system, hardware, and software product-development phases. It also illustrates the ability to perform concurrent hazard analysis, safety risk assessment, threat analysis, and security risk assessment using a single integrated template and method.

Applying SAE J3061 Cybersecurity Processes in Accordance with ISO 26262

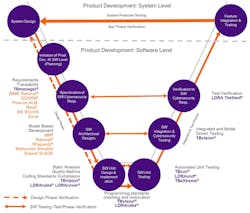

Automated software tools ease the path to certification in the development of SAE J3061- and ISO 26262-compliant applications (Fig. 2). We’ll look at a few examples within the development process to illustrate.

2. Mapping the capabilities of an automated tool chain to SAE J3061 process guidelines.

Specification of software cybersecurity requirements (SAE J3061 Section 8.6.2)

The primary objective in this phase is to review and update the cybersecurity requirements identified in the context of the system as a whole. This references hardware and software interfaces, data flow, data storage, data processing, and any subsystems supporting cybersecurity functionality.

Software architectural design (SAE J3061 Section 8.6.3)

The objective of this phase is to develop a software architectural design that realizes the security requirements, leveraging the analysis of the “data types being used, how the data will flow, how the software will detect errors, and how the software will recover from errors.”

Static-analysis tools help verify the design by reference to the control- and data-flow analysis of the resulting code. The tools derive the relationship between some or all of the code components and represent it graphically so that it can be compared with the design.

Software vulnerability analysis (SAE J3061 Section 8.6.4)

This is a security-specific activity, so it’s supplementary to the processes specified by ISO 26262:2011. SAE J3061 refers to “trust boundaries.” On one side of the boundary, data is untrusted; on the other side, data is assumed to be trustworthy. The software vulnerability analysis (SVA) looks at the cybersecurity requirements and the data flow from the software architectural design to define where these trust boundaries exist.

The first step in the SVA is to decompose the application and analyze the data and control entry and exit points. The second step categorizes threats based on that breakdown. In some models, severity values for process, control, and data areas are determined; in others, any entry point or trust boundary is treated as critical for the final step.
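
To make the idea of a trust boundary concrete, here is a minimal sketch in which raw bytes are treated as untrusted until they pass every check at a single entry point. The frame layout, the `SpeedRequest` type, the checksum rule, and the `MAX_SPEED_KPH` limit are hypothetical and chosen only for illustration.

```python
import struct
from dataclasses import dataclass

MAX_SPEED_KPH = 300  # hypothetical plausibility limit


@dataclass(frozen=True)
class SpeedRequest:
    source_id: int
    speed_kph: int


def parse_untrusted(frame: bytes) -> SpeedRequest:
    """Validate a raw frame at the trust boundary; raise ValueError if invalid."""
    if len(frame) != 4:
        raise ValueError("unexpected frame length")
    # Hypothetical layout: 1-byte source ID, 2-byte big-endian speed, 1-byte checksum.
    source_id, speed_kph, checksum = struct.unpack(">BHB", frame)
    # Simple integrity check only; it does not authenticate the sender.
    if checksum != (sum(frame[:3]) & 0xFF):
        raise ValueError("checksum mismatch")
    if speed_kph > MAX_SPEED_KPH:
        raise ValueError("speed out of range")
    return SpeedRequest(source_id, speed_kph)


def make_frame(source_id: int, speed_kph: int) -> bytes:
    """Helper for the example: build a frame with a matching checksum."""
    body = struct.pack(">BH", source_id, speed_kph)
    return body + bytes([sum(body) & 0xFF])


print(parse_untrusted(make_frame(7, 120)))
```

Code on the trusted side only ever receives a `SpeedRequest`, so the entry point that an SVA would flag for review is confined to one function.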

Software unit design and implementation (SAE J3061 Section 8.6.5)

Complementing ISO 26262 (Fig. 3), best practices include the application of secure coding standards such as MISRA, CERT, or CWE. LDRA static-analysis tools can be configured to combine rules from more than one source.

3. Mapping the capabilities of the LDRA tool suite to “Table 1: Topics to be covered by modelling and coding guidelines” specified by ISO 26262-6:2011.

Coding Guidelines

Software architectural design and unit implementation

The architectural design and unit implementation (Fig. 4) principles required by ISO 26262-6:2011 can usually be checked by means of static analysis, just like coding guidelines.

4. Mapping the capabilities of the LDRA tool suite to “Table 8: Design principles for software unit design and implementation” specified by ISO 26262-6:2011.

Software implementation code reviews (SAE J3061 Section 8.6.6) and software vulnerability testing (SAE J3061 Section 8.6.10)

Software implementation code reviews usually occur as the code is written. A fully integrated tool suite automates that process, helping to ensure that the good practices required by ISO 26262:2011 and SAE J3061 are adhered to whether they’re coding rules, design principles, or principles for software architectural design.

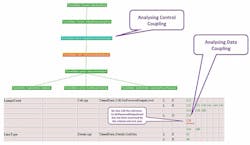

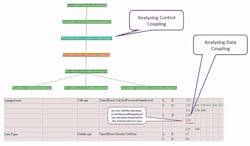

Metrics relating to software component size, complexity, cohesion, and coupling are concerned more with the structure of the code base than with the use of particular constructs. For them to be meaningful, the code needs to be more complete. Complexity metrics are generated as a product of interface analysis, cohesion is evaluated through data object analysis, and coupling through data-control coupling analysis (Fig. 5).

5. Output from control and data-coupling analysis as represented in the LDRA tool suite.
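
As one example of a structural metric, the sketch below computes McCabe's cyclomatic complexity, M = E − N + 2P, from a control-flow graph. This particular metric is an assumption made for illustration; the article does not name the complexity measures a given tool reports. The graph and its node names are hypothetical.

```python
def cyclomatic_complexity(edges, num_components=1):
    """McCabe's metric M = E - N + 2P for a graph given as (src, dst) edges."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2 * num_components


# Hypothetical control-flow graph: one if/else followed by one loop.
cfg = [
    ("entry", "if"),
    ("if", "then"),
    ("if", "else"),
    ("then", "loop"),
    ("else", "loop"),
    ("loop", "body"),
    ("body", "loop"),
    ("loop", "exit"),
]

print(cyclomatic_complexity(cfg))
```

With eight edges and seven nodes the result is 3, which matches the two decision points (the `if` and the loop condition) plus one.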

Software unit testing (SAE J3061 Section 8.6.7) and software integration and testing (SAE J3061 Section 8.6.8)

Dynamic-analysis techniques (which involve the execution of some or all of the code) are helpful in both software unit testing and system integration and testing. Test procedures need to be authored, reviewed, and executed to ensure that the software unit meets its design criteria and doesn’t contain any undesired functionality. Unit tests can then be executed on the target hardware or in a simulated environment based on the verification plan and specification.

Once the test procedures are executed, actual outputs are captured and compared with the expected results. Pass/fail results are then reported and software safety requirements are verified accordingly.
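
The capture-and-compare step can be sketched as a small table-driven harness. The unit under test (`clamp_torque`), its 250 Nm limit, and the test cases are hypothetical stand-ins for whatever the requirements actually specify.

```python
def clamp_torque(request_nm: int, limit_nm: int = 250) -> int:
    """Hypothetical unit under test: limit a torque request to +/- limit_nm."""
    return max(-limit_nm, min(limit_nm, request_nm))


# Each case pairs an input with the expected output derived from a requirement.
TEST_CASES = [
    ("nominal request passes through", 100, 100),
    ("request above limit is clamped", 400, 250),
    ("request below negative limit is clamped", -400, -250),
]


def run_tests(unit, cases):
    """Execute each case, capture the actual output, and record pass/fail."""
    results = []
    for description, test_input, expected in cases:
        actual = unit(test_input)
        results.append((description, expected, actual, actual == expected))
    return results


results = run_tests(clamp_torque, TEST_CASES)
for description, expected, actual, passed in results:
    status = "PASS" if passed else "FAIL"
    print(f"{status}: {description} (expected {expected}, got {actual})")
```

Keeping the cases in a table separate from the harness means the same cases can be run first in a simulated environment and later on the target.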

Integration testing ensures that when the units are working together in accordance with the software architectural design, they meet the related specified requirements. All dynamic testing should use environments that correspond closely to the target environment, but that isn’t always practical.

One approach is to develop the tests in a simulated environment and then, once proven, re-run them on the target. Test tools, while not required, make the process far more efficient.

The example in Figure 6 shows how the software interface is exposed at the function scope, allowing the user to enter inputs and expected outputs. The tool suite then generates a test harness, which is compiled and executed on the target hardware. Actual outputs are captured and compared with the expected outputs.

6. Performing requirement-based unit testing using the LDRA tool suite.

SAE J3061 recommends performing penetration (“pen”) and fuzz testing in accordance with software security requirements. Pen testing is usually a system test technique, performed late in the lifecycle. Robustness testing is closely related to fuzz testing and is complementary in that it can be performed at unit or integration level, and can generate structural coverage metrics. Both robustness and fuzz testing are designed to test response to unexpected inputs. Robustness testing can incorporate techniques such as boundary-value analysis, conditional-value analysis, error guessing, and error seeding.
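
Boundary-value analysis, one of the robustness techniques mentioned above, can be sketched at unit level as follows. The unit under test (`set_fan_speed`) and its 0 to 100 range are hypothetical.

```python
def boundary_values(low: int, high: int):
    """Classic boundary-value set for an integer range [low, high]."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def set_fan_speed(percent: int) -> int:
    """Hypothetical unit under test: accept 0..100, reject anything else."""
    if not 0 <= percent <= 100:
        raise ValueError("fan speed out of range")
    return percent


def robustness_report(unit, low, high):
    """Feed boundary values to the unit and record whether each was accepted."""
    report = {}
    for value in boundary_values(low, high):
        try:
            unit(value)
            report[value] = "accepted"
        except ValueError:
            report[value] = "rejected"
    return report


report = robustness_report(set_fan_speed, 0, 100)
for value, outcome in report.items():
    print(f"{value:>4}: {outcome}")
```

The values just outside the range are the interesting ones: the unit should reject them cleanly rather than accept them or fail in an uncontrolled way.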

Structural coverage metrics

In addition to showing that the software functions correctly, dynamic analysis also generates structural coverage metrics. By comparing results with unit-level requirements, developers can evaluate the completeness of test cases and demonstrate that there’s no unintended functionality. Statement, branch, and MC/DC coverage are provided by both the unit-test and system-test facilities, on the target if required.
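
For readers less familiar with MC/DC, the sketch below checks whether a set of test vectors demonstrates that each condition in a decision independently affects its outcome. It uses the unique-cause form of MC/DC; the `decision` function and the test vectors are hypothetical, and a coverage tool would normally derive this from instrumented code rather than from a hand-written check.

```python
from itertools import combinations


def decision(a: bool, b: bool, c: bool) -> bool:
    """Hypothetical decision under test."""
    return a and (b or c)


def mcdc_covered_conditions(func, vectors):
    """Return indices of conditions shown to independently affect the outcome.

    A condition counts as covered when two test vectors differ only in that
    condition and the decision outcome differs between them.
    """
    covered = set()
    for v1, v2 in combinations(vectors, 2):
        differing = [i for i, (x, y) in enumerate(zip(v1, v2)) if x != y]
        if len(differing) == 1 and func(*v1) != func(*v2):
            covered.add(differing[0])
    return covered


tests = [
    (True, True, False),   # outcome True
    (False, True, False),  # outcome False; pairs with the first to cover a
    (True, False, False),  # outcome False; pairs with the first to cover b
    (True, False, True),   # outcome True; pairs with the third to cover c
]

print(sorted(mcdc_covered_conditions(decision, tests)))
```

Four vectors are enough here to cover all three conditions, whereas exhaustive testing of the same decision would need eight.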

System and unit test can also operate in tandem. So, for instance, coverage can be generated for most of the source code through a dynamic system test and complemented using unit tests to exercise normally inaccessible code, such as defensive constructs (Fig. 7).

7. Examples of representations of structural coverage within the LDRA tool suite.

Verification/validation to software cybersecurity requirements (SAE J3061 Section 8.6.9)

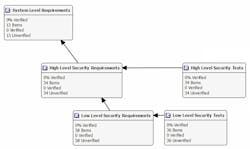

Bidirectional traceability is referenced throughout ISO 26262:2011, and SAE J3061 states that the software cybersecurity design is to be “… traceable to, and validated, against the software cybersecurity requirements” and that the implementation is to be similarly traceable to the design. Tools can help establish traceability from system-level requirements through high- and low-level requirements, and on to source code and test cases (Fig. 8).

8. The Uniview graphic from the TBmanager component of the LDRA tool suite, showing the relationships between cybersecurity tests and requirements.
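
The bidirectional check itself is simple to express. The sketch below flags requirements with no test and tests that trace to no known requirement; the requirement and test identifiers are hypothetical, and a real tool would also trace through design elements and source code.

```python
def traceability_gaps(requirements, tests):
    """Check both directions of a requirement-to-test trace.

    requirements: iterable of requirement IDs.
    tests: dict mapping test ID -> set of requirement IDs it verifies.
    Returns (requirements with no test, tests with a missing or unknown link).
    """
    requirements = set(requirements)
    traced = set().union(*tests.values())
    untested_requirements = requirements - traced
    orphan_tests = {
        test_id
        for test_id, linked in tests.items()
        if not linked or not linked <= requirements
    }
    return untested_requirements, orphan_tests


requirements = ["CSR-1", "CSR-2", "CSR-3"]
tests = {
    "T-1": {"CSR-1"},
    "T-2": {"CSR-1", "CSR-2"},
    "T-3": {"CSR-9"},  # links to a requirement that does not exist
}

untested, orphans = traceability_gaps(requirements, tests)
print("Requirements without tests:", sorted(untested))
print("Tests without valid requirements:", sorted(orphans))
```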

Conclusions

Although ISO 26262 doesn’t directly reference a need for security, it does require complete functional safety requirements. An insecure automotive system is an unsafe one, implying an obligation to tackle security head on. Given that obligation, adherence to SAE J3061 is a logical step.

Fortunately, SAE J3061 can be considered complementary to ISO 26262 in that it provides guidance on best development practices from a cybersecurity perspective, just as ISO 26262 provides guidance on practices to address functional safety. SAE J3061 also calls for a similarly sound development process and mirrors ISO 26262’s development phases. Neither mandates the use of automated tools, but their use is almost essential in all but the simplest projects. Just as the approaches complement each other, the same tools can be used to support them concurrently.

Mark Pitchford is Technical Specialist at LDRA.