
As almost everyone knows, robots have been widely adopted in manufacturing to make factory automation faster and more efficient. As robots have improved, they have taken over human jobs because they perform repetitive tasks faster and with fewer mistakes, and because they can work in environments unsuitable for humans.

As robots become more accurate and intelligent, they’re expected to take on even more human jobs. One prediction is that by 2018, about 1.3 million industrial robots will be introduced to factories around the world. And that’s not all: more consumer robots will emerge to provide a wide range of conveniences and services.

A major problem that must be solved is safe robot-human interaction in factory automation. Because humans and robots often share the same workspace, accidents can occur that injure people or interfere with robot operation. Today’s audible and flashing-light warnings can be enhanced or replaced by more sophisticated sensors and warning equipment. One solution to consider is Texas Instruments’ 3D time-of-flight sensors.

Robots are generally classified into five categories: industrial, logistics, collaborative, service, and drones. This article discusses the first three, whose characteristics are listed below.

Types of Robots

Industrial

• Fixed in position to a floor, wall, or bench.

• Perform specific functions such as lifting and moving, welding, painting, and palletizing.

• Controlled by a computer programmed for the specific operation.

Logistics

• Mobile robots that access stored goods.

• Mobile robots that move materials from one place to another.

• Programmed to traverse specific routes.

• Require many sensors to determine location and direction and to detect collisions.

Collaborative

• Works in cooperation with a human.

• Holds and/or manipulates objects for human access or inspection.

• Operated by a computer programmed for the specific joint task.

Robot-Human Interaction

At some point, all of these robots will have human interaction, whether deliberate or by accident or circumstance. The goal is to protect the human from injury first, then prevent the human from interfering with the robot’s efficient operation.

To solve the problem, industrial robots are usually operated in a fenced-off area. The “fence” may be a physical wall or see-through panel, or an electronic version that uses light beams and optical sensors as an invisible barrier. If the barrier is broken, the robot shuts down. Audible or visible warnings may also be used.
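The light-beam fence described above behaves like a latching interlock: any broken beam stops the robot, and it stays stopped until someone deliberately resets it with the barrier clear. The minimal sketch below models that behavior. The class `LightCurtainInterlock` and its reset rule are hypothetical illustrations; real installations rely on certified safety controllers, not application code like this.

```python
from typing import Sequence


class LightCurtainInterlock:
    """Hypothetical latching interlock for an optical safety fence."""

    def __init__(self) -> None:
        self.tripped = False  # latched stop state

    def update(self, beams_clear: Sequence[bool]) -> bool:
        """Feed one scan of beam states; return True if the robot may run."""
        if not all(beams_clear):
            # Any interrupted beam latches the stop condition.
            self.tripped = True
        return not self.tripped

    def reset(self, beams_clear: Sequence[bool]) -> bool:
        """Manual reset; refused while any beam is still blocked."""
        if all(beams_clear):
            self.tripped = False
        return not self.tripped


curtain = LightCurtainInterlock()
print(curtain.update([True, True, True]))   # True: robot may run
print(curtain.update([True, False, True]))  # False: beam broken, robot stops
print(curtain.update([True, True, True]))   # False: stays stopped until reset
print(curtain.reset([True, True, True]))    # True: operator reset with barrier clear
```

Latching, rather than simply following the beam state, keeps the robot from restarting on its own the moment a person steps back out of the beam.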

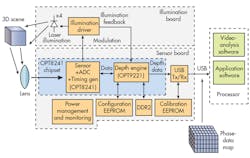

As seen in this system block diagram of the 3D Time-of-Flight evaluation module and processor, the solution consists of two chips: the OPT8241 optical imaging chip and OPT9221 matching controller.

Logistics robots regularly encounter humans during their mobile operations, so they need sensors to detect people. The sensors tell when a human comes within their detection range, and their signals can stop the robot or make it change direction to avoid contact. If a collision occurs, the robot stops and must be restarted by a human.
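One common way to turn a detection range into robot behavior is to divide it into zones: keep operating when the nearest person is far away, slow down or steer around at intermediate distances, and stop when the person is close. The sketch below assumes hypothetical zone limits of 2.0 m and 0.5 m and made-up action names; actual thresholds would come from the robot’s risk assessment. A detected collision maps to a latched stop that needs a human restart, as described above.

```python
from enum import Enum
from typing import Optional


class Action(Enum):
    CONTINUE = "continue"
    AVOID = "avoid"                # slow down and/or change direction
    STOP = "stop"
    LATCHED_STOP = "latched_stop"  # collision: requires a human restart


# Hypothetical zone limits in meters.
AVOID_DISTANCE_M = 2.0
STOP_DISTANCE_M = 0.5


def choose_action(nearest_human_m: Optional[float], collided: bool) -> Action:
    """Map the nearest detected human distance to a robot response."""
    if collided:
        return Action.LATCHED_STOP
    if nearest_human_m is None:  # nobody within detection range
        return Action.CONTINUE
    if nearest_human_m <= STOP_DISTANCE_M:
        return Action.STOP
    if nearest_human_m <= AVOID_DISTANCE_M:
        return Action.AVOID
    return Action.CONTINUE


print(choose_action(None, False))  # Action.CONTINUE
print(choose_action(1.2, False))   # Action.AVOID
print(choose_action(0.3, False))   # Action.STOP
print(choose_action(3.0, True))    # Action.LATCHED_STOP
```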

Collaborative robots are the most sensitive, since they work directly with a human. Multiple sensors are normally used to detect human contact or an approach closer than a set minimum distance. Any contact or distance violation causes the sensors to stop the robot. Sensor redundancy is common.
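Sensor redundancy is often arranged as one-out-of-two (1oo2) voting: the robot stops if either channel reports a violation, and a persistent disagreement between the channels is treated as a sensor fault. The sketch below shows that logic for two contact/proximity channels. The 1oo2 pattern is a standard safety structure, but the class name `RedundantMonitor` and the three-sample fault tolerance are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class RedundantMonitor:
    """Hypothetical 1oo2 voting over two independent safety channels."""

    max_disagree_samples: int = 3  # assumed tolerance before declaring a fault
    disagree_count: int = 0
    fault: bool = False

    def update(self, violation_a: bool, violation_b: bool) -> bool:
        """Return True if the robot must stop for this sample."""
        if violation_a != violation_b:
            self.disagree_count += 1
        else:
            self.disagree_count = 0
        if self.disagree_count >= self.max_disagree_samples:
            # Channels disagreed for too long: treat one as failed (latched).
            self.fault = True
        # 1oo2: either channel alone is enough to stop; a fault also stops.
        return violation_a or violation_b or self.fault


m = RedundantMonitor()
print(m.update(False, False))  # False: both channels clear
print(m.update(True, False))   # True: one channel is enough to stop
m.update(True, False)
m.update(True, False)          # third disagreement in a row sets the fault
print(m.update(False, False))  # True: fault stays latched
```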

All of these robot types require a sensor that reliably detects the presence and, ideally, the movement of humans. It’s a true design challenge.

Human Detection

A wide range of human-detection devices populate the market these days. Most work on a radar-like principle: they radiate a signal and detect its reflection. Ultrasonic, infrared (IR), and LIDAR sensors have all been adopted, with varying effectiveness and cost.
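For sensors that time an echo, such as ultrasonic rangers and pulsed laser rangefinders, the signal travels to the target and back, so the one-way distance is half the round-trip path: d = v·t/2. The sketch below applies that to an ultrasonic echo (speed of sound taken as 343 m/s, dry air at about 20 °C) and to a light pulse. The round-trip times are made-up illustrative values.

```python
SPEED_OF_SOUND_M_S = 343.0          # dry air at about 20 °C
SPEED_OF_LIGHT_M_S = 299_792_458.0  # vacuum; air is within about 0.03%


def echo_distance_m(round_trip_s: float, speed_m_s: float) -> float:
    """One-way distance from a round-trip echo time: d = v * t / 2."""
    return speed_m_s * round_trip_s / 2.0


# Illustrative timings for a target about 1.5 m away.
print(f"{echo_distance_m(8.75e-3, SPEED_OF_SOUND_M_S):.2f} m")  # 1.50 m (ultrasonic)
print(f"{echo_distance_m(10e-9, SPEED_OF_LIGHT_M_S):.2f} m")    # 1.50 m (light pulse)
```

The second case shows why optical ranging is demanding: light returns from a 1.5-m target in about 10 ns, versus roughly 9 ms for sound, so the timing electronics must be far faster.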

Light detection and ranging (LIDAR) is probably the most accurate and reliable option, but also the most complex and expensive. LIDAR illuminates the target with laser light, and photodiodes detect the backscatter. Analyzing the collected scene reveals objects and their movement in 3D. Cost and complexity keep LIDAR out of most robot installations.

One of the most useful and cost-effective sensing options is the 3D Time-of-Flight (ToF) system from Texas Instruments. The ToF system was developed for robot obstacle detection, collision avoidance, and general navigation. It works by illuminating a scene with modulated IR light, and then measuring the phase delay of the reflected light signal. The phase delay is proportional to the distance to the reflecting object, up to the distance at which the phase wraps around after one full modulation cycle.
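With continuous-wave modulation, the reflected light covers the round trip 2d, which delays its phase by Δφ = 4π·f_mod·d/c. Solving for distance gives d = c·Δφ/(4π·f_mod). The sketch below implements that conversion; the 20-MHz modulation frequency in the example is an arbitrary illustrative value, not a documented operating point.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def phase_to_distance_m(phase_rad: float, f_mod_hz: float) -> float:
    """Distance from the measured phase delay of CW-modulated light.

    The light covers the round trip 2*d, so phase = 4*pi*f_mod*d/c,
    and therefore d = c * phase / (4*pi*f_mod).
    """
    return SPEED_OF_LIGHT_M_S * phase_rad / (4.0 * math.pi * f_mod_hz)


f_mod = 20e6  # illustrative modulation frequency, Hz
for phase in (math.pi / 4, math.pi / 2, math.pi):
    print(f"{phase:.3f} rad -> {phase_to_distance_m(phase, f_mod):.3f} m")
```

Doubling the phase doubles the distance, which is the proportionality the text describes; at 20 MHz a phase delay of π corresponds to about 3.75 m.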

Four 850-nm IR VCSEL laser diodes illuminate the scene. The IR beams are modulated with a 50% duty-cycle square wave in the 12- to 80-MHz range. The reflected light is captured by a camera made up of a lens and light sensor that offers a QVGA resolution of 320 × 240 pixels. An 80- × 60-pixel resolution is also available.
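Because phase wraps around every 2π, a single modulation frequency can measure distance unambiguously only up to d_max = c/(2·f_mod); beyond that, readings alias back to shorter distances. The sketch below evaluates that limit at the two ends of the 12- to 80-MHz range given above. This is general CW-ToF arithmetic, not a published TI figure.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def unambiguous_range_m(f_mod_hz: float) -> float:
    """Largest distance measurable before the phase wraps past 2*pi."""
    return SPEED_OF_LIGHT_M_S / (2.0 * f_mod_hz)


for f_mhz in (12, 80):
    print(f"{f_mhz} MHz: {unambiguous_range_m(f_mhz * 1e6):.2f} m")
# 12 MHz: 12.49 m
# 80 MHz: 1.87 m
```

Higher modulation frequencies shorten the unambiguous range but generally improve depth resolution, which is one reason CW-ToF systems tend to offer a range of modulation frequencies.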

The light level on each pixel is measured, digitized, and used in a parallel computing process that results in a depth map of the scene. Distance is determined by a cross-correlation technique; maximum range is about four meters. The system computes a collection of XYZ data points in space, giving the robot a 3D picture of the scanned area. Depending on the type of processor used, the system can support frame rates from 10 to 60 frames per second.
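A common way to implement the correlation step in CW-ToF sensors is four-phase sampling: each pixel correlates the returned light with the reference modulation at offsets of 0°, 90°, 180°, and 270°, and the phase, amplitude, and background level follow from those four values. The sketch below does this for a single pixel. Whether the OPT8241/OPT9221 use exactly this scheme is an assumption, and sign conventions differ between sensors.

```python
import math
from typing import Sequence, Tuple

SPEED_OF_LIGHT_M_S = 299_792_458.0


def demodulate_four_phase(
    q0: float, q90: float, q180: float, q270: float
) -> Tuple[float, float, float]:
    """Recover phase, amplitude, and offset from four correlation samples.

    Assumed sample model: q_k = offset + amplitude * cos(phase - theta_k)
    with theta_k = 0, 90, 180, 270 degrees.
    """
    i = q0 - q180   # = 2 * amplitude * cos(phase)
    q = q90 - q270  # = 2 * amplitude * sin(phase)
    phase = math.atan2(q, i) % (2.0 * math.pi)  # wrap into [0, 2*pi)
    amplitude = math.hypot(i, q) / 2.0          # often used as a confidence value
    offset = (q0 + q90 + q180 + q270) / 4.0     # ambient light plus mean signal
    return phase, amplitude, offset


def pixel_depth_m(samples: Sequence[float], f_mod_hz: float) -> float:
    """Depth of one pixel from its four correlation samples."""
    phase, _, _ = demodulate_four_phase(*samples)
    return SPEED_OF_LIGHT_M_S * phase / (4.0 * math.pi * f_mod_hz)


# Synthesize samples for a target at 1.0 m with 20-MHz modulation, then recover it.
f_mod = 20e6
true_phase = 4.0 * math.pi * f_mod * 1.0 / SPEED_OF_LIGHT_M_S
samples = [100.0 + 50.0 * math.cos(true_phase - math.radians(t)) for t in (0, 90, 180, 270)]
print(f"{pixel_depth_m(samples, f_mod):.3f} m")  # 1.000 m
```

Taking differences (q0 − q180, q90 − q270) cancels the constant background, which is why this approach tolerates ambient light reasonably well.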

For more detail on the 3D ToF method, check out TI’s video series.

TI’s 3D ToF technology is available as individual chips or as a complete evaluation module. The OPT8241-CDK-EVM is the official evaluation module for the second-generation 3D ToF sensor from Texas Instruments.

The 3D ToF EVM is a two-chip solution for robot navigation (see figure). The OPT8241 is an optical imaging chip, and the OPT9221 is a matching controller that interfaces to a processor via a USB port. Processor type and speed depend on the desired frame rate. Typical processors are the Cortex-M4, Cortex-A9 (Sitara AM437x), and Cortex-A15 (Sitara AM57x). The system also requires Linux support. A software development kit is available from Voxel.
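A quick throughput estimate shows why frame rate drives processor choice. Each QVGA frame holds 320 × 240 = 76,800 depth points, and the processor must handle that many points every frame. The sketch below tabulates the point rate for both sensor resolutions across the 10 to 60 frames per second quoted earlier. It reports points rather than bytes, because converting to USB bandwidth would require an assumed bytes-per-point figure.

```python
RESOLUTIONS = {"QVGA 320x240": (320, 240), "80x60": (80, 60)}
FRAME_RATES_FPS = (10, 30, 60)


def points_per_second(width: int, height: int, fps: int) -> int:
    """Depth points the processor must ingest each second."""
    return width * height * fps


for name, (width, height) in RESOLUTIONS.items():
    for fps in FRAME_RATES_FPS:
        rate = points_per_second(width, height, fps)
        print(f"{name} @ {fps:2d} fps: {rate:>9,} points/s")
```

At QVGA resolution, going from 10 to 60 frames/s raises the load from 768,000 to 4,608,000 points per second, a sixfold increase.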

The OPT8241 chip drives four modulated, 850-nm laser diodes that illuminate the monitored area. The near-IR light reflected from people and objects is captured on an internal 320- × 240-pixel QVGA sensor capable of 60-frame/s operation. The phase shift between the emitted and captured light is detected, digitized, and sent to the OPT9221 controller. The OPT9221 then reconstructs the scene and, using optimized algorithms, creates a 3D output for analysis and, eventually, display. The processor handles the analytics and end application.
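Turning a depth map into XYZ points is typically done with a pinhole camera model: each pixel’s depth is projected back along its viewing ray using the lens focal length and optical center. The sketch below does this and then finds the nearest point, the kind of simple analytic an end application might use to judge whether a person is too close. The intrinsics (FX, FY, CX, CY) are hypothetical values for a 320 × 240 sensor, not OPT8241 calibration data, and depth is assumed to be measured along the optical axis (Z); a real system would take both the calibration and the depth convention from its SDK.

```python
import math
from typing import List, Tuple

# Hypothetical intrinsics for a 320 x 240 ToF camera (not real calibration data).
FX, FY = 210.0, 210.0  # focal lengths in pixels
CX, CY = 159.5, 119.5  # principal point (image center)

Point = Tuple[float, float, float]


def depth_to_points(depth_m: List[List[float]]) -> List[Point]:
    """Back-project a depth map (Z along the optical axis, meters) to XYZ points.

    Pixels with depth <= 0 are treated as invalid and skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth_m):
        for u, z in enumerate(row):
            if z <= 0.0:
                continue
            x = (u - CX) * z / FX
            y = (v - CY) * z / FY
            points.append((x, y, z))
    return points


def nearest_distance_m(points: List[Point]) -> float:
    """Euclidean distance from the camera to the closest point (inf if none)."""
    return min((math.sqrt(x * x + y * y + z * z) for x, y, z in points), default=math.inf)


# Synthetic scene: a flat wall at 3.5 m with a 20 x 40-pixel "person" at 1.2 m.
depth = [[3.5] * 320 for _ in range(240)]
for v in range(100, 140):
    for u in range(150, 170):
        depth[v][u] = 1.2

cloud = depth_to_points(depth)
print(len(cloud))                          # 76800
print(f"{nearest_distance_m(cloud):.3f} m")  # 1.200 m
```

The nearest-point distance could then feed zone logic on the processor, with a real application adding filtering and segmentation to separate people from fixed objects.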

The system’s accuracy enables reliable human detection, and it can even recognize body movement. At present, few other human-detection methods match the accuracy of the 3D ToF system.