What you’ll learn:

- What is sensor fusion?

- How will sensor fusion impact various industries?

- What underlying technologies are required for sensor fusion?

Though we’re mostly unaware of it, our senses relentlessly work together to guide us through the world. Our vision, hearing, and sense of touch and smell create mental images of the environment at every instant, helping us make decisions. Is it safe to merge into the next lane? Is this piece of fruit edible?

Because it’s all involuntary, we rarely notice how complex the process is. That intricacy becomes apparent when we try to teach machines the same skill. While vast improvements in AI capabilities make it less of a challenge, they must be coupled with innovations in everything from the hardware and software that power the system to the sensors themselves.

The accuracy of a machine’s insights, however, hinges heavily on the quality of data collection at the edge and on real-time processing capabilities. The data must be comprehensive, manageable, and explainable. By intelligently combining hardware and software, we can get closer to mimicking both the apparent simplicity and the underlying complexity of the human senses through sensor fusion.

The Ins and Outs of Sensor Fusion

Sensor fusion is the process of combining data from multiple sensors to estimate a quantity, such as an object’s position or speed, more reliably than any single sensor could alone (Fig. 1). AI applications use sensor fusion to improve the accuracy of predictions by ingesting data from various sources.
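One simple way to picture "combining data to estimate a quantity" is inverse-variance weighting, where each sensor's reading counts in proportion to how much it can be trusted. The sketch below is only an illustration under stated assumptions: the `fuse` function, the radar and camera readings, and their variances are all hypothetical, and real systems typically use more elaborate estimators.

```python
def fuse(measurements):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and the variance of the fused estimate.
    Assumes the sensor errors are independent and unbiased.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    # A sensor with a small variance gets a large weight.
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total


# Hypothetical readings of the distance to an obstacle, in meters.
radar = (10.2, 0.04)   # (value, variance): assumed to be precise in range
camera = (9.6, 0.36)   # assumed to be a noisier depth estimate

fused_value, fused_var = fuse([radar, camera])
print(f"fused distance: {fused_value:.2f} m, variance: {fused_var:.3f}")
```

The fused estimate lands closer to the more trustworthy sensor, and its variance is lower than that of either input, which is the basic payoff of fusing sensors rather than picking one.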

Sensor fusion is a staple in a wide range of industries, where it improves functional safety and performance. Consider, for example, advanced driver-assistance systems (ADAS) in cars. When behind the wheel, we take for granted how our vision, hearing, and touch work together to identify potential hazards on the road.

Today, auto giants are taking cues from the human brain, placing sensors like LiDAR, radar, cameras, and ultrasonic sensors around the vehicle and processing the data to interpret conditions on the road ahead. They’re also using sensor fusion to achieve greater object detection certainty.

Another example is the burgeoning drone sector. Drones operate in complex, dynamic environments where they must navigate obstacles and maintain stable flight while handling tasks like surveying or delivery. By fusing data from multiple sensors, such as cameras, inertial measurement units (IMUs), and GPS, among many others, drones can estimate their position, orientation, and velocity. That allows them to adapt to changes in their environment and complete their missions successfully.
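To make the drone example more concrete, here is a minimal sketch of one widely used fusion technique, a Kalman filter, reduced to a single axis. It is not a description of how any particular drone works. The function `kalman_step`, the velocity value (assumed to come from IMU-based dead reckoning), the GPS fixes, and all noise variances are hypothetical values chosen for illustration.

```python
def kalman_step(x, p, velocity, dt, q, z, r):
    """One predict/update cycle of a scalar Kalman filter.

    x, p     -- current position estimate (m) and its variance
    velocity -- velocity from the motion sensor (assumed IMU-derived), m/s
    dt       -- time step in seconds
    q        -- process noise variance added at each step
    z, r     -- GPS position fix and its variance; z is None if no fix arrived
    """
    # Predict: dead-reckon forward, and let the uncertainty grow.
    x = x + velocity * dt
    p = p + q
    if z is None:
        return x, p
    # Update: blend in the GPS fix according to the Kalman gain.
    k = p / (p + r)
    x = x + k * (z - x)
    p = (1.0 - k) * p
    return x, p


# Hypothetical flight along one axis at about 2 m/s,
# with a GPS fix arriving on every other step.
x, p = 0.0, 1.0
gps_fixes = [None, 4.3, None, 7.9, None, 12.2]
history = []
for z in gps_fixes:
    x, p = kalman_step(x, p, velocity=2.0, dt=1.0, q=0.1, z=z, r=4.0)
    history.append((x, p))

for step, (pos, var) in enumerate(history, start=1):
    print(f"step {step}: position {pos:.2f} m, variance {var:.3f}")
```

Between GPS fixes the variance grows as the filter relies on motion data alone, and it shrinks each time a fix arrives. A full implementation would track position, orientation, and velocity jointly in three dimensions.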

In addition to traditional sensors, event-based vision sensors can deliver even more lifelike sensory experiences. These image sensors operate by detecting changes at the level of individual pixels in a scene, thus enhancing the level of detail that robots or other systems can extract from the environment. Featuring a temporal precision equivalent to more than 10,000 frames per second (fps) and a wide dynamic range, this technology promises to capture motion better than virtually any other camera.
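The idea of reporting only what changes can be sketched in a few lines. The example below uses a common simplified model of an event-based sensor, in which a pixel emits an event when its log intensity changes by more than a threshold. Real sensors do this asynchronously in hardware without frames, so the two small "frames", the `events_between` function, and the threshold value are stand-ins assumed purely for illustration.

```python
import math


def events_between(prev_frame, next_frame, threshold=0.2):
    """Return (row, col, polarity) for each pixel whose log intensity
    changed by more than `threshold` between two intensity snapshots.

    Polarity is +1 for a brightness increase and -1 for a decrease.
    """
    eps = 1e-6  # avoids log(0) on completely dark pixels
    events = []
    for r, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for c, (before, after) in enumerate(zip(prev_row, next_row)):
            delta = math.log(after + eps) - math.log(before + eps)
            if abs(delta) > threshold:
                events.append((r, c, 1 if delta > 0 else -1))
    return events


# Hypothetical 2x3 intensity snapshots with values in [0, 1].
prev_frame = [[0.5, 0.5, 0.5],
              [0.5, 0.5, 0.5]]
next_frame = [[0.5, 0.9, 0.5],
              [0.2, 0.5, 0.5]]

events = events_between(prev_frame, next_frame)
print(events)
```

Only the two pixels that changed produce output. The static pixels generate no data at all, which is why this style of sensing suits fast motion.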

As image sensors and other types of sensors evolve and costs continue to decrease, sensor fusion is bound to become even more widespread and move into many new use cases.

Integrating Hardware Plus Software in AI Systems

Hardware is only one part of the equation. Software is equally important. With advances in computer vision and the rising tide of large language models (LLMs), robots and other systems are gaining the ability to not only see the world around them, but also, more broadly, understand their surroundings with unprecedented accuracy.

The software that processes this sensory information continues to improve in both the performance it delivers and the complexity it can handle. We’re already seeing semi-autonomous cars use a combination of LiDAR, radar, cameras, and other sensors, with the data processed by AI tools running inside hardware accelerators, to identify threats and apply the brakes to potentially avoid crashes.

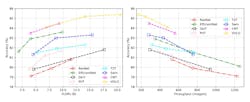

Several different AI models are used in computer vision (CV), most notably convolutional neural networks (CNNs) and vision transformers. Transformers are a type of neural network that learns context by tracking relationships in data, and they are also the architecture behind LLMs. These approaches have evolved over time (Fig. 2). The advances in AI have been bolstered by a surge in computational power and reduced processing and storage costs.
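The phrase "tracking relationships in data" refers to the attention mechanism at the core of a transformer. As a rough sketch only, the code below computes scaled dot-product self-attention over three made-up image-patch feature vectors. It deliberately omits the learned query, key, and value projections that a real transformer applies, and the `patches` values and function names are hypothetical.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    peak = max(scores)  # subtracting the max improves numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections.

    tokens -- list of equal-length feature vectors
    Returns (outputs, weights), where weights[i][j] is how strongly
    token i attends to token j.
    """
    dim = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        row = softmax(scores)
        weights.append(row)
        # Each output is a weighted mix of all token vectors.
        outputs.append(
            [sum(w * tok[i] for w, tok in zip(row, tokens)) for i in range(dim)]
        )
    return outputs, weights


# Hypothetical features for three image patches; the first two are similar.
patches = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(patches)
for row in weights:
    print([round(w, 3) for w in row])
```

The first patch places more weight on the similar second patch than on the dissimilar third one, which is the sense in which the network relates each piece of the input to the others.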

As Figure 2 shows, computer vision can reach accuracy levels ranging from 79% to 85%, accompanied by a substantial increase in throughput. Several techniques, including data augmentation, transfer learning, architecture extensions, prompt engineering, and custom loss functions, could help increase the accuracy of AI models further. This has the potential to push CV into all-new applications.

Further enhancing the capability of sensor fusion is the rise in proprietary AI chips and hardware accelerators specifically tailored to this task. Combined with improved cameras, radar, LiDAR, GPS, and other sensors, hardware is feeding the software brain with ever-more accurate data to work from.

To effectively process inputs from multiple disparate sensor sources, it’s essential to deploy specialized processing units that are optimized for each function. Thus, a heterogeneous processing platform and a software stack that not only supports training the AI model in the cloud, but also facilitates seamless deployment onto edge solutions, can ease development efforts.

It’s also vitally important to use AI models that can leverage inputs from several different sensors at once, so that they can bridge the blind spots inherent to each sensor and provide a comprehensive picture of the system’s surroundings. The output is, therefore, greater than the sum of its inputs. A flexible and configurable platform is crucial to enable real-time processing of sensor inputs and to ensure that all of these sensors, and the data they’re feeding to the system, remain in sync.
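Keeping sensors in sync often starts with matching samples by timestamp. The sketch below pairs each camera frame with the nearest LiDAR sweep and discards pairs that are too far apart in time. The function names, the timestamps, and the 10-ms tolerance are assumptions made for illustration. Production systems often rely on hardware triggering or shared clocks as well.

```python
from bisect import bisect_left


def nearest_index(timestamps, t):
    """Index of the entry in sorted, non-empty `timestamps` closest to t."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Pick whichever neighbor is closer in time.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def align(camera_ts, lidar_ts, tolerance):
    """Pair each camera frame with the nearest LiDAR sweep.

    Returns (camera_index, lidar_index) pairs whose timestamps differ
    by at most `tolerance` seconds; unmatched frames are dropped.
    """
    pairs = []
    for cam_i, t in enumerate(camera_ts):
        lidar_i = nearest_index(lidar_ts, t)
        if abs(lidar_ts[lidar_i] - t) <= tolerance:
            pairs.append((cam_i, lidar_i))
    return pairs


# Hypothetical timestamps in seconds: a ~30-fps camera and a 10-Hz LiDAR.
camera_ts = [0.000, 0.033, 0.066, 0.100]
lidar_ts = [0.005, 0.105]

pairs = align(camera_ts, lidar_ts, tolerance=0.010)
print(pairs)
```

Only the first and last camera frames fall within 10 ms of a LiDAR sweep, so only those two pairs are passed on for fusion. A looser tolerance keeps more data at the cost of fusing measurements that describe slightly different moments.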

Hardware acceleration for digital signal processing (DSP), video streaming pipelines, optimized AI acceleration, and a wide range of sensors with varying interfaces are all key to the success of sensor fusion.

The Future of Sensor Fusion

The goal of sensor fusion is to imitate the brain’s sensing capabilities. Just as the human brain takes in inputs from various “sensors” or “systems,” such as the nervous system and muscular system, to stitch together a fuller picture of the world around us, robots and other machines are bound to adopt this approach to tackle ever-more complex tasks.