
Many of us can relate to battery-powered devices that display how much power or run time they have left, if only because so many such gadgets surround us at home. From electric shavers to tablets, we rely on all sorts of battery indicators to decide whether and how to use these devices. Over time, we learn how accurate each device’s indicator is and how much confidence to place in it when it reports, for example, 10% power left.

In higher-power multicell applications such as ebikes, battery-backup systems, power tools, and medical instrumentation, running out of power can be far more critical. A spare battery pack may not always be available, or continuous operation for a specific length of time may be required. That’s where we can better appreciate accurate battery gas gauging (aka fuel gauging): assessing how much charge a battery or battery pack holds at any point in time.

Handling Battery-System Challenges

Battery gas gauging is just one of several functions typically found in a smart multicell battery system, alongside charging, protection, and cell-balancing circuitry. Whatever the function, battery systems present a unique set of design challenges because a battery’s electrical characteristics are constantly changing.

For example, a battery’s maximum capacity (tracked as its state-of-health, or SOH) decreases over time, its self-discharge rate tends to increase with age, and its charge and discharge behavior varies with temperature. Well-designed battery systems continuously compensate for as many of these parametric shifts as possible to give end users consistently accurate battery performance figures, such as charging time, remaining power, or expected battery lifetime (or number of charge cycles left).

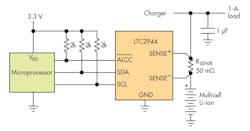

1. Linear Technology’s LTC2944 60-V battery gas gauge offers accurate gauging for single-cell or multicell systems.

Simply put, accurate battery gas gauging requires an accurate battery gas-gauge IC and a relevant, battery-specific model. Together, they provide systems with the most coveted parameter in battery gas gauging: state-of-charge (SOC), the remaining battery capacity expressed as a percentage of maximum capacity.

While certain battery gas gauges on the market integrate battery models and algorithms to provide direct SOC estimates, peeling back the onion reveals that these devices tend to oversimplify SOC estimation at the expense of accuracy. Moreover, they usually work only with particular battery chemistries and require additional external components to interface with high voltages.

Advances have been made to address these problems, though. For example, Linear Technology’s LTC2944 (Fig. 1) is a 60-V battery gas gauge that intentionally provides only the bare essentials for accurate single-cell or multicell battery gas gauging.

Count on Counting Coulombs

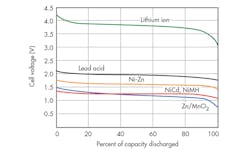

Recent studies have shown that precise coulomb counting, together with voltage, current, and temperature measurements, is a prerequisite for accurate SOC estimation; even so, the methods reported to date still carry an error of at least 5%. These parameters allow us to pinpoint where a battery sits along a charge or discharge curve. Here, coulomb counting not only reinforces voltage readings but also helps distinguish positions along any flat region of a curve, where voltage alone changes very little. Figure 2 shows typical discharge curves for different battery chemistries.

2. Shown are typical discharge curves for different battery chemistries.

Counting coulombs helps avoid, for example, a device misleadingly reporting 75% SOC for a long period of time and then suddenly dropping to 15% SOC, which tends to happen in devices that assess SOC by measuring voltage alone. To count coulombs, users initialize a coulomb counter to a known battery capacity when the battery is fully charged, then count down as charge flows out during discharge and count up as it flows in during charging (to account for partial charging). The beauty of this scheme is that the battery chemistry needn’t be known. Because the LTC2944 integrates a coulomb counter, the device can be reused across multiple designs regardless of battery chemistry.
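The counting scheme just described can be sketched in a few lines of Python. This is a minimal, chemistry-agnostic illustration under stated assumptions, not LTC2944 firmware: the class name `CoulombCounter`, the sign convention (positive current charges the battery), and the 2,000-mAh capacity are all hypothetical.

```python
class CoulombCounter:
    """Minimal software coulomb counter (hypothetical helper, not an LTC2944 API).

    Sign convention (assumed): positive current charges the battery,
    negative current discharges it.
    """

    def __init__(self, full_capacity_mah: float):
        self.full_capacity_mah = full_capacity_mah
        # Initialize to a known capacity when the battery is fully charged.
        self.charge_mah = full_capacity_mah

    def update(self, current_ma: float, dt_s: float) -> None:
        # Charge is the time integral of current: dQ = I * dt (mA * h = mAh).
        self.charge_mah += current_ma * dt_s / 3600.0
        # Clamp to the physically meaningful range.
        self.charge_mah = min(max(self.charge_mah, 0.0), self.full_capacity_mah)

    def soc_percent(self) -> float:
        # SOC: present charge as a percentage of maximum capacity.
        return 100.0 * self.charge_mah / self.full_capacity_mah


counter = CoulombCounter(full_capacity_mah=2000.0)
counter.update(current_ma=-1000.0, dt_s=3600.0)  # discharge at 1 A for 1 h
print(round(counter.soc_percent(), 1))           # 50.0
counter.update(current_ma=500.0, dt_s=1800.0)    # partial charge: 0.5 A for 30 min
print(round(counter.soc_percent(), 1))           # 62.5
```

Note that nothing in the class depends on battery chemistry; only the initial full-capacity value is battery-specific.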

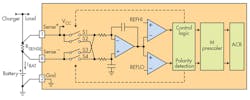

Take a look at how the LTC2944 counts coulombs (Fig. 3). Remember, charge is the time integral of current. The LTC2944 measures charge with up to 99% accuracy by monitoring the voltage developed across a sense resistor, within a ±50-mV sense voltage range. This differential voltage is applied to an auto-zeroed differential analog integrator, whose output is used to infer charge. When the integrator output ramps to the high or low reference level (REFHI or REFLO), switches toggle to reverse the ramp direction.

Control circuitry then observes the state of the switches and the ramp direction to determine polarity. Next, a programmable prescaler lets users increase the integration time by a factor of 1 to 4096. With each overflow or underflow of the prescaler, the accumulated charge register (ACR) is incremented or decremented by one count.
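To make the ramp-and-toggle mechanism concrete, here is a rough behavioral model of an integrator-based coulomb counter. It is an illustration under simplifying assumptions, not a model of the actual LTC2944 circuit: the integer reference levels, the per-step sense-voltage units, the midscale ACR starting value, and the class name `IntegratorCounterModel` are all hypothetical, and in this model a positive sense voltage counts as charge flowing in. Each threshold crossing yields one signed tick whose polarity follows from the switch state and which threshold was hit; the prescaler divides ticks by M before the ACR moves by one count.

```python
# Hypothetical behavioral model; thresholds and units are arbitrary, not LTC2944 values.
REF_LO, REF_HI = 0, 10_000


class IntegratorCounterModel:
    def __init__(self, prescaler_m: int, acr_start: int = 0x7FFF):
        if not 1 <= prescaler_m <= 4096:
            raise ValueError("prescaler M must be between 1 and 4096")
        self.m = prescaler_m
        self.x = (REF_LO + REF_HI) // 2  # integrator output, starts mid-range
        self.direction = +1              # switch state: +1 normal, -1 inputs swapped
        self.prescaler = 0               # signed tick count inside the prescaler
        self.acr = acr_start             # 16-bit accumulated charge register (model)

    def step(self, v_sense: int) -> None:
        """Advance one time step with a signed sense voltage in arbitrary units."""
        if abs(v_sense) >= REF_HI - REF_LO:
            raise ValueError("model assumes at most one threshold crossing per step")
        self.x += self.direction * v_sense
        if self.x > REF_HI:
            polarity = self.direction        # hit REFHI: ramp was rising
            self.x = 2 * REF_HI - self.x     # reflect the overshoot
        elif self.x < REF_LO:
            polarity = -self.direction       # hit REFLO: ramp was falling
            self.x = 2 * REF_LO - self.x
        else:
            return                           # no threshold reached this step
        self.direction = -self.direction     # toggle switches to reverse the ramp
        self._tick(polarity)

    def _tick(self, polarity: int) -> None:
        # The prescaler divides ticks by M before the ACR moves by one count.
        self.prescaler += polarity
        if self.prescaler >= self.m:         # overflow: charge flowed in
            self.prescaler = 0
            self.acr = min(self.acr + 1, 0xFFFF)
        elif self.prescaler <= -self.m:      # underflow: charge flowed out
            self.prescaler = 0
            self.acr = max(self.acr - 1, 0)


model = IntegratorCounterModel(prescaler_m=4)
for _ in range(100):
    model.step(1000)                         # constant positive sense voltage
print(model.acr - 0x7FFF)                    # 2 (10 ticks divided by M = 4)
```

Raising M trades ACR resolution for range: each count represents more charge, so the 16-bit register takes longer to overflow.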

It’s worth noting that the analog integrator used in the LTC2944’s coulomb counter introduces minimal differential offset voltage and therefore minimizes offset’s contribution to total charge error. Many coulomb-counting battery gas gauges instead perform an analog-to-digital conversion of the voltage across the sense resistor and accumulate the conversion results to infer charge. In such a scheme, the differential offset voltage can be the main source of error, especially when the sense signal is small.

3. In terms of counting coulombs, the LTC2944 measures charge with up to 99% accuracy, according to Linear Tech.

For example, consider a battery gas gauge with an ADC-based coulomb counter and a maximum specified differential offset voltage of 20 µV that digitally integrates a 1-mV input signal. The charge error due to offset would be 2% (20 µV/1 mV). By comparison, the charge error due to offset using the LTC2944’s analog integrator would be only 0.04%, or 50 times smaller.
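The arithmetic behind this comparison is easy to check in code. The sketch assumes only that the offset is a constant error added to the sense signal, so the relative charge error equals offset divided by signal regardless of integration time. The 0.04% figure for the LTC2944 is the one quoted above; the implied offset is back-calculated from it, not taken from a datasheet.

```python
def offset_charge_error_pct(offset_v: float, signal_v: float) -> float:
    """Relative charge error (%) from a constant differential offset.

    Integrating (signal + offset) instead of signal inflates the accumulated
    charge by offset/signal, independent of how long the integration runs.
    """
    return 100.0 * offset_v / signal_v


signal_v = 1e-3                                       # 1-mV sense signal
adc_error = offset_charge_error_pct(20e-6, signal_v)  # 20-uV ADC offset
analog_error = 0.04                                   # LTC2944 figure quoted in the text (%)
implied_offset_v = analog_error / 100.0 * signal_v    # offset that would produce 0.04%

print(f"ADC-based gauge: {adc_error:.2f}% charge error")
print(f"Implied analog-integrator offset: {implied_offset_v * 1e6:.2f} uV")
print(f"Improvement: {adc_error / analog_error:.0f}x")
# The same 20-uV offset matters far less on a larger 10-mV signal.
print(f"ADC-based gauge at 10 mV: {offset_charge_error_pct(20e-6, 10e-3):.2f}%")
```

The last line shows why offset dominates at small signals: the error scales inversely with the sense voltage.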

Back to Basics: Voltage, Current, and Temperature

If coulomb counting is responsible for reinforcing voltage readings and differentiating flat regions of a charge or discharge curve, then current and temperature are the parameters responsible for selecting the most relevant curve in the first place. The challenge is that a battery’s terminal voltage (the voltage while connected to a load) is significantly affected by battery current and temperature.

As a result, voltage readings must be compensated with a correction term proportional to battery current (the I·R drop across the battery’s internal resistance) and another that accounts for how open-circuit voltage (the voltage while disconnected from a load) varies with temperature. Because it isn’t practical to disconnect a battery from its load during operation solely to measure open-circuit voltage, it’s good practice to at least adjust terminal voltage readings according to current and temperature profiles.
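A minimal sketch of this kind of compensation follows. It assumes a simple equivalent-circuit view in which terminal voltage differs from open-circuit voltage by the I·R drop across the cell’s internal resistance, plus a linear OCV temperature coefficient. Every table value, the tempco, and the function names are hypothetical placeholders for data you would measure on your own cell; none of them are LTC2944 parameters.

```python
from bisect import bisect_left

# Hypothetical single-cell data; replace with measurements of your own battery.
R_INT_TABLE = [(-20.0, 0.300), (0.0, 0.150), (25.0, 0.080), (45.0, 0.060)]  # (deg C, ohm)
OCV_TABLE = [(3.00, 0.0), (3.45, 10.0), (3.60, 30.0), (3.70, 50.0),
             (3.85, 70.0), (4.00, 90.0), (4.20, 100.0)]                      # (V at 25 C, SOC %)
OCV_TEMPCO_V_PER_C = 0.0002  # hypothetical linear OCV drift with temperature


def interp(table, x):
    """Piecewise-linear interpolation in a sorted (x, y) table, clamped at the ends."""
    xs = [point[0] for point in table]
    if x <= xs[0]:
        return table[0][1]
    if x >= xs[-1]:
        return table[-1][1]
    i = bisect_left(xs, x)
    (x0, y0), (x1, y1) = table[i - 1], table[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def estimate_ocv_25c(v_terminal, i_discharge_a, temp_c):
    """Estimate the 25-deg-C-equivalent OCV from a loaded reading.

    i_discharge_a > 0 means discharging (terminal voltage sags below OCV);
    a negative value means charging (terminal voltage rises above OCV).
    """
    r_int = interp(R_INT_TABLE, temp_c)
    ocv = v_terminal + i_discharge_a * r_int           # undo the I*R drop
    return ocv - OCV_TEMPCO_V_PER_C * (temp_c - 25.0)  # refer OCV back to 25 deg C


def soc_from_voltage(v_terminal, i_discharge_a, temp_c):
    return interp(OCV_TABLE, estimate_ocv_25c(v_terminal, i_discharge_a, temp_c))


# 3.55 V read under a 1-A load at 25 deg C:
print(round(interp(OCV_TABLE, 3.55), 1))            # 23.3 (uncompensated)
print(round(soc_from_voltage(3.55, 1.0, 25.0), 1))  # 36.0 (compensated)
```

With these placeholder numbers, ignoring the load current would understate SOC by more than 12 points, which is the kind of error the compensation is meant to remove.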

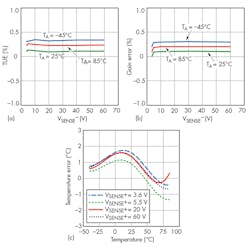

Since high SOC accuracy is the ultimate design goal, the LTC2944 uses a 14-bit No Latency ΔΣ ADC to measure voltage, current, and temperature, with guaranteed accuracy within 1.3% for voltage and current and within ±3°C for temperature. Typical performance of the LTC2944, however, is better still.

The graphs in Figure 4 show how certain accuracy figures of merit in the LTC2944 vary with temperature and voltage. Fig. 4a shows that the ADC total unadjusted error when measuring voltage is typically less than ±0.5% and fairly constant over sense voltage. Similarly, Fig. 4b shows that the ADC gain error when measuring current is typically less than ±0.5% over temperature. Lastly, Fig. 4c shows that the temperature error only varies by about ±1°C over temperature for any given sense voltage.

All of these accuracy figures add up and can easily compromise SOC accuracy. That’s why, among a battery gas gauge’s many specifications, it’s important to note how accurately it measures voltage, current, and temperature.
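One common way to see how individual errors add up is to compare a worst-case sum, where every error sits at its limit in the same direction, with a root-sum-square (RSS) estimate, which is often used when the errors are independent and random. The sketch assumes each measurement error has already been translated into an equivalent SOC error; the three contributions are hypothetical numbers chosen for illustration, not LTC2944 specifications.

```python
import math

# Hypothetical SOC-equivalent error contributions, in percent of SOC.
contributions = {
    "voltage measurement": 1.0,
    "current measurement": 0.5,
    "temperature measurement": 0.8,
}


def worst_case(errors):
    """All errors at their limits and in the same direction."""
    return sum(abs(e) for e in errors)


def root_sum_square(errors):
    """Typical combined error when contributions are independent and random."""
    return math.sqrt(sum(e * e for e in errors))


errs = list(contributions.values())
print(f"worst case: {worst_case(errs):.2f}% SOC")
print(f"RSS:        {root_sum_square(errs):.2f}% SOC")
```

The worst-case figure is the conservative bound; RSS is usually closer to what a typical unit shows, but only if the errors really are uncorrelated.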

The LTC2944 provides four ADC modes for measuring voltage, current, and temperature: automatic, scan, manual, and sleep. In automatic mode, the device performs ADC conversions continuously, every few milliseconds, whereas in scan mode it converts every 10 s and sleeps in between. In manual mode, the device performs a single conversion on command and then goes to sleep. Whenever the ADC is asleep, quiescent current drops to 80 µA.
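The ADC mode, along with the prescaler setting described earlier and the function of the ALCC pin, is selected by writing the device’s Control register over I2C. The helper below only assembles that byte. The bit layout in the comments is an assumption based on the LTC2943/LTC2944 family datasheets (ADC mode in bits 7:6, prescaler in bits 5:3, ALCC configuration in bits 2:1, analog shutdown in bit 0); verify every field against the LTC2944 datasheet before use. The function name `build_control` is hypothetical, and the actual I2C write is left to whatever host bus API you use.

```python
# ASSUMED Control register layout (verify against the LTC2944 datasheet):
#   bits 7:6  ADC mode      11 = automatic, 10 = scan, 01 = manual, 00 = sleep
#   bits 5:3  prescaler M   000 = 1, 001 = 4, 010 = 16, ..., 110 = 4096
#   bits 2:1  ALCC config   10 = alert, 01 = charge complete, 00 = disabled
#   bit  0    shutdown      1 = analog section shut down
ADC_MODES = {"automatic": 0b11, "scan": 0b10, "manual": 0b01, "sleep": 0b00}
ALCC_MODES = {"alert": 0b10, "charge_complete": 0b01, "disabled": 0b00}
PRESCALER_CODES = {4 ** code: code for code in range(7)}  # M = 1, 4, ..., 4096


def build_control(adc_mode: str, prescaler_m: int, alcc: str,
                  shutdown: bool = False) -> int:
    """Assemble a Control register byte from named settings (hypothetical helper)."""
    if prescaler_m not in PRESCALER_CODES:
        raise ValueError(f"prescaler M must be one of {sorted(PRESCALER_CODES)}")
    return ((ADC_MODES[adc_mode] << 6)
            | (PRESCALER_CODES[prescaler_m] << 3)
            | (ALCC_MODES[alcc] << 1)
            | int(shutdown))


print(hex(build_control("scan", 4096, "alert")))  # 0xb4
```

Keeping the settings as named values rather than magic numbers makes it easier to audit the byte against the datasheet table.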

The entire analog section of the LTC2944 can also be shut down, reducing quiescent current even further to 15 µA. After all, the last thing users want is a battery gas gauge that, ironically, consumes a lot of battery power.
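A quick back-of-the-envelope calculation shows why those microamps matter. The sketch assumes the gauge draws its quiescent current continuously for a full year; the 80-µA and 15-µA figures come from the text, while the 2,000-mAh pack capacity is a hypothetical example.

```python
HOURS_PER_YEAR = 24 * 365
PACK_CAPACITY_MAH = 2000.0  # hypothetical battery pack


def annual_drain_mah(iq_ua: float) -> float:
    """Charge drawn in one year at a constant quiescent current (uA * h / 1000 = mAh)."""
    return iq_ua * HOURS_PER_YEAR / 1000.0


for label, iq_ua in [("ADC asleep, 80 uA", 80.0), ("analog shutdown, 15 uA", 15.0)]:
    drain = annual_drain_mah(iq_ua)
    print(f"{label}: {drain:.1f} mAh/year "
          f"({100.0 * drain / PACK_CAPACITY_MAH:.1f}% of the pack)")
```

Under these assumptions, the gauge alone would drain about 700.8 mAh per year (35% of the example pack) with the ADC asleep, versus about 131.4 mAh (roughly 6.6%) with the analog section shut down.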

4. These plots illustrate ADC gain error in voltage measurement (a), ADC gain error in current measurement (b), and temperature error vs. temperature (c) for the LTC2944.

Convenient Interfacing

Users can read out battery charge, voltage, current, and temperature from the LTC2944 over a digital I2C interface. Through a few 16-bit registers accessed via I2C, users can also read status, control the device’s operation, and set high and low alert thresholds for each parameter. The alert system eliminates the need for continuous software polling, freeing the I2C bus and host to perform other tasks.
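As an illustration of working with those 16-bit registers, the sketch below converts a desired voltage alert threshold into a register code split into MSB and LSB bytes, and decodes a raw code back into volts. Two assumptions should be checked against the LTC2944 datasheet: that each 16-bit quantity is transferred MSB first, and that the voltage full scale is 70.8 V. The function names and the 13-cell example thresholds are hypothetical, and the I2C transactions themselves are omitted.

```python
# ASSUMED full scale of the LTC2944 voltage register; verify in the datasheet.
VOLTAGE_FULL_SCALE_V = 70.8


def volts_to_code(volts: float, full_scale_v: float = VOLTAGE_FULL_SCALE_V) -> int:
    """Map a voltage onto the 16-bit code range 0x0000..0xFFFF, clamped."""
    code = round(volts / full_scale_v * 0xFFFF)
    return min(max(code, 0), 0xFFFF)


def code_to_volts(code: int, full_scale_v: float = VOLTAGE_FULL_SCALE_V) -> float:
    """Convert a raw 16-bit voltage code back into volts."""
    return full_scale_v * code / 0xFFFF


def split_u16(code: int) -> tuple[int, int]:
    """Split a 16-bit code into (MSB, LSB) bytes, assuming MSB-first transfer."""
    return (code >> 8) & 0xFF, code & 0xFF


def join_u16(msb: int, lsb: int) -> int:
    """Reassemble a 16-bit code from its MSB and LSB bytes."""
    return (msb << 8) | lsb


# Example: alert if a hypothetical 13-cell stack leaves the 39-V to 54.6-V window.
low_code, high_code = volts_to_code(39.0), volts_to_code(54.6)
print([hex(b) for b in split_u16(low_code)], [hex(b) for b in split_u16(high_code)])
```

Once these thresholds are programmed, the device rather than the host performs the comparison, which is what lets the host stop polling.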

In addition, the ALCC pin serves either as an SMBus alert output or as a charge-complete input that can be connected to the charge-complete output of a battery-charging circuit. With all of this digital functionality, someone might still ask, “Why weren’t battery profiles or capacity/SOC estimation algorithms built into the LTC2944?” The answer is simple: it comes down, perhaps not surprisingly, to accuracy.

While battery gas gauges with built-in battery profiles and algorithms may simplify designs, their models are often inadequate for, or irrelevant to, real-world battery behavior, and they sacrifice SOC accuracy in the process. For example, users may be forced to work with generic charge and discharge profiles generated by unspecified sources or over unknown temperature ranges, and the exact battery chemistry may not be supported, which takes a further toll on SOC accuracy.

The point is that accurate battery modeling typically accounts for many variables. It’s also complex enough that it makes sense for users to model their own battery in software to obtain the highest level of SOC accuracy, rather than rely on inaccurate generic built-in models.
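What modeling your own battery in software might look like is sketched below. This is one simple, hypothetical approach, not a method prescribed by Linear Technology. Coulomb counting drives the estimate while current flows; after the battery has rested long enough for its terminal voltage to approach open-circuit voltage, the estimate is re-anchored to a user-supplied OCV-to-SOC curve to cancel accumulated drift. The class name, rest-current threshold, rest time, and the linear OCV curve in the usage example are all placeholders. In practice, the charge deltas would come from successive ACR readings scaled by the gauge’s charge per count.

```python
from typing import Callable


class SocEstimator:
    """Hypothetical application-level SOC model built on raw gauge readings."""

    def __init__(self, capacity_mah: float, ocv_to_soc: Callable[[float], float],
                 rest_current_ma: float = 5.0, min_rest_s: float = 1800.0):
        self.capacity_mah = capacity_mah      # user-measured maximum capacity
        self.ocv_to_soc = ocv_to_soc          # user-characterized OCV (V) -> SOC (%)
        self.rest_current_ma = rest_current_ma
        self.min_rest_s = min_rest_s          # relaxation time before trusting voltage
        self.rest_time_s = 0.0
        self.soc = 100.0                      # start from a full charge

    def update(self, delta_charge_mah: float, current_ma: float,
               voltage_v: float, dt_s: float) -> float:
        # 1) Coulomb counting: positive delta = charge added, negative = removed.
        self.soc += 100.0 * delta_charge_mah / self.capacity_mah
        # 2) Track how long the battery has been (nearly) at rest.
        if abs(current_ma) < self.rest_current_ma:
            self.rest_time_s += dt_s
        else:
            self.rest_time_s = 0.0
        # 3) After relaxing, terminal voltage ~ OCV: re-anchor to the user's curve.
        if self.rest_time_s >= self.min_rest_s:
            self.soc = self.ocv_to_soc(voltage_v)
        self.soc = min(max(self.soc, 0.0), 100.0)
        return self.soc


def linear_ocv_to_soc(v: float) -> float:
    # Placeholder curve: 3.0 V -> 0%, 4.2 V -> 100% (real cells are not linear).
    return 100.0 * (v - 3.0) / 1.2


est = SocEstimator(capacity_mah=2000.0, ocv_to_soc=linear_ocv_to_soc)
print(est.update(-500.0, -1000.0, 3.70, 1800.0))  # discharging: counted down to 75.0
print(est.update(0.0, 0.0, 3.66, 1800.0))         # rested 30 min: re-anchored via OCV
```

Because every table and threshold lives in application code, swapping in a different cell only means changing data, not hardware.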

Furthermore, these built-in models make battery gas gauges inflexible and difficult to reuse across designs. Put another way, it’s much easier to make changes in software than in hardware: changing application-specific code is far simpler than swapping out a battery gas gauge that then also needs to be configured.

In terms of high-voltage capability, the LTC2944 can be powered directly from anything between a 3.6-V battery and a full-blown 60-V battery stack, so it can handle applications ranging from low-power portable electronics to power-hungry electric vehicles. Direct connections between the battery (or battery stack) and the LTC2944 are possible, which significantly simplifies hardware design. Minimizing the number of external components also lowers overall power consumption and increases accuracy, since components such as resistive dividers aren’t needed.
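To put rough numbers on the resistive-divider point, the sketch below computes the continuous current a divider draws from a 60-V stack and the worst-case ratio error caused by resistor tolerance. The 1.1-MΩ/100-kΩ divider and 1% tolerance are hypothetical example values, not a recommended design.

```python
def divider_current_ua(v_in: float, r_top: float, r_bottom: float) -> float:
    """Continuous current (uA) drawn by a resistive divider across v_in."""
    return 1e6 * v_in / (r_top + r_bottom)


def worst_case_ratio_error_pct(r_top: float, r_bottom: float, tol: float) -> float:
    """Largest deviation (%) of r_bottom / (r_top + r_bottom) with +/-tol resistors."""
    nominal = r_bottom / (r_top + r_bottom)
    # Ratio is highest with r_bottom high and r_top low, lowest the other way round.
    high = r_bottom * (1 + tol) / (r_top * (1 - tol) + r_bottom * (1 + tol))
    low = r_bottom * (1 - tol) / (r_top * (1 + tol) + r_bottom * (1 - tol))
    return 100.0 * max(high - nominal, nominal - low) / nominal


R_TOP, R_BOTTOM, TOL = 1.1e6, 100e3, 0.01  # hypothetical 12:1 divider, 1% resistors
print(f"divider current at 60 V: {divider_current_ua(60.0, R_TOP, R_BOTTOM):.1f} uA")
print(f"worst-case ratio error: {worst_case_ratio_error_pct(R_TOP, R_BOTTOM, TOL):.2f}%")
```

With these example values, the divider alone draws about 50 µA, comparable to the gauge’s 80-µA sleep current, and adds roughly 1.85% of worst-case gain error before any calibration.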

Conclusion

Battery gas gauging is an art in itself because of the many interdependent parameters that influence SOC. There is broad agreement that accurate coulomb counting, coupled with voltage, current, and temperature readings, provides the most accurate method for estimating SOC.

The LTC2944 battery gas gauge provides these fundamental measurements and excludes internal battery modeling, allowing users to implement their own relevant profiles and algorithms in application-specific software. Moreover, measurement and configuration registers can be accessed over I2C, and multicell stacks of up to 60 V can be connected directly to the LTC2944.