How Hyperscalers Turned into Ethernet Roadmap Influencers

What you’ll learn:

- What trends are turning hyperscale data-center operators into Ethernet roadmap influencers?

- How have the challenges of moving Ethernet to higher data rates affected design?

- How can an Ethernet IP partner help you when moving to 400 GbE, 800 GbE, 1.6 TbE, and beyond?

Massive amounts of ever-increasing data are moving across great distances to keep our digital lives from crashing. The need for more bandwidth isn’t a new story, but with emerging applications such as AI, AR/VR, and 5G, we’re in a perfect storm of bandwidth hunger.

To meet the capacity and performance demands of these new applications, Alibaba, Amazon, Apple, Baidu, ByteDance, Google, Meta, Microsoft, and Tencent, along with software-as-a-service (SaaS) companies and others, are building hyperscale data centers to add capacity. These applications need not only more user-to-data-center internet bandwidth, but also an exponential increase in intra-data-center bandwidth.

As a result, the push for faster Ethernet is mounting even though the IEEE standards for the next speeds have yet to be set. 400 GbE is only now rolling out. By some estimates, 800 GbE or even 1.6 TbE could be years away, and even these higher rates could lag behind what’s needed to manage the projected data load. Ethernet IP is a critical piece of this puzzle, helping designers meet the challenges of this new era and bring 800 G/1.6 TbE and beyond to life.

Let’s take a look at some of today’s bandwidth challenges and explore how you can navigate through them.

Why Build Hyperscale Data Centers in the First Place?

The reality is that the COVID pandemic shoved more of our lives online than ever before. And guess what? There’s no going back. Living more of our lives online forced many enterprises to scale their data infrastructure quickly. A hyperscale data-center architecture offers modular, distributed flexibility: when demand surges, capacity can ramp quickly and efficiently, using the least possible energy and space.

And while you may have considered COVID an anomaly, the reality is that bandwidth demand will continue to explode. The pandemic simply accelerated what we already knew was coming with big data. In a 2020 report, Global Market Insights projected that the hyperscale data-center market will grow at a 15% compound annual growth rate (CAGR) to reach $60 billion by 2027. According to the IEEE 802.3 Industry Connections NEA Ad Hoc’s Ethernet Bandwidth Assessment Part II, here’s why demand will keep growing:

- Billions more people accessing the internet: Only 57% of the global population was online as of 2019, and adoption is growing rapidly. As the world closes in on eight billion people, that means potentially billions more people to drive a new era in bandwidth hunger.

- 9 billion more devices, growing at a 19% CAGR from 2017 to 2022: More people have more devices, and the trend isn’t expected to level off any time soon. In addition, as machines connect with other machines through the IoT, sharing and transmitting data, access points and devices will proliferate, and so will the need for bandwidth.

- Increased services: Video and gaming drive much of today’s bandwidth demand, and that demand will only increase as new technologies come online, such as augmented reality/virtual reality (AR/VR), autonomous-driving applications, and more. Innovation will further escalate services and, with them, the need for bandwidth.
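The growth rates quoted above compound quickly. This short Python sketch turns a CAGR into a multi-year multiplier and a doubling time. It uses only the 15% and 19% rates from the text and makes no assumption about absolute market size or device counts; the function names are illustrative.

```python
import math


def compound(value: float, rate: float, years: float) -> float:
    """Value after `years` of growth at a constant annual `rate` (0.15 means 15%)."""
    return value * (1.0 + rate) ** years


def doubling_time(rate: float) -> float:
    """Years needed for a quantity to double at a constant annual `rate`."""
    return math.log(2.0) / math.log(1.0 + rate)


if __name__ == "__main__":
    for label, rate in [("hyperscale market", 0.15), ("connected devices", 0.19)]:
        print(
            f"{label}: {rate:.0%} CAGR -> x{compound(1.0, rate, 5):.2f} over 5 years, "
            f"doubles every {doubling_time(rate):.1f} years"
        )
```

At 15% a year, the hyperscale market roughly doubles every five years; at 19%, the device count doubles in about four.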

Today’s push to manage our data is just a prologue to what’s coming. Web services companies have traditionally provided data-center services. However, given this new environment and the future we face, many enterprises are beginning to build and customize their own hyperscale data centers. The ability to scale capacity up and down as conditions demand is simply good business, and that good business is influencing the Ethernet roadmap.

The Need for a Big, Fast Data Pipe

So, the need for speed has become insatiable in data management. While networking equipment companies have been the traditional influencers of Ethernet speeds and IEEE Ethernet protocols, today’s hyperscalers are the disruptors.

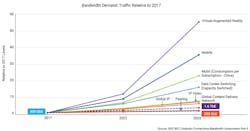

In the last few years, the sheer amount of data has ballooned, outpacing current and planned Ethernet speeds (see figure). As a result, hyperscalers have supplanted network equipment companies as Ethernet roadmap influencers, customizing their own networking solutions.

What Are the Challenges of Ethernet?

System designers choose Ethernet to connect their systems chiefly because it allows hyperscalers to disaggregate network switches and install their own software operating systems independently of the hardware. You can also connect application-specific systems on chip (SoCs), such as AI and ML accelerators, over Ethernet to increase speed and performance.

Ethernet is ideal because when hardware is upgraded or replaced with next-generation equipment, you simply plug in the new hardware and it works, whether the link runs over copper cables, fiber optics, or a PCB backplane.

While Ethernet is the networking technology of choice for many reasons, it comes with the following challenges, too, especially when transitioning to higher data rates:

- Restrictive power envelope: While the physical size of a rack unit in hyperscale data centers has remained constant, its bandwidth has increased 80-fold in the last 12 years, significantly increasing power density. With 800 GbE/1.6 TbE, density will rise even further, so system designers are looking for ways to dissipate this heat.

- Electrical difficulties and digital logic throughput: Moving from one Ethernet speed to the next, across a range of channel reaches, can present electrical and throughput difficulties. Next-generation switch bandwidths demand 512 lanes of 112-Gb/s SerDes integrated into one SoC, and monolithic integration of such a large SoC poses implementation challenges with current manufacturing processes. Switch designers are looking at co-packaged and near-packaged implementations to address this challenge.

- Low latency: Next-generation designs also use Ethernet in latency-sensitive applications. Minimizing latency requires an efficient data path, and an integrated PHY + controller design allows solution-level optimization of that data path to reduce latency.
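A quick back-of-the-envelope check, not taken from any source data, shows what the 80-fold figure in the power-envelope bullet implies. Assuming steady compound growth over the 12 years, the annual growth rate $r$ of bandwidth per rack unit is:

$$
r = 80^{1/12} - 1 = e^{(\ln 80)/12} - 1 \approx e^{0.365} - 1 \approx 0.44
$$

That is roughly 44% more bandwidth per rack unit every year. In a simplified model where power $P$ equals throughput $B$ times energy per bit $E_b$ (ignoring fixed loads such as fans and management logic), the change in power per rack unit is:

$$
\frac{P_{\text{new}}}{P_{\text{old}}} = \frac{B_{\text{new}}}{B_{\text{old}}} \cdot \frac{E_{b,\text{new}}}{E_{b,\text{old}}} = 80 \cdot \frac{E_{b,\text{new}}}{E_{b,\text{old}}}
$$

So unless energy per bit fell 80-fold over the same period, power density rose. A hypothetical 4× improvement in energy per bit, for example, would still leave each rack unit dissipating 20 times more power.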

How Can You Prepare for the Future with Ethernet IP?

Ethernet defines the physical and data-link layers, the first two layers of the seven-layer Open Systems Interconnection (OSI) model. Ethernet IP is therefore a critical component of connectivity. Integrated Ethernet IP that includes the MAC, PCS, and PHY can save you a lot of headaches, supporting higher bandwidth while ensuring interoperability and lowering latency.
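To make the layering concrete, here is a minimal Python sketch, assuming a simplified IEEE 802.3 sublayer stack for a 200/400GBASE-R port. It maps each sublayer to its OSI layer and counts how many vendor hand-offs a given sourcing choice creates. The helper names and the VendorA/B/C suppliers are hypothetical, and the boundary count is only a rough proxy for integration effort.

```python
from enum import Enum
from typing import Dict


class OsiLayer(Enum):
    PHYSICAL = 1
    DATA_LINK = 2


# Main IEEE 802.3 sublayers of a 200/400GBASE-R port, top to bottom
# (transmit direction). Simplified: MAC Control, the xMII interfaces,
# auto-negotiation, and the medium-dependent interface are omitted.
ETHERNET_SUBLAYERS = [
    ("MAC", OsiLayer.DATA_LINK),  # Media Access Control
    ("RS", OsiLayer.PHYSICAL),    # Reconciliation Sublayer
    ("PCS", OsiLayer.PHYSICAL),   # Physical Coding Sublayer (includes RS(544,514) FEC at 200/400G)
    ("PMA", OsiLayer.PHYSICAL),   # Physical Medium Attachment
    ("PMD", OsiLayer.PHYSICAL),   # Physical Medium Dependent
]


def layer_of(sublayer: str) -> OsiLayer:
    """Return the OSI layer that an 802.3 sublayer belongs to."""
    for name, layer in ETHERNET_SUBLAYERS:
        if name == sublayer:
            return layer
    raise KeyError(sublayer)


def vendor_boundaries(vendors: Dict[str, str]) -> int:
    """Count adjacent sublayer pairs supplied by different vendors.

    `vendors` maps each sublayer name to a hypothetical supplier. Each
    boundary is one more interface that must be verified for interoperability.
    """
    names = [name for name, _ in ETHERNET_SUBLAYERS]
    return sum(1 for upper, lower in zip(names, names[1:]) if vendors[upper] != vendors[lower])


if __name__ == "__main__":
    integrated = {name: "VendorA" for name, _ in ETHERNET_SUBLAYERS}
    mixed = {"MAC": "VendorA", "RS": "VendorA", "PCS": "VendorB", "PMA": "VendorC", "PMD": "VendorC"}
    print("integrated:", vendor_boundaries(integrated))
    print("mixed:", vendor_boundaries(mixed))
```

A fully integrated stack has no vendor boundaries; the mixed example has two (RS to PCS, and PCS to PMA).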

Consider the common scenario of using copper cabling for short-distance connections between servers in a hyperscale data center. Copper is preferred here because it avoids the cost of fiber optics. For rack switch communication, you’ll need a long-reach, high-performance SerDes to keep the links error-free. But a 51.2-Tb/s switch built with 112-Gb/s SerDes requires 512 lanes in your SoC, which creates signal- and power-integrity problems. Because temperature is critical to keeping everything running smoothly, you’ll also need to consider how to keep it down at this level of integration.
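The 512-lane figure follows from simple division. This Python sketch assumes each 112-Gb/s-class SerDes carries 100 Gb/s of Ethernet payload (and a 224-Gb/s-class SerDes carries 200 Gb/s), with the higher signaling rate absorbing coding and FEC overhead. `lanes_needed` is a hypothetical helper, not part of any tool.

```python
def lanes_needed(aggregate_gbps: int, lane_payload_gbps: int) -> int:
    """SerDes lanes needed to carry `aggregate_gbps` of Ethernet payload.

    Uses ceiling division so that a partially used lane still counts as a lane.
    """
    return -(-aggregate_gbps // lane_payload_gbps)


if __name__ == "__main__":
    switch_gbps = 51_200  # the 51.2-Tb/s switch from the text
    # Assumption: payload per lane is 100 Gb/s for "112G" and 200 Gb/s for "224G".
    for label, payload in [("112G", 100), ("224G", 200)]:
        print(f"{label} SerDes: {lanes_needed(switch_gbps, payload)} lanes")
```

Moving to 224G SerDes halves the lane count to 256, which is one reason faster SerDes matter for signal and power integrity, although each remaining lane must then run at twice the rate.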

1.6 T Ethernet Is Coming

IEEE has formed the 802.3df task force to define standards for the next generation of Ethernet beyond 400 GbE. While it’s still very early for 1.6 TbE, prototypes will likely start emerging this year, even though IEEE hasn’t yet set the protocol specifications.

It’s not too soon to start thinking about how you will support 1.6 T when it inevitably arrives. Will 1.6 T Ethernet continue with end-to-end forward error correction (FEC) or adopt segmented FEC? What will the FEC overhead be, and what raw PHY bit error rate (BER) targets will 800 G/1.6 T channels have? Meanwhile, the MAC, PCS, and PMA must be optimally integrated into your Ethernet connectivity solution for the best performance and latency profile. If each sublayer comes from a different vendor, interoperability becomes more complicated.
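To put a number on the FEC-overhead question, this Python sketch computes per-lane signaling rates under one explicit assumption: that 800 G and 1.6 T reuse the 256b/257b transcoding and RS(544,514) FEC of today’s 200/400GBASE-R PCS. That assumption is purely illustrative; a segmented-FEC scheme or a different code would change the numbers. Overhead here is defined as parity symbols divided by data symbols.

```python
from fractions import Fraction

# 256b/257b transcoding plus RS(544,514) ("KP4") FEC, as in the 200/400GBASE-R PCS.
# Assumption: 800 G and 1.6 T keep this scheme (the text poses this as an open question).
TRANSCODE = Fraction(257, 256)


def fec_overhead(n: int = 544, k: int = 514) -> Fraction:
    """Parity symbols as a fraction of data symbols for an RS(n, k) code."""
    return Fraction(n - k, k)


def lane_rate_gbps(payload_gbps: int, lanes: int, n: int = 544, k: int = 514) -> Fraction:
    """Per-lane signaling rate after transcoding and RS(n, k) FEC, as an exact fraction."""
    return Fraction(payload_gbps) * TRANSCODE * Fraction(n, k) / lanes


if __name__ == "__main__":
    print(f"RS(544,514) overhead: {float(fec_overhead()):.2%}")
    for payload, lanes in [(400, 4), (800, 8), (1600, 16), (1600, 8)]:
        rate = float(lane_rate_gbps(payload, lanes))
        print(f"{payload} G over {lanes} lanes: {rate} Gb/s per lane")
```

Under this assumption, the overhead is about 5.8%, and 400 G over four lanes needs 106.25 Gb/s per lane, which is why 100-Gb/s electrical lanes are built on 112G-class SerDes. A 1.6 T port over eight lanes would need 212.5 Gb/s per lane.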

Although there will be multiple configurations in the future, the emergence of the OSFP-XD form factor suggests that initial 1.6 T designs will use 112 G SerDes, with the PCS supporting 16 lanes to reach 1.6 T. Later, when 200 G-per-lambda optics arrive, power-optimized implementations will need 224 G SerDes whose modulation (PAM4 or PAM6) matches that of the optics.
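The choice between PAM4 and PAM6 largely comes down to symbol rate. This Python sketch uses the nominal 112 G and 224 G SerDes rates from the text and the theoretical log2(levels) bits per symbol. Practical PAM6 encodings carry slightly fewer bits per symbol than this bound, so treat the PAM6 figures as a lower bound on baud rate. The lane counts assume 100 G and 200 G of payload per lane, respectively.

```python
import math


def bits_per_symbol(levels: int) -> float:
    """Upper bound on the bits carried by one PAM-`levels` symbol (log2 of levels)."""
    return math.log2(levels)


def symbol_rate_gbaud(line_rate_gbps: float, levels: int) -> float:
    """Symbol (baud) rate needed to carry `line_rate_gbps` with PAM-`levels` signaling."""
    return line_rate_gbps / bits_per_symbol(levels)


if __name__ == "__main__":
    # Nominal SerDes rates from the text, paired with an assumed payload per lane.
    for serdes_gbps, payload_gbps in [(112, 100), (224, 200)]:
        print(f"{serdes_gbps}G SerDes: {1600 // payload_gbps} lanes for 1.6 T")
        for levels in (4, 6):
            print(f"  PAM{levels}: ~{symbol_rate_gbaud(serdes_gbps, levels):.1f} GBd")
```

For a 224 G lane, PAM6 lowers the symbol rate from 112 GBd to roughly 87 GBd, easing channel bandwidth requirements, but it packs more levels into the same voltage swing and so leaves less noise margin per eye.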

Choose Your Ethernet IP Wisely

As hyperscalers become more prevalent, and as multiple hardware vendors serve their ecosystems, they’re shaping the Ethernet roadmap. Software will be critical for lowering latency and smoothing over interoperability and electrical issues.

To navigate this future, enterprises need to choose an Ethernet IP vendor with deep experience and complete solutions. Your vendor should cover the full range of Ethernet speeds, 200/400/800 G and 1.6 T when it comes online, with configurable controllers and silicon PHYs, verification IP, development kits, and interface IP subsystems. Because verification is so critical, make sure the verification IP runs natively in SystemVerilog with UVM so that you can easily integrate, configure, and customize your systems.

With the pandemic, the future has arrived sooner than we expected. But with the right IP, your road ahead is fast and wide.