High-Level Synthesis Drives Next-Gen Edge AI Accelerators

This article is part of the TechXchange: AI on the Edge.

What you’ll learn:

- Insight into high-level synthesis (HLS).

- Advantages of using HLS with AI acceleration.

All things in your life are getting smarter. From the vehicles that will move you around, to the house you live in, the things you wear, and more, devices are increasingly imbued with intelligence thanks to the revolution in artificial intelligence. In turn, AI makes these devices more capable and useful.

But AI algorithms are compute-intensive. The neural-network models being employed are growing larger to meet the increasing expectations of what AI systems can deliver. For example, a well-known annual image-recognition contest has seen the size of its yearly winner (as measured by the number of floating-point operations) grow by a factor of 100 over the past five years.

Typical neural-network models take billions of multiply/accumulate operations to perform a single inference, and larger models take even more compute power. The newest generation of large language models (LLMs) requires trillions of floating-point operations for a single inference.
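
To make that scale concrete, the multiply/accumulate (MAC) count of a single dense 2D convolution layer can be estimated from its shape. The sketch below uses the usual count of one MAC per kernel tap per input channel for every output element. The helper name `conv_macs` and the layer dimensions in the note are illustrative assumptions, not figures from any particular model.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper (not from the article): MAC count for one dense
// 2D convolution layer. Each output element needs
// kernel_h * kernel_w * in_channels multiply/accumulate operations.
std::uint64_t conv_macs(std::uint64_t out_h, std::uint64_t out_w,
                        std::uint64_t in_channels, std::uint64_t out_channels,
                        std::uint64_t kernel_h, std::uint64_t kernel_w) {
    assert(out_h > 0 && out_w > 0);
    return out_h * out_w * out_channels * kernel_h * kernel_w * in_channels;
}
```

For example, a 3x3 convolution with 64 input and 64 output channels producing a 112x112 output works out to 462,422,016 MACs, so a handful of such layers is enough to reach the billions.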

The Need for Fast, Efficient Inferencing

Many of these inferencing systems also have real-time response requirements. For example, a smart audio speaker product is expected to respond to a wake-up request within a second or so, and respond to questions and commands at a conversational pace. Autonomous vehicles such as a drone helicopter or a self-driving car need to complete inferences and act on them in a fraction of a second.

Embedded-systems designers have a real challenge delivering the compute and performance capabilities needed for complex neural networks while meeting their cost, size, weight, and power-consumption limitations. For many applications, it’s simply impractical to have a bank of GPUs burning hundreds of watts of power. Sometimes sensor data can be sent to a data center and processed there, with inference results sent back, but response-time and privacy requirements might preclude this approach.

For some systems, it’s practical to run inferences in a machine-learning framework hosted on a general-purpose CPU. This will likely match the environment where the neural network was developed, making deployment easy. But it will only work for the smallest of neural-network models.

When more performance and efficiency are needed, a GPU could be employed. GPUs bring a lot of parallel computing capability, which suits inference workloads well, and they’re much faster and more efficient than CPUs. Several vendors make embedded GPUs that can help meet the weight and form-factor requirements of some embedded systems.

GPUs, while faster and more efficient than CPUs, still leave much room for improvement. For more demanding applications, tensor processing units (TPUs) could be an option (these are also known as neural processing units or NPUs). Like GPUs, a TPU has an array of parallel-processing elements. TPUs improve the operating characteristics of a GPU by omitting some of the hardware specific to processing images and graphics. They also customize the circuitry specifically for handling neural networks.

Turning to Customized Accelerators

The greater the customization of the hardware to a specific task, the better the performance and efficiency. Customization of an accelerator for inferencing can be extended well beyond what an off-the-shelf TPU offers.

A commercial TPU needs to handle any and every neural network that anyone could possibly dream up. The developer of a TPU, whether a discrete component or design IP, wants to have as many design wins as possible. This drives TPU developers to keep their implementations very generalized.

For embedded developers wishing to differentiate their products with faster, more efficient inferencing, or for those cases where even a TPU can’t deliver the needed performance, developing a bespoke neural-network accelerator is imperative. The accelerator can be deployed either as an ASIC or FPGA. With the accelerator tailored to perform one specific inference, it can easily outperform even the best TPU while consuming fewer resources.

Of course, developing an ASIC accelerator, or even an FPGA accelerator, is a daunting task: hardware design cycles run months or even years, and they are often expensive and labor-intensive. But teams that can develop customized hardware in the form of an ASIC or FPGA will be able to deploy superior systems. One way to address this challenge is to consider high-level synthesis (HLS) technology.

What is High-Level Synthesis?

HLS allows designers to operate at a higher level of abstraction. Traditionally, hardware designers work at the register transfer level (RTL). This means designers need to describe every wire, every register, and every operator in the design. Obviously, as design sizes and complexity increase, this becomes less and less tenable.

With HLS, designers operate at the algorithmic level. They describe the functions and data transformations that need to take place using a high-level programming language like C++. From there, a compiler determines how to implement that algorithm as a set of state machines, control logic, and data paths. The compiler can quickly and easily consider more implementations than the best engineer possibly could. And it supports global optimizations and resource sharing in ways that simply aren’t possible in a manual design process.
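
As a rough illustration of what an algorithmic-level description looks like, the sketch below writes a small fully connected layer with a ReLU activation in plain C++. The function name `dense_relu`, the layer sizes, and the integer data type are assumptions made for the example. Only the arithmetic is described; registers, state machines, and control logic would be left to the HLS compiler.

```cpp
#include <cassert>
#include <cstddef>

// Illustrative sizes; a real layer would take these from the trained model.
constexpr std::size_t kInputs = 4;
constexpr std::size_t kOutputs = 2;

// Hypothetical fully connected layer with ReLU. Fixed loop bounds and
// statically sized arrays are typical of synthesizable C++.
void dense_relu(const int in[kInputs],
                const int weights[kOutputs][kInputs],
                const int bias[kOutputs],
                int out[kOutputs]) {
    assert(in && weights && bias && out);
    for (std::size_t o = 0; o < kOutputs; ++o) {
        int acc = bias[o];
        for (std::size_t i = 0; i < kInputs; ++i) {
            acc += weights[o][i] * in[i];  // one multiply/accumulate
        }
        out[o] = acc > 0 ? acc : 0;        // ReLU activation
    }
}
```

Nothing here says how many multipliers exist or in which clock cycle each operation happens. Those decisions are made during synthesis.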

When applying HLS, the designer is still able to have fine-grained control over the implementation. Using compiler directives, the developer can control pipelining, loop unrolling, and data flow in the design. As a result, intelligent and informed tradeoffs can be made between area and performance as the design is synthesized.
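
Directive syntax is tool-specific, so the sketch below does not use any real pragma. Instead, a hypothetical template parameter `UNROLL` stands in for a loop-unrolling directive on a dot product, to show the kind of area/performance tradeoff being controlled: more lanes mean more multipliers in hardware but fewer loop iterations.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical stand-in for an HLS unroll directive: UNROLL is the number
// of multiply/accumulate lanes that would sit side by side in hardware.
// UNROLL = 1 models a single shared multiplier; larger values trade
// area for fewer loop iterations.
template <std::size_t N, std::size_t UNROLL>
int dot_product(const int (&a)[N], const int (&b)[N]) {
    static_assert(UNROLL > 0 && N % UNROLL == 0, "UNROLL must divide N");
    int lanes[UNROLL] = {};                         // one accumulator per lane
    for (std::size_t i = 0; i < N; i += UNROLL) {   // N / UNROLL iterations
        for (std::size_t u = 0; u < UNROLL; ++u) {  // conceptually parallel
            lanes[u] += a[i + u] * b[i + u];
        }
    }
    int sum = 0;
    for (std::size_t u = 0; u < UNROLL; ++u) sum += lanes[u];  // final reduce
    return sum;
}

// Changing the unroll factor changes the architecture, not the result.
inline void check_unroll_equivalence() {
    const int a[4] = {1, 2, 3, 4};
    const int b[4] = {5, 6, 7, 8};
    assert((dot_product<4, 1>(a, b) == 70));
    assert((dot_product<4, 2>(a, b) == 70));
    assert((dot_product<4, 4>(a, b) == 70));
}
```

Every unroll factor returns the same value. Only the number of outer-loop iterations, and in hardware the number of multipliers, changes.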

Why HLS is Key in Designing AI Accelerators

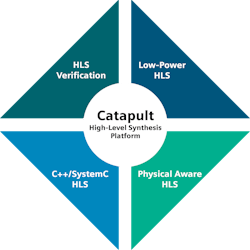

HLS solutions such as Siemens’ Catapult platform (see figure) bring a number of benefits that facilitate designing a bespoke inferencing accelerator. First, designing at a higher level of abstraction means there are fewer lines of code to write.

The number of source code lines in a design is highly correlated to designer productivity. Developers tend to produce a fairly constant number of lines of source code per day, regardless of the language and level of abstraction. Developers also produce a fairly constant number of bugs per line of source.

Therefore, fewer lines of source code can result in fewer bugs to hunt down and fix, contributing to a shorter design cycle. Shorter design cycles are especially important in fields like AI, where advances in algorithms are happening quickly. A shorter design cycle allows developers to take advantage of those latest advances in the field.

Secondly, HLS designs are easier to verify. Verification is one of the biggest challenges in hardware design. With HLS, the strategy is to verify the high-level algorithm, then prove equivalence between the algorithmic code and the synthesized RTL. This can be done with both formal and dynamic methods. Proving equivalence is significantly faster than verifying RTL from scratch.

As a neural-network model is migrated to customized hardware, the designer will want to make a couple of changes. First is a change in the numeric representation. General-purpose hardware and machine-learning frameworks will typically use 32-bit floating-point numbers. However, a purpose-built accelerator will not need the full range and precision provided by 32-bit floating-point numbers. Reducing the number of bits and representing the numbers as fixed-point values (a process called quantization) will shrink the size of the operators and reduce the amount of data that must be stored and moved.
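
A minimal sketch of quantization, assuming an 8-bit signed fixed-point format with 5 fractional bits. The format, the constant names, and the `quantize`/`dequantize` helpers are illustrative choices, and the right bit widths for a real design depend on the network and its data.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical format: 8-bit signed, 5 fractional bits
// (range -4.0 to +3.96875 in steps of 1/32).
constexpr int kFracBits = 5;
constexpr float kScale = static_cast<float>(1 << kFracBits);

// Quantize: scale, round to nearest, and saturate to the int8 range.
std::int8_t quantize(float x) {
    assert(!std::isnan(x));
    float scaled = std::round(x * kScale);
    if (scaled > 127.0f) scaled = 127.0f;
    if (scaled < -128.0f) scaled = -128.0f;
    return static_cast<std::int8_t>(scaled);
}

// Recover the real value that a quantized code represents.
float dequantize(std::int8_t q) {
    return static_cast<float>(q) / kScale;
}
```

With this format, each value occupies a quarter of the storage of a 32-bit float, at the cost of a rounding error of up to 1/64 for values inside the representable range.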

The designer will also want to balance the computational resources with the communication capability of the design. This may involve modification of the layers and the channels in the layers. These changes can significantly increase performance and efficiency.

Simplifying Verification with HLS

After these changes, the accuracy of the algorithm needs to be re-verified as implemented in hardware, with the same bit-level representation and including any changes to the architecture of the neural network. The accuracy of an inferencing algorithm is statistical rather than pass/fail, so the correct execution of a single inference doesn’t show that the algorithm is implemented correctly. Verifying the accuracy can mean running tens of thousands of inferences, or more.

That’s impractical at the RTL level with logic simulation because it would take too long. It’s possible with hardware acceleration (emulation or FPGA prototypes), though these platforms are usually only available late in the design cycle. HLS supports bit-accurate data types, so the high-level model exactly matches the behavior of the design that will be synthesized, yet it runs thousands of times faster than RTL simulation. With HLS, running the high-level algorithm is both quick and easy.
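
The kind of check described above can be sketched as a small C++ testbench that runs many random inferences through a floating-point reference and a fixed-point model, then counts how often they agree. Everything here is an assumption for illustration: the two-class linear classifier, the weights, the 8-bit format with 5 fractional bits, and the use of `std::int8_t` and `std::int32_t` in place of a tool's own bit-accurate types.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <random>

constexpr std::size_t kInputs = 4;
constexpr std::size_t kClasses = 2;
constexpr float kScale = 32.0f;  // hypothetical format: 5 fractional bits

// Illustrative weights (exact multiples of 1/32), not from a trained model.
constexpr float kWeights[kClasses][kInputs] = {
    {0.5f, -0.25f, 0.75f, 0.125f},
    {-0.5f, 0.5f, 0.25f, -0.375f}};

// Round to the 8-bit fixed-point grid and saturate.
std::int8_t to_fixed(float x) {
    float s = std::round(x * kScale);
    if (s > 127.0f) s = 127.0f;
    if (s < -128.0f) s = -128.0f;
    return static_cast<std::int8_t>(s);
}

// Floating-point reference: pick the class with the higher score.
std::size_t classify_float(const float (&x)[kInputs]) {
    float score[kClasses] = {};
    for (std::size_t k = 0; k < kClasses; ++k)
        for (std::size_t i = 0; i < kInputs; ++i)
            score[k] += kWeights[k][i] * x[i];
    return score[1] > score[0] ? 1 : 0;
}

// Fixed-point model: 8-bit operands, 32-bit accumulator.
std::size_t classify_fixed(const float (&x)[kInputs]) {
    std::int32_t score[kClasses] = {};
    for (std::size_t k = 0; k < kClasses; ++k)
        for (std::size_t i = 0; i < kInputs; ++i)
            score[k] += to_fixed(kWeights[k][i]) * to_fixed(x[i]);
    return score[1] > score[0] ? 1 : 0;
}

// Run many random inferences and count how often the two models agree.
std::size_t count_agreements(std::size_t trials, unsigned seed) {
    assert(trials > 0);
    std::mt19937 rng(seed);
    std::uniform_real_distribution<float> dist(-1.0f, 1.0f);
    std::size_t agree = 0;
    for (std::size_t t = 0; t < trials; ++t) {
        float x[kInputs];
        for (float& v : x) v = dist(rng);
        if (classify_float(x) == classify_fixed(x)) ++agree;
    }
    return agree;
}
```

Calling `count_agreements(10000, 42u)` runs ten thousand inferences in compiled C++. The two models disagree only on inputs that fall close to the decision boundary, which is exactly the effect a single passing inference would not reveal.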

Since designing an accelerator in HLS is faster than traditional RTL, and the compiler takes care of all the low-level details of the RTL implementation, it’s much more practical to create and evaluate a variety of different potential architectures for an accelerator.

Because it takes so long to design RTL, few design teams have the luxury of evaluating multiple architectural alternatives. Designing a meaningfully different implementation is simply too expensive for almost all RTL design teams.

With HLS, though, new architectures can be created by changing the HLS compiler directives and letting the computer do the work. In new areas, like AI and inferencing, this is essential.
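
As a toy illustration of that exploration, the sketch below sweeps a single knob with a first-order cost model. The model is an assumption made for the example, not the output of any tool: it supposes a fully pipelined loop that completes one iteration per clock, and it ignores pipeline fill, memory bandwidth, and control overhead.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical first-order cost model for one architecture candidate.
struct Estimate {
    std::uint64_t cycles;       // loop iterations, assumed one per clock
    std::uint64_t multipliers;  // parallel multiply/accumulate units
};

// Estimate the cost of computing `macs` multiply/accumulates with the
// loop unrolled by `unroll` (rounding the iteration count up).
Estimate estimate(std::uint64_t macs, std::uint64_t unroll) {
    assert(unroll > 0);
    return Estimate{(macs + unroll - 1) / unroll, unroll};
}
```

Under these assumptions, sweeping unroll factors of 1, 4, and 16 over 1,024 MACs gives 1,024, 256, and 64 cycles using 1, 4, and 16 multipliers. In a real flow, the synthesis reports for each directive setting would replace this estimate.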

Design teams that have worked on a particular type of system for years can readily determine the right architecture, so they don’t need to do a lot of exploration. But designers developing a new type of accelerator will not have multiple generations of design experience to inform their decisions. Very few teams building AI accelerators have more than one generation to look back on. Thus, it’s even more critical to explore alternatives and make well-informed decisions. HLS makes this possible.

AI Helps Build a Smarter Future

Building intelligent systems requires taking increasingly complex algorithms and fitting them into a broad range of products that have a vast range of power, performance, cost, and form-factor requirements. For many systems, this means customizing the electronics to achieve the highest performance and efficiency.

On that front, high-level synthesis becomes an essential technology, as it enables higher productivity, faster verification, and design space exploration that’s not practical with traditional design methodologies.
