More design is being done at the system level than ever before. The enabling technology for much of it is emulation. Emulation allows the register transfer level (RTL) source code to be used as the model but with enough processing performance to enable system-level work, especially when it involves software development or running software workloads.


As a result, hardware emulation has taken center stage, a place the beloved RTL simulator owned for about three decades. Mind you, I am not proclaiming the demise of the RTL simulator. For hardware verification in the early design stage, the RTL simulator is unbeatable. It compiles a design blazingly fast, and it offers a degree of interactivity for “what if” analysis that no other tool can match, as long as the design is of limited size.

Today, that’s true for intellectual property (IP) blocks. But for system integration and system validation, when hardware and software must be tested together, simulation is slow and impractical. Need a data point? Let’s consider a design team attempting to simulate one second of real data on a design of 100 million ASIC-equivalent gates running at 100 MHz, that is, 100 million clock cycles. Being generous and assuming the simulator runs at 100 Hz, it would take 1 million seconds, that is, about 278 hours or more than 11 days. By comparison, an emulator running at 1 MHz would take 100 seconds. I rest my case.
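The arithmetic above is easy to reproduce. The short Python sketch below converts one second of real-time operation at 100 MHz into wall-clock time for a tool that advances a given number of design cycles per second; the 100 Hz simulator and 1 MHz emulator speeds are the assumptions stated in the text, not measurements.

```python
# Wall-clock cost of executing one second of real-time activity on a
# 100 MHz design, using the tool speeds assumed in the text.
SECONDS_PER_HOUR = 3600
SECONDS_PER_DAY = 24 * SECONDS_PER_HOUR


def wall_clock_seconds(design_hz: float, real_seconds: float, tool_hz: float) -> float:
    """Time for a tool that advances tool_hz design cycles per second to
    execute the cycles the real chip runs in real_seconds."""
    cycles = design_hz * real_seconds
    return cycles / tool_hz


sim = wall_clock_seconds(design_hz=100e6, real_seconds=1, tool_hz=100)  # RTL simulator
emu = wall_clock_seconds(design_hz=100e6, real_seconds=1, tool_hz=1e6)  # emulator

print(f"simulator: {sim:,.0f} s = {sim / SECONDS_PER_HOUR:.1f} h = {sim / SECONDS_PER_DAY:.1f} days")
print(f"emulator:  {emu:,.0f} s")
print(f"ratio:     {sim / emu:,.0f}x")
```

It prints 1,000,000 s (277.8 h, 11.6 days) for the simulator and 100 s for the emulator, a 10,000x gap.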

The solution comes with a rather steep cost of ownership, though, which is why emulation datacenters serving verification engineers will become popular. Three criteria must be met to build an emulation datacenter that can serve a large, worldwide community of verification engineers and software developers. The system must support:

• Very large design capacity and multiple concurrent users

• Remote access

• Sophisticated resource management

Let’s discuss each in detail.

Meeting Design Capacity and Multi-User Requirements

Design sizes are pushing the distribution curve upward into hundreds of millions of ASIC-equivalent gates. Extreme designs already exceed 1 billion gates, and IP blocks routinely reach tens of millions of gates. At the same time, design teams are expanding their organizations with software developers, whose numbers are growing far faster than those of hardware designers. Serving such a diverse design community at a single company would require an emulation platform with a capacity of tens of billions of gates, running 24/7 year-round.

Currently, the largest emulation platforms offer a maximum capacity reaching into the low single-digit billions of gates, enough to map the largest designs ever created but not enough to accommodate the total capacity demand of a large company. Processing embedded software requires running several billion cycles in sequence. At a speed of 1 MHz, 1 billion cycles take 1,000 seconds (about 17 minutes). If a single design consumes all the emulation resources, such a task would seize the emulator for the entire run and lock out every other user for the duration of the execution, which could be several hours.

Two complementary approaches help solve the problem. In the first, the architecture of the emulation platform should support multiple concurrent users sharing the emulation resources, provided that no single job consumes the whole capacity. The second calls for building an emulation farm with several emulation platforms, an approach the emulator vendors would naturally favor.

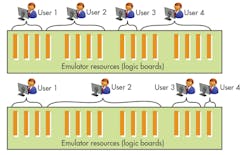

For example, Mentor Graphics’ Veloce2 can map a design of about 2 billion gates in its double Maximus platform and can serve up to 128 concurrent users. By trading off the number of users against design size, Veloce2 Maximus supports any combination in between (Fig. 1).
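To make the users-versus-capacity trade-off concrete, here is a minimal Python sketch. It assumes, hypothetically, that capacity is handed out in whole boards and that each of the 128 boards in a double Maximus (eight chassis of 16 boards) contributes an equal share of the roughly 2 billion gates, about 15.6 million gates per board. The article does not state Veloce2's actual allocation granularity, so the numbers are illustrative only.

```python
import math

# Hypothetical model: 8 chassis x 16 boards, ~2 billion gates split evenly,
# capacity allocated in whole boards.
TOTAL_BOARDS = 8 * 16
TOTAL_GATES = 2_000_000_000
GATES_PER_BOARD = TOTAL_GATES / TOTAL_BOARDS   # 15.625 million gates


def boards_needed(design_gates: int) -> int:
    """Whole boards needed to map a design of the given size."""
    return math.ceil(design_gates / GATES_PER_BOARD)


def fits(design_sizes: list[int]) -> bool:
    """True if all the designs can run concurrently on one platform."""
    return sum(boards_needed(g) for g in design_sizes) <= TOTAL_BOARDS


print(fits([2_000_000_000]))                       # True: one design fills the platform
print(fits([10_000_000] * 128))                    # True: 128 users, one board each
print(fits([1_000_000_000] + [100_000_000] * 9))   # True: 64 + 9 * 7 = 127 boards
print(fits([1_000_000_000] + [100_000_000] * 10))  # False: 64 + 10 * 7 = 134 boards
```

Rounding up to whole boards is what makes the mix matter: a 100-million-gate design occupies 7 boards even though it needs only 6.4 boards' worth of gates.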

The hardware architecture of Veloce2 has been designed from the ground up to avoid patching together multiple standalone boxes. Similar to a computer server, it comprises multiple racks, loaded with logic boards, power supplies, and interconnect backplanes. It includes advanced verification boards (AVBs), power racks, and matrix boards with active switches for interconnecting several AVBs. Overall, the system is stable and reliable. A farm of double Maximus platforms would meet the capacity requirement for any company, both in terms of large single designs and of a multitude of users with a variety of design sizes.

Remote Access

Remote access is the death knell for a popular emulation deployment mode that dominated the verification landscape for about 30 years. In in-circuit emulation (ICE) mode, the design under test (DUT) mapped inside the emulator connects to the target system in which the actual chip will eventually reside.

The target system could include a plethora of physical devices. Unfortunately, a direct connection is not possible because of the large gap in clock speed between the fast target system with its real-world devices and the relatively slow design inside the emulator, a gap that can span two or three orders of magnitude.

Basically, the connection requires a speed adapter to bridge the fast clock rate of the target system and the slow clock rate of the emulator. Speed adapters depend on the design and on the type of interface to the target system, such as PCI Express, USB, or Ethernet. ICE is great for testing with real-traffic scenarios, such as plugging a Serial Advanced Technology Attachment (SATA) disk drive into a design to see it working.
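A speed adapter is protocol-specific hardware, but the core problem it solves can be modeled in a few lines. The Python sketch below is a conceptual model only, not how any vendor builds adapters: each loop iteration is one emulator clock cycle, the fast side can offer up to `ratio` words per cycle, the DUT consumes one word per cycle, and a FIFO of `fifo_depth` words sits in between. With back-pressure (flow control) the source is held off and nothing is lost; without it, most of a burst overflows the buffer.

```python
def run_adapter(burst_words: int, ratio: int, fifo_depth: int,
                backpressure: bool = True) -> tuple[int, int]:
    """Conceptual speed-adapter model.

    Returns (emulator cycles needed to drain the burst, words dropped)."""
    if fifo_depth < 1 or ratio < 1:
        raise ValueError("fifo_depth and ratio must be positive")
    pending = burst_words   # words the fast side still has to deliver
    occupancy = 0           # words buffered in the adapter FIFO
    dropped = 0
    cycles = 0              # emulator (DUT) clock cycles elapsed
    while pending or occupancy:
        offered = min(ratio, pending)                  # fast side's attempt this cycle
        accepted = min(offered, fifo_depth - occupancy)
        if backpressure:
            pending -= accepted                        # rejected words wait at the source
        else:
            pending -= offered                         # source cannot be paused...
            dropped += offered - accepted              # ...so overflow is lost
        occupancy += accepted
        if occupancy:
            occupancy -= 1                             # DUT takes one word per emulator cycle
        cycles += 1
    return cycles, dropped


# A 1,000-word burst from a device 100x faster than the emulated DUT, 64-word FIFO.
print(run_adapter(1000, ratio=100, fifo_depth=64))                      # (1000, 0)
print(run_adapter(1000, ratio=100, fifo_depth=64, backpressure=False))  # (73, 927)
```

The adapter cannot make the DUT any faster; in this model it can only buffer and throttle the traffic so that nothing is lost.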

Save and restore is also challenging in ICE mode when physical targets are present. Suppose the user has a disk drive connected to a DUT mapped inside the emulator and wants to save the DUT state. The emulator’s built-in capabilities cannot accomplish this, because the disk drive keeps spinning regardless and its state cannot be saved along with that of the DUT.

Remote access makes an emulator a shared resource among a community of users distributed across a large geography, some of whom may be at the opposite end of the world, half a day behind or ahead. In ICE mode, this would require a squadron of technicians available 24/7 to plug and unplug the speed adapters for each user and each design, which makes the task totally impractical.

If not ICE, is there an alternative that supports remote access? The answer is yes. Sometimes called targetless emulation, it replaces the physical testbed with a software test environment. In the simplest implementation, it may be based on a synthesizable testbench that removes any dependency on the outside world, allowing the design to be processed at full emulation speed.

However, synthesizable testbenches constrain the creativity and flexibility of the designers. On the other hand, non-synthesizable testbenches, especially if written in a hardware verification language (HVL), require a simulator to execute them and a Programming Language Interface (PLI) link to the emulator running the DUT. Both kill performance.

There is a way out of this state of affairs.

In the late 1990s, IKOS (acquired by Mentor Graphics in 2002) pioneered the idea of moving the bit-level signal interfaces of a testbench driving a DUT in the emulator out of the testbench and into separate, reusable blocks. Each such interface is a protocol-dependent state machine, or bus-functional model, that is by definition synthesizable.

This approach produced two significant benefits. First, testbenches could be written at a higher level of abstraction with fewer lines of code, making them easier to write and faster to execute. Second, because the bus-functional models are synthesizable, they could be mapped inside the emulator, achieving dramatic acceleration. IKOS named the bus-functional blocks transactors and the new emulation mode co-modeling.
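The transactor idea can be illustrated with a deliberately simple, hypothetical protocol. In the Python sketch below, the testbench works only with bytes, while a bus-functional model expands each byte into the bit-level line levels of a UART-style 8N1 frame (start bit, eight data bits LSB first, stop bit). In real co-modeling the bus-functional model would be synthesizable RTL running inside the emulator; Python merely stands in for it here to show how little transaction-level code drives how much bit-level activity.

```python
def uart_bfm(byte: int) -> list[int]:
    """Bus-functional model: expand one byte transaction into one line level
    per bit time, using 8N1 framing (start, 8 data bits LSB first, stop)."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("byte out of range")
    frame = [0]                                   # start bit (line pulled low)
    frame += [(byte >> i) & 1 for i in range(8)]  # data bits, least significant first
    frame.append(1)                               # stop bit (line returns high)
    return frame


def send(payload: bytes) -> list[int]:
    """Testbench side: one call per message; the BFM generates every bit time."""
    waveform: list[int] = []
    for b in payload:
        waveform.extend(uart_bfm(b))
    return waveform


print(uart_bfm(0x41))        # [0, 1, 0, 0, 0, 0, 0, 1, 0, 1]
print(len(send(b"hello")))   # 50 bit times from a single transaction-level call
```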

The transaction-based verification mode, called TBX for testbench acceleration, is the emerging trend in the industry. It does not require staff to plug and unplug speed adapters when a user swaps one design for another or a new user signs in. This mode is paving the way to remote access.

All three emulation vendors (Cadence Design Systems, Mentor Graphics, and Synopsys) support remote access via the transaction-based approach. One vendor created a virtual verification environment around the concept of a virtual lab, similar to a physical lab but built with virtual devices. The virtual lab is made possible by merging three technologies: emulation, transaction-based verification, and ICE targets.

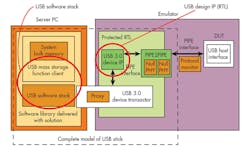

A virtual device includes a software stack running on the host workstation that communicates with a protocol IP running on the emulator via a transactor interface. Together, they form protocol solutions that let users verify their IP at the device-driver level and exercise the DUT with realistic software, namely the device driver itself (Fig. 2).

Functionally equivalent to ICE target solutions, a virtual lab eliminates cables and hardware adapters because virtual devices use existing, verified software IP to communicate with the protocol-specific RTL design IP and DUT on the emulator. Compared to hardware ICE targets, virtual devices present several advantages:

• Easier remote usage, because it can be installed with no additional hardware connected to the emulator once the requisite co-model host machines are in place

• Greater flexibility for sharing a single emulator resource among multiple design teams because there are no cables to connect and fewer partition constraints on the DUT running in the emulator

• Visibility over the target protocol software stack running on the function controller can be defined without the need for a specific access mechanism into the dedicated hardware

• Visibility/traceability over the target protocol function controller core can be defined in terms of simple IP protection for the delivered RTL source code, and access to standard buses is easily available for monitors and checkers to operate upon

The virtual environment enables the user to debug embedded software via a virtual Joint Test Action Group (JTAG) probe instead of a physical JTAG probe. The benefit is that the JTAG protocol used by the probe is generally unaffected by the slower clock rates of an emulator. When connecting physical devices to a design running in the emulator, the clock and data rates need to be reduced to match the speed of the design in the emulator. With a virtual JTAG probe, the emulator can be started and stopped at any time, and the clock frequency can be varied without concern for interrupting the connection to the software debugger.
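Why a virtual probe tolerates a stopped or slowed clock can be sketched in Python. In this hypothetical model, which does not implement the real JTAG TAP state machine, the host-side debugger and the emulated core exchange whole requests and responses through a message queue instead of clocked pin activity. When the emulator is stopped, a request simply waits in the queue; nothing on the host side depends on the emulator's clock, so the connection is not broken. All class and method names are illustrative.

```python
from collections import deque


class TransactionChannel:
    """Host <-> emulator link carrying whole messages, not clocked pin wiggles."""

    def __init__(self) -> None:
        self.requests: deque = deque()
        self.responses: deque = deque()


class EmulatedCore:
    """Stand-in for the debug logic on the emulator side (hypothetical)."""

    def __init__(self, channel: TransactionChannel, registers: dict) -> None:
        self.channel = channel
        self.registers = dict(registers)
        self.running = True
        self.cycles = 0

    def step(self) -> None:
        """Advance one emulator clock cycle, if the clock is running."""
        if not self.running:
            return  # clock stopped: state frozen, queued requests just wait
        self.cycles += 1
        if self.channel.requests:
            reg = self.channel.requests.popleft()
            self.channel.responses.append((reg, self.registers[reg]))


class HostDebugger:
    """Host-side debugger: posts requests and polls for answers, no clock involved."""

    def __init__(self, channel: TransactionChannel) -> None:
        self.channel = channel

    def read_register(self, reg: str) -> None:
        self.channel.requests.append(reg)

    def poll(self):
        return self.channel.responses.popleft() if self.channel.responses else None


channel = TransactionChannel()
core = EmulatedCore(channel, {"pc": 0x8000, "sp": 0x20000000})
dbg = HostDebugger(channel)

core.running = False              # user halts the emulator
dbg.read_register("pc")
for _ in range(1000):             # wall-clock time passes; the halted design does not advance
    core.step()
print(dbg.poll(), core.cycles)    # None 0: request pending, link still intact

core.running = True               # resume
core.step()
print(dbg.poll(), core.cycles)    # ('pc', 32768) 1
```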

The downside is that the JTAG connection is intrusive and will impact the state of the design being debugged. An alternative to the JTAG probe technology is to use trace-based systems to enable debug of programs running on the emulator. A basic processor trace is a tabular listing of events that took place in the processor.

One vendor offers an offline software debug tool for emulation. Based on trace, it includes a traditional debugger view of the state of the processor and performs all the symbol table and processor state decoding. Since it is based on tracing technology, it does not intrude on or interfere with the operation of the system being run. It can be run off the replay database after the emulation has completed, and it can run at speeds of 100 MIPS.
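The non-intrusive nature of trace-based debug follows from the fact that processor state is reconstructed after the run, from recorded data. The Python sketch below shows the idea with a hypothetical trace format (cycle, program counter, register writes); the vendor's tool also decodes symbol tables and much more, none of which is modeled here.

```python
from dataclasses import dataclass, field


@dataclass
class TraceRecord:
    """One retired instruction in a hypothetical processor trace."""
    cycle: int
    pc: int
    reg_writes: dict[str, int] = field(default_factory=dict)


def state_at(trace: list[TraceRecord], cycle: int) -> dict[str, int]:
    """Rebuild the register view as of `cycle` by replaying the trace offline.

    The emulation run is already over, so nothing here can disturb the design."""
    regs: dict[str, int] = {}
    for rec in trace:              # records are assumed to be in cycle order
        if rec.cycle > cycle:
            break
        regs["pc"] = rec.pc
        regs.update(rec.reg_writes)
    return regs


trace = [
    TraceRecord(cycle=10, pc=0x100, reg_writes={"r0": 5}),
    TraceRecord(cycle=11, pc=0x104, reg_writes={"r1": 7}),
    TraceRecord(cycle=14, pc=0x108, reg_writes={"r0": 12}),
]
print(state_at(trace, 11))   # {'pc': 260, 'r0': 5, 'r1': 7}
print(state_at(trace, 20))   # {'pc': 264, 'r0': 12, 'r1': 7}
```

In this model any cycle can be queried in any order, so stepping backward is as easy as stepping forward.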

Sophisticated Resource Management

More and more companies creating embedded systems include large teams of hardware designers and embedded software developers, typically spread over a large territory that may encompass more than one continent. Supporting such an enterprise with an emulation platform requires adequate design capacity and remote access, but that’s not all. A not-so-obvious and more subtle necessity is state-of-the-art resource management.

Any modern emulation system is made up of boards interconnected via a backplane hosted in a chassis. Multiple chassis are joined together to expand the design capacity to well over a billion gates. These resources must be managed automatically to make the tool attractive to the development team.

From the early stages of the development cycle to the final phase of system integration and bring-up, a team would submit numerous emulation jobs around the clock. The jobs would include hardware verification tasks at the IP, subsystem, and full-system levels, as well as every type of embedded software validation chore, from software verification routines to drivers, operating systems, apps, and diagnostics. Some of the jobs would require limited capacity, others enough capacity to hold the entire design. And this is just for one design. Large companies tend to have tens of designs under development at any given time, although only a few of them make it to production. The scenario quickly gets complicated.

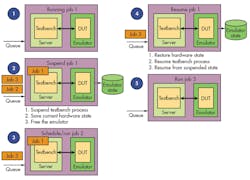

As an example, consider Veloce2 from Mentor Graphics again. Its fully expanded double Maximus configuration comprises eight Quattro chassis, each holding 16 boards. Such a platform can support up to 128 concurrent users. At any given time, jobs are swapped in and out, requiring the resources (AVBs) to be reallocated in real time. Performing the task manually would be a nightmare (Fig. 3).
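The article does not describe how Veloce2 places jobs, but the bookkeeping a resource manager has to automate can be sketched. The Python class below is a hypothetical best-fit allocator for eight chassis of 16 boards: it tries to keep each job inside the fullest chassis that can still hold it, spans chassis only when it must, and returns boards to the pool when a job ends. A real manager would also have to respect interconnect topology and other constraints not modeled here.

```python
class BoardPool:
    """Hypothetical free-board bookkeeping for 8 chassis x 16 boards."""

    def __init__(self, chassis: int = 8, boards_per_chassis: int = 16) -> None:
        self.free = {c: set(range(boards_per_chassis)) for c in range(chassis)}
        self.owner: dict[tuple[int, int], str] = {}   # (chassis, slot) -> job

    def allocate(self, job: str, n: int):
        """Reserve n boards for job; return [(chassis, slot), ...] or None if full."""
        fits_one = [c for c, slots in self.free.items() if len(slots) >= n]
        if fits_one:
            # Best fit: the fullest chassis that can still hold the whole job.
            best = min(fits_one, key=lambda k: len(self.free[k]))
            chosen = [(best, s) for s in sorted(self.free[best])[:n]]
        elif sum(len(s) for s in self.free.values()) >= n:
            # Must span chassis: draw from the emptiest chassis first.
            chosen = []
            for c in sorted(self.free, key=lambda k: len(self.free[k]), reverse=True):
                take = sorted(self.free[c])[: n - len(chosen)]
                chosen += [(c, s) for s in take]
                if len(chosen) == n:
                    break
        else:
            return None          # not enough free boards: the job waits in the queue
        for c, s in chosen:
            self.free[c].discard(s)
            self.owner[(c, s)] = job
        return chosen

    def release(self, job: str) -> None:
        """Return every board held by job to the free pool."""
        for (c, s), j in list(self.owner.items()):
            if j == job:
                del self.owner[(c, s)]
                self.free[c].add(s)


pool = BoardPool()
ip = pool.allocate("ip_regress", 10)     # fits in chassis 0
sub = pool.allocate("subsystem", 4)      # best fit: the 6 boards left in chassis 0
full = pool.allocate("full_chip", 40)    # spans chassis 1, 2, and 3
print(sorted({ch for ch, _ in full}))    # [1, 2, 3]
print(pool.allocate("too_big", 200))     # None
pool.release("subsystem")
print(len(pool.free[0]))                 # 6
```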

Further, to be effective, the resource manager must implement a job-scheduling priority mechanism, since some jobs take precedence over others. Commercial software such as Platform Computing’s Load Sharing Facility (LSF) may help to queue jobs, but more functionality is required.

To complicate the situation, priorities may change constantly. Supporting a “suspend/resume” mechanism to allow for stopping a job when a higher-priority job must be served immediately is mandatory (Fig. 4).
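A priority queue with preemption captures the essence of the suspend/resume requirement. The Python sketch below is hypothetical: it does not reflect LSF or any emulator vendor's scheduler, and it treats suspension as pure bookkeeping (in a real system the job's state would be saved so it can resume where it stopped). Lower numbers mean higher priority; when a high-priority job cannot fit, lower-priority running jobs are suspended, least important first, and requeued.

```python
import heapq
import itertools


class Scheduler:
    """Hypothetical priority scheduler with suspend/resume for `capacity` boards."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.running: dict[str, tuple[int, int]] = {}   # job -> (priority, boards)
        self.queue: list = []                            # heap of (priority, seq, job, boards)
        self.seq = itertools.count()                     # FIFO order among equal priorities
        self.suspended: set[str] = set()
        self.log: list[str] = []

    def free(self) -> int:
        return self.capacity - sum(b for _, b in self.running.values())

    def submit(self, job: str, priority: int, boards: int) -> None:
        heapq.heappush(self.queue, (priority, next(self.seq), job, boards))
        self.dispatch()

    def finish(self, job: str) -> None:
        del self.running[job]
        self.dispatch()

    def dispatch(self) -> None:
        while self.queue:
            prio, _, job, boards = self.queue[0]
            # Lower-priority running jobs, least important first.
            victims = sorted((j for j, (p, _) in self.running.items() if p > prio),
                             key=lambda j: self.running[j][0], reverse=True)
            if boards > self.free() + sum(self.running[v][1] for v in victims):
                break                                 # cannot fit even by preempting: wait
            for v in victims:
                if boards <= self.free():
                    break
                vp, vb = self.running.pop(v)          # suspend: free its boards
                heapq.heappush(self.queue, (vp, next(self.seq), v, vb))
                self.suspended.add(v)
                self.log.append(f"suspend {v}")
            heapq.heappop(self.queue)
            self.running[job] = (prio, boards)
            self.log.append(f"{'resume' if job in self.suspended else 'run'} {job}")
            self.suspended.discard(job)


s = Scheduler(capacity=128)
s.submit("regression", priority=5, boards=64)
s.submit("sw_boot", priority=5, boards=64)
s.submit("tapeout_fix", priority=1, boards=32)   # preempts a priority-5 job
s.finish("tapeout_fix")
print(s.log)
# ['run regression', 'run sw_boot', 'suspend regression', 'run tapeout_fix', 'resume regression']
```

This naive policy suspends a whole 64-board job to make room for a 32-board one; a production scheduler would weigh such choices more carefully, for example by preferring the smallest sufficient victim.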

Emulators are far more reliable than they were a decade ago, but they are not perfect. Hardware failures do occur, and when one happens, it is of the utmost importance to avoid any downtime of the emulation platform that would delay tapeout. The resource manager must isolate the failing board without forcing a recompilation of the design running on that board. Equally important is the ability to track usage of the emulator, including scheduling routine maintenance, running diagnostics, and filing reports of the results.
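One plausible way to isolate a failed board without recompiling is a level of indirection between the boards a compiled design refers to and the physical boards that host them. The article does not say how any vendor does this; the Python sketch below assumes, hypothetically, that the compiled image for one logical board can be reloaded unchanged onto any healthy spare. It also keeps a simple event log of the kind a usage and maintenance report could be built from.

```python
class BoardMap:
    """Hypothetical logical-to-physical board indirection with spare swapping."""

    def __init__(self, physical_boards) -> None:
        self.spares = list(physical_boards)        # healthy, unassigned boards
        self.failed: set = set()
        self.mapping: dict[int, int] = {}          # logical board -> physical board
        self.events: list[tuple] = []              # raw material for usage reports

    def load(self, n_logical: int) -> None:
        """Place a design compiled for n_logical boards onto healthy boards."""
        if n_logical > len(self.spares):
            raise RuntimeError("not enough healthy boards")
        for logical in range(n_logical):
            self.mapping[logical] = self.spares.pop(0)
        self.events.append(("load", n_logical))

    def mark_failed(self, physical: int) -> None:
        """Take a board out of service; move any logical board it hosted to a spare."""
        self.failed.add(physical)
        self.events.append(("fail", physical))
        if physical in self.spares:
            self.spares.remove(physical)
        for logical, phys in list(self.mapping.items()):
            if phys == physical:
                if not self.spares:
                    raise RuntimeError("no spare board available")
                self.mapping[logical] = self.spares.pop(0)   # same compiled image, new host
                self.events.append(("remap", logical, physical, self.mapping[logical]))


bm = BoardMap(range(6))
bm.load(4)            # logical 0-3 on physical 0-3; physical 4 and 5 are spares
bm.mark_failed(2)     # physical board 2 dies
print(bm.mapping)     # {0: 0, 1: 1, 2: 4, 3: 3}
print(bm.events)      # [('load', 4), ('fail', 2), ('remap', 2, 2, 4)]
```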

Conclusions

A modern verification methodology based on emulation requires remote server farms that could be used simultaneously by dozens of hardware and software engineers to verify increasingly complex designs.

To be effective, an emulation server must be designed from the ground up with a modular approach that allows for capacity expansion without extensive use of cables. The overall capacity must be adequate to support the largest designs with multiple billions of gates as well as several tens of concurrent users managed transparently.

Multiple emulation jobs submitted simultaneously should be administered through a queuing process that favors higher-priority jobs without disrupting the service. Users should be shielded from the details of job handling and should not have to modify their compiled designs because of hardware dependencies.

Reliability of the emulation hardware, reinforced by a failure-protection mechanism, should be of primary concern to the emulation vendor. Within reason, the emulation server should also be environmentally friendly, consume limited power, and be compact enough to fit comfortably in a lab environment.

Lauro Rizzatti is a verification consultant. He was formerly general manager of EVE-USA and its vice president of marketing before Synopsys’ acquisition of EVE. Previously, he held positions in management, product marketing, technical marketing, and engineering. He can be reached at [email protected].