This file type includes high resolution graphics and schematics when applicable.

In the history of functional verification for complex chips, increasing automation has steadily replaced tedious and expensive manual effort. Register transfer level (RTL) languages replaced the manual generation of zeroes and ones, and testbench languages then automated stimulus generation. RTL constructs and then flexible scoreboards replaced results checking via waveforms. Standard assertion and coverage formats replaced inline hardware description language (HDL) code for checking interesting corner cases. The latest step in this process is happening now, as automatically generated C test cases replace handwritten tests for embedded processors.

Testing the Limits of the UVM

Constrained-random stimulus was a big step forward, and the technique was standardized as the Universal Verification Methodology (UVM). However, the UVM tries to verify deep behavior in a complex design purely by manipulating the chip inputs. This can be likened to “pushing on a rope,” trying to make the far end of the rope go around corners and into small spaces with limited control. Verification teams have begun to wonder if there is a way to “pull on the rope” and somehow guide the input stimulus from the desired outcomes.

A related limitation of the UVM arose when system-on-chip (SoC) designs began appearing. The defining characteristic of SoCs is that they include one or more embedded processors. These processors typically program the various intellectual property (IP) blocks and control the flow of data around the chip. Since the power of the SoC resides in its processors, trying to verify such a chip without leveraging the processors is a challenge. Trying to set up an embedded processor to do useful work from the chip inputs is the ultimate “pushing on a rope” problem and one that few SoC verification teams attempt.

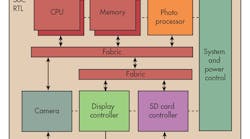

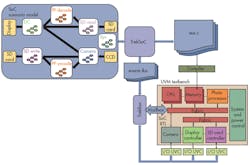

Figure 1 shows a small but representative SoC, one that might be found in a digital camera. It includes two embedded processors and a variety of peripherals and memories connected to the processor bus. It can take an image from the camera, display it on the screen, and save it to an SD card in raw (bitmap) or JPEG compressed format. It also can retrieve a previously saved photo from the SD card, convert back from JPEG if necessary, and show the image on the screen. All of the IP blocks are programmed by the processors to cooperate and create scenarios that reflect real use cases for the camera.

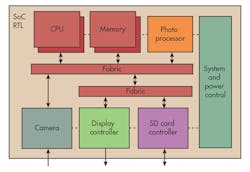

The most common UVM-based solution to verifying such a chip is to remove the processors, replacing them with a model for the processor’s bus. The result is a traditional UVM testbench in which a UVM verification component (UVC) replaces the processor bus. The UVM approach calls for the UVCs, each of which includes a sequencer to generate constrained-random stimulus, to be coordinated by a virtual sequencer (Fig. 2). A scoreboard keeps track of the results and may gather coverage metrics as well.

The problem with this approach is that it’s hard to replicate processor bus cycles for realistic use cases without an embedded processor to actually run code. For example, to JPEG-encode and store a new photo, the processors must:

- Program the camera to receive an image from the testbench and transfer it to memory via direct memory access (DMA)

- Program the photo processor to read the raw image from memory via DMA, convert it to a JPEG with the selected quality and file size, and write it to memory via DMA

- Program the SD card controller to read the JPEG image from memory via DMA and write the result to the testbench

Given definitions of the chip’s registers and memory, it’s easy to imagine writing a C routine that performs this sequence. It’s harder to imagine sending sequences to the bus UVC to produce the same bus cycles.
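To make the point concrete, here is a minimal sketch of such a C routine. The register layout, the `CTRL_START` and `STATUS_DONE` bits, and every name in it are hypothetical, invented for illustration only; a real SoC would define a distinct register map per IP block and place it at fixed memory-mapped addresses.

```c
#include <stdint.h>

/* Hypothetical register block, reused for all three IP blocks in this sketch.
   Real hardware would have a different layout for each block. */
typedef struct {
    volatile uint32_t src_addr;  /* DMA source address in SoC memory */
    volatile uint32_t dst_addr;  /* DMA destination address in SoC memory */
    volatile uint32_t config;    /* block-specific setting, e.g. JPEG quality */
    volatile uint32_t control;   /* write CTRL_START to begin the operation */
    volatile uint32_t status;    /* STATUS_DONE is set when the block finishes */
} ip_regs_t;

enum { CTRL_START = 1u, STATUS_DONE = 1u };

/* Pointers to the three blocks involved in the "store a new photo" scenario. */
typedef struct {
    ip_regs_t *camera;
    ip_regs_t *photo_proc;
    ip_regs_t *sd_ctrl;
} soc_t;

/* Program one block, start it, and poll for completion.
   Assumes the hardware clears STATUS_DONE when a new operation starts.
   Returns 0 on success, -1 if the block never reports done. */
static int run_block(ip_regs_t *r, uint32_t src, uint32_t dst,
                     uint32_t cfg, uint32_t max_polls)
{
    r->src_addr = src;
    r->dst_addr = dst;
    r->config   = cfg;
    r->control  = CTRL_START;
    for (uint32_t i = 0; i < max_polls; i++) {
        if (r->status & STATUS_DONE) {
            return 0;
        }
    }
    return -1;
}

/* The three steps from the list above, in order.
   Returns 0 on success or a negative step number on timeout. */
int store_jpeg_photo(soc_t *soc, uint32_t raw_buf, uint32_t jpeg_buf,
                     uint32_t quality, uint32_t max_polls)
{
    /* 1. Camera: receive an image and DMA it into raw_buf. */
    if (run_block(soc->camera, 0u, raw_buf, 0u, max_polls) != 0) {
        return -1;
    }
    /* 2. Photo processor: read raw_buf, encode, write JPEG to jpeg_buf. */
    if (run_block(soc->photo_proc, raw_buf, jpeg_buf, quality, max_polls) != 0) {
        return -2;
    }
    /* 3. SD card controller: read jpeg_buf and send it off chip. */
    if (run_block(soc->sd_ctrl, jpeg_buf, 0u, 0u, max_polls) != 0) {
        return -3;
    }
    return 0;
}
```

On a host machine the three register blocks can simply be ordinary structs, which is enough to check the programming order. Expressing the same three-step handshake as constrained-random sequences on a bus UVC would mean reproducing every one of these register writes and polls as individual bus transactions.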

Most of the time, the SoC verification team includes a few embedded programmers who write test code to run on the processors in simulation. This is a manual and tedious process for a single processor and virtually impossible when multiple processors or even multiple threads on one processor are involved. Humans are not good at thinking and programming in parallel.

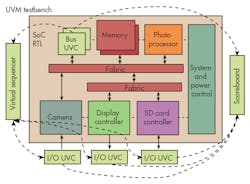

The other problem is that neither the UVM nor any other constrained-random methodology provides guidance on how to connect the embedded processors with the testbench (Fig. 3). The user scenario of storing a new photo has two additional steps. Somehow the camera UVC needs to know when to supply an image to the design so the camera block can grab it and store it. Similarly, the SD card UVC needs to know when to receive the JPEG image, compare it to the expected result, and model storing it on the SD card.

Verification teams who want their manual C tests to interact with the UVCs then must create a non-standard mechanism to coordinate the code and testbench. This takes effort and, quite often, embedded programmers write only C tests that manipulate the internal data paths without sending data on or off chip. Clearly, this leaves a large gap in the SoC verification effort and reduces the number of realistic user scenarios that can be run. A test might verify that the photo processor can properly convert bitmaps to JPEG, and vice versa, but this is not an end-to-end use case.

Beyond the UVM with C Test Case Generation

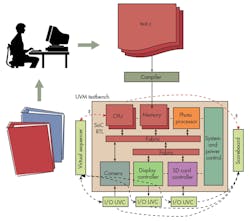

As happened during the previous bottlenecks in the evolution of chip verification, the answer for SoCs is to automate a process that had been manual. In fact, technology exists today to automatically generate sophisticated C test cases that can run on embedded processors in simulation. Figure 4 shows the Breker solution where test cases are generated from graph-based scenario models that document the SoC’s verification space and the team’s verification plan. The test cases are compiled, loaded into SoC memory, and run just like handwritten tests or production software.

This automated approach offers several advantages. The test-case generator keeps track of parallel threads and programs, so the C tests it produces can run with multiple threads on multiple processors. The scheduler is aggressive at moving user test cases from thread to thread, processor to processor, and memory region to memory region. This ensures thorough exercise of paths between all processors and all allowable memory regions, which is excellent for helping to verify the SoC’s cache and memory coherency scheme.

The solution includes a simulation run-time element that communicates with the UVCs. Along with the C code, the generator produces an “events” file with a list of everything that has to happen in the testbench during the test case run. When the C code gets to a point where testbench coordination is needed, it sends a simple message with an event ID via a memory-mapped mailbox. The run-time element looks up this ID in the events file and takes the appropriate action. This mechanism provides synchronization between the running test case and the UVCs with no user effort at all.
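The mailbox handshake can be pictured with a small sketch. Nothing here reflects the actual generated code or events-file format, which the article does not show; the event table, the action names, and the convention that an ID of 0 means "mailbox empty" are all assumptions made for illustration.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical testbench actions that an event ID can map to. */
typedef enum {
    ACT_NONE,
    ACT_CAMERA_SEND_IMAGE,
    ACT_SD_RECEIVE_AND_COMPARE
} tb_action_t;

/* One row of a hypothetical in-memory form of the "events" file. */
typedef struct {
    uint32_t    event_id;
    tb_action_t action;
} event_entry_t;

/* Embedded side: the C test case posts an event ID to the mailbox.
   On real hardware the mailbox would be a fixed memory-mapped address. */
void mailbox_post(volatile uint32_t *mailbox, uint32_t event_id)
{
    *mailbox = event_id;
}

/* Run-time side: find the action registered for an event ID.
   Unknown IDs map to ACT_NONE. */
tb_action_t runtime_lookup(const event_entry_t *events, size_t n,
                           uint32_t event_id)
{
    for (size_t i = 0; i < n; i++) {
        if (events[i].event_id == event_id) {
            return events[i].action;
        }
    }
    return ACT_NONE;
}

/* Run-time side: check the mailbox once. If a message is pending, clear the
   mailbox and return the matching action (assumes ID 0 means "empty"). */
tb_action_t runtime_service(volatile uint32_t *mailbox,
                            const event_entry_t *events, size_t n)
{
    uint32_t id = *mailbox;
    if (id == 0u) {
        return ACT_NONE;
    }
    *mailbox = 0u;
    return runtime_lookup(events, n, id);
}
```

In this sketch the embedded code only ever writes a single word, which keeps the cost on the processor side small; all interpretation of the ID happens on the testbench side.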

In the previous example of saving an image, when the test case is ready to grab the image from the camera port, it will send a message and the camera UVC will respond. Likewise, when the JPEG image is ready to be sent to the SD card UVC, another message triggers this action as well as a comparison of observed and expected results. To minimize embedded memory usage, input data and expected results are stored in the simulator and not in the SoC. Likewise, all comparisons happen in the simulator to maximize the performance of the embedded processors and the SoC.

The C test cases can be generated from a single scenario model not just for simulation, but also for acceleration, emulation, FPGA prototyping, and even actual silicon in the lab. Each stage of generation can be tuned for best results in the target platform. Graph-based scenario models are also reusable from IP blocks through complete chips. Graphs are simply instantiated to form a scenario model for the next level in the design hierarchy. Finally, automatic analysis of the graphs reveals any unreachable portions and generates the test cases needed for coverage closure on the remainder.
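As a rough illustration of graph analysis, the sketch below flags nodes that cannot contribute to any outcome. This is only one possible reading of "unreachable"; the article does not describe the actual analysis, and the node structure and function names are hypothetical.

```c
#include <stdbool.h>

#define MAX_NODES 32
#define MAX_PREDS 4

/* Hypothetical scenario-graph node: lists the nodes that can feed it. */
typedef struct {
    int preds[MAX_PREDS];
    int num_preds;        /* 0 marks a chip input */
} scen_node_t;

/* Mark a node and everything upstream of it as used.
   The used[] check also stops the recursion if the graph has a cycle. */
static void mark_used(const scen_node_t *g, int node, bool *used)
{
    if (used[node]) {
        return;
    }
    used[node] = true;
    for (int i = 0; i < g[node].num_preds; i++) {
        mark_used(g, g[node].preds[i], used);
    }
}

/* Walk backward from every outcome and collect the nodes never visited.
   Writes their indices to unused_out and returns how many there are,
   or -1 if the graph is larger than this sketch supports. */
int find_unused_nodes(const scen_node_t *g, int num_nodes,
                      const int *outcomes, int num_outcomes,
                      int *unused_out)
{
    bool used[MAX_NODES] = { false };
    int count = 0;

    if (num_nodes > MAX_NODES) {
        return -1;
    }
    for (int i = 0; i < num_outcomes; i++) {
        mark_used(g, outcomes[i], used);
    }
    for (int n = 0; n < num_nodes; n++) {
        if (!used[n]) {
            unused_out[count++] = n;
        }
    }
    return count;
}
```

Any node reported this way can be excluded from the coverage goal, since no generated test case could exercise it on the way to an outcome.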

Assessing Return on Investment

As chip verification has advanced via automation of previously manual steps, some amount of new effort is required for each enhancement. It might be a new language to learn, new knowledge to acquire, or a new methodology to embrace. For automated generation of C test cases, the new effort is the specification of graph-based scenario models. Any verification team considering the adoption would and should ask how this new effort compares to the gain in productivity, improvement in design quality, reduction in time-to-market, and increased likelihood of first-silicon success.

Fortunately, scenario models are not hard to develop. They look like a standard data flow diagram for the SoC, although flipped so results are on the left and inputs are on the right (Fig. 4, again). The generator then can start with each possible outcome, trace a path to determine how to make that outcome happen, and produce a test case following the path. This “pulling on the rope” approach makes it easier to verify deep design behavior. Importantly, there is no new language to learn. Scenario models are specified in standard C/C++ with only a few extensions based on Backus-Naur Form (BNF), a standard notation for describing grammars.
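The outcome-first traversal can be sketched in a few lines of C. This is not the scenario-model syntax or the generator's algorithm, neither of which is shown in the article; the node structure, the `camera_graph` table (loosely modeled on the digital camera example), and the seed-based choice rule are assumptions for illustration.

```c
#define MAX_PREDS 4
#define MAX_PATH  16

/* Hypothetical scenario-graph node. */
typedef struct {
    const char *name;
    int preds[MAX_PREDS];  /* indices of nodes that can feed this one */
    int num_preds;         /* 0 marks a chip input */
} node_t;

/* Illustrative graph for the camera SoC: two inputs, two outcomes. */
const node_t camera_graph[6] = {
    { "camera_capture", {0},    0 },  /* 0: input */
    { "sd_card_read",   {0},    0 },  /* 1: input */
    { "jpeg_encode",    {0},    1 },  /* 2: fed by camera_capture */
    { "jpeg_decode",    {1},    1 },  /* 3: fed by sd_card_read */
    { "sd_card_write",  {0, 2}, 2 },  /* 4: outcome, raw or JPEG */
    { "display",        {0, 3}, 2 }   /* 5: outcome, live or stored photo */
};

/* Start at an outcome and walk backward until a chip input is reached,
   choosing one predecessor per step from the bits of seed. The path is
   written to path_out in execution order (input first).
   Returns the path length, or -1 if it exceeds MAX_PATH. */
int trace_from_outcome(const node_t *graph, int outcome, unsigned seed,
                       int *path_out)
{
    int reversed[MAX_PATH];
    int len = 0;
    int cur = outcome;

    for (;;) {
        if (len == MAX_PATH) {
            return -1;                 /* path too long, possibly a cycle */
        }
        reversed[len++] = cur;
        if (graph[cur].num_preds == 0) {
            break;                     /* reached a chip input */
        }
        cur = graph[cur].preds[seed % (unsigned)graph[cur].num_preds];
        seed /= 2u;                    /* use fresh bits for the next choice */
    }
    for (int i = 0; i < len; i++) {
        path_out[i] = reversed[len - 1 - i];
    }
    return len;
}
```

With this table, tracing from `sd_card_write` with seed 1 yields camera_capture, jpeg_encode, sd_card_write, while seed 0 yields the shorter raw path camera_capture, sd_card_write. Because every path begins at an outcome, each one is guaranteed to end somewhere worth checking, which is the practical meaning of pulling on the rope.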

Repeated experience has shown that a verification engineer or embedded programmer can start writing a scenario model after only a day of training and generate interesting test cases in well under a week. Thus, syntax is not a barrier, but the user does have to accurately capture the chip’s verification intent, and the knowledge required is almost the same knowledge needed to write manual C tests. In the digital camera example, anyone working on the verification team knows how to program each IP block to do its job and how to string the blocks together in use case scenarios.

For about the same amount of effort as handwriting a test or two, a user can produce a scenario model that can generate an unbounded number of test cases, each one far more complex than what could be written by hand. One leading cell-phone SoC company reduced the number of verification team members writing embedded tests from 10 to two because of the increase in productivity with test case automation. The other eight engineers were not laid off. They were redirected to work on production software and applications that would add value to the final product.

Conclusions

The evolution of chip verification has been the process of replacing expensive manual work with automated processes. The next step for the industry is eliminating the need to hand-code tests for verification or prototype bring-up, and with it the problem of “pushing on a rope.”

Leading SoC teams today are automatically generating multi-threaded, multi-processor, self-verifying C test cases that stress the design much more thoroughly than manual methods. The minimal investment is compensated many times over by better productivity, a shorter verification schedule, and increased design quality.
