Enforcing Programming Standards Protects Against Software Security Risks

Growing concerns about security now drive software system design. For connected systems, it’s no longer enough to choose a software process standard and a programming standard that focus on quality alone; developers must also ensure that the software contains no known exploitable vulnerabilities or weaknesses.

Incidents of the loss of personally identifiable information (PII) due to software security breaches continue to make headlines. In 2014 alone, Target, Home Depot, and JPMorgan were among the big-name businesses to announce security breaches that compromised payment-card data and accounts for millions of customers. Add to this the Heartbleed (OpenSSL) and POODLE (SSL 3.0) vulnerabilities, and it becomes clear that poor security has put hundreds of millions of Internet users at risk of losing PII. Heartbleed and POODLE, in particular, were vulnerabilities in the fundamental cryptographic software and protocols used to secure Internet communications.

Each announcement of a new breach further raises awareness of the vulnerability of critical infrastructure connected to the Internet, and of the need for secure system components that can withstand cyber-attack. Embedded systems, which are often highly connected, are no exception. Organizations are starting to demand that developers not only produce reliable and safe embedded software, but also ensure that their software systems are hardened against attack.

To be considered secure, software must exhibit three properties:

• Dependability: It must execute predictably and operate correctly under all conditions.

• Trustworthiness: It must contain few, if any, exploitable vulnerabilities or weaknesses that can be used to subvert or sabotage the software’s dependability.

• Resilience: It must withstand attack and, for attacks it can neither resist nor tolerate, recover as quickly as possible and with as little damage as possible.

So, what’s the best way to enforce these software properties while writing the software? The answer is secure programming standards. Programming standards represent an invaluable approach for building security into software. They encapsulate the knowledge and experience of dozens of programmers on best practices for writing software in a given language for a given domain, and provide guidance for the creation of secure code. Like safety-critical systems, however, secure systems can only be developed as part of a rigorous development process.

Building Security into Software

Most software is written to satisfy functional, not security, requirements. But according to research done by the National Institute of Standards and Technology (NIST), 64% of software vulnerabilities stem from programming errors. Building secure software requires adding security concepts to the quality-focused software development lifecycle where security is considered a quality attribute of the software under development. Building secure code is all about eliminating known weaknesses, including defects. Therefore, by necessity, secure software is high-quality software, because an intruder may exploit even minor defects for an attack that can result in a significant breach of security.

Security must be addressed in every phase of the software-development lifecycle, and team members need a common understanding of the project’s security goals and of the course of action for achieving them. As a result, the same process disciplines required for creating safety-critical software must also be applied to secure software, including:

• Requirements traceability

• Use of established design principles

• Application of software-coding standards

• Control and data-flow analysis

• Requirements-based testing

• Code-coverage analysis

In the same way that developing a safety-critical system starts with a system safety assessment, a secure software project starts with a security risk assessment: a process that assesses the nature and impact of a potential security breach before deployment. The security controls needed to mitigate each identified impact can then be defined and become system requirements. In this way, security becomes part of the definition of system correctness, which then permeates the development process.

The adoption of secure coding practices, including both static and dynamic assurance measures, has the most significant impact on building secure code. The biggest return on investment comes from enforcing secure coding rules with static-analysis tools. Once security concepts have been introduced into the requirements process, dynamic assurance through security-focused testing verifies that security features are implemented correctly.

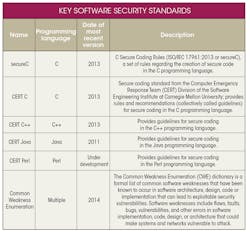

Many of the software defects that lead to vulnerabilities stem from common weaknesses in code. A growing number of dictionaries, standards, and rule sets have been created to highlight these common weaknesses so that developers can avoid them, and so that potentially vulnerable defects can be detected and eliminated at the point of injection (see the table). Detecting defects at the point of injection, rather than later in the development process, also greatly reduces the cost of remediation and keeps software quality from being degraded by excessive maintenance.
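As a concrete illustration of one entry such dictionaries catalog, the C sketch below contrasts an unbounded string copy (CWE-120, "classic buffer overflow"; the SEI CERT C rule STR31-C targets the same problem) with a bounded alternative. The function name `checked_copy` and its 0/-1 return convention are illustrative assumptions, not part of any standard, and the sketch assumes `src` is a properly terminated string.

```c
#include <stddef.h>
#include <string.h>

/* Weak pattern (CWE-120): strcpy() copies until it finds the terminator in
 * src, with no knowledge of how large dst is:
 *     strcpy(dst, src);   -- writes past dst when src is too long
 * Safer pattern below (hypothetical helper): the caller supplies the
 * destination capacity, and the copy is refused, not silently truncated,
 * when src plus its terminator does not fit.
 * Assumes src is a NUL-terminated string.
 * Returns 0 on success, -1 on invalid arguments or insufficient space. */
int checked_copy(char *dst, size_t dst_size, const char *src)
{
    if (dst == NULL || src == NULL || dst_size == 0u) {
        return -1;
    }
    size_t len = strlen(src);
    if (len >= dst_size) {          /* len + 1 bytes are needed */
        dst[0] = '\0';              /* leave dst as a valid empty string */
        return -1;
    }
    memcpy(dst, src, len + 1u);     /* copy includes the terminator */
    return 0;
}
```

Refusing an oversized input instead of truncating it is a design choice; some teams accept truncation as long as it is detected and handled explicitly.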

Enforcing Secure Programming Standards

These coding standards can be enforced through manual inspection. However, manual inspection is slow and inefficient, and for large, complex applications it is neither consistent nor rigorous enough to uncover the variety of defects that can result in a security vulnerability. Secure coding standards are therefore best enforced with static-analysis tools, which help identify both known and unknown vulnerabilities while also eliminating latent errors in code. These tools also let developers who are new to secure coding benefit from the experience and knowledge encapsulated in the standards (Fig. 1).
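To show how a rule in a secure coding standard can become a mechanical check, here is a deliberately simplistic, purely lexical checker in C that counts calls to a deny-list of functions. The deny-list contents and the name `count_banned_calls` are hypothetical. Production static-analysis tools parse the code fully and track control and data flow, whereas this sketch ignores comments, string literals, macros, and calls through function pointers.

```c
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical deny-list for illustration; real standards define their own. */
static const char *const banned[] = { "gets", "strcpy", "strcat", "sprintf" };

/* Returns 1 if the identifier [ident, ident + len) is on the deny-list. */
static int is_banned(const char *ident, size_t len)
{
    for (size_t i = 0; i < sizeof banned / sizeof banned[0]; ++i) {
        if (strlen(banned[i]) == len && strncmp(banned[i], ident, len) == 0) {
            return 1;
        }
    }
    return 0;
}

/* Counts identifiers on the deny-list that are followed (after optional
 * whitespace) by '(' in a C source string. Purely lexical: comments,
 * string literals, macros, and function pointers are not handled. */
int count_banned_calls(const char *src)
{
    int hits = 0;
    const char *p = src;
    while (*p != '\0') {
        if (isalpha((unsigned char)*p) || *p == '_') {
            const char *start = p;
            while (isalnum((unsigned char)*p) || *p == '_') {
                ++p;                            /* consume whole identifier */
            }
            const char *q = p;
            while (isspace((unsigned char)*q)) {
                ++q;                            /* allow "gets (buf)" */
            }
            if (*q == '(' && is_banned(start, (size_t)(p - start))) {
                ++hits;
            }
        } else {
            ++p;
        }
    }
    return hits;
}
```

Matching whole identifiers means names such as `my_strcpy` or `strcpy_s` are not reported, which keeps this toy rule from flagging unrelated code.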

Static software-analysis tools assess the code under analysis without actually executing it. They are particularly adept at identifying coding standard violations. In addition, they can provide a range of metrics to assess and improve the quality of the code under development. For instance, the cyclomatic complexity metric identifies unnecessarily complex software that’s difficult to test.
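Cyclomatic complexity is McCabe’s metric M = E - N + 2P, where E is the number of edges in the control-flow graph, N the number of nodes, and P the number of connected components (1 for a single function). The C sketch below computes M and pairs it with a small hypothetical function, `count_negatives`. Its graph has 6 nodes (entry, loop test, `if` test, increment of `count`, increment of `i`, return) and 7 edges, so M = 7 - 6 + 2 = 3, which matches the shortcut of two decision points plus one.

```c
/* McCabe cyclomatic complexity of a control-flow graph:
 *   M = E - N + 2P
 * edges = E, nodes = N, components = P (1 for a single function). */
int cyclomatic_complexity(int edges, int nodes, int components)
{
    return edges - nodes + 2 * components;
}

/* Example function with two decision points (the loop condition and the
 * if), giving a cyclomatic complexity of 3: at least three independent
 * paths must be exercised to test it. */
int count_negatives(const int *values, int n)
{
    int count = 0;
    for (int i = 0; i < n; ++i) {
        if (values[i] < 0) {
            ++count;
        }
    }
    return count;
}
```

A tool enforcing a project limit on M flags any function whose value exceeds that threshold, prompting it to be split into smaller, more easily tested units.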

When using static-analysis tools to build secure software, the primary objective is to identify potential vulnerabilities in code. Examples of potentially exploitable vulnerabilities that static-analysis tools help identify include:

• Use of insecure functions

• Array overflows

• Array underflows

• Incorrect use of signed and unsigned data types
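The C sketch below illustrates array overflow, array underflow, and signed/unsigned misuse from the list above, with each weak form shown in a comment and a checked form as live code. The function names `table_store` and `copy_payload`, the table size, and the 0/-1 return convention are hypothetical choices for illustration.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define TABLE_LEN 8

static int32_t table[TABLE_LEN];

/* Array overflow/underflow: checking only the upper bound lets a negative
 * index write before the start of the array, e.g.
 *     if (idx < TABLE_LEN) { table[idx] = value; }   -- idx = -1 passes
 * The checked form rejects both ends. Returns 0 on success, -1 otherwise. */
int table_store(int idx, int32_t value)
{
    if (idx < 0 || idx >= TABLE_LEN) {
        return -1;
    }
    table[idx] = value;
    return 0;
}

/* Signed/unsigned misuse: a negative signed length passes a "too long"
 * check, then converts to a huge size_t inside memcpy():
 *     if (len > (int32_t)dst_size) { return -1; }
 *     memcpy(dst, src, len);                         -- len = -1 passes
 * The checked form rejects negative lengths before converting.
 * Returns 0 on success, -1 otherwise. */
int copy_payload(uint8_t *dst, size_t dst_size, const uint8_t *src, int32_t len)
{
    if (dst == NULL || src == NULL || len < 0 || (size_t)len > dst_size) {
        return -1;
    }
    memcpy(dst, src, (size_t)len);
    return 0;
}
```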

Since secure code must, by nature, be high-quality code, static-analysis tools can also be used to bolster the quality of the code under development. The objective here is to ensure that the software is easy to verify. The validation and verification phase of a project can take up to 60% of the total effort, while coding typically takes only 10%. Eliminating defects through a small increase in coding effort can therefore significantly reduce the verification burden, and this is where static analysis can really help.

Ensuring that code never exceeds a maximum complexity value helps to enforce the testability of the code. In addition, static-analysis tools identify other issues that affect testability, such as having unreachable or infeasible code paths or an excessive number of loops.
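An infeasible path is easy to create by accident. In the hypothetical C sketch below, the weak version (in the comment) tests thresholds in ascending order, so the `raw > 1000u` and `raw > 2000u` branches can never execute and the critical level is never reported. A static-analysis tool can flag such branches as unreachable, and no test could ever cover them. The live code orders the checks so every branch is feasible; the function name and threshold values are assumptions.

```c
#include <stdint.h>

/* Weak version (infeasible paths): any raw value above 1000 is already
 * caught by the first test, so the later branches never execute:
 *     if (raw > 500u)       { return 1; }
 *     else if (raw > 1000u) { return 2; }   -- unreachable
 *     else if (raw > 2000u) { return 3; }   -- unreachable
 *     return 0;
 * Corrected version: test the most severe threshold first so that every
 * branch is reachable and can be covered by a test.
 * Returns 0 (normal), 1 (elevated), 2 (high), or 3 (critical). */
int classify_level(uint16_t raw)
{
    if (raw > 2000u) {
        return 3;
    }
    if (raw > 1000u) {
        return 2;
    }
    if (raw > 500u) {
        return 1;
    }
    return 0;
}
```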

By eliminating security vulnerabilities, identifying latent errors, and ensuring the testability of the code under development, static-analysis tools help raise code quality and harden the code against both current and as-yet-unknown threats.

The most commonly sought certification for secure devices is a Common Criteria (CC) security evaluation, as defined in the international standard ISO/IEC 15408. On completing the evaluation, a product or system is assigned an Evaluation Assurance Level (EAL) from 1 through 7. Higher levels reflect a more rigorous evaluation, up to a formally verified design at EAL7, rather than a guarantee that the product is more secure. For each EAL, the developer must demonstrate that the software meets the minimum development requirements for that level, which calls for documentary evidence that a rigorous development process for secure software was followed (Fig. 2).

Modern workflow-management tools make it easier to manage the objectives of a secure software project, and of the applicable certification standard, alongside the software requirements. As code is developed, an entry can be submitted to the tool so that the code can be traced back to its requirements. Downstream, the workflow manager can also map the code to the software-verification activities and their results. The workflow manager thereby becomes the central repository for all the verification evidence required for certification.
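The traceability a workflow manager maintains can be pictured as a table of links from each requirement to the code and tests that satisfy it. The C sketch below is a hypothetical model of that idea, not the data format of any particular tool: `struct trace_link` and `find_trace_gaps` are illustrative names, and the function reports how many requirements still lack a code link or a test link, the kind of gap that must be closed before the certification evidence is complete.

```c
#include <stddef.h>

/* Hypothetical trace record: one requirement linked to the code unit that
 * implements it and the test that verifies it (NULL = link not recorded). */
struct trace_link {
    const char *requirement;
    const char *code_unit;
    const char *test_case;
};

/* Counts requirements missing a code link or a test link. *first_gap
 * receives the first such requirement ID, or NULL if all are traced. */
size_t find_trace_gaps(const struct trace_link *links, size_t n,
                       const char **first_gap)
{
    size_t gaps = 0u;
    *first_gap = NULL;
    for (size_t i = 0; i < n; ++i) {
        if (links[i].code_unit == NULL || links[i].test_case == NULL) {
            if (gaps == 0u) {
                *first_gap = links[i].requirement;
            }
            ++gaps;
        }
    }
    return gaps;
}
```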

Complementing this, test-automation tools can automatically generate and execute test cases that can be run frequently, providing feedback in minutes. Test-case generation, execution, results, and test status can then be controlled from within the workflow manager, providing visibility into development progress.
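One common way to generate test cases automatically is boundary-value analysis: for an input range, test just outside, on, and just inside each limit. The C sketch below applies that idea to a hypothetical `set_speed_limit` function with an assumed valid range of 0 to 130. It is a minimal illustration of generating and executing cases, not a description of how any specific test-automation tool works.

```c
#include <stddef.h>

/* Hypothetical unit under test: accepts a speed limit in [0, 130] km/h. */
#define SPEED_MIN 0
#define SPEED_MAX 130

static int current_limit = 0;

int set_speed_limit(int kmh)
{
    if (kmh < SPEED_MIN || kmh > SPEED_MAX) {
        return -1;                  /* reject out-of-range input */
    }
    current_limit = kmh;
    return 0;
}

int get_speed_limit(void)
{
    return current_limit;
}

struct test_case {
    int input;
    int expected;                   /* 0 = accept, -1 = reject */
};

/* Generates six boundary-value cases for the closed range [lo, hi]: just
 * below, on, and just above lo, and the same around hi. Assumes lo > INT_MIN
 * and hi < INT_MAX so that lo - 1 and hi + 1 do not overflow. */
size_t generate_boundary_cases(int lo, int hi, struct test_case out[6])
{
    const int inputs[6] = { lo - 1, lo, lo + 1, hi - 1, hi, hi + 1 };
    for (size_t i = 0; i < 6u; ++i) {
        out[i].input = inputs[i];
        out[i].expected = (inputs[i] < lo || inputs[i] > hi) ? -1 : 0;
    }
    return 6u;
}

/* Executes the cases against set_speed_limit(); returns the failure count. */
size_t run_cases(const struct test_case *cases, size_t n)
{
    size_t failures = 0u;
    for (size_t i = 0; i < n; ++i) {
        if (set_speed_limit(cases[i].input) != cases[i].expected) {
            ++failures;
        }
    }
    return failures;
}
```

Because the cases are generated from the range definition rather than written by hand, they can be regenerated and rerun on every build, which is what makes feedback in minutes practical.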

For certification, code coverage is recommended as a measure of test completeness. Certification requires an appropriate level of testing rigor, meaning that all testing must be requirements-based and performed at the system level. The feedback, knowledge, and understanding needed to improve test effectiveness are simply not available without code-coverage analysis. Coverage analysis also provides additional assurance that the security objectives of the system to be deployed are being met.
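Code-coverage tools generally work by inserting probes into the code and recording which ones execute. The C sketch below hand-instruments a hypothetical function, `sign_of`, with one probe per branch outcome and reports the percentage of probes hit. The probe names and the reporting function are assumptions for illustration; real tools insert and collect probes automatically.

```c
#include <stddef.h>

/* Hypothetical hand-inserted probes, one per branch outcome of sign_of(). */
enum { PROBE_NEG, PROBE_ZERO, PROBE_POS, PROBE_COUNT };

static unsigned probe_hits[PROBE_COUNT];

int sign_of(int x)
{
    if (x < 0) {
        probe_hits[PROBE_NEG]++;
        return -1;
    }
    if (x == 0) {
        probe_hits[PROBE_ZERO]++;
        return 0;
    }
    probe_hits[PROBE_POS]++;
    return 1;
}

/* Clears all probe counters before a new test run. */
void coverage_reset(void)
{
    for (size_t i = 0; i < (size_t)PROBE_COUNT; ++i) {
        probe_hits[i] = 0u;
    }
}

/* Percentage of probes executed at least once, rounded down. */
unsigned coverage_percent(void)
{
    unsigned covered = 0u;
    for (size_t i = 0; i < (size_t)PROBE_COUNT; ++i) {
        if (probe_hits[i] > 0u) {
            ++covered;
        }
    }
    return (covered * 100u) / (unsigned)PROBE_COUNT;
}
```

If the requirements-based tests exercise only a positive and a negative input, coverage stops at 66%. The uncovered zero branch points either to a missing requirement-based test or to code that no requirement justifies.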

When building a secure embedded system, adopting and enforcing a secure programming standard is essential to identifying and removing potentially exploitable vulnerabilities, whether they take the form of defects or known weaknesses. Using a workflow manager to host the static- and dynamic-analysis tools, as well as the results generated throughout the secure software development process, makes generating the documentation required for certification far more straightforward. All project artifacts can be accessed from the tool, which helps in preparing the data presented to the certification authorities.
