Download this article in PDF format.

Formal verification, which uses mathematical analysis rather than simulation tests, has been available in commercial EDA tools for more than 20 years and in academia much longer. As with many new technologies, initial adoption was slow and limited to companies who had in-house formal experts. This has changed dramatically in the last dozen years or so. Almost every chip-development team makes some use of formal tools, and the market continues to grow. Nevertheless, some myths about formal persist, and they may still be deterring some engineers who could benefit from it. It’s time for the truth to be told.

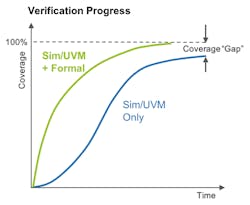

The main attraction of a formal methodology is clear: Exhaustive analysis of a semiconductor design. Simulation provides only scattershot verification by its very nature. No matter how long simulation (or emulation) runs, only a tiny portion of possible design behavior will be exercised. Unverified scenarios may hide serious bugs.

Since formal verification is exhaustive, it considers all legal design behavior over all time throughout the entire design. It finds corner-case design bugs, but also proves that no bugs are remaining. It relies on assertions about intended design functionality and constraints to keep the analysis restricted to legal behavior. This ensures that no false “bugs” are found by violating the input rules or protocols for which the chip was designed.

1. Formal verification can only be performed by PhDs.

This was largely the case for the early academic tools targeted at research projects rather than industrial applications. Today, proving end-to-end properties for large designs may require significant formal expertise, though not a PhD in mathematics. Moreover, many applications of formal analysis are being used every day by designers and verification engineers who have no special training. The tools, languages, and methodologies have all improved a great deal since the pioneer days.

2. It takes a lot of work to get results from formal tools.

This is another myth that applied to early tools, but is no longer the case. Electronic design automation (EDA) vendors offer a wide range of formal applications (apps) that run automatically on the design and deliver results immediately. These include such important verification challenges as connectivity checking, tracing clock and reset networks, avoiding propagation of unknown values, and analyzing the effects of random faults during chip operation.

3. Formal results require writing lots of assertions and constraints.

One of the advantages of formal apps is that they don’t require any assertions to be written by the development team. These tools generate their own assertions from the design and related supplemental files. Just as with user-written assertions, the generated assertions are analyzed to find design bugs and then prove that no further bugs exist. In some cases, the analysis can be refined by user-provided constraints, but these tend to be quite simple.

4. Assertions and constraints for formal verification are hard to write.

This myth also applies to early academic tools, which often used abstruse mathematical expressions as inputs. Today, SystemVerilog Assertions (SVA) are a subset of the widely used SystemVerilog design and verification language. Some formal tools also support SVA with designs written in VHDL and SystemC. All designers and verification engineers receive training in SystemVerilog, so expressing assertions and constraints is easy and natural.

5. It’s hard to write assertions for complex protocols.

Capturing all rules for a complex interface protocol isn’t a simple task, but it has more to do with the complexity of the rules than the format used. Writing a Universal Verification Methodology (UVM) simulation model isn’t simple either. Pre-packaged verification intellectual property (VIP) for standard protocols is commercially available for UVM simulations. Formal tool vendors have extended this approach to make standards-based assertion-based VIP available as well (see figure).

The combination of UVM-based simulation and formal verification eliminates the coverage gap associated only with simulation and UVM models.

6. There’s no way to know when enough assertions have been written.

This limitation has only been overcome fairly recently, with the “model-based mutation coverage” metrics provided by OneSpin’s tools. Other methods of estimating assertion completeness are overly optimistic, resulting in missed bugs. Mutation coverage reports the portions of the design in which a bug would not be detected by any existing assertion. This makes it easier for designers or verification engineers to determine which assertions must be added for full coverage.

7. Formal verification works only on small design blocks.

Once again, this is a myth whose roots lie in experience with early formal tools. Every aspect of formal technology, from the underlying algorithms to ease of use, has been improved and continues to improve. Though running end-to-end assertions on large designs takes effort, it’s feasible today. In addition, many apps focusing on specific verification challenges automatically minimize the formal model, so they routinely run on complete chips.

8. Formal verification doesn’t work on data paths.

Users traditionally focused formal tools on control logic where bugs lurked due to combinations of corner-case conditions never hit in simulation. But some of these conditions often derive from data-path logic––an arithmetic overflow, for example. Today’s leading formal tools handle both control and data equally well. In fact, Xilinx recently presented a paper at DVCon documenting the full formal proof of a 32-bit multiplier, long considered impossible.

9. Formal equivalence checking is limited to application-specific integrated circuits (ASICs).

Equivalence checking is formal verification in which two designs are compared, rather than one design and a set of assertions. Equivalence checking is routinely applied in ASIC development. One common use is to prove that the register-transfer-level (RTL) design and its corresponding synthesis netlist implement exactly the same functionality. Historically, tools have been able to handle only combinational logic, so there had to be a 1-to-1 mapping between state elements in the two designs.

Commercially available formal-verification tools include sequential equivalence checkers that compare two designs even when state elements don’t match. A good example is when timing has been optimized by moving logic across register stages. This is crucial to enable the application of formal equivalence checking to field-programmable gate-array (FPGA) design flows, where many optimizations change the state between RTL and netlist.

10. There’s no way to integrate coverage results from simulation and formal.

One common past complaint from chip project managers was that formal teams “worked off in the corner” and it was hard to assess their contribution to the verification effort. This is no longer a major issue due to the Unified Coverage Interoperability Standard (UCIS) and various EDA vendor partnerships. These provide ways to have formal verification and simulation work on some of the same coverage targets and for their results to be integrated.

11. There’s no way to get a unified view of verification progress.

Beyond integrating coverage metrics, project managers need to have a single view of verification progress across all techniques. Today, verification engineers can annotate the verification plan to indicate which goals will be addressed by simulation, emulation, and formal tool. Results, including uniquely formal metrics such as bounded and complete proofs, can be reported back against the plan.

This final myth can’t be entirely dismissed. The complex web of EDA vendor partnerships and the lack of an accepted industry standard for verification plans means that not all combinations of tools provide seamless interoperability. However, this should not dissuade potential users from adopting and embracing formal verification, secure that none of the other myths will impede their progress.

Tom Anderson is technical marketing consultant at OneSpin Solutions.