Machine learning (ML) has taken the developer community by storm, but implementing many algorithms with any efficiency has required FPGAs, multicore CPUs, or high-performance GPGPUs. Developers have used ML on microcontrollers, but the models they can run are more limited. ML performance on these smaller conventional platforms is also slower: useful, but not capable of handling more ambitious projects.

This is about to change with Arm’s announcement of the Cortex-M55 and the Ethos-U55. The Cortex-M55 can be used alone because it has its own ML augmentation. The Ethos-U55 can be added if ML applications are especially demanding. It could be paired with other Cortex-M platforms, too, but pairing it with the Cortex-M55 makes more sense because ML work can then be distributed across both.

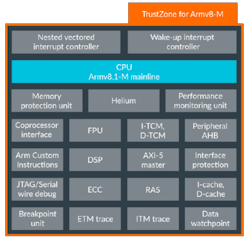

The Cortex-M55 (Fig. 1) is built around a conventional Cortex-M core that includes Helium, the ARMv8.1-M hardware-acceleration support for machine learning. Helium adds 128-bit vector extensions, including gather/scatter support and complex math support such as an 8-bit vector dot product. This enables the Cortex-M55 to deliver 15X the performance of a basic Cortex-M platform.
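
To make the 8-bit vector dot product concrete, here is a minimal Python sketch that models the idea rather than the hardware. The only figure taken from the text is the 128-bit vector width, which holds 16 int8 values. The function names (`to_int8`, `dot_int8_scalar`, `dot_int8_vectorized`) are hypothetical. The "steps" it counts are loop iterations, not processor cycles, so it says nothing about the real speedup.

```python
# Illustrative model only: mimics how a 128-bit vector unit could consume
# an int8 dot product 16 lanes at a time. These are not Helium intrinsics.

LANES = 128 // 8  # 16 int8 values fit in one 128-bit vector


def to_int8(x):
    """Wrap an integer into the signed 8-bit range [-128, 127]."""
    return ((x + 128) % 256) - 128


def dot_int8_scalar(a, b):
    """Reference version: one multiply-accumulate per loop iteration."""
    acc = 0
    for x, y in zip(a, b):
        acc += x * y
    return acc


def dot_int8_vectorized(a, b):
    """Same result, but consuming LANES elements per step.

    Returns (result, steps) so the step count can be compared
    with the length of the scalar loop.
    """
    if len(a) != len(b):
        raise ValueError("inputs must have equal length")
    acc = 0
    steps = 0
    for i in range(0, len(a), LANES):
        chunk_a = a[i:i + LANES]
        chunk_b = b[i:i + LANES]
        # One modeled "vector operation": multiply every lane pair and
        # add the products into a wide accumulator.
        acc += sum(x * y for x, y in zip(chunk_a, chunk_b))
        steps += 1
    return acc, steps


if __name__ == "__main__":
    a = [to_int8(i * 7) for i in range(64)]
    b = [to_int8(i * 3 - 50) for i in range(64)]
    result, steps = dot_int8_vectorized(a, b)
    assert result == dot_int8_scalar(a, b)
    print(f"{len(a)} elements processed in {steps} vector steps")
```

The products are summed into a wider accumulator because a long run of int8 multiplications quickly exceeds the 8-bit range. Dot products of this kind sit at the core of quantized neural-network layers.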

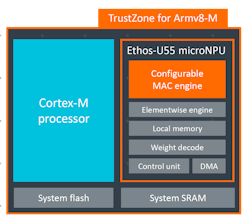

The Ethos-U55 (Fig. 2) is what Arm calls a microNPU (neural processing unit). It can provide 32X the performance of a base Cortex-M. Together, the Ethos-U55 and Cortex-M55 deliver up to 480X the ML performance of a non-accelerated microcontroller, a figure that matches the product of the two individual gains (15 × 32).

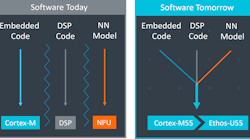

The microNPU is designed to handle the ML heavy lifting while the Cortex-M manages the system and takes on lightweight ML models. This distributed approach allows many models to be employed in the system. It also lets developers balance performance and power. It's possible to employ just the Cortex-M55 for lighter-weight applications, using less power while the Ethos-U55 is powered down. When it is switched on, the Ethos-U55 can efficiently handle video streams that would cripple lesser systems.

The Ethos-U55's scalable design can contain 32, 64, 128, or 256 MACs. Its DMA support moves model weights and handles on-the-fly decompression, and there's also a built-in weight decoder. The accelerator communicates with the micro via system SRAM. The Ethos-U55 can be configured in a number of ways that run applications in parallel with the micro.
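
A rough sense of what the four MAC options mean can be had from a back-of-the-envelope calculation. The sketch below rests on assumptions that are not in the announcement: that each MAC unit completes one multiply-accumulate per clock cycle, that one MAC counts as two operations, and a placeholder 500-MHz clock (`ASSUMED_CLOCK_HZ`). Only the MAC counts come from the text, so treat the results as theoretical ceilings for illustration, not Arm specifications.

```python
# Back-of-the-envelope estimate only. The clock frequency and the
# one-MAC-per-unit-per-cycle model are assumptions, not figures from Arm.

MAC_CONFIGS = (32, 64, 128, 256)  # MAC options named in the article
ASSUMED_CLOCK_HZ = 500_000_000    # hypothetical 500-MHz clock


def peak_macs_per_second(mac_units, clock_hz):
    """Theoretical peak, assuming every MAC unit is busy on every cycle."""
    return mac_units * clock_hz


def peak_gops(mac_units, clock_hz):
    """Peak giga-operations per second, counting one MAC as two ops."""
    return 2 * peak_macs_per_second(mac_units, clock_hz) / 1e9


if __name__ == "__main__":
    for macs in MAC_CONFIGS:
        gops = peak_gops(macs, ASSUMED_CLOCK_HZ)
        print(f"{macs:>3} MACs -> {gops:.0f} GOPS theoretical peak")
```

Under this model, the theoretical peak scales linearly with the MAC count, so the 256-MAC configuration has eight times the ceiling of the 32-MAC one. Sustained throughput would also depend on how quickly weights and activations can be moved through system SRAM.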

Both platforms support popular ML frameworks. For example, the Cortex-M55 can run TensorFlow Lite models. Both will handle multiple models at the same time. A unified development tool chain allows models to target either platform.

Expect to see chips based on the Cortex-M55, and on the Cortex-M55 paired with an Ethos-U55, in about a year. A Corstone-300 reference design is available to get chip developers started.

“Enabling AI everywhere requires device makers and developers to deliver machine learning locally on billions, and ultimately trillions, of devices,” said Dipti Vachani, senior vice president and general manager, Automotive and IoT Line of Business, Arm. “With these additions to our AI platform, no device is left behind as on-device ML on the tiniest devices will be the new normal, unleashing the potential of AI securely across a vast range of life-changing applications.”

The Cortex-M55/Ethos-U55 sets the bar for microcontroller-based artificial intelligence (AI). ML applications that once targeted higher-end platforms will be practical on this micro-based solution, which will consume less space and power.