Matrox MGA: The Longest-Produced Graphics Chip Ever

Series: The Graphics Chip Chronicles

Dorval, Canada-based Matrox is the oldest continuously operating graphics add-in board (AIB) company in the world. It was founded in 1976, before IBM introduced the PC, and its first AIB, the ALT-256 for S-100 bus computers, was released in 1978. ATI started seven years after that (also in Canada), and Nvidia started eight years after ATI. Hercules developed its first AIB in 1982, four years after Matrox’s ALT-256.

Companies in those days built AIBs from discrete logic plus an integrated chip known as a CRT controller, or CRTC. The CRTC handled the display timing, and displays were based on TV standards. Traces of that TV heritage remain today in the 60 Hz frame sync used in games and supported by all modern AIBs and GPUs.

NEC developed a commercial graphics LSI chip in 1982, and many AIB suppliers used it, including Artist Graphics. In 1992, Artist Graphics became the first private company to develop a proprietary graphics chip. Matrox developed its own graphics ASIC in 1994. Artist Graphics shut down in 1995; Matrox is still shipping AIBs. Committing to an ASIC for internal use only was a big step for a company in those days, given the small, albeit growing, market. Nvidia introduced its NV1 in 1995, but Matrox beat it to market with the Matrox Impression, which came out in 1994.

What makes the Matrox MGA noteworthy is its longevity—in the semiconductor industry that’s called “having legs.”

The Matrox Impression Plus 220 ISA (MGA-IMP+/A/220) AIB was based on the IS-ATHENA R1 graphics chip and came with 2 MB of VRAM.

Matrox extended the life of the MGA, and during the 1990s it produced the Matrox Millennium and Mystique series AIBs:

- 1994: Matrox introduced the Matrox Impression, an AIB that worked in conjunction with a Millennium card to provide 3D acceleration.

- 1996: Matrox introduced the Mystique.

- 1997: Matrox introduced the MGA-based Millennium II AIB.

- 1998: The company introduced the Millennium G200 AGP with 8 MB.

Matrox unveiled the Mystique in May 1996. It was based on the MGA-1064SG, a new SGRAM 3D-Video Graphics Controller, and the tweaked design included hardware texture mapping with texture compression.

Matrox was known for years as a significant player in the high-end 2D graphics accelerator market. Its AIBs were excellent Windows accelerators, and some of the later boards excelled at MS-DOS as well. Matrox introduced its Impression Plus in 1994 as one of the first 3D accelerator boards, but that board could accelerate only a limited feature set (no texture mapping) and was targeted primarily at CAD applications.

Seeing the slow but steady growth of interest in 3D graphics on PCs, spurred by new AIBs from Nvidia, Rendition, and ATI, Matrox began experimenting with 3D acceleration more aggressively and produced the Mystique. The Mystique was Matrox’s most feature-rich 3D accelerator in 1997, but it still lacked key features, including bilinear filtering. Then, in early 1998, Matrox teamed up with PowerVR to produce an add-in 3D board called the Matrox m3D, using the PowerVR PCX2 chipset. This was one of the very few times Matrox outsourced its graphics processor, and it was certainly a stopgap measure to hold out until the G200 project was ready.

The Mystique was designated as the successor to Matrox’s successful MGA Millennium. Based on the MGA-1064SG, the company’s fifth-generation home-grown graphics/video accelerator, the Mystique was the first product Matrox explicitly targeted at the consumer market.

The MGA-1064SG was a 64-bit SGRAM controller with a 32-bit VGA core, and the chip integrated a 135 MHz triple 8-bit RAMDAC. The PCI bus-mastering controller also incorporated hardware 3D texture mapping, including a hardware divide engine for efficient perspective correction of texture maps. The chip had a Gouraud shading engine and supported CLUT 4 (4-bit color look-up table) and CLUT 8 texture compression, allowing developers to reduce the size of source texture maps by 4:1 or 2:1, respectively. Each source texture map could have its own 256-color palette.
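To make the CLUT arithmetic concrete, here is a minimal sketch of palette-indexed texture storage. It assumes 16-bit source texels (consistent with CLUT 8 texels being expanded to 16-bit RGB), ignores the palette’s own storage when computing the ratio, and uses hypothetical helper names; it is a generic illustration, not Matrox’s actual in-memory texture format.

```python
# Generic sketch of palette (CLUT) texture compression.
# Assumption: 16-bit source texels; NOT Matrox's actual hardware texture format.
SOURCE_BITS = 16


def index_bits(palette_entries: int) -> int:
    """Bits per texel index: 16 entries (CLUT 4) -> 4, 256 entries (CLUT 8) -> 8."""
    return (palette_entries - 1).bit_length()


def compression_ratio(palette_entries: int) -> float:
    """Source texel size divided by index size (palette storage not counted)."""
    return SOURCE_BITS / index_bits(palette_entries)


def pack_clut4(indices: list[int]) -> bytes:
    """Pack 4-bit indices two per byte, low nibble first."""
    if len(indices) % 2:
        indices = indices + [0]  # pad to an even count
    return bytes(lo | (hi << 4) for lo, hi in zip(indices[0::2], indices[1::2]))


def unpack_clut4(data: bytes, count: int) -> list[int]:
    """Recover `count` 4-bit indices from packed bytes."""
    out: list[int] = []
    for byte in data:
        out.extend((byte & 0x0F, byte >> 4))
    return out[:count]


def expand(indices: list[int], palette: list[int]) -> list[int]:
    """Look each index up in the texture's own palette to get 16-bit texels."""
    return [palette[i] for i in indices]


if __name__ == "__main__":
    palette = [0xF800, 0x07E0, 0x001F, 0xFFFF] + [0x0000] * 12  # 16-entry RGB565 palette
    texels = [0, 1, 2, 3, 3, 2, 1, 0]
    packed = pack_clut4(texels)
    print(len(texels) * SOURCE_BITS // 8, "bytes raw,", len(packed), "bytes packed")
    print(compression_ratio(16), compression_ratio(256))
```

Run as a script, this prints `16 bytes raw, 4 bytes packed` and then `4.0 2.0`: under the 16-bit assumption, 4-bit indices give the 4:1 reduction and 8-bit indices the 2:1 reduction, and because each texture carries its own palette, `expand` is simply a per-texture table lookup.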

According to the company at the time, the MGA-1064SG could process 25 million texels per second, where each texel was perspective-correct, Gouraud-shaded, transparent, CLUT 8-expanded to 16-bit RGB, and Z-buffered. Developers could enable a 16-bit hardware Z-buffer held in the shared SGRAM, and texture transparency was controlled by a single bit.
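A quick budget shows what sharing the SGRAM between the frame buffers, the Z-buffer, and textures means in practice. This sketch assumes a 640x480 display, 16-bit color, double buffering, and a 16-bit Z-buffer all living in on-board memory. Those are illustrative choices, not Matrox specifications, and the function names are hypothetical. The last helper converts the quoted 25 million texels per second into full-screen passes.

```python
# Illustrative assumptions: 640x480, 16-bit color, double buffering, 16-bit Z,
# with color buffers, Z-buffer, and textures all sharing one pool of SGRAM.
MB = 1024 * 1024


def buffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Size of one full-screen buffer in bytes."""
    return width * height * bits_per_pixel // 8


def texture_budget(vram_bytes: int, width: int, height: int,
                   color_bits: int = 16, z_bits: int = 16, color_buffers: int = 2) -> int:
    """Bytes left for textures after the color buffers and Z-buffer are carved out."""
    used = (color_buffers * buffer_bytes(width, height, color_bits)
            + buffer_bytes(width, height, z_bits))
    return vram_bytes - used


def screen_fills_per_second(texels_per_second: float, width: int, height: int) -> float:
    """Full-screen passes per second at one texel per pixel, ignoring overdraw."""
    return texels_per_second / (width * height)


if __name__ == "__main__":
    for size_mb in (2, 4):
        left = texture_budget(size_mb * MB, 640, 480)
        print(f"{size_mb} MB board: {left} bytes left for textures")
    print(f"{screen_fills_per_second(25e6, 640, 480):.1f} full-screen fills/s")
```

Under these assumptions a 2 MB board keeps only 253,952 bytes (248 KB) for textures, against 2,351,104 bytes on a 4 MB board, which helps explain why compact CLUT textures were attractive. The quoted texel rate works out to about 81 single-textured full-screen passes per second at 640x480 before any overdraw.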

The MGA-1064SG’s video engine implemented both X and Y interpolation, which Matrox said was an improvement over the earlier-generation MGA-2064W used in the Millennium, and it provided hardware color space conversion.
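The two video-engine operations named above can be sketched in software. This example assumes full-range BT.601 YCbCr coefficients for the color space conversion and plain bilinear filtering for the X and Y interpolation; Matrox’s actual coefficients and filter design are not described here, and the function names are hypothetical.

```python
# Sketch only. Assumptions: full-range BT.601 YCbCr coefficients and simple
# bilinear filtering; not Matrox's documented coefficients or filter taps.


def clamp8(x: float) -> int:
    """Round and clamp to the 0..255 range of an 8-bit channel."""
    return max(0, min(255, round(x)))


def yuv_to_rgb(y: int, u: int, v: int) -> tuple[int, int, int]:
    """Color space conversion for one 8-bit YUV (YCbCr) sample."""
    cb, cr = u - 128, v - 128
    r = y + 1.402 * cr
    g = y - 0.344136 * cb - 0.714136 * cr
    b = y + 1.772 * cb
    return clamp8(r), clamp8(g), clamp8(b)


def scale_bilinear(src: list[list[float]], out_w: int, out_h: int) -> list[list[float]]:
    """Resize a 2D grid by interpolating horizontally (X) and vertically (Y)."""
    in_h, in_w = len(src), len(src[0])
    out = []
    for j in range(out_h):
        fy = j * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(fy)
        y1 = min(y0 + 1, in_h - 1)
        ty = fy - y0
        row = []
        for i in range(out_w):
            fx = i * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(fx)
            x1 = min(x0 + 1, in_w - 1)
            tx = fx - x0
            top = src[y0][x0] * (1 - tx) + src[y0][x1] * tx     # X interpolation, upper row
            bottom = src[y1][x0] * (1 - tx) + src[y1][x1] * tx  # X interpolation, lower row
            row.append(top * (1 - ty) + bottom * ty)            # Y interpolation between rows
        out.append(row)
    return out


if __name__ == "__main__":
    print(yuv_to_rgb(128, 128, 128))
    print(scale_bilinear([[0, 10], [20, 30]], 3, 3))
```

Scaling the 2x2 grid to 3x3 yields `[[0.0, 5.0, 10.0], [10.0, 15.0, 20.0], [20.0, 25.0, 30.0]]`. The center value of 15.0 needs interpolation in both directions; an X-only scaler would have to duplicate or drop source rows instead.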

Game and 3D API support included DirectDraw and Direct3D, as well as Criterion’s RenderWare. As was typical at the time, Matrox also had an in-house 3D API called MSI (Matrox Simple Interface).

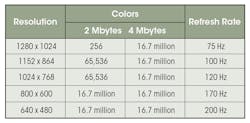

The MGA Millennium was both a critical and a popular favorite. Its success as a game platform surprised Matrox, particularly since gamers had scorned the Millennium’s predecessors for their lackluster VGA performance. As the first mainstream graphics accelerator with hardware 3D, the Millennium rode the wave of 3D hype and cast Matrox in a favorable light, even though the company wasn’t positioning the product for interactive entertainment applications. The Mystique stood to benefit from this history. It sold for $279 for a 2 MB board, $149 for a 2 MB SGRAM upgrade module, and $399 for a factory-configured 4 MB board.

With the G200, Matrox sought to combine its past products’ 2D and video acceleration with a full-featured 3D accelerator. The G200 chip powered the Millennium G200 and Mystique G200.

G200 was Matrox’s first fully AGP-compliant graphics processor. The earlier Millennium II had an AGP interface but did not support the full AGP feature set. The G200 took advantage of DIME (Direct Memory Execute) to speed texture transfers to and from main system RAM, which let the G200 use system RAM as texture storage when the AIB’s local RAM was too small for the task at hand. The G200 was one of the first cards to support this feature.
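The practical effect of DIME is that running out of local memory no longer stops a texture from being used. The sketch below is a hypothetical allocator in that spirit: it prefers on-board memory and otherwise leaves the texture in AGP-visible system RAM for the chip to read directly. The class, its fields, and the simple size bookkeeping are illustrative assumptions, not Matrox’s driver logic.

```python
# Hypothetical texture placement with an AGP fallback, in the spirit of DIME.
# Names and bookkeeping are illustrative, not Matrox's actual driver code.
from dataclasses import dataclass, field


@dataclass
class TextureHeap:
    local_free: int  # bytes free in on-board memory
    agp_free: int    # bytes free in the AGP-visible system RAM region
    placements: dict[str, str] = field(default_factory=dict)

    def allocate(self, name: str, size: int) -> str:
        """Place a texture, preferring local memory; return 'local' or 'agp'."""
        if size <= self.local_free:
            self.local_free -= size
            where = "local"
        elif size <= self.agp_free:
            # No copy into local memory: the chip textures straight from system RAM.
            self.agp_free -= size
            where = "agp"
        else:
            raise MemoryError(f"no room for texture {name!r} ({size} bytes)")
        self.placements[name] = where
        return where


if __name__ == "__main__":
    heap = TextureHeap(local_free=256 * 1024, agp_free=8 * 1024 * 1024)
    for tex, size in [("wall", 128 * 1024), ("floor", 96 * 1024), ("sky", 192 * 1024)]:
        print(tex, "->", heap.allocate(tex, size))
```

Here the first two textures fit in the 256 KB of local memory and the third spills to system RAM. Without direct execution from system memory, a driver would instead have to evict something and copy the texture across the bus before drawing with it.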

The chip had a 128-bit core with dual 64-bit buses in what Matrox called a “Dual Bus” organization. Each bus was unidirectional and designed to speed data transfers to and from the functional units within the chip. By doubling the internal data path with two separate buses instead of a single wider bus, Matrox reduced data-transfer latency by improving overall bus efficiency. The memory interface was 64-bit.
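One way to picture why two unidirectional buses can beat one wider bus is a toy cycle count. This model is an assumption, not the G200’s documented timing. Each operation moves 128 bits. A single 128-bit bidirectional bus does one transfer per cycle but pays an assumed `turnaround` penalty whenever it switches between reads and writes. Each 64-bit one-way bus needs two cycles per transfer, but reads and writes overlap on separate buses. Ordering dependencies are ignored.

```python
# Toy model only; NOT the G200's actual internal timing.
# Assumptions: 128-bit operations, one transfer per cycle on the wide bus plus a
# turnaround penalty on direction changes; two cycles per transfer on each
# 64-bit one-way bus, with reads and writes overlapping. Dependencies ignored.


def single_bus_cycles(ops: str, turnaround: int = 1) -> int:
    """Cycles for a sequence like 'RWRW' on one shared bidirectional bus."""
    cycles = 0
    prev = None
    for op in ops:
        if prev is not None and op != prev:
            cycles += turnaround  # bus idles while it changes direction
        cycles += 1
        prev = op
    return cycles


def dual_bus_cycles(ops: str) -> int:
    """Cycles when reads and writes each have their own half-width bus."""
    return 2 * max(ops.count("R"), ops.count("W"))


if __name__ == "__main__":
    for pattern in ("RW" * 8, "R" * 16):
        print(pattern, single_bus_cycles(pattern), dual_bus_cycles(pattern))
```

In this model the split buses win on interleaved read/write traffic (16 cycles versus 31) but lose on a long one-way burst (32 versus 16). Any real benefit therefore depends on the traffic mix inside the chip and on the actual cost of switching direction, which is consistent with describing the gain as bus efficiency rather than raw width.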

The G200 supported 32-bit color rendering, which substantially improved image quality by eliminating the dithering artifacts caused by the then-typical 16-bit color depth. Matrox called this technology Vibrant Color Quality (VCQ). The chip also supported trilinear mipmap filtering and anti-aliasing (though the latter was rarely used). The G200 could render 3D at every resolution it supported in 2D. Architecturally, the 3D pipeline was a single pixel pipeline with a single texture mapping unit. The core included a RISC processor, the “WARP core,” that implemented a triangle setup engine in microcode.
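The dithering problem comes from how few levels a 16-bit pixel offers per channel. This sketch assumes 5 bits per channel to stand in for 16-bit color (red and blue in RGB565) and 8 bits per channel for 32-bit color. It uses a 4x4 Bayer matrix as one common ordered-dither pattern. It does not model the G200’s hardware, and the function names are hypothetical.

```python
# Assumptions: 5 bits/channel for 16-bit color (red/blue in RGB565), 8 bits/channel
# for 32-bit color, and a 4x4 Bayer ordered dither. Not a model of G200 hardware.
BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]


def to_level(value: int, bits: int, bias: float = 0.5) -> int:
    """Map an 8-bit channel value to one of 2**bits levels (bias 0.5 = round to nearest)."""
    max_level = (1 << bits) - 1
    return min(max_level, int(value * max_level / 255 + bias))


def from_level(level: int, bits: int) -> int:
    """Expand a level back to the 0..255 range for display."""
    max_level = (1 << bits) - 1
    return round(level * 255 / max_level)


def quantize(value: int, bits: int) -> int:
    """Plain rounding to the nearest representable level."""
    return from_level(to_level(value, bits), bits)


def dither(value: int, bits: int, x: int, y: int) -> int:
    """Ordered dither: a per-pixel Bayer threshold replaces the fixed 0.5 bias."""
    bias = (BAYER_4X4[y % 4][x % 4] + 0.5) / 16
    return from_level(to_level(value, bits, bias), bits)


if __name__ == "__main__":
    print(len({quantize(v, 5) for v in range(256)}), "levels at 5 bits")
    print(len({quantize(v, 8) for v in range(256)}), "levels at 8 bits")
    tile = [dither(100, 5, x, y) for y in range(4) for x in range(4)]
    print("plain:", quantize(100, 5), "dithered tile average:", sum(tile) / len(tile))
```

A smooth gradient collapses to 32 distinct red levels at 5 bits versus 256 at 8 bits, which is what produces visible banding. Dithering a flat value of 100 makes the 4x4 tile average 100.5 instead of the plain rounded 99, but it does so by mixing two different output values (99 and 107) across the tile. That pixel-level pattern is the kind of artifact 32-bit rendering avoids.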

The Millennium G200 used the new SGRAM memory and a faster RAMDAC, while the cheaper Mystique G200 used slower SDRAM but gained a TV-out port. Most G200 boards shipped with 8 MB of RAM and were expandable to 16 MB with an add-on module. The AIBs also had connectors for add-on boards that could provide additional functionality. The G200 was Matrox’s first graphics processor to require extra cooling, in the form of a heatsink.

The company produced iteration after iteration of the basic MGA from 1994 through 2001, and in 2002 it introduced its first new architecture in almost a decade, the Parhelia. That Matrox could extend and successfully use the MGA for so long is a tribute to the design and its designers.

In 2014, Matrox announced that, going forward, it would stop making its own graphics chips and would build its graphics AIBs around AMD GPUs.