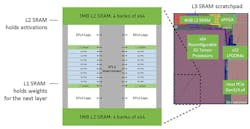

Flex Logix has unveiled its InferX X1 machine-learning (ML) inference system, which is packed into a 54-mm² chip. The X1 incorporates 64 one-dimensional (1D) Tensor processing units (TPUs) linked by the XFLX interconnect (Fig. 1). The dual 1-MB level 2 (L2) SRAM holds activations, while the level 1 (L1) SRAM holds the weights for the next layer of computation. An on-chip FPGA provides additional customization capabilities. There’s also a 4-MB level 3 (L3) SRAM, an LPDDR4 interface, and a x4 PCI Express (PCIe) interface.
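As a rough illustration of what a 1-MB L2 bank means in practice, the sketch below checks whether a layer's activation tensor fits in one bank. It assumes 8-bit (INT8) activations, treats "1 MB" as 2^20 bytes, and uses example layer shapes chosen purely for illustration. None of these figures come from Flex Logix, and the function names are hypothetical.

```python
# Hypothetical sizing helper; INT8 activations and 1 MB = 2**20 bytes are assumptions.
L2_BANK_BYTES = 1 << 20


def activation_bytes(height: int, width: int, channels: int, bytes_per_value: int = 1) -> int:
    """Size of an H x W x C activation tensor (1 byte per value by default)."""
    return height * width * channels * bytes_per_value


def fits_in_l2_bank(height: int, width: int, channels: int, bytes_per_value: int = 1) -> bool:
    """True if the whole activation tensor fits in one assumed 1-MB L2 bank."""
    return activation_bytes(height, width, channels, bytes_per_value) <= L2_BANK_BYTES


if __name__ == "__main__":
    for shape in [(56, 56, 128), (112, 112, 128)]:
        size = activation_bytes(*shape)
        verdict = "fits" if fits_in_l2_bank(*shape) else "does not fit"
        print(shape, size, verdict)
```

Under these assumptions, a 56 × 56 × 128 tensor (401,408 bytes) fits in one bank, while a 112 × 112 × 128 tensor (1,605,632 bytes) does not and would likely need to be tiled or held partly in larger, slower memory such as the L3 SRAM or external LPDDR4.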

The company chose to implement one-dimensional Tensor processors (Fig. 2) that can be combined to handle two- and three-dimensional tensors. The units also support an optional high-precision Winograd acceleration mode. Because the 1D units can be grouped to match the shape of each layer, the approach is more flexible and keeps system utilization high.
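The sketch below illustrates both ideas in the paragraph above using textbook algorithms, not Flex Logix's actual hardware. A 3 × 3 two-dimensional convolution is built by summing three one-dimensional row convolutions, and each row convolution uses the standard Winograd F(2,3) minimal-filtering algorithm, which produces two outputs of a 3-tap filter with four multiplications instead of six. The 3 × 3 kernel, the plain floating-point arithmetic (rather than whatever "high-precision" scheme the X1 uses), and all function names are assumptions for illustration.

```python
from typing import List

Matrix = List[List[float]]
# As in ML frameworks, "convolution" here means correlation (no kernel flip).


def winograd_f23(d: List[float], g: List[float]) -> List[float]:
    """Winograd F(2,3): two outputs of a 3-tap 1D correlation with 4 multiplies instead of 6."""
    d0, d1, d2, d3 = d
    g0, g1, g2 = g
    # Filter-side transforms; in practice these are precomputed once per filter.
    g_plus = (g0 + g1 + g2) / 2
    g_minus = (g0 - g1 + g2) / 2
    m1 = (d0 - d2) * g0
    m2 = (d1 + d2) * g_plus
    m3 = (d2 - d1) * g_minus
    m4 = (d1 - d3) * g2
    return [m1 + m2 + m3, m2 - m3 - m4]


def conv1d_row(x: List[float], g: List[float]) -> List[float]:
    """'Valid' 1D correlation of one row (len >= 3) with a 3-tap filter, tiled into F(2,3) blocks."""
    n_out = len(x) - 2
    out: List[float] = []
    for i in range(0, n_out - 1, 2):
        out.extend(winograd_f23(x[i:i + 4], g))
    if n_out % 2:  # odd output count: compute the last value directly
        i = n_out - 1
        out.append(x[i] * g[0] + x[i + 1] * g[1] + x[i + 2] * g[2])
    return out


def conv2d_from_rows(x: Matrix, k: Matrix) -> Matrix:
    """3x3 'valid' 2D correlation as a sum of three 1D row correlations."""
    out: Matrix = []
    for r in range(len(x) - 2):
        acc = conv1d_row(x[r], k[0])
        for kr in (1, 2):
            row = conv1d_row(x[r + kr], k[kr])
            acc = [a + b for a, b in zip(acc, row)]
        out.append(acc)
    return out


def conv2d_direct(x: Matrix, k: Matrix) -> Matrix:
    """Reference 3x3 'valid' 2D correlation with the usual 9 multiplies per output."""
    return [
        [sum(x[r + i][c + j] * k[i][j] for i in range(3) for j in range(3))
         for c in range(len(x[0]) - 2)]
        for r in range(len(x) - 2)
    ]


if __name__ == "__main__":
    x = [[float((3 * r + 5 * c) % 7 - 3) for c in range(7)] for r in range(6)]
    k = [[1.0, -2.0, 0.0], [3.0, 1.0, -1.0], [0.0, 2.0, 1.0]]
    fast, ref = conv2d_from_rows(x, k), conv2d_direct(x, k)
    err = max(abs(a - b) for fr, rr in zip(fast, ref) for a, b in zip(fr, rr))
    print(f"max abs difference vs. direct convolution: {err}")
```

The row decomposition is what lets 1D units cover 2D (and, by summing over channels as well, 3D) work. Winograd trades multiplications for extra additions and transforms, which is one reason numerical precision becomes a concern in hardware implementations.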

The simplified design and the underlying embedded FPGA (eFPGA) architecture allow the system to be reconfigured rapidly and enable layers to be “fused” together. This means intermediate results can be passed directly to the next layer instead of being written to memory and read back, a round trip that slows down the overall system (Fig. 3). The cost of moving data around ML hardware is often hidden, but it can have a significant impact on system performance.
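A deliberately simplified sketch of why fusion saves memory traffic: two hypothetical element-wise layers are run either unfused (the first layer's full output is written to memory and read back) or fused (each value flows straight into the second layer). The toy traffic counter, the INT8 byte size, and the layer functions are assumptions; real fused convolution layers need line or tile buffers, which this sketch ignores.

```python
from typing import Callable, List, Tuple

Layer = Callable[[float], float]
BYTES_PER_VALUE = 1  # assumption: INT8 activations


def run_unfused(x: List[float], first: Layer, second: Layer) -> Tuple[List[float], int]:
    """Each layer reads its input from memory and writes its full output back."""
    intermediate = [first(v) for v in x]     # read x, write intermediate
    out = [second(v) for v in intermediate]  # read intermediate, write out
    values_moved = len(x) + 2 * len(intermediate) + len(out)
    return out, values_moved * BYTES_PER_VALUE


def run_fused(x: List[float], first: Layer, second: Layer) -> Tuple[List[float], int]:
    """Each value flows straight from the first layer into the second."""
    out = [second(first(v)) for v in x]      # read x, write out; no intermediate buffer
    values_moved = len(x) + len(out)
    return out, values_moved * BYTES_PER_VALUE


def scale(v: float) -> float:  # hypothetical first layer
    return 0.5 * v


def relu(v: float) -> float:   # hypothetical second layer
    return max(v, 0.0)


if __name__ == "__main__":
    x = [float(i - 500) for i in range(1000)]
    out_u, bytes_u = run_unfused(x, scale, relu)
    out_f, bytes_f = run_fused(x, scale, relu)
    assert out_u == out_f  # same results, different traffic
    print(f"unfused: {bytes_u} bytes, fused: {bytes_f} bytes")
```

For this two-layer chain, fusion halves the memory traffic (4,000 versus 2,000 bytes for 1,000 values) while producing identical results; the savings compound as more layers are fused.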

The inclusion of an eFPGA and a simplified, reconfigurable TPU architecture makes it possible for Flex Logix to provide a more adaptable ML solution. It can handle a standard conv2d model as well as a depthwise conv2d model.
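To make the difference between the two layer types concrete, the sketch below counts multiply-accumulates (MACs), weights, and the length of the dot product (reduction) behind each output value for a standard and a depthwise 3 × 3 layer. The layer shape is an arbitrary example, and the depthwise channel multiplier of 1 is an assumption. The very short reduction of depthwise layers is a commonly cited reason they map poorly onto hardware built around long dot products; that is general background, not a Flex Logix claim.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ConvShape:
    out_h: int
    out_w: int
    in_ch: int
    out_ch: int
    k: int  # square kernel side


def standard_conv_stats(s: ConvShape) -> Dict[str, int]:
    """Every output channel sums over all input channels and the full k x k window."""
    reduction = s.in_ch * s.k * s.k
    return {
        "macs": s.out_h * s.out_w * s.out_ch * reduction,
        "weights": s.out_ch * reduction,
        "reduction_length": reduction,
    }


def depthwise_conv_stats(s: ConvShape) -> Dict[str, int]:
    """Each channel is filtered independently (channel multiplier 1, so out_ch == in_ch)."""
    reduction = s.k * s.k
    return {
        "macs": s.out_h * s.out_w * s.in_ch * reduction,
        "weights": s.in_ch * reduction,
        "reduction_length": reduction,
    }


if __name__ == "__main__":
    shape = ConvShape(out_h=56, out_w=56, in_ch=128, out_ch=128, k=3)
    print("standard: ", standard_conv_stats(shape))
    print("depthwise:", depthwise_conv_stats(shape))
```

For this example shape, the standard layer needs 462,422,016 MACs with a 1,152-term dot product per output, while the depthwise layer needs 3,612,672 MACs with only a 9-term dot product per output.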

The chips are available separately or on half-height, half-length PCIe boards (Fig. 4). The X1P1 board has a single chip, while the X1P4 incorporates four; both have a x8 PCIe interface and plug into a x8 slot. Flex Logix chose x8 rather than x16 for the X1P4 because server motherboards typically have more x8 slots than x16 slots, and the throughput difference for ML applications is minimal. As a result, more boards can be packed into a server. The X1P1 is only $499, while the X1P4 goes for $999.
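The slot trade-off and the pricing can be checked with quick arithmetic. The sketch assumes PCIe Gen 3 signaling purely for illustration (the article does not state the generation), uses the standard 8-GT/s per-lane rate with 128b/130b encoding, and ignores packet and protocol overhead, so real throughput is somewhat lower. The prices are the ones quoted above; the names are hypothetical.

```python
from typing import Dict, Tuple

# Per-lane signaling rate in GT/s; Gen 3 and Gen 4 both use 128b/130b encoding.
GT_PER_S: Dict[int, float] = {3: 8.0, 4: 16.0}
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbytes_per_s(gen: int, lanes: int) -> float:
    """Approximate one-direction PCIe bandwidth in GB/s, ignoring protocol overhead."""
    return GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes / 8


# (price in USD, chips per board), from the prices quoted in the article
BOARDS: Dict[str, Tuple[int, int]] = {"X1P1": (499, 1), "X1P4": (999, 4)}


if __name__ == "__main__":
    for lanes in (8, 16):
        print(f"Gen 3 x{lanes}: {pcie_gbytes_per_s(3, lanes):.2f} GB/s")
    for name, (price, chips) in BOARDS.items():
        print(f"{name}: ${price / chips:.2f} per chip")
```

Under these assumptions, a x8 link offers roughly 7.9 GB/s in each direction versus about 15.8 GB/s for x16, and the X1P4 works out to about half the per-chip cost of the X1P1 ($249.75 versus $499).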

The X1M, an M.2 version, is expected to be available in 2021. The 22- × 80-mm module has a x4 PCIe interface and targets embedded servers, PCs, and laptops.