Are We Doomed to Repeat History? The Looming Quantum Computer Event Horizon

This article is part of the Library Series: Dealing with Post-Quantum Cryptography Issues.

What you’ll learn:

- The challenges facing security in a post-quantum world.

- The current efforts to create post-quantum cryptography.

- The importance of post-quantum cryptography.

A couple of examples from history highlight our failure to secure the technology that plays an increasingly large role in both our personal lives and business. When computers were first connected to the internet, we had no idea of the Pandora’s Box that was being opened, and cybersecurity wasn’t even considered a thing. We failed to learn our lesson when mobile phones exploded onto the world, and again with IoT, where “fast to market” is still treated as more important than security. This has constantly left cybersecurity “behind the 8 ball” in the ongoing effort to secure data.

As we race toward quantum computing, we’ll see another, and perhaps the greatest, fundamental shift in the way computing is done. Quantum computers promise to deliver an increase in computing power that could spur enormous breakthroughs in researching disease, understanding the global climate, and delving into the origins of the universe.

As a result, the goal of advancing quantum-computing research has rightfully attracted a lot of attention and funding, including $625 million from the U.S. government [1]. However, quantum computing will also make many of our trusted security techniques inadequate, enabling encryption to be broken in minutes or hours instead of the thousands of years it currently takes.

Two hard mathematical problems underpin the security of the most commonly used public-key algorithms today, and a sufficiently powerful quantum computer will be able to solve both efficiently:

- Integer Factorization, used by RSA

- Discrete Logarithm, used by Diffie-Hellman key exchange and Elliptic Curve Cryptography
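To make the dependence on factoring concrete, here is a minimal Python sketch of a "toy" RSA key being broken. It uses classical trial division, not a quantum algorithm, and it works only because the modulus is tiny. The function names and the textbook-sized key (n = 3233, e = 17) are illustrative assumptions rather than anything taken from a real deployment. The point is simply that once the modulus is factored, the private key follows immediately.

```python
# Toy illustration only: real RSA moduli are thousands of bits long and
# cannot be factored this way. Requires Python 3.8+ for pow(e, -1, phi).

def factor_semiprime(n):
    """Return (p, q) with p * q == n, found by classical trial division."""
    if n % 2 == 0:
        return 2, n // 2
    f = 3
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 2
    raise ValueError("n has no nontrivial factors")


def recover_private_exponent(n, e):
    """Derive the RSA private exponent d from the public key (n, e)."""
    p, q = factor_semiprime(n)
    phi = (p - 1) * (q - 1)
    return pow(e, -1, phi)  # modular inverse of e modulo phi


# Hypothetical toy public key.
n, e = 3233, 17
message = 65

ciphertext = pow(message, e, n)           # encrypt with the public key
d = recover_private_exponent(n, e)        # attacker rebuilds the private key
recovered = pow(ciphertext, d, n)         # and decrypts without authorization
print(recovered == message)
```

A large quantum computer would change only the factoring step, turning it from infeasible to practical at commercial key sizes. Everything after that step is ordinary arithmetic.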

As we prepare for a post-quantum world, we have another opportunity to get security right. The challenge of replacing the existing public-key cryptography in these applications with quantum-computer-resistant cryptography is going to be formidable.

Today’s state-of-the-art quantum computers are so limited that while they can break “toy” examples, they don’t endanger commercially used key sizes (such as those specified in NIST SP 800-57). However, most experts agree it’s only a matter of time until quantum computers evolve to the point of being able to break today’s cryptography.

Cryptographers around the world have been studying the issue of post-quantum cryptography (PQC), and NIST has started a standardization process. However, even though we’re likely five to 10 years away from quantum computers becoming widely available, we’re approaching what can be described as the event horizon.

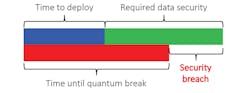

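Data that has been cryptographically protected by quantum-broken algorithms up to Day 0 of the PQC deployment will likely need to remain secure for years (decades in some cases) after quantum computers are in use. This is the concern captured by Mosca’s Theorem (see figure): if the time the data must stay secure plus the time needed to migrate to PQC exceeds the time until quantum computers arrive, that data is already at risk.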
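Mosca’s Theorem is commonly stated as an inequality: if x + y > z, there is a problem, where x is how long the data must remain secure, y is how long migration to quantum-safe cryptography takes, and z is how long until a cryptographically relevant quantum computer exists. The following Python sketch is a small worked example of that arithmetic. The function and parameter names, and the year values in the example, are assumptions chosen for illustration, not estimates from this article.

```python
def mosca_at_risk(shelf_life_years, migration_years, years_to_quantum):
    """Return True when x + y > z, i.e. the data outlives its protection."""
    return shelf_life_years + migration_years > years_to_quantum


def exposure_years(shelf_life_years, migration_years, years_to_quantum):
    """Years during which data must stay secret but can already be broken."""
    gap = shelf_life_years + migration_years - years_to_quantum
    return max(0, gap)


# Hypothetical inputs: data must stay secret for 10 years, migration takes
# 5 years, and quantum computers arrive in 10 years.
print(mosca_at_risk(10, 5, 10))
print(exposure_years(10, 5, 10))
```

With those hypothetical numbers, the sum of shelf life and migration time exceeds the time to quantum computers by five years, which is the window in which previously recorded ciphertext could be decrypted while it still matters.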

Deploying any secure solution takes time. Given the inherently longer development time of chips compared to software, the need to start on chip-based security is even more pressing. Throw in the added challenge that PQC depends on entirely new algorithms, and our ability to protect against quantum computers will take many years to deploy. All this adds up to make PQC a moving target.

PQC Standardization Process

The good news, and I take heart in this, is that we seem to have learned from previous mistakes: NIST’s PQC standardization process is working. The effort has been underway for more than four years and, over three rounds, has narrowed the field from 69 entrants to seven (four in the category of public-key encryption and three in the category of digital signatures).

However, in late January 2021, NIST started reevaluating a couple of the current finalists and is considering adding new entries as well as some of the candidates from the stand-by list. As mentioned previously, addressing PQC isn’t an incremental step. We’re learning as we go, which makes it difficult to know what you don’t know.

The current finalists are heavily skewed toward lattice-based schemes. What NIST’s potential new direction indicates is that, as the community has continued studying the algorithms, lattice-based schemes may not be the holy grail we had first hoped for.

Someone outside the industry may look at that as a failure, but I would argue that’s an incorrect conclusion. Only by trial and error, facing failure and course correcting along the way, can we hope to develop effective PQC algorithms before quantum computers open another, potentially worse cybersecurity Pandora’s box. If we fail to secure the post-quantum world, we risk more catastrophic security vulnerabilities than we’ve ever seen: Aggressors could cripple governments, economies, hospitals, and other critical infrastructure in a matter of hours.

While it’s old hat to say, “It’s time the world took notice of security and gave it a seat at the table,” the time to deliver on that sentiment is now.

Reference

1. Reuters, “U.S. to spend $625 million in five quantum information research hubs”