This article is part of Then and Now in our Series Library

What you'll learn:

- The advent of the cathode ray tube.

- A look at display controllers, including the NEC µPD7220 and IBM VGA.

- How GPUs ushered in a new era of graphics display.

- The latest in graphics, from 3D displays to ray tracing.

A lot has happened over the past 70 years in the electronics and display space. Electronic Design has covered these technologies along the way and continues to report on the latest ones, which are significantly more powerful than those of the past. In fact, for many highly parallel workloads, graphics processors now outperform conventional CPUs.

Displays and printed output have been a way to get information that’s been fiddled with by a computer into a form that we poor humans can understand. Printers are still around and hard copy remains a necessity, but video displays dominate the human-machine interface (HMI) these days. They show up in smartphones, HDTVs, and car navigation systems. Underlying these systems are display processors and, typically, more powerful graphics processing units (GPUs).

The history of graphics processors is an ongoing effort by Jon Peddie in our Graphics Chip Chronicles series. It’s up to Volume 7 and covers dozens of chips. I’m snarfing a significant chunk of this article from that series, which provides more in-depth coverage of each chip.

Rise of the CRTs

We’ll skip past the individual status lights found on early computers to the cathode ray tubes (CRTs), which became ubiquitous with the rise of the television. CRTs were driven by custom controllers that usually processed signals based on analog standards like those from the National Television System Committee (NTSC).

The CRT was versatile in that it supported both vector and raster images (Fig. 1). Actually, the raster image is nothing more than a fixed-format vector image. The difference is that the encoding for a raster image maps to the regular movement of the electron beam, while a vector display moves the beam based on positions provided by the display controller. An analog oscilloscope is an example of a vector display designed to show analog signals versus time.
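To make the raster/vector distinction concrete, here’s a minimal sketch of the beam paths each approach produces. The function names and the display-list format (a list of start/end segments) are hypothetical; a real controller generates analog deflection signals rather than Python tuples.

```python
from typing import Iterator, List, Tuple

Point = Tuple[int, int]

def raster_scan(width: int, height: int) -> Iterator[Point]:
    """Raster display: the beam visits every position in the same fixed order,
    left to right and top to bottom; only the intensity at each spot changes."""
    for y in range(height):
        for x in range(width):
            yield (x, y)

def vector_path(display_list: List[Tuple[Point, Point]]) -> Iterator[Tuple[Point, bool]]:
    """Vector display: the controller steers the beam only to the endpoints it is
    given. Each entry is (start, end); the flag says whether the beam is on."""
    for start, end in display_list:
        yield (start, False)  # beam blanked while repositioning to the start
        yield (end, True)     # beam on while tracing the segment to the end
```

A triangle drawn from a three-segment display list needs only six beam moves on a vector display, whereas a raster display sweeps every pixel position on every refresh regardless of what’s being shown.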

Raster displays are simply a 2D matrix of pixels mapped to a physical display. The value used to encode a pixel varies depending on the device and its controller. A single bit works for a two-level (on/off) monochrome display, while the latest displays use as many as 48 bits to describe a single pixel (16 bits each for red, green, and blue). Encoding schemes include RGB (red, green, blue), YUV, HSI (hue, saturation, intensity), and so on.
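The pixel encodings mentioned above can be sketched in a few lines. This is an illustrative example, not any particular controller’s format: the 48-bit packing order (red in the high bits) is an assumption, and the YUV conversion uses the common BT.601 luma weights with the analog YUV scale factors, which is one of several YUV definitions in use.

```python
def pack_rgb48(r: int, g: int, b: int) -> int:
    """Pack 16-bit red, green, and blue values into one 48-bit pixel word
    (red in the top 16 bits; this ordering is an assumption)."""
    for c in (r, g, b):
        if not 0 <= c <= 0xFFFF:
            raise ValueError("each channel must fit in 16 bits")
    return (r << 32) | (g << 16) | b

def unpack_rgb48(pixel: int) -> tuple:
    """Recover the three 16-bit channels from a 48-bit pixel word."""
    return ((pixel >> 32) & 0xFFFF, (pixel >> 16) & 0xFFFF, pixel & 0xFFFF)

def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Memory needed for a width x height raster, rounded up to whole bytes."""
    return (width * height * bits_per_pixel + 7) // 8

def rgb_to_yuv(r: float, g: float, b: float) -> tuple:
    """Convert normalized RGB (0..1) to YUV using BT.601 luma weights."""
    y = 0.299 * r + 0.587 * g + 0.114 * b  # luma (brightness)
    u = 0.492 * (b - y)                    # blue-difference chroma
    v = 0.877 * (r - y)                    # red-difference chroma
    return (y, u, v)
```

The framebuffer arithmetic shows why bits per pixel matters: a 640 × 480 monochrome bitmap at 1 bit per pixel needs 38,400 bytes, while a 3840 × 2160 display at 48 bits per pixel needs 49,766,400 bytes.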

CRTs were the basis for analog television and early digital displays, and they’ve now been replaced by flat-panel LCD and LED technology. CRTs also were used in computer display terminals, often referred to as video display terminals (VDTs) (Fig. 2).

Enter the Display Controllers

One of the first display controllers listed in the Graphics Chip Chronicles is the NEC µPD7220 Graphics Display Controller (Fig. 3). According to Jon Peddie, “In 1982, NEC changed the landscape of the emerging computer graphics market just as the PC was being introduced, which would be the major change to the heretofore specialized and expensive computer graphics industry.”

The µPD7220 was a 5-V dc part that consumed 5 W and was housed in a 40-pin ceramic package. It had support for a lightpen and could draw arcs, lines, circles, and special characters. The processor featured a sophisticated instruction set along with graphics figure-drawing support and DMA transfer capabilities. It could drive up to 4 Mb (512 kB) of bit-mapped graphics memory, which was a significant amount for the time.
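Hardware line drawing was one of the chores a controller like the µPD7220 took off the host CPU. As an illustration of that kind of integer-only work, here’s a software sketch of Bresenham’s line algorithm, the classic technique for rasterizing lines. We’re not claiming this is how the µPD7220 implements line drawing internally; it simply shows which pixels a line-drawing command has to touch.

```python
from typing import List, Tuple

def bresenham_line(x0: int, y0: int, x1: int, y1: int) -> List[Tuple[int, int]]:
    """Return the pixels on a line from (x0, y0) to (x1, y1) using
    Bresenham's integer-only algorithm (handles all octants)."""
    points = []
    dx = abs(x1 - x0)
    dy = -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy  # running error between the ideal line and the pixel grid
    while True:
        points.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:  # error says: step in x
            err += dy
            x0 += sx
        if e2 <= dx:  # error says: step in y
            err += dx
            y0 += sy
    return points
```

The appeal for 1980s silicon is that the loop uses only additions, comparisons, and a shift-equivalent doubling, with no multiplication or division per pixel.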

A slew of chips followed with increasing functionality and performance. One of the most dominant was the IBM VGA (Fig. 4), which debuted with IBM’s PS/2 line in 1987 and became the standard for the IBM PC and compatibles that brought the personal computer (PC) to the forefront. Its big brother, the IBM XGA, was a superset offering higher resolution and performance.

A significant feature of the VGA was the use of color lookup tables (CLUTs) along with a digital-to-analog converter (DAC) in a single chip. A ROM stored three character maps. The VGA supported up to 256 kB of video memory and offered 16-color and 256-color palettes. Each palette entry had 18-bit color resolution: 6 bits each for red, green, and blue. The VGA offered refresh rates up to 70 Hz. Its 15-pin interface also became a ubiquitous connector on PCs and laptops (Fig. 5).
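The CLUT idea is easy to sketch: each pixel in memory stores a small index, and the lookup table turns that index into an 18-bit color (6 bits per channel) for the DAC. The grayscale palette below is a hypothetical example, and expanding 6-bit values to today’s 8-bit channels by bit replication is our assumption for display on modern hardware, not part of the VGA itself.

```python
from typing import List, Tuple

RGB6 = Tuple[int, int, int]  # one palette entry: 6 bits per channel, 18 bits total

def six_to_eight(v: int) -> int:
    """Expand a 6-bit DAC value (0..63) to 8 bits (0..255) by bit replication."""
    return (v << 2) | (v >> 4)

def make_grayscale_palette() -> List[RGB6]:
    """A hypothetical 256-entry palette: a gray ramp spread over 64 DAC levels."""
    return [(i >> 2, i >> 2, i >> 2) for i in range(256)]

def render_indexed(frame: List[int], palette: List[RGB6]) -> List[Tuple[int, int, int]]:
    """Look up each 8-bit pixel index in the color table, as a CLUT feeding a
    DAC would, and return 8-bit-per-channel colors."""
    return [tuple(six_to_eight(c) for c in palette[index]) for index in frame]
```

The payoff is memory: an 8-bit index per pixel selects any of 256 entries, each chosen from 2^18 = 262,144 possible colors, without storing 18 bits per pixel.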

3D Displays

Three-dimensional displays have been around for a while, from movie theaters using 3D glasses to HDTVs. These days virtual and augmented reality are likely homes for 3D content. There are 3D displays that don’t require glasses for viewing, but they’ve yet to achieve much success at this point.

3Dfx was one of the first companies to deliver 3D support in graphics processors with its Voodoo Graphics chipset, introduced in 1996. Originally delivered as a 3D-only add-on card that worked alongside a separate 2D display adapter, 3Dfx chips eventually found their way into display adapter cards from companies like STB.

3D presentation on 2D displays is another matter that we’ll touch on later.

GPUs Take Over

NVIDIA's GeForce 256, introduced in 1999, was billed as the first fully integrated GPU: a single-chip processor that incorporated transform, lighting, triangle setup and clipping, and rendering (Fig. 6). It delivered 10 Mpolygons/s. Its 256-bit pipeline was built from four 64-bit pixel pipelines.
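“Transform and lighting” refers to per-vertex math that was previously done on the CPU. A minimal software sketch of those two stages follows: a 4 × 4 matrix transform of a vertex and a simple diffuse (Lambertian) lighting term. This illustrates the kind of arithmetic involved, not NVIDIA’s actual pipeline, and the helper names are hypothetical.

```python
import math
from typing import List, Sequence

Mat4 = List[List[float]]

def transform(m: Mat4, v: Sequence[float]) -> List[float]:
    """Transform stage: multiply a 4x4 matrix by a point (x, y, z, 1)."""
    x, y, z = v
    p = [x, y, z, 1.0]  # homogeneous coordinates
    return [sum(m[row][col] * p[col] for col in range(4)) for row in range(4)]

def translation(tx: float, ty: float, tz: float) -> Mat4:
    """A matrix that moves points by (tx, ty, tz)."""
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]

def lambert(normal: Sequence[float], light_dir: Sequence[float]) -> float:
    """Lighting stage: diffuse intensity = max(0, N . L) after normalizing both."""
    n_len = math.sqrt(sum(c * c for c in normal))
    l_len = math.sqrt(sum(c * c for c in light_dir))
    dot = sum(a * b for a, b in zip(normal, light_dir)) / (n_len * l_len)
    return max(0.0, dot)  # surfaces facing away from the light get no diffuse light
```

At 10 million polygons per second, this math runs for tens of millions of vertices every second, which is why moving it into dedicated hardware mattered.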

At this juncture, gaming was a big factor in GPU design. Other GPUs targeted CAD. These days, there are still GPUs optimized for one of many applications. In general, though, they’re all powerful enough to handle most chores, just not necessarily as quickly or efficiently as a GPU optimized for a particular application.

The GPU industry has seen significant consolidation, with AMD, Intel, and NVIDIA now dominating. AMD and NVIDIA sell chips that third parties can incorporate into their products. Some wind up in embedded systems while others target consumers. All three sell their own brands as well.

GPGPUs Add Computational Chores

Initially, GPUs were output-only devices. They were programmable to a degree and typically connected to a display such as a CRT, projector, or flat panel. Unfortunately, GPUs started out as closed, proprietary systems that were only accessible via drivers provided by the chip vendors. Nonetheless, engineers and programmers eyed their parallel processing potential, which often exceeded CPU performance.

Eventually, general-purpose GPU (GPGPU) computing became available. Frameworks like the OpenCL standard and NVIDIA’s proprietary CUDA make these platforms suitable for general processing chores. Programming them differs from programming a CPU, but languages like C were extended to work with the newly exposed hardware.

Part of the challenge was mapping programs to hardware that started out supporting gaming and graphical displays. Mapping 3D environments onto a 2D presentation was one of the driving factors for developing the single-instruction, multiple-data (SIMD) and single-instruction, multiple-thread (SIMT) architectures. The surge in this type of programming is relatively recent, starting about 2006.
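The SIMT programming model can be illustrated without a GPU: the programmer writes one kernel body, and the hardware runs it across many threads, each using its own index to pick its data element. The sketch below emulates a 1D launch sequentially in Python. The names echo CUDA’s blockIdx/blockDim/threadIdx convention, but launch() and saxpy_kernel() are hypothetical helpers, not a real GPU API, and real hardware runs groups of these threads in lockstep rather than one after another.

```python
from typing import Callable, List

def launch(kernel: Callable[..., None], grid_dim: int, block_dim: int, *args) -> None:
    """Emulate a 1D kernel launch: run the same kernel body once per thread,
    passing each thread its block and thread index (sequentially, for clarity)."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)

def saxpy_kernel(block_idx: int, block_dim: int, thread_idx: int,
                 a: float, x: List[float], y: List[float], out: List[float]) -> None:
    """One thread computes one element: out[i] = a * x[i] + y[i]."""
    i = block_idx * block_dim + thread_idx  # global thread index
    if i < len(out):  # guard: the last block may contain spare threads
        out[i] = a * x[i] + y[i]
```

Usage: for 10 elements and 4 threads per block, launch (10 + 4 - 1) // 4 = 3 blocks; the two leftover threads in the last block hit the bounds guard and do nothing.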

Since then, the scope and chip architectures have changed radically, especially with the incorporation of machine-learning (ML) and artificial-intelligence (AI) acceleration. GPGPUs proved far better suited than CPUs to handling AI/ML models, although they’re now being bested by AI/ML accelerators optimized for specific tasks. Likewise, CPU and GPGPU designs now implement AI/ML acceleration, including AI/ML-optimized opcodes and data handling, making them even better suited to these kinds of applications.
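Many AI/ML-oriented opcodes speed up low-precision multiply-accumulate operations, such as dot products of 8-bit integers summed into a wider accumulator. The sketch below shows that pattern in plain Python under simple assumptions (symmetric quantization with one scale factor per vector); it isn’t modeled on any specific instruction set.

```python
from typing import List

def quantize(values: List[float], scale: float) -> List[int]:
    """Map floats to signed 8-bit integers: q = round(v / scale), clamped to [-128, 127]."""
    return [max(-128, min(127, round(v / scale))) for v in values]

def int8_dot(qa: List[int], qb: List[int]) -> int:
    """Multiply int8 pairs and accumulate into a wide (int32-style) sum,
    the operation that AI-oriented dot-product opcodes typically accelerate."""
    acc = 0
    for a, b in zip(qa, qb):
        acc += a * b
    return acc

def approx_dot(a: List[float], b: List[float], scale_a: float, scale_b: float) -> float:
    """Approximate a float dot product via int8 arithmetic, then rescale."""
    return int8_dot(quantize(a, scale_a), quantize(b, scale_b)) * scale_a * scale_b
```

The trade-off is a small loss of precision in exchange for moving and multiplying far fewer bits per operation.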

Originally GPGPUs were a compute island with their own memory and programming elements (Fig. 7). The original split-memory approach was one reason why GPGPU parallel programming object code was delivered in “kernels” that were executed on the GPU. Data and kernels were copied to the GPU’s memory and the resulting data was copied back to the CPU’s memory.
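The split-memory workflow described above (copy data in, run a kernel, copy results out) can be modeled with a toy device object. HypotheticalDevice is purely illustrative, not a real driver or library API, and the 4-byte element size used for counting transfer traffic is an assumption.

```python
from typing import Callable, Dict, List

class HypotheticalDevice:
    """Stand-in for a discrete GPGPU with its own memory (not a real API)."""

    def __init__(self) -> None:
        self.memory: Dict[str, List[float]] = {}
        self.bytes_copied = 0  # traffic across the host/device boundary

    def copy_to_device(self, name: str, host_data: List[float]) -> None:
        self.memory[name] = list(host_data)      # explicit host-to-device copy
        self.bytes_copied += 4 * len(host_data)  # assume 4-byte floats

    def run(self, kernel: Callable[[Dict[str, List[float]]], None]) -> None:
        kernel(self.memory)  # the kernel sees only device memory

    def copy_to_host(self, name: str) -> List[float]:
        data = list(self.memory[name])           # explicit device-to-host copy
        self.bytes_copied += 4 * len(data)
        return data

def square_kernel(mem: Dict[str, List[float]]) -> None:
    """Example kernel: square every element of the 'in' buffer into 'out'."""
    mem["out"] = [v * v for v in mem["in"]]
```

Usage: after copy_to_device("in", [1.0, 2.0, 3.0]), run(square_kernel), and copy_to_host("out"), the host gets [1.0, 4.0, 9.0], and 24 bytes have crossed the boundary. Unifying CPU and GPU memory is about eliminating exactly those copy steps.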

The next step was having the GPU move data around on its own, eventually unifying the CPU and GPU memory spaces, which put the GPU on the same level as a CPU. A CPU is normally still required in a system with one or more GPUs. However, in many instances, the CPU simply handles the initial boot sequence and acts as a traffic cop to manage the overall system. GPUs often deal directly with peripherals and storage.

One reason for unifying the environment is the dominance of PCI Express (PCIe) and the standards built on top of it, such as Non-Volatile Memory Express (NVMe) storage and RDMA over Converged Ethernet (RoCE).

2D, 3D, and Ray Tracing

3D displays are one thing, but these days 2D displays still dominate this space. Mapping 3D environments to 2D displays is one of the major chores performed by GPUs, driven greatly by 3D gaming.

Initially, 3D-to-2D mapping was done without ray tracing because of the overhead required. Graphic artists handled textures and lighting explicitly to mimic how a scene would look in the real world.
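The geometric core of mapping a 3D scene onto a 2D display is perspective projection. Here’s a minimal pinhole-camera sketch, assuming the camera sits at the origin looking down the +z axis and that focal_length is expressed in pixels; texturing, lighting, and clipping are deliberately left out.

```python
from typing import Optional, Tuple

def project(point: Tuple[float, float, float], focal_length: float,
            width: int, height: int) -> Optional[Tuple[int, int]]:
    """Pinhole perspective projection of a camera-space point (x, y, z),
    with the camera looking down +z, onto a width x height pixel grid."""
    x, y, z = point
    if z <= 0:
        return None  # behind the camera: not visible
    # Perspective divide: the farther away a point is, the closer it lands to center.
    sx = focal_length * x / z
    sy = focal_length * y / z
    # Shift to pixel coordinates with the origin at the top-left corner (y grows downward).
    px = int(round(width / 2 + sx))
    py = int(round(height / 2 - sy))
    return (px, py)
```

Doubling a point’s distance halves its offset from the screen center, which is what makes distant objects look smaller.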

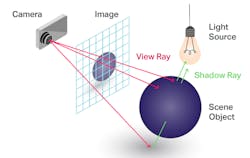

Explicit transformations done by artists and designers provide the fastest but least realistic way of turning a 3D gaming world into a 2D presentation. Ray casting is a fast methodology that provides more realistic rendering based on a 3D model, while ray tracing can approach real-world realism, limited mainly by the resolution of the system (Fig. 8). Check out Jon Peddie’s “What’s the Difference Between Ray Tracing, Ray Casting, and Ray Charles?” article if you get a chance.
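Ray casting fires one ray per pixel and finds the nearest surface it hits; ray tracing goes further by spawning secondary rays (reflections, shadows, refraction) from each hit point. Both rest on intersection tests like the ray/sphere test sketched below. It assumes the ray direction is unit length; spheres are just the simplest case, and production renderers mostly intersect triangles.

```python
import math
from typing import Optional, Sequence

def ray_sphere_hit(origin: Sequence[float], direction: Sequence[float],
                   center: Sequence[float], radius: float) -> Optional[float]:
    """Return the distance t along a unit-length ray to the nearest intersection
    with a sphere, or None if the ray misses.
    Solves |o + t*d - c|^2 = r^2, i.e., t^2 + 2*b*t + c = 0 with b = d.(o - c)."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))  # half of the linear coefficient
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c                               # quarter discriminant (d is unit length)
    if disc < 0:
        return None                                # the ray misses the sphere
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):               # try the nearer root first
        if t > 1e-9:                               # ignore hits behind the ray origin
            return t
    return None
```

A ray caster would call a test like this once per pixel and shade the closest hit; a ray tracer would then launch new rays from that hit point, which is where the compute cost multiplies.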

Ray-tracing hardware, now available from a number of sources, is found in high-end GPGPUs and, increasingly, in embedded GPGPUs such as those intended for mobile devices (e.g., smartphones). These provide amazingly realistic and beautiful images (Fig. 9).

Imagination Technologies identified five levels of ray-tracing support from software through low-level hardware support to full hardware ray-tracing support with a scene hierarchy generator.
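Hardware ray tracing generally organizes the scene into a hierarchy of bounding boxes, so most of the work per ray is a series of cheap ray/box checks before any triangle is examined. Hardware tiers typically begin by accelerating intersection tests like this one. Below is a sketch of the standard “slab” test for an axis-aligned box; it illustrates the technique and isn’t taken from any vendor’s design.

```python
import math
from typing import Sequence

def ray_hits_box(origin: Sequence[float], direction: Sequence[float],
                 box_min: Sequence[float], box_max: Sequence[float]) -> bool:
    """Slab test: does the ray origin + t*direction (t >= 0) pass through
    the axis-aligned bounding box [box_min, box_max]?"""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            if o < lo or o > hi:  # parallel to this slab and outside it
                return False
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d  # where the ray enters/exits this slab
        if t1 > t2:
            t1, t2 = t2, t1
        t_near = max(t_near, t1)  # latest entry across all slabs so far
        t_far = min(t_far, t2)    # earliest exit across all slabs so far
        if t_near > t_far:
            return False          # the entry/exit intervals don't overlap
    return True
```

If a ray misses a box, everything inside it (possibly thousands of triangles) can be skipped, which is why this simple test sits in the innermost loop of hierarchy traversal.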

What makes ray tracing interesting these days is that it’s being done in real time. When ray tracing was first used for computer-generated imagery (CGI) in movie special effects, rendering part of a video clip often took days on a supercomputer of the era. Today it’s accomplished in real time at 4K resolution on a single GPGPU card, and it will eventually be done on a mobile device.
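Some quick arithmetic shows what real-time 4K ray tracing implies. Assuming 60 frames/s and just one primary ray per pixel (both assumptions; real renderers add secondary rays for shadows and reflections, often several per pixel), the ray budget is already close to half a billion rays per second:

```python
def rays_per_second(width: int, height: int, fps: int, rays_per_pixel: int = 1) -> int:
    """Rays that must be traced each second to give every pixel
    rays_per_pixel rays at the given frame rate."""
    return width * height * fps * rays_per_pixel

# 4K UHD is 3840 x 2160 = 8,294,400 pixels per frame.
primary_only = rays_per_second(3840, 2160, 60)       # 497,664,000 rays/s
four_per_pixel = rays_per_second(3840, 2160, 60, 4)  # 1,990,656,000 rays/s
```

Each of those rays also needs many box and triangle tests, which puts the days-per-clip rendering times of early CGI in perspective.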

Not all graphical display applications require this level of performance or sophistication, but computer image and video content continue to dazzle users. Expect the future to make things even more interesting.
