DDR5 DRAM: How a New Interface Improves Performance with Less Power

What you’ll learn:

- Why a new memory interface is needed.

- Features and benefits of DDR5.

- How DDR5 will usher in a new era of composable, scalable data centers.

The move to DDR5 will probably matter more than most other changes you make in your data center this year. Yet you may be only vaguely aware of the transition as DDR5 moves in to replace DDR4 altogether.

It seems inevitable that your processors will change, and that they will have some new memory interface. It’s what happened with the last several DRAM generations, from SDRAM up through DDR4.

But DDR5 is more than simply an interface change. It’s changing the concept of the processor memory system. In fact, the change to DDR5 may be justification enough to upgrade to a compatible server platform.

Let’s see why.

Why a New Memory Interface?

There’s no need to explain that computing problems are becoming increasingly sophisticated. It’s the nature of the business and has been going on since computers first arrived. This inevitable growth has driven not only evolutionary change in the form of higher server counts, growing memory and storage capacities, and greater processor clock speeds and core counts—it’s also driven architectural changes including the recent adoption of disaggregation and the implementation of AI techniques.

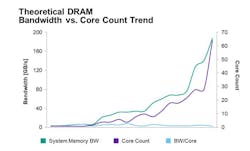

A data-center manager might first think that everything is moving along in sync, since all of these numbers are on the rise. However, while processor core count is increasing, DDR bandwidth hasn’t kept up, so the bandwidth per core has been declining. Figure 1 shows a view up to DDR4.

Since data sets have been ballooning — especially for HPC, gaming, video encoding, machine-learning inference, big data analytics, and in-memory cache/database — other means have been employed to improve the bandwidth of memory transfers. Though more memory channels are being added to CPUs, this consumes significantly more power and drives the processor pin count through the roof, limiting the viability of this approach. In the end, the number of channels can’t increase forever.

Some applications, particularly high-core-count subsystems like GPUs and dedicated AI processors, use an exotic form of memory called high-bandwidth memory (HBM). This technology, which runs data from stacked DRAM chips to the processor through a 1,024-bit memory channel, is a good solution for memory-intensive applications like AI, where processor and memory need to be as close as possible to deliver fast insights. However, it's also more costly and chips can’t be mounted on replaceable/upgradeable modules.

DDR5 was recently introduced to improve the channel bandwidth between the processor and memory, while still supporting upgradability. Let’s check out the features of this new technology.

Bandwidth and Latency

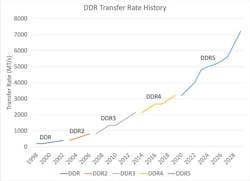

DDR5 runs at faster transfer rates than any prior DDR generation; in fact, its transfer rates will be more than twice those of DDR4. DDR5 also introduces architectural changes that boost the observed data-bus efficiency, so the gain is larger than the increase in raw transfer rate alone would suggest.

Furthermore, the burst length doubles from eight to 16 transfers, enabling two independent subchannels per module and essentially doubling the available channels in the system. You’re not just getting higher transfer speeds; you also get a re-architected memory channel that would outperform DDR4 even without the higher transfer rates.
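To see why the longer burst makes two subchannels practical, it helps to count the bytes each burst delivers. The sketch below is illustrative only. The bus widths (a 64-bit data path on a DDR4 module, two 32-bit data subchannels on a DDR5 module) and the 64-byte cache line are assumptions drawn from common practice rather than from this article, and `bytes_per_burst` is a hypothetical helper name.

```python
# Assumption: a typical CPU cache line is 64 bytes.
CACHE_LINE_BYTES = 64


def bytes_per_burst(data_width_bits: int, burst_length: int) -> int:
    """Bytes delivered by one burst on a channel of the given data width."""
    return data_width_bits * burst_length // 8


# Assumption: DDR4 module = one 64-bit data channel, burst length 8.
ddr4_burst = bytes_per_burst(data_width_bits=64, burst_length=8)

# Assumption: DDR5 module = two 32-bit data subchannels, burst length 16.
ddr5_subchannel_burst = bytes_per_burst(data_width_bits=32, burst_length=16)

print(ddr4_burst, ddr5_subchannel_burst)
```

Under these assumptions, a single DDR5 subchannel still fills one cache line per burst, just as a full DDR4 channel does, so the module can service two independent requests at once.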

Memory-bound processes will realize a big boost from the DDR5 conversion, and many of today’s data-intensive workloads, in particular AI, databases, and online transaction processing (OLTP), fit this description.

But the transfer rates are important. Speeds of today’s DDR5 memories range from 4,800 to 6,400 million transfers per second (MT/s), with higher transfer rates anticipated as the technology matures (Fig. 2).
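As a rough illustration of what these transfer rates mean, the sketch below converts MT/s into theoretical peak bandwidth per module. It assumes a 64-bit data path per module (ECC bits excluded) and uses DDR4-3200 as the comparison point; neither figure comes from this article, and `peak_bandwidth_gbs` is a hypothetical helper. Real sustained bandwidth will be lower than these peaks.

```python
def peak_bandwidth_gbs(mt_per_s: int, data_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes).

    Assumption: every transfer moves data_width_bits of payload.
    """
    bytes_per_transfer = data_width_bits // 8
    return mt_per_s * bytes_per_transfer / 1000


# DDR4-3200 is an assumed baseline; 4,800 and 6,400 MT/s are the
# DDR5 speeds quoted in the text.
for name, rate in [("DDR4-3200", 3200), ("DDR5-4800", 4800), ("DDR5-6400", 6400)]:
    print(f"{name}: {peak_bandwidth_gbs(rate):.1f} GB/s")
# DDR4-3200: 25.6 GB/s
# DDR5-4800: 38.4 GB/s
# DDR5-6400: 51.2 GB/s
```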

Energy Consumption

DDR5 uses a lower voltage than DDR4: 1.1 V rather than 1.2 V. While that 8% difference may not sound like much, power dissipation scales with the square of the voltage: 1.1²/1.2² ≈ 84%, so you get a savings of roughly 16% right there.
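The voltage-squared relationship is easy to check numerically. This minimal sketch assumes that power scales purely with the square of the supply voltage and that everything else (frequency, capacitance, activity) is held equal, which is a simplification; `relative_power` is a hypothetical helper name.

```python
def relative_power(v_new: float, v_old: float) -> float:
    """Power at v_new relative to v_old, assuming power scales with V squared."""
    return (v_new / v_old) ** 2


ratio = relative_power(1.1, 1.2)
print(f"relative power: {ratio:.1%}")   # relative power: 84.0%
print(f"savings: {1 - ratio:.1%}")      # savings: 16.0%
```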

The architectural changes that improve DDR5’s bandwidth efficiency, along with the higher transfer rates, add to the benefit. However, these gains are difficult to quantify without measuring the exact application environment that will use the technology. Put simply, thanks to the improved architecture and higher transfer rates, the end user will see lower energy per bit of data.

Add to this the fact that DDR5 DIMMs regulate their own voltages, which reduces the need for highly regulated, high-current 1.1-V rails on the motherboard and provides additional energy savings.

Savvy data-center managers don’t evaluate only the capital cost of the equipment that goes into the data center; they pay equal attention to the operating budget: How much power does a server consume, and what will it cost to cool? When all of these factors are considered, a more energy-efficient module will almost always tip the decision in favor of the newer technology, and DDR5 will be the memory technology winning that argument.

Error Correction

DDR5 also incorporates on-die error-correction code (ECC). As DRAM process geometries continue to shrink, many users worry about increasing single-bit error rates and overall data integrity.

In the case of server applications, on-die ECC corrects single-bit errors during READ commands prior to outputting data from the DDR5 device. This moves some of the ECC burden from the system’s correction algorithms to the DRAM, lightening the load on the system.

DDR5 also introduces an error check and scrub (ECS) feature: when enabled, the DRAM device reads its internal data and writes back corrected data if an error has occurred.
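The difference between correcting on read and scrubbing can be shown with a toy model. The sketch below uses a small Hamming(7,4) single-error-correcting code purely for illustration; it is not the code DDR5 devices actually use, and the function names and the list standing in for the DRAM array are all hypothetical.

```python
def encode(nibble):
    """Encode 4 data bits as a 7-bit Hamming(7,4) codeword.

    Bit index i of the result holds code position i + 1;
    positions 1, 2, and 4 are parity, positions 3, 5, 6, 7 are data.
    """
    d = [(nibble >> i) & 1 for i in range(4)]
    p1 = d[0] ^ d[1] ^ d[3]   # covers positions 1, 3, 5, 7
    p2 = d[0] ^ d[2] ^ d[3]   # covers positions 2, 3, 6, 7
    p4 = d[1] ^ d[2] ^ d[3]   # covers positions 4, 5, 6, 7
    bits = [p1, p2, d[0], p4, d[1], d[2], d[3]]
    return sum(b << i for i, b in enumerate(bits))


def correct(word):
    """Return (corrected_word, had_error) for a single-bit error at most."""
    syndrome = 0
    for pos in range(1, 8):
        if (word >> (pos - 1)) & 1:
            syndrome ^= pos          # nonzero syndrome = position of the bad bit
    if syndrome:
        word ^= 1 << (syndrome - 1)
    return word, bool(syndrome)


def decode(word):
    """Extract the 4 data bits from a (corrected) codeword."""
    return ((word >> 2) & 1) | (((word >> 4) & 1) << 1) \
        | (((word >> 5) & 1) << 2) | (((word >> 6) & 1) << 3)


def read(array, addr):
    """Correct-on-read: fix the outgoing data, leave the stored word as-is."""
    fixed, _ = correct(array[addr])
    return decode(fixed)


def scrub(array):
    """Scrub pass: write corrected words back; return how many were fixed."""
    fixed_count = 0
    for addr, word in enumerate(array):
        fixed, had_error = correct(word)
        if had_error:
            array[addr] = fixed
            fixed_count += 1
    return fixed_count


array = [encode(n) for n in (0b1010, 0b0111, 0b0001)]
array[1] ^= 1 << 4                    # simulate a single-bit flip in storage
value_read = read(array, 1)           # data comes out corrected
still_corrupt = array[1] != encode(0b0111)
fixed = scrub(array)                  # scrub repairs the stored word
```

In this model a read returns good data while the flipped bit stays in storage, and only the scrub pass repairs the stored copy, which keeps a second flip in the same word from accumulating into an uncorrectable error.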

Where Does CXL Fit?

With all of the recent talk about Compute Express Link (CXL), many might wonder whether it competes directly against DDR5 or whether the two technologies complement each other.

CXL is actually a high-speed serial interface that lets processors expand memory capacity beyond direct-attach DDR5. CXL memory can be either directly attached to the CPU or placed in a memory pool that’s shared between servers. The memory behind the CXL interface can be DDR4, DDR5, or some other type.

While CXL is an exciting new architectural direction for the industry, CXL itself doesn’t compete against DDR.

Simplifying the Change

Micron (an Objective Analysis client) has created a Technology Enablement Program (TEP) to give ecosystem partners early and easy access to technical information, support, and collaboration, with the aim of easing DDR5 design and integration challenges.

The program is intended to give designers a single site for all of the resources they need, including datasheets, behavioral and functional models, signal-integrity models, thermal models, training materials, and support. Micron’s collaboration with chipset, design, and verification IP partners is expected to enable faster time-to-market for DDR5-based products.

Conclusion

Although DRAM interfaces aren’t generally the first factor that data-center managers consider when implementing an upgrade, DDR5 deserves a close look. This technology promises to deliver much-improved performance while saving power.

DDR5 is an enabling technology that will help early adopters move gracefully into the composable, scalable data center of the future. IT and business leaders should evaluate DDR5 and determine how and when the move from DDR4 to DDR5 fits into their data-center transformation plans.