New Neoverse Platforms Take on the Cloud, HPC, and the Edge

Last year, Arm delivered the first of its Neoverse solutions targeting enterprise and cloud computing. The Neoverse N1 and E1 platforms are available now (Fig. 1). The N1 design is used in Amazon’s Graviton2 processor, which AWS deploys in a number of its EC2 instance types.

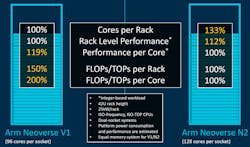

Now, Arm has unveiled its next-generation platforms: the Neoverse V1, code-named Zeus, and the Neoverse N2, code-named Perseus. According to Arm, the V1 and N2 deliver 50% and 40% more performance, respectively, than existing Neoverse N1 implementations.

This space is dominated by Intel platforms, but Neoverse solutions have given Intel considerable competition thanks to their power efficiency. Data centers are also changing significantly, incorporating everything from FPGA-based SmartNICs to GPGPUs to machine-learning (ML) accelerators.

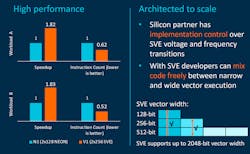

The new Neoverse platforms address ML and high-performance-computing (HPC) applications with bfloat16 support and the addition of the Scalable Vector Extension (SVE). SVE handles SIMD integer, bfloat16, and floating-point instructions on wider vector units (Fig. 2). A key feature of SVE is that the programming model is vector-length agnostic: hardware vectors can be anywhere from 128 bits up to 2048 bits, in 128-bit increments, and the same code runs unchanged on any of those widths. This simplifies programming while also providing increased performance.
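To make the vector-length-agnostic idea concrete, here is a minimal Python simulation, not real SVE code. The function name `vla_saxpy` and the loop structure are illustrative assumptions; the comments point to the Arm C Language Extensions intrinsics `svcntw()` (number of 32-bit lanes) and `svwhilelt_b32()` (build a predicate of active lanes) that the simulated steps loosely mirror. The point is that the loop never hard-codes a vector width: it asks how many lanes exist and uses a predicate to mask off the tail.

```python
def vla_saxpy(a, x, y, vector_bits):
    """Simulate an SVE-style, vector-length-agnostic loop computing a*x[i] + y[i].

    vector_bits stands in for the hardware vector length, which the code
    only queries and never assumes (a hypothetical parameter for this sketch).
    """
    if vector_bits % 128 != 0 or not 128 <= vector_bits <= 2048:
        raise ValueError("SVE vector length must be a multiple of 128 bits in [128, 2048]")

    lanes = vector_bits // 32  # 32-bit float lanes per vector, loosely like svcntw()
    n = len(x)
    out = list(y)
    i = 0
    while i < n:
        # Predicate: lane k is active only while i + k < n, loosely like svwhilelt_b32(i, n)
        active = [i + k < n for k in range(lanes)]
        for k in range(lanes):
            if active[k]:
                out[i + k] = a * x[i + k] + out[i + k]
        i += lanes  # advance by however many lanes this "hardware" provides
    return out


x = [float(i) for i in range(10)]
y = [1.0] * 10
# The same function gives identical results on a 128-bit or a 2048-bit "machine".
print(vla_saxpy(2.0, x, y, 128) == vla_saxpy(2.0, x, y, 2048))
```

Because the tail is handled by the predicate rather than a separate scalar cleanup loop, one binary can exploit an N2’s 128-bit vectors or a V1’s 256-bit vectors without recompilation.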

Although cloud and HPC environments are a primary target for the Neoverse architecture, they’re not the only space Arm is pursuing. Neoverse is also at home at the high-performance edge, albeit with fewer cores (Fig. 3). On the plus side, power requirements are reduced as well. The 5G edge infrastructure can benefit from the large number of power-efficient cores the Neoverse architecture brings to the design table.

Different configurations can fill rack space depending on application requirements. The Neoverse V1 aims to pack high-performance HPC cores into a chip, while the Neoverse N2 emphasizes a larger core count (Fig. 4). A typical dual-socket motherboard will house hundreds of cores.

The V-series delivers maximum performance with larger buffers, caches, and queues. The N-series is optimized for a balance of performance and power, while the E-series is optimized for power efficiency and area. Right now, the N2 comes with two 128-bit SVE pipelines, while the V1 has two 256-bit SVE pipelines.
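The pipeline widths translate directly into per-core vector throughput. The back-of-the-envelope sketch below assumes, as a simplification not stated by Arm here, that every SVE pipeline issues one fused multiply-add (counted as 2 FLOPs per lane) each cycle; real sustained throughput depends on the workload and the rest of the microarchitecture. The helper name `sve_peak` is hypothetical.

```python
def sve_peak(pipes, pipe_bits, element_bits, flops_per_lane=2):
    """Return (lanes per cycle, theoretical peak FLOPs per cycle) for one core.

    Assumption: each pipeline issues one fused multiply-add per cycle,
    and an FMA counts as 2 floating-point operations per lane.
    """
    lanes = pipes * (pipe_bits // element_bits)
    return lanes, lanes * flops_per_lane


# Pipeline counts and widths as described for each platform.
configs = {"Neoverse N2": (2, 128), "Neoverse V1": (2, 256)}

for name, (pipes, bits) in configs.items():
    for element_bits, label in ((64, "FP64"), (32, "FP32")):
        lanes, flops = sve_peak(pipes, bits, element_bits)
        print(f"{name}: {label} {lanes} lanes, {flops} FLOPs/cycle")
```

Under that assumption, the V1’s two 256-bit pipelines offer twice the N2’s peak vector math per cycle (16 versus 8 FP64 FLOPs), which fits the V-series’ maximum-performance positioning.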

CCIX (pronounced “see six”) and CXL are two PCI Express-based interprocessor communication links (Fig. 5). Earlier versions of Neoverse supported CCIX; the latest incarnations support both. CXL has been used for memory expansion, while CCIX provides coherent interconnects for heterogeneous multicore systems.

Neoverse is already invading the data center. Platforms like NXP’s LX210A Neoverse-based system-on-chip (SoC) target 5G RAN solutions. This latest crop of architectures is likely to improve on these existing solutions.