Accelerate Machine Learning at the Edge with Open-Source Dev Tools

What you’ll learn:

- How the rapid growth of machine- and deep-learning technologies creates new challenges for developers, who must optimize ML applications to run on tiny edge devices with power, processing, and memory constraints.

- Easy-to-use, open-source development tools that simplify the process of creating ML/deep-learning projects on embedded platforms.

The Internet of Things (IoT) has brought billions of connected devices into our homes, cars, offices, hospitals, factories, and cityscapes. IoT pioneers envisioned vast networks of wireless sensor nodes transmitting trillions of bytes of data to the cloud for aggregation, analysis, and decision-making. In recent years, however, the vision of IoT-fueled, cloud-based intelligence has been giving way to a new paradigm: intelligence at the edge.

Leveraging the latest advances in machine-learning (ML) technologies, embedded developers are extending the power of artificial intelligence (AI) closer to the network edge. Today’s low-power IoT devices can run sophisticated ML and deep-learning algorithms locally, without the need for cloud connectivity, which minimizes concerns about latency, performance, security, and privacy. New and emerging ML/neural-networking edge applications include intelligent personal assistants, factory robotics, voice and facial recognition in connected cars, AI-enabled home security cameras, and predictive maintenance for white goods and industrial equipment.

The ML market is expanding rapidly and use cases for intelligent edge applications are growing exponentially. According to TIRIAS Research, 98% of edge devices will use some form of machine learning by 2025. Based on these market projections, 18-25 billion devices are expected to include ML and deep-learning capabilities in that timeframe. By early 2021, ML/deep-learning applications will reach mainstream status as more embedded developers gain access to the low-power devices, development frameworks, and software tools they need to streamline their ML projects.

ML Dev Environments Geared to Needs of Mainstream Developers

Until recently, ML development environments were primarily intended to support developers who have serious expertise in ML and deep-learning applications. However, to accelerate ML application development at scale, ML support must become easier to use and more widely available to mainstream embedded developers.

The advent of ML at the edge is a relatively recent trend with unique application requirements compared to classic cloud-based AI systems. IC, power, and system-level resources for embedded designs are more constrained, requiring new and different software tools. ML developers have also devised entirely new development processes for intelligent edge applications, including model training, inference engine deployment on target devices, and other aspects of system integration.

After an ML model is trained, optimized, and quantized, the next phase of development involves deploying the model on a target device, such as an MCU or applications processor, and allowing it to perform the inferencing function.
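To make the quantization step concrete, here is a minimal sketch of affine int8 quantization, one common way to shrink floating-point weights before deployment. It is not taken from any specific toolchain; the function names and the sample weights are illustrative assumptions.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float, int]:
    """Map floats to int8 using an affine (scale, zero-point) scheme."""
    # The represented range must include 0.0 so that zero maps to an exact integer.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(-128 - lo / scale)
    quantized = [
        max(-128, min(127, round(v / scale) + zero_point))  # clamp to the int8 range
        for v in values
    ]
    return quantized, scale, zero_point


def dequantize(quantized: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate float values from the int8 representation."""
    return [(q - zero_point) * scale for q in quantized]


weights = [-1.0, -0.25, 0.0, 0.5, 1.0]  # illustrative values only
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"int8 values: {q}, scale: {scale:.6f}, max error: {max_error:.6f}")
```

Each weight now occupies one byte instead of four, at the cost of a rounding error that stays within one quantization step for this input.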

Before going further, let’s take a closer look at a new class of target devices for ML applications: crossover microcontrollers (MCUs). The term “crossover” refers to devices that combine the performance, functionality, and capabilities of an applications processor-based design with the ease of use, low power consumption, and real-time operation with low interrupt latency of an MCU-based design. A typical crossover MCU, such as an i.MX RT series device from NXP, contains an Arm Cortex-M core running at speeds ranging from 300 MHz to 1 GHz. These MCUs have sufficient processing performance to support ML inferencing engines (i.e., without requiring additional ML acceleration), along with the low power consumption required for power-constrained edge applications.

Ideally, embedded developers can use a comprehensive ML development environment, complete with software tools, application examples, and user guides, to deploy open-source inference engines on a target device. For example, the eIQ environment from NXP provides inferencing support for Arm NN, the ONNX Runtime engine, TensorFlow Lite, and the Glow neural-network compiler. Developers can follow a simple “bring your own model” (BYOM) process that enables them to build a trained model using public or private cloud-based tools, and then transfer the model into the eIQ environment to run on the appropriate silicon-optimized inference engine.

Many developers now require ML and deep-learning tools and technologies for their current and future embedded projects. Again, ML support must become more comprehensive and easier to use for most of these developers. Comprehensive support encompasses an end-to-end workflow that enables developers to import their training data, select the optimal model for their application, train the model, perform optimization and quantization, complete on-target profiling, and then move on to final production.

For most mainstream developers, ease of use means access to simplified, optimized user interfaces that hide the underlying details and manage the complexity of the ML development process. The ideal user interface allows the developer to select a few options and then easily import training data and deploy the model on the target device.

The number of processing platforms, frameworks, tools, and other resources available to help developers build and deploy ML applications and neural-network models continues to expand. Let’s examine several development tools and frameworks and how they can help developers simplify their ML development projects.

Simplifying Workflows with a Machine-Learning Tool Suite

The DeepView ML tool suite from Au-Zone Technologies is a good example of an intuitive graphical user interface (GUI) and workflow. It enables developers of all skill levels, from embedded designers to data scientists to ML experts, to import datasets and neural-net models, and then train and deploy those models and workloads across a wide range of target devices.

NXP recently augmented its eIQ development environment to include the DeepView tool suite to help developers streamline their ML projects (Fig. 1). The new eIQ ML workflow tool supplies developers with advanced features to prune, quantize, validate, and deploy public or proprietary neural-net models on NXP devices. On-target, graph-level profiling capabilities provide developers with runtime insights to optimize neural-net model architectures, system parameters, and runtime performance.
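As a rough illustration of what pruning means, the sketch below zeroes out the smallest-magnitude weights in a list. This is a generic magnitude-pruning example, not the method used by the eIQ or DeepView tools; the function name, the sparsity value, and the sample weights are assumptions made for illustration.

```python
from typing import List


def prune_by_magnitude(weights: List[float], sparsity: float) -> List[float]:
    """Return a copy of weights with the smallest-magnitude fraction set to zero."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices of the n_prune weights with the smallest absolute value.
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:n_prune]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]  # illustrative values only
pruned = prune_by_magnitude(weights, sparsity=0.5)
print(pruned)
```

Zeroed weights can then be skipped or stored compactly, which is why pruning is usually validated against accuracy before the model is deployed.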

With the tool suite’s runtime inference engine complementing open-source inference technologies, developers can quickly deploy and evaluate ML workloads and performance across multiple devices with minimal effort. A key feature of this runtime inference engine is that it optimizes system memory usage and data movement for unique device architectures.

Optimizing Neural Networks with the Open-Source Glow Compiler

The Glow neural-network model compiler is a popular open-source backend tool for high-level ML frameworks that supports compiler optimizations and code generation of neural-network graphs. With the proliferation of deep-learning frameworks such as PyTorch, ML/neural-network compilers provide optimizations that accelerate inferencing on a wide range of hardware platforms.

Facebook, which pioneered PyTorch, introduced Glow (shorthand for “graph lowering compiler”) in May 2018 as an open-source community project, with the goal of providing optimizations to accelerate neural-network performance. Glow has evolved significantly in recent years thanks to the support of more than 130 worldwide contributors.

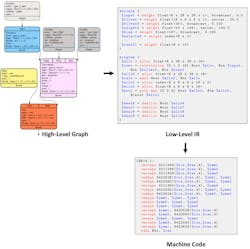

The Glow compiler leverages a computation graph to generate optimized machine code in two phases (Fig. 2). First, it optimizes the model operators and layers using standard compiler techniques. In the second, backend phase of model compilation, Glow employs low-level virtual-machine (LLVM) modules to enable target-specific optimizations. Glow also supports an ahead-of-time (AOT) compilation mode that generates object files and eliminates unnecessary overhead, reducing both the number of computations and the memory footprint. This AOT technique is ideal for memory-constrained MCU targets.
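To give a feel for what a standard compiler technique looks like when applied to a computation graph, the sketch below performs constant folding on a toy graph. It does not use Glow's intermediate representation or reproduce its passes; the `Node` class, the operator names, and the example graph are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Node:
    op: str                       # "input", "const", "add", or "mul"
    inputs: Tuple[str, ...] = ()  # names of the nodes feeding this one
    value: float = 0.0            # used only by "const" nodes


def fold_constants(graph: Dict[str, Node]) -> Dict[str, Node]:
    """Replace add/mul nodes whose inputs are all constants with a const node."""
    folded = dict(graph)
    changed = True
    while changed:  # repeat until no more nodes can be folded
        changed = False
        for name, node in list(folded.items()):
            if node.op not in ("add", "mul"):
                continue
            args = [folded[i] for i in node.inputs]
            if all(arg.op == "const" for arg in args):
                a, b = (arg.value for arg in args)
                result = a + b if node.op == "add" else a * b
                folded[name] = Node("const", value=result)
                changed = True
    return folded


graph = {
    "x": Node("input"),
    "two": Node("const", value=2.0),
    "three": Node("const", value=3.0),
    "six": Node("mul", ("two", "three")),  # computable at compile time
    "y": Node("mul", ("x", "six")),        # depends on the runtime input
}
optimized = fold_constants(graph)
print(optimized["six"], optimized["y"])
```

After folding, the multiplication of the two constants disappears from the runtime graph, so the target device executes one multiply instead of two.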

ML tools like the Glow compiler can simplify ML/neural-net development and enhance edge-processing performance on low-power MCUs. The standard, out-of-the-box version of Glow from GitHub is device-agnostic, giving developers the flexibility to compile neural-network models for leading processor architectures, including those based on Arm Cortex-A and Cortex-M cores.
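As a sketch of what cross-compiling a model with the stock Glow tools might look like, the helper below assembles a command line for Glow's `model-compiler` tool. The flags shown (`-backend`, `-model`, `-emit-bundle`, `-target`, `-mcpu`) follow the Glow AOT documentation as best recalled and should be checked against the installed Glow version; the helper name and the file names are hypothetical.

```python
import shlex
from typing import List


def glow_bundle_command(model_path: str, bundle_dir: str,
                        target: str = "arm", mcpu: str = "cortex-m7") -> List[str]:
    """Build (but do not run) a model-compiler command for an AOT bundle."""
    return [
        "model-compiler",
        "-backend=CPU",                # LLVM-based CPU backend
        f"-model={model_path}",        # input model, e.g. an ONNX file
        f"-emit-bundle={bundle_dir}",  # output directory for the generated bundle
        f"-target={target}",           # cross-compilation target architecture
        f"-mcpu={mcpu}",               # specific core to optimize for
    ]


# "cifar10.onnx" and "bundle" are placeholder names, not files from the article.
cmd = glow_bundle_command("cifar10.onnx", "bundle")
print(shlex.join(cmd))
```

The resulting list can be passed to `subprocess.run` on a machine where Glow is installed; building it separately keeps the example runnable without the compiler present.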

To help simplify ML projects, NXP integrated Glow with the eIQ development environment, as well as its MCUXpresso SDK. NXP’s implementation combines the Glow compiler and quantization tools into an easy-to-use installer, along with detailed documentation to help developers get their models running quickly. This optimized Glow implementation targets Arm Cortex-M cores and the Cadence Tensilica HiFi 4 DSP, and it provides platform-specific optimizations for i.MX RT series MCUs.

Using the CIFAR-10 dataset as a neural-network model benchmark, NXP recently tested the i.MX RT1060 MCU to assess performance differences among Glow compiler versions. NXP also ran tests on the i.MX RT685 MCU, currently the only i.MX RT series device with an integrated DSP optimized for processing neural-network operators.

The i.MX RT1060 contains a 600-MHz Arm Cortex-M7 and 1 MB of SRAM, along with features optimized for real-time applications such as high-speed GPIO, CAN-FD, and a synchronous parallel NAND/NOR/PSRAM controller. The i.MX RT685 contains a 600-MHz Cadence Tensilica HiFi 4 DSP core paired with a 300-MHz Arm Cortex-M33 core and 4.5 MB of on-chip SRAM, as well as security-related features.
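A quick back-of-the-envelope check shows why these SRAM figures matter when sizing a model. The sketch below uses the SRAM sizes quoted above, but the 400,000-parameter model and the 50% memory reserve are hypothetical, and it makes the simplifying assumption that all weights must sit in on-chip SRAM (real designs may keep them in flash).

```python
# On-chip SRAM sizes quoted in the article, taking 1 MB as 1024 * 1024 bytes (assumption).
SRAM_BYTES = {
    "i.MX RT1060": 1 * 1024 * 1024,
    "i.MX RT685": int(4.5 * 1024 * 1024),
}


def weight_bytes(num_params: int, bits_per_weight: int) -> int:
    """Storage needed for the weights alone."""
    return num_params * bits_per_weight // 8


def fits_in_sram(num_params: int, bits_per_weight: int, device: str,
                 reserve_fraction: float = 0.5) -> bool:
    """True if the weights fit in the SRAM left after reserving a fraction
    for activations, stack, and application data (hypothetical 50% default)."""
    budget = SRAM_BYTES[device] * (1.0 - reserve_fraction)
    return weight_bytes(num_params, bits_per_weight) <= budget


PARAMS = 400_000  # hypothetical model size, not a figure from NXP's tests

for device in SRAM_BYTES:
    for bits in (32, 8):
        verdict = "fits" if fits_in_sram(PARAMS, bits, device) else "does not fit"
        print(f"{device}: {bits}-bit weights ({weight_bytes(PARAMS, bits):,} bytes) {verdict}")
```

Under these assumptions, the float32 version of the model exceeds the RT1060 budget while the int8 version fits, which illustrates why quantization is usually part of the deployment flow for MCU targets.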

NXP’s Glow implementation is closely aligned with Cadence’s neural-network library, NNLib. Although the RT685 MCU’s HiFi 4 DSP core is designed to enhance voice processing, it’s also capable of accelerating a wide range of neural-network operators when NNLib is used as an LLVM backend for Glow. While NNLib is similar to CMSIS-NN, it provides a more comprehensive set of hand-tuned operators optimized for the HiFi 4 DSP. Based on the same CIFAR-10 benchmark example, the HiFi 4 DSP delivers a 25X performance increase for neural-network operations compared to a standard Glow compiler implementation.

Using PyTorch for MCU-based ML Development

PyTorch, an open-source machine-learning framework primarily developed by Facebook's AI research lab and based on the Torch library, is widely used by developers to create ML/deep-learning projects and products. PyTorch is a good choice for MCU targets because it imposes few restrictions on the processing platform and can generate ONNX models, which can be compiled by Glow.

Since developers can directly access Glow through PyTorch, they’re able to build and compile their models in the same development environment, thereby eliminating steps and simplifying the compilation process. Developers also can generate bundles directly from a Python script, without having to first generate ONNX models.

Until recently, ONNX and Caffe2 were the only input model formats supported by Glow. PyTorch can now export models directly into the ONNX format for use by Glow. Since many well-known models were created in other formats such as TensorFlow, open-source model conversion tools are available to convert them to the ONNX format. Popular tools for format conversion include MMdnn, a toolset supported by Microsoft to help users interoperate among different deep-learning frameworks, and tf2onnx, which is used to convert TensorFlow models to ONNX.
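The conversion step can be scripted. The sketch below builds a tf2onnx command for a TensorFlow SavedModel directory and skips conversion when the model is already in ONNX format. The `--saved-model` and `--output` options belong to the tf2onnx command-line converter; the helper name and the paths are hypothetical, and tf2onnx must be installed separately for the command to run.

```python
import sys
from pathlib import Path
from typing import List, Optional


def conversion_command(model: str, onnx_out: str) -> Optional[List[str]]:
    """Return a tf2onnx command for a SavedModel directory,
    or None if the model is already an ONNX file."""
    if Path(model).suffix == ".onnx":
        return None  # nothing to convert
    return [
        sys.executable, "-m", "tf2onnx.convert",
        "--saved-model", model,  # directory holding the TensorFlow SavedModel
        "--output", onnx_out,    # ONNX file to write
    ]


# Placeholder paths for illustration only.
print(conversion_command("saved_model_dir", "model.onnx"))
print(conversion_command("model.onnx", "model.onnx"))
```

Once an ONNX file exists, it can serve as the input to the Glow compiler regardless of the framework used for training.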

Conclusion

Machine- and deep-learning technologies continue to evolve at a rapid pace. At the same time, we’re seeing strong market momentum for IoT and other edge devices capable of running ML/deep-learning algorithms and making autonomous decisions without cloud intervention. While migrating intelligence from the cloud to the network edge is an unstoppable trend, it comes with challenges as developers seek ways to optimize ML applications to run on tiny edge devices with power, processing, and memory constraints.

Just as architects and builders require specialized tools to construct homes and cities of the future, mainstream developers need optimized, easy-to-use software tools and frameworks to simplify the process of creating ML/deep-learning projects on embedded platforms. The DeepView ML tool suite, Glow ML compiler, and PyTorch framework exemplify a growing wave of development resources that will help embedded developers create the next generation of intelligent edge applications.