Marvell Fights Nvidia and Intel With Latest Octeon Family of DPUs

Marvell Technology is striking back against Nvidia, Intel, and other semiconductor giants in the data center market with the latest generation of its Octeon family of data processing units (DPUs) due out in late 2021.

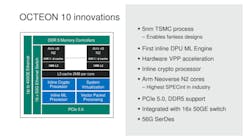

On Monday, the Santa Clara, California-based company said the Octeon 10 DPUs are systems on a chip built on the latest 5-nanometer node from TSMC that integrate Arm's Neoverse N2 core for the first time in a server-grade processor. The combination brings three times more performance than the previous Octeon TX2 generation while burning up to 50% less power and boosting data throughput to 400 Gbps.

The Arm CPUs are complemented by a cluster of hardware accelerators and other building blocks that move, process, secure, store, and manage the deluge of data traveling through sprawling networks of servers or cellular base stations. Marvell upgraded many of these to state-of-the-art accelerators, ranging from the machine learning engine to the "vector packet processing" pipeline. It also improved the cryptography accelerator in the chip to encrypt and decrypt data in real time (400+ Gbps) without CPU intervention.

“To meet and exceed the growing data processing requirements for network, storage, and security workloads, Marvell focused on significant DPU innovations across compute, hardware accelerators, and high-speed I/O,” John Sakamoto, vice president of Marvell’s infrastructure processors business unit, said in a statement. Marvell said the first Octeon DPU in the family will start shipping in the second half of 2021.

The DPU has become a battleground in the data center segment in recent years. Nvidia has expanded its data center ambitions with its Bluefield family of DPUs that, like Marvell's Octeon DPUs, are used to offload networking, storage, security, and other infrastructure workloads from the CPU in the server and accelerate them, saving CPU capacity for other tasks. Nvidia plans to start supplying its future Bluefield-3 DPU in 2022.

Intel is also fighting for market share in the category with what it calls infrastructure processing units (IPUs), which are based on FPGAs instead of the more general-purpose chips at the heart of the Octeon 10. Intel said IPUs have been developed with and deployed by Microsoft, Baidu, and other major cloud vendors. Marvell is also up against industry rivals Xilinx and Broadcom as well as startups Fungible and Pensando.

Marvell said Octeon has become the most popular infrastructure processor in the world since it came to market a decade-and-a-half ago, with millions of chips deployed in data centers and 3G, 4G, and 5G RAN.

But it is aggressively pushing the envelope with its Octeon 10 family of DPUs. The Octeon 10 is the first server processor in its class based on TSMC's 5-nm node and also the first to feature Arm's N2 cores, giving it a competitive edge in performance-per-watt and thus reducing the cost of cooling and powering the chips. Marvell said Octeon 10 has a wide range of industry-first features for a DPU, such as its integrated machine learning engine. The chips also contain advanced IO interfaces, including PCIe Gen 5 and DDR5.

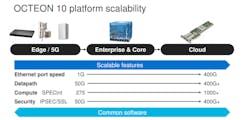

Intel, Nvidia, and other vendors are trying to convince Amazon, Google, Microsoft, and other cloud services players to attach DPUs to the millions of servers in the colossal data centers they use themselves and rent out to millions of clients over the cloud. But unlike its rivals, Marvell said that it is looking to stand out with more scalable platforms. The Octeon DPUs are not only targeted at cloud data centers but also wireless and wired networking gear such as switches, routers, secure gateways, firewalls and 5G base stations.

The Octeon 10 DPUs can also be attached to plug-and-play server networking cards, known as SmartNICs. "This is a platform that is scalable from the edge out to hyperscale cloud," Sakamoto told Electronic Design.

Under the hood, the Octeon 10 DPUs have up to 36 N2 cores clocked at up to 2.5 GHz and arranged on the same slab of silicon. The N2 is the first in a family of central processing cores for the data center based on the Armv9 architecture. Designed on the Perseus microarchitecture, the Arm cores support up to 40% more instructions per clock compared to the N1 core crammed in Amazon's Graviton2 and Ampere's Altra CPUs.

For years, Marvell used its architecture license with Arm to create the CPU cores at the heart of its Octeon family of server-grade processors from the ground up. But with the Octeon 10 generation, Marvell swapped its in-house TX2 core in favor of Arm's standard infrastructure cores, giving it the freedom to spend more of its engineering resources on the accelerators and other features instead of tangling itself up in CPU design.

Octeon 10 delivers up to three times more single-threaded performance than its prior generation based on the TX2. "If you compare Octeon 10 to other DPU families, this is clear compute leadership," Sakamoto said.

The 64-bit cores are provisioned with 64 kB of L1 instruction cache plus 64 kB of L1 data cache. Marvell also enlarged the L2 cache to 1 MB per core to reduce latency for a wide range of workloads. The cores (which have 32-bit hardware as well) can also access 2 MB of L3 cache each, totaling up to 36 MB of L2 and 72 MB of L3 cache in the flagship Octeon 10 DPU. According to Marvell, it also attached advanced hardware scheduling to the cores, reducing latency from the CPU to the accelerators by a factor of three.
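The cache totals quoted above follow directly from the per-core figures. A minimal sketch of the arithmetic, using only the numbers in the article (the function and constant names are illustrative, not Marvell terminology):

```python
# Per-core cache sizes quoted in the article, in MB.
L2_PER_CORE_MB = 1
L3_PER_CORE_MB = 2
MAX_CORES = 36  # flagship Octeon 10 configuration


def cache_totals(cores: int) -> tuple[int, int]:
    """Return (total L2, total L3) in MB for a given core count."""
    return cores * L2_PER_CORE_MB, cores * L3_PER_CORE_MB


l2_total, l3_total = cache_totals(MAX_CORES)
print(f"{MAX_CORES} cores: {l2_total} MB L2, {l3_total} MB L3")
# prints "36 cores: 36 MB L2, 72 MB L3"
```

Smaller parts in the family would scale down proportionally only if they keep the same per-core cache sizes, which the article does not confirm.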

Because it is based on the Armv9-A architecture, the CPU also integrates 128-bit wide processing pipelines that take advantage of Arm's SVE2 instruction set. The SVE2 technology is a new series of instructions that improve the data processing and machine learning capabilities of Arm CPUs. That potentially gives Marvell an extra edge over Nvidia's newest Bluefield-3 DPU, which builds on Armv8.2-based Cortex-A78 CPU cores.

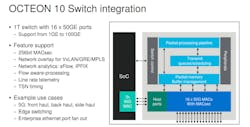

The chips also incorporate up to eight PCIe Gen 5 lanes, up to 16 50G Ethernet ports, and SerDes lanes running at up to 56G, an upgrade over the PCIe Gen 4 and DDR4 DRAM interfaces in the previous-generation Octeon TX2 processors.

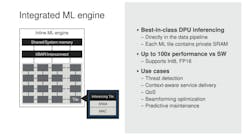

Surrounding the Arm CPU cores is a group of state-of-the-art accelerators and other hardware modules, including Marvell's in-house machine learning engine. According to Marvell, the module is based on a mosaic of inferencing tiles that incorporate SRAM and MACs that can run Int8 and FP16 operations. The inferencing tiles are linked to each other with a crossbar interconnect, which also attaches to shared system memory.

The machine learning engine in the Octeon 10 DPUs can be used by software to identify potential intruders traveling along the sprawling networks of servers in the cloud or corporate data centers. The AI capabilities can also be used in 4G and 5G base stations to improve beamforming technology, which aims signals directly at smartphones and other devices instead of broadcasting them over a wide area like a floodlight.

Marvell said that it integrated the machine learning engine "directly in the data pipeline" to reduce latency in the system to the levels required by 5G networks and high-throughput workloads in cloud data centers. The company said the tile-based architecture means that it can scale up or down depending on the end market. More tiles equal faster performance for AI chores. The machine learning engine can scale up to more than 100 trillion operations per second (TOPS), at the cost of occupying more die area and drawing more power.

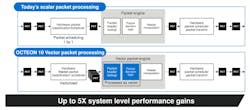

Marvell said that it upgraded the packet processing pipeline in the Octeon 10 DPUs to process more than one packet at a time, reducing system latency and improving data throughput. Marvell said that the "vector packet processing" engine can intercept data traveling through the network, group the packets together in a set, and process the complete set as a vector in hardware instead of processing all the packets one by one.

According to Marvell, the improved pipeline is able to boost packet processing throughput at the system level by a factor of five compared to the scalar processing engines in its previous Octeon TX2 generation.
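The difference between scalar and vector packet processing can be illustrated with a small software analogy. This is a sketch only: Marvell's engine does the grouping in hardware, and the forwarding rule, function names, and batch size of 4 below are all hypothetical choices made for illustration. The point is that both paths produce the same per-packet result, but the vector path makes far fewer passes through the pipeline.

```python
from typing import Iterator, Sequence


def action_for(port: int) -> str:
    """Hypothetical rule: traffic to well-known ports is inspected."""
    return "inspect" if port < 1024 else "forward"


def process_scalar(ports: Sequence[int]) -> tuple[list[str], int]:
    """Handle packets one by one; every packet is its own pipeline pass."""
    actions, passes = [], 0
    for port in ports:
        passes += 1
        actions.append(action_for(port))
    return actions, passes


def batches(ports: Sequence[int], size: int) -> Iterator[Sequence[int]]:
    """Split the packet stream into consecutive groups of at most `size`."""
    for start in range(0, len(ports), size):
        yield ports[start:start + size]


def process_vector(ports: Sequence[int], size: int = 4) -> tuple[list[str], int]:
    """Group packets into vectors; each vector is a single pipeline pass."""
    actions, passes = [], 0
    for vec in batches(ports, size):
        passes += 1
        actions.extend(action_for(p) for p in vec)
    return actions, passes


ports = [80, 443, 8080, 22, 5000, 53, 9000, 3306, 25, 6000]
scalar_actions, scalar_passes = process_scalar(ports)
vector_actions, vector_passes = process_vector(ports, size=4)
print(scalar_passes, vector_passes)  # prints "10 3"
```

The pass counts here only show how per-pass overhead is amortized across a batch. They say nothing about the fivefold throughput gain Marvell quotes, which depends on the hardware.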

Another area of improvement is the integrated 1 terabit-per-second switch. The chips incorporate a set of 16 50 gigabit-per-second Ethernet ports that are configurable from 1G up to 100G Ethernet. By integrating the switch on the same silicon chip as the hardware accelerators and other computing modules, Marvell said its Octeon 10 DPUs cut system costs by reducing the amount of hardware used in 5G base stations and edge networking switches. Other advanced features in the switch include 256-bit MACsec and TSN.

For storage workloads, the chips natively support up to 20 million IO operations per second (IOPS) over NVM Express (NVMe).

“A significant amount of compute is required to process the deluge of data generated from cloud to edge devices today,” said Chris Bergey, senior vice president and general manager of the infrastructure business at Arm, in a statement. “The combination of leading-edge 5-nm technology, Neoverse N2 cores, and Octeon 10 will enable Marvell to take on complex workloads, and showcase its strengths in DPU computing.”

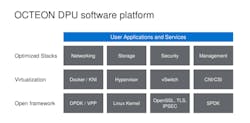

Marvell said that it would also roll out development tools to make it easier for customers to deploy software on the Octeon DPUs, which can take advantage of the Arm ecosystem because they are based on the same instruction set. The tools include software stacks for networking, storage, and security as well as support for virtual machines and containers. The chips can also support a wide range of open and standard APIs.

"Our objective on the software side is to make it very easy for users to run and accelerate applications on the Octeon 10," Sakamoto added. “Our strategy is to have an open platform. Other companies have taken this approach where they want to create a closed ecosystem by offering their own proprietary toolkits and then trying to secure some vendor lock-in. We're trying to keep things open and support open frameworks."

Marvell plans to roll out a software development platform for the Octeon 10 DPU family by the end of 2021.

Marvell plans to roll out a range of SKUs, from the CN103xx, aimed at wireless and wired networking gear in energy-efficient or even fanless form factors, to the DPU400, targeted at network infrastructure in cloud data centers. The flagship processor in the family fits in a 60W envelope, which is up to 50% more power efficient than its 80W to 120W predecessor, the CN98xx.
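The "up to 50%" figure can be checked against the quoted power envelopes. The sketch below treats the claim as a reduction in power draw at the two ends of the predecessor's range. That is one interpretation, since the article does not say how efficiency was measured.

```python
FLAGSHIP_W = 60           # Octeon 10 flagship envelope, per the article
PREDECESSOR_W = (80, 120)  # CN98xx range, per the article


def power_reduction(new_w: float, old_w: float) -> float:
    """Fractional drop in power when moving from old_w to new_w."""
    return 1 - new_w / old_w


for old in PREDECESSOR_W:
    print(f"vs {old} W: {power_reduction(FLAGSHIP_W, old):.0%} less power")
# prints "vs 80 W: 25% less power" then "vs 120 W: 50% less power"
```

On this reading, the 50% figure corresponds to the top of the predecessor's range, and the saving against an 80W part is closer to 25%.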

Marvell has also shipped millions of Octeon DPUs in 3G and 4G base stations globally, and Tier-1 telecom infrastructure OEMs such as Nokia and Samsung Electronics have already selected Octeon DPUs for new 5G base stations. Marvell also rolled out the CN106xx with up to 24 N2 CPU cores to run 4G and 5G base stations as well as corporate and wireless carrier data centers, while consuming around 40W to 50W.

Marvell said the first processor in the family—the CN106xx—is now in production at TSMC and should be available to early customers by the fourth quarter. Marvell plans to start shipping the other SKUs in 2022.