Check out our coverage of the Hot Chips 2022 event.

What you’ll learn

- What is Compute Express Link (CXL)?

- Why CXL is important.

- What’s new with CXL 3?

One of the more interesting and extensive tutorials at Hot Chips 2022 covered Compute Express Link (CXL) and the newly released CXL 3 specification (Fig. 1). This article is a compressed overview of that tutorial, which can still be viewed by signing up for archive access.

CXL provides a partitionable, scalable, coherent memory environment that can support an array of CPUs, GPUs, FPGAs, and artificial-intelligence (AI) accelerators, as well as communication devices like SmartNICs.

It’s based on PCI Express (PCIe), the de facto peripheral interface found on virtually all high-performance compute chips. CXL requires additional support over and above PCIe, but it takes advantage of PCIe’s interface and switch-based scalability. Another standard based on PCIe is the Non-Volatile Memory Express (NVMe) standard. Though NVMe isn’t a cache-coherent interface, it’s become the de facto standard for solid-state storage in the enterprise.

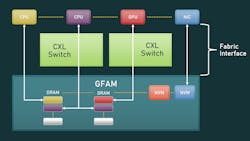

CXL started as a cache-coherent memory interface. With CXL 3, there’s support for Global Fabric Attached Memory (GFAM), which allows different types of memory to be directly attached to the fabric and made accessible to multiple processor nodes (Fig. 2).

Nodes come in various types in addition to host nodes like CPUs, GPUs, and FPGAs:

- Type 1 includes devices with a cache such as a SmartNIC.

- Type 2 involves devices with cache and memory. These are devices like AI accelerators.

- Type 3 covers devices with memory such as a memory expander.

PCIe peripheral devices also can be connected via the fabric, but they don’t provide memory to the fabric.

A coherent memory fabric like CXL supports memory disaggregation, so more memory can be added to the fabric independently of the compute nodes. The memory and nodes can be partitioned, with the partitions isolated from one another for security and performance reasons.
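The partitioning idea can be pictured with a small bookkeeping model. This is only an illustrative sketch: the class `FabricPartitions`, its methods, and the device names are hypothetical, and in a real system isolation is enforced by the switches and management software rather than by anything resembling this code.

```python
class FabricPartitions:
    """Toy model: every host and memory device belongs to one partition."""

    def __init__(self):
        self._host_partition = {}    # host name -> partition name
        self._memory_partition = {}  # memory device name -> partition name

    def add_host(self, host, partition):
        self._host_partition[host] = partition

    def add_memory(self, device, partition):
        # Assumption for this sketch: a memory device is assigned
        # to exactly one partition and cannot be assigned twice.
        if device in self._memory_partition:
            owner = self._memory_partition[device]
            raise ValueError(f"{device} is already assigned to {owner}")
        self._memory_partition[device] = partition

    def can_access(self, host, device):
        """A host reaches a memory device only inside its own partition."""
        host_part = self._host_partition.get(host)
        return host_part is not None and host_part == self._memory_partition.get(device)


fabric = FabricPartitions()
fabric.add_host("cpu0", "A")
fabric.add_host("gpu0", "B")
fabric.add_memory("expander0", "A")

print(fabric.can_access("cpu0", "expander0"))  # same partition
print(fabric.can_access("gpu0", "expander0"))  # different partition
```

The point of the model is simply that access is decided by partition membership, not by physical attachment to the fabric.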

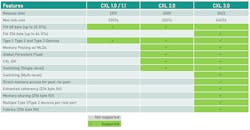

The CXL standard incorporates the CXL.io, CXL.cache, and CXL.mem protocols. The CXL.io protocol is a basic extension of PCIe 5 and works with non-coherent load/stores. CXL.cache provides cache-coherent memory support using block transfers. CXL.mem operates at the load/store level. Type 1 supports CXL.io and CXL.cache. Type 2 supports all three, while Type 3 supports CXL.io and CXL.mem.
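The mapping between device types and protocols described above is compact enough to write down as a lookup table. The following is a minimal sketch; the names `Protocol`, `DEVICE_PROTOCOLS`, `supports`, and `classify` are invented for illustration and don't come from the CXL specification.

```python
from enum import Enum


class Protocol(Enum):
    IO = "CXL.io"
    CACHE = "CXL.cache"
    MEM = "CXL.mem"


# Protocol sets per device type, as listed in the text above.
DEVICE_PROTOCOLS = {
    1: frozenset({Protocol.IO, Protocol.CACHE}),                # cache only
    2: frozenset({Protocol.IO, Protocol.CACHE, Protocol.MEM}),  # cache and memory
    3: frozenset({Protocol.IO, Protocol.MEM}),                  # memory only
}


def supports(device_type, protocol):
    """Return True if the given device type carries the protocol."""
    return protocol in DEVICE_PROTOCOLS[device_type]


def classify(protocols):
    """Map a set of protocols back to the matching device type."""
    wanted = frozenset(protocols)
    for device_type, supported in DEVICE_PROTOCOLS.items():
        if supported == wanted:
            return device_type
    raise ValueError("no device type matches this protocol set")


print(supports(3, Protocol.CACHE))              # a memory expander has no CXL.cache
print(classify({Protocol.IO, Protocol.MEM}))    # resolves to Type 3
```

Note that CXL.io appears in every set, which matches its role as the PCIe-derived base protocol.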

The fabric is connected via CXL switches (Fig. 3). The same fabric can be used to connect hosts to PCIe peripherals, since PCIe support is a subset of the CXL specification. Fabrics can be configured in a spine-leaf configuration that’s similar to those used for Ethernet connectivity.

CXL 2 added features like memory pooling with support for multiple logical devices (MLDs). It also introduced Integrity and Data Encryption (IDE) for link-level security along with standardized fabric management, but it defined only a single level of switching. The CXL 3 specification greatly expands the functionality of the standard in addition to doubling bandwidth to 64 Gtransfers/s by moving to the PCIe 6 PHY (Fig. 4). The Flow Control Unit (FLIT) was expanded to 256 bytes in PAM-4 transfer mode.
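To put the 64-GT/s figure in perspective, the raw link bandwidth can be estimated with simple arithmetic. The sketch below assumes a x16 link (the lane count isn't stated in the tutorial summary) and treats each transfer as one bit per lane; it ignores the CRC and error-correction overhead carried inside each FLIT, so usable bandwidth is lower. The function names are made up for this example.

```python
def raw_link_bandwidth_gbytes(rate_gt_per_s, lanes):
    """Raw one-direction bandwidth in GB/s, one bit per transfer per lane."""
    return rate_gt_per_s * lanes / 8


def flits_per_second(rate_gt_per_s, lanes, flit_bytes=256):
    """Upper bound on FLITs per second if the link carried nothing else."""
    return raw_link_bandwidth_gbytes(rate_gt_per_s, lanes) * 1e9 / flit_bytes


# Assumed x16 link at 64 GT/s.
print(raw_link_bandwidth_gbytes(64, 16))  # 128.0 GB/s per direction
print(flits_per_second(64, 16))           # 500000000.0 FLITs/s
```

Halving the rate to 32 GT/s gives 64 GB/s for the same link width.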

Hardware for CXL 1 and 2 is already available, including memory-expander boards that support standard memory devices like DDR RAM. CXL 3 adds support for Port Based Routing (PBR), which can handle up to 4,096 nodes.
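The 4,096-node limit is a power of two, which hints at the size of the identifier needed to address every node. As a quick check, and assuming nothing about how the specification actually lays out its routing fields, the helper below (a hypothetical name) computes the smallest ID width that covers a given node count.

```python
def id_bits_needed(max_nodes):
    """Smallest number of bits that gives each of max_nodes a distinct ID."""
    if max_nodes < 1:
        raise ValueError("max_nodes must be at least 1")
    # IDs run from 0 to max_nodes - 1, so size the field for the largest one.
    return max(1, (max_nodes - 1).bit_length())


print(id_bits_needed(4096))  # 12
```

A 12-bit identifier is therefore enough to name every node in a maximally sized PBR fabric.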