Check out more coverage of the Hot Chips 2022 event. This article is also part of the TechXchange: SmartNIC Accelerating the Smart Data Center.

AMD has swiped serious market share in server CPUs from Intel in recent years. But it has bold ambitions to become a premier supplier in the data center by offering a host of accelerators to complement its CPUs.

The Santa Clara, California-based company has spent about $50 billion to buy programmable-chip giant Xilinx and startup Pensando, expanding its presence in the market for a relatively new type of networking chip called a SmartNIC. These chips, sometimes called data processing units (DPUs), are protocol accelerators that plug into servers and take over from the CPU the infrastructure operations vital to running data centers and telco networks.

At Hot Chips last month, AMD mapped out how it plans to compete in the increasingly crowded market for these infrastructure-class chips and how it hopes the underlying technology evolves in the years ahead.

The company said it plans at some point to roll out a high-performance 400-Gb/s SmartNIC based on Xilinx's adaptive SoCs, which unite FPGA and ASIC logic, rather than one or the other, with embedded Arm CPU cores.

Intelligent Offload

Today, the CPUs at the heart of a server function as a traffic cop for the data center, managing networking, storage, security, and a wide range of other behind-the-scenes workloads that primarily run in software.

But relying on a CPU to coordinate the traffic in a data center is expensive and inefficient: more than a third of its capacity can be consumed by infrastructure workloads. Host CPUs in servers are also becoming bogged down by virtual machines and containers, AMD's Jaideep Dastidar said in a keynote at Hot Chips. The problem is compounded by rising demand for high-bandwidth networking and by new security requirements.

“All of this resulted in a situation where you had an overburdened CPU," Dastidar pointed out. "So, to the rescue came SmartNICs and DPUs, because they help offload those workloads from the host CPU."

These devices have been deployed in the public cloud for years. AWS’s Nitro silicon, classified as a SmartNIC, was designed in-house to offload networking, storage, and other tasks from the servers it rents to cloud users.

Dastidar said the processor at the heart of the 400-Gb/s SmartNIC will play the same sort of role, isolating many of the I/O-heavy workloads in the data center and freeing up valuable CPU cycles in the process.

Hard- or Soft-Boiled

These server networking cards generally pair high-speed networking with ASIC-like hardened logic or FPGA-like programmable logic, plus general-purpose embedded CPU cores. But tradeoffs abound.

Hardened logic is best when you want to use the SmartNIC to carry out focused, data-heavy workloads because it delivers the best performance per watt. However, this comes at the expense of flexibility.

Such ASICs are custom-made for a very specific workload, such as artificial intelligence (AI) in the case of Google's TPU or video transcoding in the case of YouTube's Argos, as opposed to regular CPUs that are intended for general-purpose use.

Embedded CPU cores, Dastidar said, represent a more flexible alternative for offloading networking and other workloads from the server's host CPU. But they suffer from many of the same limitations as the host CPU itself.

Even the relatively rudimentary NICs used in servers today may have to handle more than 100 million network packets per second, and general-purpose Arm CPU cores lack the processing capacity to keep up with all of it.
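To see why packet rates climb so quickly, consider the worst case of minimum-size Ethernet frames. The sketch below is a back-of-the-envelope calculation of our own, not something AMD presented; it assumes 64-byte frames plus 20 bytes of per-frame wire overhead (preamble, start-of-frame delimiter, and interframe gap), and the function name is illustrative.

```python
# Worst-case packet rate a NIC must sustain at Ethernet line rate.
# Assumption: minimum-size 64-byte frames plus 20 bytes of per-frame wire
# overhead (7-byte preamble, 1-byte start delimiter, 12-byte interframe gap).

MIN_FRAME_BYTES = 64
WIRE_OVERHEAD_BYTES = 20


def max_packets_per_second(link_gbps: float, frame_bytes: int = MIN_FRAME_BYTES) -> float:
    """Return the packet rate that saturates a link of link_gbps gigabits per second."""
    bits_per_packet = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_gbps * 1e9 / bits_per_packet


if __name__ == "__main__":
    for gbps in (25, 100, 200, 400):
        mpps = max_packets_per_second(gbps) / 1e6
        print(f"{gbps:>3} Gb/s -> {mpps:6.1f} million packets/s")
```

At 100 Gb/s this works out to roughly 148.8 million packets per second, and at 400 Gb/s to about 595 million. Real traffic mixes include larger packets, so actual rates are usually lower, but the worst case is what the hardware must be sized for.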

“But you can’t overdo it,” said Dastidar. “When you throw a whole lot of embedded cores at the problem, what will end up happening is you’re recreating the problem [you had] in the host CPU in the first place.”

Because of this, many of the most widely used chips in the SmartNIC and DPU category, including Marvell’s Octeon DPU and NVIDIA’s BlueField, use hardened, special-purpose ASIC logic to speed up specific workloads that rarely change. That hardened logic is paired with highly programmable Arm CPU cores that are responsible for control-plane management.

Other chips, from the likes of Xilinx and Intel, use the programmable logic at the heart of FPGAs, which delivers much faster performance than embedded CPU cores for networking chores such as packet processing. FPGAs are also reconfigurable, giving you the ability to fine-tune them for specific functions and add (or subtract) different features at will.

There are tradeoffs: Server FPGAs are very expensive compared with ASICs and CPUs. “Here, too, you don’t want to overdo it because then the FPGA will start comparing unfavorably [to ASICs],” said Dastidar.

Intel plans to roll out a new family of what it calls infrastructure processing units (IPUs) based on FPGAs as early as 2023 or 2024, supporting the same 400 Gb/s of networking as AMD’s future SmartNICs.

Three-in-One SoC

Instead of playing tug-of-war with different architectures, AMD said that it plans to combine all three building blocks in a single chip to strike the best possible balance of performance, power efficiency, and flexibility.

According to AMD, this lets you run each workload on whichever subsystem (ASIC, FPGA, or Arm CPU) is most appropriate. Alternatively, you can divide a workload and distribute its functions across different subsystems.

"It doesn't matter if one particular heterogeneous component is solving one function or a combination of heterogeneous functions. From a software perspective, it should look like one system-on-a-chip," said Dastidar.
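One way to picture that division of labor is as a placement policy. The following is a purely hypothetical sketch: the `Subsystem` and `Function` types, the `place()` heuristic, and the sample workloads are illustrative inventions that encode the tradeoffs described in this article (hardened logic for stable, data-heavy tasks; programmable logic for data-plane functions that change; Arm cores for control-plane work). They do not represent AMD's software stack or any real API.

```python
from dataclasses import dataclass
from enum import Enum


class Subsystem(Enum):
    HARDENED = "hardened ASIC-style logic"
    PROGRAMMABLE = "FPGA-style programmable logic"
    ARM_CORES = "embedded Arm CPU cores"


@dataclass(frozen=True)
class Function:
    name: str
    data_plane: bool     # touches every packet or byte (vs. control plane)
    changes_often: bool  # expected to be updated in the field


def place(fn: Function) -> Subsystem:
    """Hypothetical heuristic mirroring the article's performance/flexibility tradeoffs."""
    if not fn.data_plane:
        return Subsystem.ARM_CORES      # control plane, telemetry: flexibility first
    if fn.changes_often:
        return Subsystem.PROGRAMMABLE   # fast, yet reconfigurable after deployment
    return Subsystem.HARDENED           # stable and data-heavy: best performance per watt


WORKLOADS = [
    Function("inline crypto", data_plane=True, changes_often=False),
    Function("storage offload", data_plane=True, changes_often=False),
    Function("custom packet filter", data_plane=True, changes_often=True),
    Function("control-plane management", data_plane=False, changes_often=True),
    Function("telemetry", data_plane=False, changes_often=False),
]

if __name__ == "__main__":
    for fn in WORKLOADS:
        print(f"{fn.name:<26} -> {place(fn).value}")
```

Splitting a single service works the same way: a hypothetical firewall could keep rule management on the Arm cores while its per-packet matching runs in programmable logic.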

The architecture of the upcoming high-performance SmartNIC is comparable to the insides of Xilinx's Versal accelerator family, which is based on what Xilinx calls its Adaptive Compute Acceleration Platform (ACAP).

AMD said the SmartNIC, based on 7-nm technology from TSMC, will leverage the hardened logic where it works best: Offloading cryptography, storage, and networking data-plane workloads that require power-efficient acceleration.

For customers whose networking and other workloads change frequently, "you can also completely hot add or remove new accelerator functions in the programmable logic,” said Dastidar.

Embedded CPU cores are responsible for control-plane management. They are also tapped for telemetry—logging data about traffic traveling on the network to help identify delays and get congestion under control.
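As a rough illustration of what such telemetry might involve, the sketch below keeps an exponentially weighted moving average (EWMA) of latency for each flow and flags flows that look congested. The `FlowTelemetry` class, the smoothing factor, and the 50-microsecond threshold are all hypothetical choices for the example, not details AMD disclosed.

```python
from collections import defaultdict


class FlowTelemetry:
    """Toy per-flow latency tracker of the kind an embedded core might run.

    Keeps an EWMA of observed latency per flow and flags flows whose
    smoothed latency exceeds a (hypothetical) congestion threshold.
    """

    def __init__(self, alpha: float = 0.2, threshold_us: float = 50.0):
        self.alpha = alpha                # weight given to the newest sample
        self.threshold_us = threshold_us  # hypothetical congestion threshold
        self.ewma_us = {}                 # flow id -> smoothed latency (us)
        self.packets = defaultdict(int)   # flow id -> samples observed

    def record(self, flow: str, latency_us: float) -> None:
        prev = self.ewma_us.get(flow)
        if prev is None:
            self.ewma_us[flow] = latency_us  # first sample seeds the average
        else:
            self.ewma_us[flow] = self.alpha * latency_us + (1 - self.alpha) * prev
        self.packets[flow] += 1

    def congested(self) -> list[str]:
        """Flows whose smoothed latency is above the threshold, worst first."""
        hot = [f for f, v in self.ewma_us.items() if v > self.threshold_us]
        return sorted(hot, key=self.ewma_us.get, reverse=True)


if __name__ == "__main__":
    t = FlowTelemetry()
    for lat in (10, 12, 11):
        t.record("vm1->storage", lat)
    for lat in (40, 90, 120):
        t.record("vm2->web", lat)
    print(t.congested())  # only the flow with rising latency is flagged
```

An EWMA is cheap to update per sample and smooths out one-off spikes, which suits a control-plane core that has to digest a steady stream of measurements.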

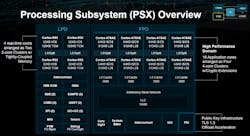

Specifically, the processor features 16 Arm Cortex-A78AE cores to run high-performance workloads, arranged in quad-core clusters that each have access to 8 MB of L2 cache. Supplementing them is 16 MB of L3 cache.

These are complemented by four real-time Arm Cortex-R52 cores ideal for workloads where power efficiency is the focus. These CPU cores, each equipped with tightly coupled memory (TCM), control the processor’s power-saving “lights-out” mode.

Everything is interconnected with a programmable network-on-chip (NOC) that assists with data movement. The NOC enables all of the subsystems in the SoC to access programmable logic and memory as necessary.

So-called “adapter interfaces” also sit between different subsystems in the SoC, allowing the hardened NIC and embedded CPUs to interact with the programmable logic when you want more customization.

It is unclear whether the SoC at the heart of the SmartNIC will include the Xilinx-designed AI Engines that sit alongside the digital-signal-processing (DSP) engines in other members of the Versal family.

Flexibility for All

Memory is attached to the infrastructure processor with up to eight 32-GB LPDDR5-6400 or DDR5-5600 memory controllers with error-correction code (ECC).

As networking is one of the sweet spots for SmartNICs, two 200-Gb/s Ethernet interfaces are included, for 400 Gb/s in total. To connect to the host CPU or other resources in a server, there are 16 lanes of PCIe 5.0 or CXL 2.0 connectivity.
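A quick sanity check suggests the host link is sized to keep up with the network ports. This arithmetic is ours, not AMD's: it assumes PCIe 5.0's 32-GT/s per-lane signaling rate and 128b/130b line encoding, and it ignores packet-level protocol overhead, so real-world throughput would be somewhat lower.

```python
# Compare the SmartNIC's aggregate Ethernet bandwidth with a PCIe 5.0 x16
# host link. Assumptions: 32 GT/s per lane, 128b/130b encoding; TLP headers,
# flow control, and other protocol overhead are ignored.

PCIE5_GT_PER_LANE = 32.0
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbps(lanes: int, gt_per_lane: float = PCIE5_GT_PER_LANE) -> float:
    """Per-direction PCIe bandwidth in Gb/s after line encoding."""
    return lanes * gt_per_lane * ENCODING_EFFICIENCY


ethernet_gbps = 2 * 200       # two 200-Gb/s Ethernet ports
host_gbps = pcie_gbps(16)     # x16 link, one direction

if __name__ == "__main__":
    print(f"Ethernet aggregate: {ethernet_gbps} Gb/s")
    print(f"PCIe 5.0 x16:       {host_gbps:.0f} Gb/s (~{host_gbps / 8:.0f} GB/s) per direction")
    print(f"Headroom:           {host_gbps / ethernet_gbps:.2f}x")
```

The result is about 504 Gb/s (roughly 63 GB/s) in each direction, or about 1.26 times the 400 Gb/s of combined Ethernet bandwidth. Keeping gigabits (Gb/s) and gigabytes (GB/s) per second straight matters here, since the two differ by a factor of eight.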

AMD is also baking advanced security into the adaptive SoC, including secure boot, memory and peripheral protection units, and several additional features that secure data while it travels the network or sits in storage.

In the end, the utility of the adaptive SoC at the heart of the SmartNIC is its flexibility. “You can optimize the same adaptive SoC to run different workloads in data centers at different nodes, and once you have already deployed it, you can upgrade, hot-swap, or change the functionality inside the SoC,” said Dastidar.

It's unclear when AMD plans to bring the Xilinx-designed SmartNIC to market. But the company is confident that it will complement, rather than compete against, Pensando's DPUs, which promise many of the same benefits as SmartNICs.

“You know, the deployments in the data center are not homogeneous,” said Dastidar, adding that offering both DPU- and adaptive SoC-based SmartNICs “gives the customer the choice to engage in any of those models.”
