What’s the Difference Between CUDA and ROCm for GPGPU Apps?

What you’ll learn:

- Differences between CUDA and ROCm.

- What are the strengths of each platform?

Graphics processing units (GPUs) are traditionally designed to handle graphics computational tasks, such as image and video processing and rendering, 2D and 3D graphics, vectoring, and more. General-purpose computing on GPUs became more practical and popular after 2001, with the advent of both programmable shaders and floating-point support on graphics processors.

Notably, it involved problems with matrices and vectors, including two-, three-, or four-dimensional vectors. These were easily translated to a GPU, which acts with native speed and support on those types. A significant milestone for general-purpose GPUs (GPGPUs) was the year 2003, when a pair of research groups independently discovered GPU-based approaches for the solution of general linear algebra problems on GPUs that ran faster than on CPUs.

GPGPU Evolution

Early efforts to use GPUs as general-purpose processors required reformulating computational problems in terms of graphics primitives, which were supported by two major APIs for graphics processors: OpenGL and DirectX.

Those were followed shortly after by NVIDIA's CUDA, which enabled programmers to drop the underlying graphical concepts for more common high-performance computing concepts, such as OpenCL and other high-end frameworks. That meant modern GPGPU pipelines could leverage the speed of a GPU without requiring complete and explicit conversion of the data to a graphical form.

NVIDIA describes CUDA as a parallel computing platform and application programming interface (API) that allows the software to use specific GPUs for general-purpose processing. CUDA is a software layer that gives direct access to the GPU's virtual instruction set and parallel computational elements for executing compute kernels.

Not to be left out, AMD launched its own general-purpose computing platform in 2016 dubbed Radeon Open Compute Ecosystem (ROCm). ROCm is primarily targeted at discrete professional GPUs, such as AMD's Radeon Pro line. However, official support is more expansive and extends to consumer-grade products, including gaming GPUs.

Unlike CUDA, the ROCm software stack can take advantage of several domains, such as general-purpose GPGPU, high-performance computing (HPC), and heterogeneous computing. It also offers several programming models, such as HIP (GPU-kernel-based programming), OpenMP/Message Passing Interface (MPI), and OpenCL. These also support microarchitectures, including RDNA and CDNA, for myriad applications ranging from AI and edge computing to the IoT/IIoT.

NVIDIA's CUDA

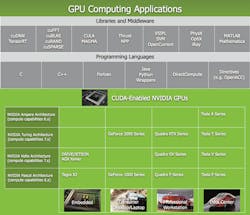

Most cards in NVIDIA's Tesla and RTX series come equipped with a series of CUDA cores (Fig. 1) designed to tackle multiple calculations at the same time. These cores are similar to CPU cores, but they come packed on the GPU and can process data in parallel. There may be thousands of these cores embedded within the GPU, making them incredibly efficient parallel systems capable of offloading CPU-centric tasks directly to the GPU.

Parallel computing is described as the process of breaking down larger problems into smaller, independent parts that can be executed simultaneously by multiple processors communicating via shared memory. These are then combined upon completion as part of an overall algorithm. The primary goal of parallel computing is to increase available computation power for faster application processing and problem-solving.

To that end, the CUDA architecture is designed to work with programming languages such as C, C++, and Fortran, making it easier for parallel programmers to use GPU resources. This is in contrast to prior APIs like Direct3D and OpenGL, which required advanced skills in graphics programming. CUDA-powered GPUs also support programming frameworks such as OpenMP, OpenACC, OpenCL, and HIP by compiling such code to CUDA.

As with most APIs, software development kits (SDKs), and software stacks, NVIDIA provides libraries, compiler directives, and extensions for the popular programming languages mentioned earlier, which makes programming easier and more efficient. These include cuSPARCE, NVRTC runtime compilation, GameWorks Physx, MIG multi-instance GPU support, cuBLAS, and many others.

A good portion of those software stacks is engineered to handle AI-based applications, including machine learning and deep learning, computer vision, conversational AI, and recommendation systems.

Computer-vision apps use deep learning to gain knowledge from digital images and videos. Conversational AI apps help computers understand and communicate through natural language. Recommendation systems utilize images, language, and a user's interests to offer meaningful and relevant search results and services.

GPU-accelerated deep-learning frameworks provide a level of flexibility to design and train custom neural networks and provide interfaces for commonly used programming languages. Every major deep-learning framework, such as TensorFlow, PyTorch, and others, is already GPU-accelerated, so data scientists and researchers can get up to speed without GPU programming.

Current usage of the CUDA architecture that goes beyond AI includes bioinformatics, distributed computing, simulations, molecular dynamics, medical analysis (CTI, MRI, and other scanning image apps), encryption, and more.

AMD's ROCm Software Stack

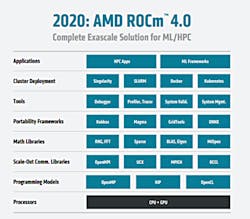

AMD's ROCm (Fig. 2) software stack is similar to the CUDA platform, only it's open source and uses the company's GPUs to accelerate computational tasks. The latest cards in the Radeon Pro W6000 and RX6000 series come equipped with compute cores, ray accelerators (Ray tracing), and stream processors that take advantage of the RDNA architecture for parallel processing, including GPGPU, HPC, HIP (CUDA-like programming model), MPI, and OpenCL.

Since the ROCm ecosystem is comprised of open technologies, including frameworks (TensorFlow/PyTorch), libraries (MIOpen/Blas/RCCL), programming models (HIP), interconnects (OCD) and up-streamed Linux Kernel support, the platform is routinely optimized for performance and efficiency for a wide range of programming languages.

AMD’s ROCm is built for scale, meaning it supports multi-GPU computing in and out of server-node communication via remote direct memory access (RDMA), which provides the ability to access host memory directly without CPU intervention. Thus, the greater the system’s RAM, the larger the processing loads that can be handled by ROCm.

ROCm also simplifies the stack when the driver directly incorporates RDMA peer-sync support, making it easy to develop applications. In addition, it includes ROCr System Runtime, which is language independent and takes advantage of the HAS (heterogeneous system architecture) Runtime API, providing a foundation to execute programming languages like HIP and OpenMP.

As with CUDA, ROCm is an ideal solution for AI applications, as some deep-learning frameworks already support a ROCm backend (e.g., TensorFlow, PyTorch, MXNet, ONNX, CuPy, and more). According to AMD, any CPU/GPU vendor can take advantage of ROCm, as it isn't proprietary technology. That means code written in CUDA or another platform can port to the vendor-neutral HIP format, and from there, users can compile the code for the ROCm platform.

The company offers a series of libraries, add-ons, and extensions to further ROCm's functionality, including a solution (HCC) for the C++ programming language that allows users to integrate CPU and GPU into a single file.

The feature set for ROCm is extensive and incorporates multi-GPU support for coarse-grain virtual memory, the ability to process concurrency and preemption, HSA signals and atomics, DMA, and user-mode queues. It also offers a standardized loader and code-object formats, dynamic and offline compilation support, P2P multi-GPU operation with RDMA support, trace and event-collection API, and system-management APIs and tools. On top of that, there’s a growing, third-party ecosystem packaging custom ROCm distributions for any given application in a multitude of Linux flavors.

Conclusion

This article is just a simple overview of NVIDIA's CUDA platform and AMD's ROCm software stack. In-depth information, including guides, walkthroughs, libraries, and more, can be found at their respective websites linked above.

There’s no top platform in the parallel computing arena—each provides an excellent system for easy application development in various industries. Both also are easy to use with intuitive menus, navigation elements, accessible documentation, and educational materials for beginners.