Arm Unveils Its Most Compact AI-Capable Cortex-M CPU

This article is part of the TechXchange: TinyML: Machine Learning for Small Platforms.

Arm is pumping up its ambitions for on-device AI. The world’s largest semiconductor IP vendor rolled out its smallest and most power-efficient 32-bit CPU core yet based on its Helium technology—the Cortex-M52.

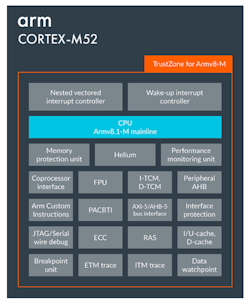

Arm said the Cortex-M52, which is designed specifically to bring machine learning (ML) out of the cloud and into battery-powered IoT devices, delivers a more than 5.6X uplift in AI inference performance over its predecessors without spending additional power, silicon area, and cost on a separate neural processing unit (NPU). It also implements the company’s TrustZone technology to keep the core and the system it belongs to secure.

The M52 expands Arm’s Helium extension into a new class of Cortex-M, making it possible to run larger ML models on the billions of microcontrollers (MCUs) that have been largely off-limits for AI.

While the CPU core can be configured in a number of ways, Arm said the Cortex-M52 will make a major difference in tiny battery-powered chips that typically only cost a couple of dollars. With it, these chips can run AI for the purposes of predictive maintenance, using microphones to listen for specific noises such as squeaking wheels and rattling gears in factory machinery or other sensors to identify irregular vibrations. Other possibilities for the CPU include intelligent motor control and power management.

According to Arm, the M52 brings enough performance into the fold to run real-time keyword detection and gesture detection on IoT devices such as smart speakers and lights, or even sensor fusion in wearable devices.

“As this technology advances, on-device intelligence is being deployed in smaller, more cost-sensitive and often battery-powered devices at the lowest cost points, with greater privacy and reliability due to less reliance on the cloud,” said Paul Williamson, senior vice president and general manager of Arm’s IoT business. He cited the Cortex-M52 as a potential future substitute for the Cortex-M3, -M33, and -M4.

Arm indicated that customers will start rolling out chips based on the Cortex-M52 by 2024.

Hardware: At the Heart of On-Device AI

Today, machine learning is no longer limited to data centers stocked with AI accelerators that can carry out many trillions of operations per second (TOPS) and incorporate vast amounts of on-chip high-bandwidth memory.

But it’s uniquely challenging to run ML directly on tiny, power-limited IoT devices based on Cortex-M or other CPU cores in the same class. These chips run at a fraction of the clock frequency of Arm’s Cortex-A cores used in smartphones (typically in the 100-MHz range) and have relatively small amounts of on-chip SRAM, creating a tight fit for the neural networks at the heart of machine learning. Although that is starting to change, they usually lack neural engines or other dedicated AI cores.
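To make the "tight fit" concrete, the back-of-the-envelope check below compares the storage needed for a model's weights at different numeric precisions against an on-chip memory budget. It is a minimal sketch: the parameter count and the 512-KiB budget are hypothetical placeholders rather than Cortex-M52 figures, and it ignores activations, program code, and any split between flash and SRAM.

```python
# Rough check of whether a model's weights fit in an on-chip memory budget.
# All numbers used in the example run are hypothetical, not Cortex-M52 specs.

BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "int8": 1}


def weight_footprint_bytes(num_params: int, dtype: str) -> int:
    """Return the storage needed for the weights alone, in bytes."""
    return num_params * BYTES_PER_WEIGHT[dtype]


def fits_in_budget(num_params: int, dtype: str, budget_bytes: int) -> bool:
    """True if the weights alone fit; activations and code need room too."""
    return weight_footprint_bytes(num_params, dtype) <= budget_bytes


if __name__ == "__main__":
    params = 300_000       # hypothetical small keyword-spotting model
    budget = 512 * 1024    # hypothetical 512-KiB memory budget
    for dtype in BYTES_PER_WEIGHT:
        size_kib = weight_footprint_bytes(params, dtype) / 1024
        verdict = fits_in_budget(params, dtype, budget)
        print(f"{dtype}: {size_kib:.0f} KiB, fits={verdict}")
```

With these placeholder numbers, only the 8-bit version of the model fits, which is one reason compact data formats matter so much in this class of device.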

To solve the challenges, everyone from technology giants to startups is trying to create more compact ML models that can run directly on MCU-class CPUs—or software tools that can do the job.

Running ML models directly on the device avoids the latency of a round trip to the cloud, which can take a toll on performance. Connecting to the cloud only when necessary is also more efficient, and it reduces security and privacy risks.

While software is invaluable, hardware is the limiting factor for the types of tiny AI that can fit inside Arm’s Cortex-M cores, which have been baked into more than 100 billion chips designed by its clientele to date.

The company has been trying to turn the tide with its latest Cortex-M CPU architecture, called Armv8.1-M. It’s meant to run ML directly in ultra-low-power embedded and IoT devices without resorting to a more muscular CPU. In 2020, Arm introduced the first CPU blueprint in the new family, the Cortex-M55. It later filled out the high end of the family with the Cortex-M85, which brings even more performance to the table.

The Cortex-M55 and -M85 (and -M52) are also designed to be paired with Arm’s Ethos-U55, its first “micro” NPU. The NPU lets a device handle more of the heavy lifting of ML locally before it has to fall back on the cloud.

Secure and Intelligent: The Anatomy of the Cortex-M52

While Arm covers the high end of the market with the M85 and the mid-range with the M55, the company said customers are keen to bring Helium technology into even smaller form factors and tighter power budgets.

Enter the Cortex-M52. It fits into a silicon area approximately 25% smaller than the Cortex-M55, while bringing a large generational leap in performance over the Cortex-M33. At its heart is Helium, a 128-bit-wide vector processing pipeline that can process single-precision (32-bit) and half-precision (16-bit) floating-point data. It also handles 8-bit integer data formats, which are widely used in the neural-network computations at the heart of ML.
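The appeal of narrow data types on a 128-bit vector comes down to simple arithmetic: the narrower each element, the more of them fit in one vector. The sketch below works out the lane counts and then simulates, in plain scalar Python, an 8-bit dot product processed one vector-width chunk at a time. It models the data layout only; it does not use or emulate actual Helium instructions, and the function names are illustrative.

```python
# Lane arithmetic for a 128-bit vector, plus a scalar simulation of a
# chunked int8 dot product. This models data layout only, not Helium itself.

VECTOR_BITS = 128


def lanes(element_bits: int) -> int:
    """Number of elements of a given width that fit in one 128-bit vector."""
    return VECTOR_BITS // element_bits


def chunked_int8_dot(a: list[int], b: list[int]) -> tuple[int, int]:
    """Dot product of two int8 sequences, one vector-width chunk at a time.

    Returns (result, number_of_chunks). The accumulator is a Python int,
    standing in for a wider accumulator than the 8-bit inputs.
    """
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    if any(not -128 <= x <= 127 for x in list(a) + list(b)):
        raise ValueError("values must fit in int8")
    width = lanes(8)  # 16 int8 elements per 128-bit vector
    acc = 0
    chunks = 0
    for start in range(0, len(a), width):
        chunk_a = a[start:start + width]
        chunk_b = b[start:start + width]
        acc += sum(x * y for x, y in zip(chunk_a, chunk_b))
        chunks += 1
    return acc, chunks


if __name__ == "__main__":
    for bits in (32, 16, 8):
        print(f"{bits}-bit elements: {lanes(bits)} lanes per vector")
    weights = [1, -2, 3, -4] * 8   # 32 int8 values
    inputs = [5, 6, 7, 8] * 8
    print(chunked_int8_dot(weights, inputs))
```

Going from 32-bit to 8-bit elements quadruples the number of values handled per vector, so the same 32-element dot product needs two chunks instead of eight. How that translates into real-world speedups depends on the hardware and the compiler.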

In addition, Helium can deliver a large uplift in digital-signal-processing (DSP) performance—up to 2.7X over its previous generation in the Cortex-M family—which can be used for signal pre-processing.

“As machine-learning workloads become more of a standard part of the average software developer’s toolkit, I think we will see the M52 being used widely as a mainstream MCU,” Williamson told Electronic Design.

The Cortex-M52 adds a more advanced direct-memory-access (DMA) controller to manage all of the data moving in and out of the CPU. Arm said it facilitates the use of tightly coupled memory (TCM) that can supplement the SRAM inside the CPU and allows for larger ML models to fit inside a device.

Keeping IoT devices secure is part of the plan for the Cortex-M52, which adds TrustZone technology to isolate and partition the secure and non-secure sectors of the CPU. Other extensions, including pointer authentication and branch target identification (PACBTI), are part of the package. These security features help defend against return-oriented programming (ROP) and jump-oriented programming (JOP) attacks.

If all of these new features are insufficient, Arm said companies can create custom instructions to optimize the processor for specific workloads and squeeze more processing per clock cycle out of the Cortex-M core.

Helium MCUs Offer Unified Software Development

One of the key advantages of the M52 is the use of a unified software development environment, said Arm.

According to the company, it would traditionally take a separate CPU, DSP, and NPU to match the performance of its Helium-based Cortex-M cores. In that setup, once physical hardware is in hand, developers must create and test code for three separate chips using three separate sets of tools, or spread the code across three separate IP blocks inside the SoC. That presents a challenge for traditional embedded and IoT developers grappling with the data analysis, tool expertise, and programming skills required for AI, said Arm.

Helium-based Cortex-M cores offer an alternative, giving customers a unified set of development tools, software libraries, and models that can tap into ML scalar, vector, and matrix operations from a single CPU core, saving silicon area and cost. “Now, we are bringing AI within reach” on a single set of tools and a single CPU architecture, said Williamson.

Since it forms a sort of family with the M55 and M85, Arm stated the Cortex-M52 is fully software-compatible with the growing ecosystem of software, tools, and models already present around its Helium technology.

The Cortex-M52 core will also be accessible as “virtual hardware” in the cloud. This allows anyone to start building and testing software before physical silicon with Arm IP inside is delivered.

Moreover, the M52 works with common frameworks for ML, including TensorFlow Lite.
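Frameworks such as TensorFlow Lite can represent models with 8-bit integers by mapping each floating-point value to an integer through a scale and a zero point. The sketch below illustrates that affine mapping in plain Python. It is not TensorFlow Lite code, and the helper names are hypothetical; it is only meant to show how a real value lands in the int8 range and how much precision is lost on the way back.

```python
# Minimal affine (scale / zero-point) int8 quantization sketch.
# Illustrative only: helper names are hypothetical, not a framework API.

def quant_params(min_val: float, max_val: float) -> tuple[float, int]:
    """Compute a scale and zero point mapping [min_val, max_val] onto int8."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    min_val = min(min_val, 0.0)
    max_val = max(max_val, 0.0)
    scale = (max_val - min_val) / 255.0
    if scale == 0.0:
        return 1.0, 0  # degenerate range: every value is zero
    zero_point = round(-128 - min_val / scale)
    return scale, max(-128, min(127, zero_point))


def quantize(x: float, scale: float, zero_point: int) -> int:
    """Map a real value to int8, clamping to the representable range."""
    q = round(x / scale) + zero_point
    return max(-128, min(127, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Map an int8 value back to an approximate real value."""
    return scale * (q - zero_point)


if __name__ == "__main__":
    scale, zero_point = quant_params(-1.0, 3.0)
    for x in (-1.0, 0.0, 0.3, 2.9):
        q = quantize(x, scale, zero_point)
        print(f"{x:+.3f} -> {q:4d} -> {dequantize(q, scale, zero_point):+.4f}")
```

For values inside the chosen range, the round trip is accurate to within roughly half of one quantization step, which is the trade-off that lets a model shrink to a quarter of its 32-bit size.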