We all are being conditioned by our smartphones. It happens every time we use finger gestures to tap, drag, zoom, or resize. It also happens whenever we use our phones to connect to payment systems, cloud services, and other devices, including PCs, TVs, and tablets.

Every time people use a smartphone or tablet, they become more conditioned to the user experience it delivers. And the more conditioned they become, the more they expect a similar experience in other systems they use.

Consequently, people now expect an intuitive, touchscreen interface in almost every device they interact with, whether it’s a gas pump, vending machine, point-of-sale payment system, or consumer-grade GPS device. These expectations are influencing the design of medical devices, industrial control panels, in-vehicle infotainment and HVAC systems, and a host of other traditional embedded devices.

It’s no surprise, then, that many device manufacturers are now looking to build devices that integrate 2D/3D graphics, touch gestures, multimedia, and speech interfaces that offer speech recognition, text-to-speech, or speech-to-text, all with the goal of delivering an attractive and intuitive user experience. At the same time, these devices still must address traditional demands for fast boot-ups, predictable response times, and reliable operation. They also must remain secure in a world that is becoming increasingly interconnected. And as more embedded designs become mobile, efficient power management comes to the fore.

A Single User Interface

To develop embedded devices that include all this functionality, along with support for the various device types consolidated in recent system-on-chip modules (e.g. audio, video encode and decode, CAN, USB, Bluetooth, Ethernet, I2C, SPI, SATA, GPIO, UART), device manufacturers often need to integrate several third-party software components, even just in the graphics subsystem.

To create their user interfaces (UIs), many embedded development teams use UI frameworks and graphics APIs such as Qt, OpenGL ES, Elektrobit EB GUIDE, and Crank Software Storyboard. These technologies, which can be used in everything from deeply embedded devices without GPUs to high-end multi-GPU systems, typically offer better performance, a smaller footprint, and faster boot times than interpreted environments like Adobe AIR, Android, HTML5 (JavaScript), Java, and Silverlight. The problem is that they can’t always be used in isolation. In many cases, the development team must also integrate graphics content based on HTML5, video, and other technologies.

Take the UI for a modern car infotainment system. The home screen, which features icons for diagnostics, user preferences, Bluetooth-phone integration, and other onboard functions, might be based on Qt, while the GPS navigation system might be based on OpenGL ES. Meanwhile, some applications and middleware might be based on HTML5, which is highly popular among app developers and is supported by every major operating system (OS) platform and all smartphone platforms.

To combine these environments successfully, a system needs a graphical composition manager that can consolidate output from multiple application windows onto a single display. The composition manager might need to tile, overlap, or blend these windows. To perform these operations efficiently, it should take advantage of hardware acceleration in the GPU. For its part, the development team needs deep graphics programming skills to arrange the frame buffers and handle the transformations (e.g. scaling, translation, rotation, alpha blending) when building the final image.

For example, each application that supplies a portion of the user interface for a sophisticated in-car infotainment system allocates a separate window and frame buffer from the graphical composition manager (Fig. 1). An HTML page provides the background wallpaper, which may come from a mobile device or a cloud service. On the left in Figure 1 is an analog video input from an iPod or iPhone device, digitized by a video capture chip into a frame buffer. On the right is a navigation display, built from the underlying OpenGL ES map rendering software, with an overlaid control application made with yet another graphical UI (GUI) technology. Properly implemented, graphical composition lets the user interact with components created in these different environments without having to manually switch environments or, indeed, noticing any “seams” between the environments.
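The composition step described above can be illustrated with a minimal sketch. It is not how any particular composition manager works: the `Window` record, the `blend` and `compose` functions, and the per-window `alpha` field are all hypothetical names chosen for illustration, and the loop runs in software where a real system would hand the work to the GPU. It shows only the idea of drawing several frame buffers back to front onto one display, with translation, clipping, and alpha blending.

```python
from dataclasses import dataclass
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


@dataclass
class Window:
    """Hypothetical window record: a frame buffer plus placement and opacity."""
    pixels: List[List[Pixel]]  # frame buffer rows, top to bottom
    x: int                     # left edge on the display (translation)
    y: int                     # top edge on the display (translation)
    alpha: float = 1.0         # 0.0 = fully transparent, 1.0 = opaque


def blend(src: Pixel, dst: Pixel, alpha: float) -> Pixel:
    """'Source over' blend of one pixel onto another."""
    return tuple(round(alpha * s + (1.0 - alpha) * d) for s, d in zip(src, dst))


def compose(width: int, height: int, windows: List[Window]) -> List[List[Pixel]]:
    """Composite windows back to front onto a black display buffer."""
    display = [[(0, 0, 0)] * width for _ in range(height)]
    for win in windows:  # later windows in the list are drawn on top
        for row, line in enumerate(win.pixels):
            for col, src in enumerate(line):
                dx, dy = win.x + col, win.y + row
                if 0 <= dx < width and 0 <= dy < height:  # clip to the display
                    display[dy][dx] = blend(src, display[dy][dx], win.alpha)
    return display


# Usage: an opaque wallpaper with a half-transparent overlay on top of it.
wallpaper = Window([[(100, 100, 100)] * 4 for _ in range(2)], x=0, y=0)
overlay = Window([[(200, 200, 200)] * 2], x=1, y=0, alpha=0.5)
frame = compose(4, 2, [wallpaper, overlay])
```

Where the overlay covers the wallpaper, each channel of `frame` is the average of the two sources; everywhere else the wallpaper shows through unchanged.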

Better Security Measures

To no one’s surprise, security is gaining prominence—a natural outcome of government agency spying and the encryption bugs found in popular smartphone and desktop OSs. The more an embedded system connects to open networks, the more vulnerable it can become, and the more it needs to implement strict control on system resources and user data.

To access system resources, a user-space process must elevate itself to a higher level of system privilege. This, of course, isn’t new. Promoting a process to super-user status, or root privilege, is a common and time-proven mechanism. The process remains at user-level privilege until the instant it needs to open sockets, communicate over inter-process communications (IPC), allocate and free memory, access the file system, mask and respond to interrupts, or spawn threads, at which point it is assigned heightened privilege. The problem is that a process assigned root privilege can, if compromised, monitor or modify system resources that it has no business accessing.

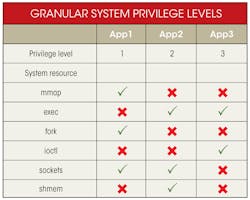

One way to reduce this exposure and its attendant risks is to let the system architect define many more system privilege levels, each having greatly reduced, and specific, capabilities. When a user process needs access to a specific resource, it elevates itself to the privilege level defined for the system call, provided it has been granted the permission to do so at system build time. In other words, the privilege levels need to be coded into a system configuration file and the process startup code. The extra effort required is minimal, given that the system architect typically has a very good idea as to which resources each process needs to access.

When started, the process and its threads are assigned the requisite permission level. When the process needs to elevate to execute the system call, the OS kernel checks the permission against the resource. If it finds a match, the call proceeds. Otherwise, the call fails, just as it would if the process had never elevated to root level.

With this approach, compromised processes no longer have global access to system resources. They are restricted to the subset of system services they have been granted, even if they are elevated to root. For instance, in the table, App1 has been assigned access to mmap() and fork() and can communicate over sockets, but cannot access shared memory or perform exec() functions. It still needs to elevate to a higher privilege level (Level 1). But even when elevated, it cannot access resources that it hasn’t been specifically assigned, even if it becomes a root process. Similarly, App2 and App3 cannot access mmap(), even if compromised or hacked.
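The check described above can be modeled in a few lines. This is a sketch under stated assumptions, not the mechanism of any specific OS: the `GRANTED` table stands in for the system configuration file, `is_allowed` is a hypothetical name for the kernel-side check, and only the App1 entries follow the example in the text (the App2 entry is made up for illustration).

```python
# Services granted to each process at system build time. In a real system
# this would come from a configuration file. App1 follows the example in
# the text; the App2 entry is an assumption made for illustration.
GRANTED = {
    "App1": frozenset({"mmap", "fork", "socket"}),
    "App2": frozenset({"socket"}),
}


def is_allowed(process: str, service: str, elevated: bool) -> bool:
    """Model of the kernel's check before a privileged call proceeds.

    The call is allowed only if the process has elevated AND the service
    is in the set it was granted. Elevation alone is never sufficient.
    """
    if not elevated:
        return False
    return service in GRANTED.get(process, frozenset())


# Usage: App1 may call mmap() once elevated, but exec() stays off limits.
print(is_allowed("App1", "mmap", elevated=True))
print(is_allowed("App1", "exec", elevated=True))
```

The design point is that the grant table, not the elevation itself, decides the outcome, so a compromised process gains nothing beyond what it was assigned.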

Better Power Management

For many years, FedEx delivery teams have been carrying mobile devices to capture recipients’ signatures and to enable real-time package tracking. This is not new. What is new, however, is the sheer number and variety of mobile devices now used in industry, from barcode scanners used in inventory control to smartphones used as cash registers by retail sales associates. It’s unacceptable for stockroom material handlers or sales associates to have to replace their devices, mid-transaction, with another device that has a fresh charge. Power consumption needs to become smarter and more efficient, and it can do so by leveraging some key assumptions.

Interrupts, timers, and system clock ticks all wake up the CPU and must be handled in a timely manner, but we can assume acceptable latencies for interrupts and timers associated with keyboards, login screens, and other UI components. Most people, for example, can easily tolerate a 500-µs delay in a keyboard interrupt as the system wakes from sleep (Fig. 2).

Given these assumptions, the OS kernel can change its behavior for interrupts and timers associated with human interfaces. The system architect can assign acceptable “latencies” to interrupts that don’t require real-time precision and can likewise assign acceptable tolerance values to timers that also don’t need real-time precision. This approach allows the OS kernel to group these events into a single wakeup event during which all timer and interrupt events can be handled, allowing the CPU to sleep longer (Fig. 3).
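The grouping of tolerant events into shared wake-ups can be sketched as follows. This is an illustrative model, not a kernel implementation: the `Event` record, its field names, and the `plan_wakeups` function are hypothetical, and the greedy strategy shown is one simple way to do the grouping. Each event may be handled at any time between its due time and its due time plus tolerance.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Event:
    """Hypothetical timer or interrupt record with an allowed latency."""
    name: str
    due_us: int        # earliest time the event may be handled
    tolerance_us: int  # how late it may be handled (0 = real-time precision)

    @property
    def latest_us(self) -> int:
        return self.due_us + self.tolerance_us


def plan_wakeups(events: List[Event]) -> List[int]:
    """Return wake-up times so every event is handled within its window.

    Greedy approach: sleep until the earliest 'latest' time among pending
    events, then handle everything that is already due at that moment.
    """
    pending = sorted(events, key=lambda e: e.latest_us)
    wakeups: List[int] = []
    while pending:
        wake = pending[0].latest_us
        wakeups.append(wake)
        pending = [e for e in pending if e.due_us > wake]
    return wakeups


# Usage: three tolerant UI events and one event with no tolerance.
events = [
    Event("keyboard", due_us=1000, tolerance_us=500),
    Event("ui_timer", due_us=1200, tolerance_us=400),
    Event("login_timer", due_us=1400, tolerance_us=300),
    Event("motor_control", due_us=3000, tolerance_us=0),
]
print(plan_wakeups(events))  # [1500, 3000]
```

In this example the three UI events share a single wake-up at 1500 µs, while the zero-tolerance event is still handled exactly when it is due.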

To further reduce power consumption, the OS kernel can be extended to recognize longer idle periods in which no deferred interrupts or timers are pending. During these periods, there is no need to wake up merely to handle system clock ticks that have no associated events. With the ability to skip unnecessary clock ticks and to sleep longer, while maintaining overall system time and real-time precision, the OS kernel can selectively sleep for extended periods and reduce battery drain (Fig. 4).
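A small model makes the tick-skipping idea concrete. It is only a sketch: the `TicklessClock` class, its `sleep_until` method, and the 1-ms tick period are assumptions for illustration and do not describe a specific kernel. The clock sleeps straight through to the tick on which the next event falls, then credits the skipped ticks so that system time stays correct.

```python
class TicklessClock:
    """Hypothetical model of a kernel clock that skips idle ticks."""

    def __init__(self, tick_us: int = 1000) -> None:
        self.tick_us = tick_us  # assumed tick period (1 ms by default)
        self.ticks = 0          # system time, counted in ticks
        self.wakeups = 0        # how many times the CPU actually woke

    def sleep_until(self, event_us: int) -> int:
        """Sleep until the tick at or after event_us; return ticks skipped."""
        target = -(-event_us // self.tick_us)  # ceiling division to a tick index
        if target <= self.ticks:
            return 0                           # event is already due, no sleep
        skipped = target - self.ticks - 1      # ticks that did not wake the CPU
        self.ticks = target                    # credit skipped ticks to system time
        self.wakeups += 1
        return skipped


# Usage: three events spread over 10 ms.
clock = TicklessClock()
skipped = [clock.sleep_until(t) for t in (1500, 3000, 10_000)]
print(skipped, clock.ticks, clock.wakeups)  # [1, 0, 6] 10 3
```

A kernel that woke on every tick would wake 10 times over the same 10 ms; this model wakes 3 times and still ends with the tick counter at 10.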

Other Considerations

Other areas also need attention, such as building a framework that can easily and reliably connect smartphones to the embedded device. Such connectivity allows for screen sharing and control as well as media content sharing. Smartphones also can provide downloaded software updates to embedded devices that are typically unconnected. With the in-use lifecycle of embedded devices extending to 10 years or more, it’s crucial to get the system design correct from the outset.

Chris Ault is a product manager at QNX Software Systems, where he focuses on the medical and general embedded markets. Prior to joining QNX, he worked in various roles, including software engineering, engineering management, product management, and technical sales, at AppZero, Ciena, Liquid Computing, Nortel, and Wind River Systems.