To write code for self-driving cars, software engineers first have to guide the cars through vast amounts of information on things like traffic lights, highway lines, and other vehicles.

“But there’s an entire other set of problems where it is extremely hard to figure out what exactly you want to learn,” said Praveen Raghavan, who leads machine learning chip development at Belgium’s Imec, the advanced electronics research center, in a recent interview.

That is one reason why Raghavan and his colleagues built a self-learning chip to compose music, learning the craft’s hidden rules through observation rather than explicit programming. The prototype is built to faintly resemble the human brain, detecting patterns without code and using those patterns to create its own tunes.

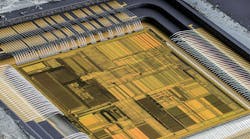

The chip is still a work in progress, but it represents the latest effort in the field of neuromorphic computing. These chips aim to mimic the brain’s ability to store and apply information, consuming so little power that sensors and smartphones can run complex software for image recognition and text translation without outsourcing work to cloud servers.

The researchers, who showed an early demo this month at the Imec Technology Forum, fed the hardware pieces of classical flute music. The device detected underlying patterns in the notes, training itself to the point where it composed a new 30-second song – a simple and unpolished tune, not without a few dissonant notes.

The chip contains arrays of oxide-based random-access memory (OxRAM) cells, which switch between states of electrical resistance to represent bits. Its power consumption is limited because it stores and processes data in the same place, cutting out the power-hungry task of retrieving data from memory.

In training, the neuromorphic chip learns to associate notes that it hears back-to-back in multiple pieces of music. After every encounter with a given pair of notes, the conductance between the memory cells storing the individual notes increases. In that way, the pattern is burned into the chip’s circuitry.

The learning process bears a resemblance to how the human brain forges connections between neurons, creating synaptic highways for familiar ideas. With training, the chip becomes more likely to play two associated notes one after the other when making music. The chip also grasps larger patterns thanks to its hierarchy of memory cells.
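The pairing rule described above can be mimicked in a few lines of software. The following is only an illustrative Python sketch, not Imec's design, whose architecture has not been disclosed: the names `train`, `compose`, and `step`, and the idea of picking the next note in proportion to its stored "conductance", are assumptions made for the example. It also ignores the hierarchy of memory cells that lets the chip capture larger patterns.

```python
import random
from collections import defaultdict


def train(pieces, step=1.0):
    """Strengthen the link between every pair of notes heard back-to-back.

    `pieces` is a list of note sequences. The returned nested mapping plays
    the role of conductance: conductance[a][b] grows by `step` (an assumed
    increment) each time note b directly follows note a.
    """
    conductance = defaultdict(lambda: defaultdict(float))
    for piece in pieces:
        for first, second in zip(piece, piece[1:]):
            conductance[first][second] += step
    return conductance


def compose(conductance, start, length, rng=None):
    """Build a tune by repeatedly following links out of the latest note.

    Stronger links are proportionally more likely to be followed (an
    assumption of this sketch). Stops early if a note has no outgoing links.
    """
    rng = rng or random.Random()
    tune = [start]
    while len(tune) < length:
        links = conductance.get(tune[-1])
        if not links:
            break
        notes = list(links)
        weights = [links[note] for note in notes]
        tune.append(rng.choices(notes, weights=weights, k=1)[0])
    return tune


if __name__ == "__main__":
    # Made-up training data; the note names are placeholders.
    pieces = [["C", "D", "E"], ["C", "D", "G"], ["C", "D", "E"]]
    learned = train(pieces)
    print(compose(learned, "C", 8))
```

In this toy version, the pair C then D is heard three times and so ends up with the strongest link, which is the software analogue of a pattern being "burned in" by repetition.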

Raghavan declined to share many specifics about the architecture or its power consumption, since the chip’s blueprints are pending patent.

Imec suggested that the chip could be used in personalized wearables, detecting heart rate changes in an individual that betray heart abnormalities. It could also let smartphones translate text or grasp voice commands without consulting the cloud, where companies like Nvidia and Google are targeting number-crunching chips for deep learning.

With the rise of custom silicon for data centers, the fate of neuromorphic chips for sensors and smartphones is still uncertain. For the most part, chip designers still color within the lines drawn in 1945 by the mathematician John von Neumann, who outlined an architecture where processors always fetch data from memory.

For decades, chip designers have tried to sidestep the von Neumann architecture. Perhaps the most famous of them is Carver Mead, a professor of electrical engineering at Caltech, whose research into neuromorphic chips in the 1980s helped create a counterculture in computing inspired by the human brain.

Though most neuromorphic chips have fallen short of revolutionizing computers, many companies have not given up. IBM, for instance, cast billions of silicon transistors as biological neurons in its TrueNorth chip, which the company is aiming at deep learning software and nuclear simulations in servers.

Other companies like Qualcomm are not only inspired by the human brain but are literally trying to recreate it in silicon. These spiking neural network chips, Qualcomm says, are more efficient handlers of artificial intelligence software with billions of virtual neurons. Potentially, the chips could be used in everything from drones to servers.

Optimizing hardware for specific algorithms – and vice versa – is vital for making more powerful chips, Raghavan said. The Imec researchers also devised a prototype chip based on magnetic random-access memory and hardwired for software using deep neural networks.

“What we truly need to get the most out of the technology is for algorithms to map very efficiently to the architecture, and the architecture to map extremely well to the circuit design,” Raghavan added. “We are trying to reach across the board.”

That has been a tall order for the entire semiconductor industry, as companies struggle to kick their habit of relying on traditional graphics and multicore processors. “It’s like getting someone who only speaks Chinese to understand a person that only knows Greek,” said Raghavan.