Persistent memory (PM) used to be the norm when magnetic core memory was ubiquitous, but volatile DRAM now dominates main memory. While non-volatile memory (NVM) is used for boot and application code in microcontrollers, it has been relatively rare in main memory for quite some time. How one deals with PM can significantly affect application and system design, providing features from fast restarts to high reliability.

The Storage Networking Industry Association’s (SNIA) NVM programming model (NPM) changes how developers use main memory. The initial release was in 2013, and version 1.2 is coming soon.

NPM is just a model and not an application programming interface (API), although it is designed to be the basis for an API. There are Linux and Windows implementations of NPM that provide developers with a concrete API, as well as the matching runtime support.

NPM supports a range of NVMs, including the JEDEC Solid State Technology Association’s NVDIMM-F, NVDIMM-N, and NVDIMM-P standards. These are DIMMs that would plug into the same sockets as DRAM, but they are NVM rather than volatile DRAM (which loses its contents if power is lost).

NVDIMM-F is the all-flash version, like Diablo Technologies’ Memory 1. It is slower than DRAM but offers higher capacity. NVDIMM-N uses a combination of DRAM and NVM: DRAM contents are copied to NVM if power is lost and copied back to DRAM when power is restored, so NVDIMM-N operates at DRAM speeds. NVDIMM-P is similar to NVDIMM-F except that it addresses non-flash technologies like resistive RAM (ReRAM), MRAM, or Intel’s Optane. SNIA and JEDEC won the Best of Show Award for the NVDIMM-N standard at this year’s Flash Memory Summit.

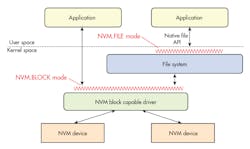

NPM defines two models for developers to use. The first is the more-conventional block mode used with NVMe and block devices like SAS and SATA drives (Fig. 1). This allows the block mode driver to be a back end for the usual file systems provided by the kernel. Applications can use the native file system APIs, or else they could go to the NVM block driver and access blocks directly.
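To make the block-mode idea concrete, here is a minimal Python sketch of whole-block reads and writes. It is not the NPM API: an ordinary temporary file stands in for the block device, and `BLOCK_SIZE`, `write_block`, `read_block`, and `block_demo` are hypothetical names chosen for illustration. It relies on `os.pread` and `os.pwrite`, which are available on Unix-like systems.

```python
import os
import tempfile

BLOCK_SIZE = 4096  # assumed block size; a real device reports its own


def write_block(fd, block_no, data):
    """Write exactly one block at the given block number."""
    if len(data) != BLOCK_SIZE:
        raise ValueError("data must be exactly one block long")
    os.pwrite(fd, data, block_no * BLOCK_SIZE)


def read_block(fd, block_no):
    """Read one whole block at the given block number."""
    return os.pread(fd, BLOCK_SIZE, block_no * BLOCK_SIZE)


def block_demo():
    # An ordinary file stands in for the block device in this sketch.
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "fake_block_device.img")
        fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
        try:
            os.ftruncate(fd, 8 * BLOCK_SIZE)  # eight blocks of capacity
            payload = b"hello".ljust(BLOCK_SIZE, b"\0")
            write_block(fd, 3, payload)
            os.fsync(fd)  # ask the OS to push the data to the backing store
            return read_block(fd, 3)
        finally:
            os.close(fd)
```

The point of the sketch is the access pattern: every transfer is a whole block addressed by block number, which is what lets a block driver sit underneath an ordinary file system.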

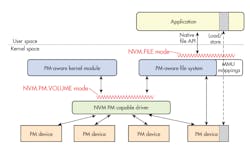

The more interesting model is the memory-mapped approach (Fig. 2). This typically takes the form of memory-mapped files, which have traditionally been backed by disk storage. The model supports that arrangement, but the faster NVDIMMs make many more applications practical.

Memory-mapped files are found in higher-end operating systems like Linux and Windows, where a memory management unit (MMU) is used. Usually the MMU is part of a virtual memory subsystem that allows an application to utilize a large address space in a sparse fashion. In particular, memory-mapped files need enough address space to allow a file to grow depending upon the application. Normally the space needs to be allocated, but rarely is all of it actually used.
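The sparse use of a large mapping can be sketched with Python's standard `mmap` module. This is only an illustration using an ordinary temporary file rather than an NVDIMM, and `REGION_SIZE` and `mmap_demo` are hypothetical names. The file is sized to 16 MiB, but only its first and last few bytes are ever written; the data is then read back through a fresh mapping.

```python
import mmap
import os
import tempfile

REGION_SIZE = 16 * 1024 * 1024  # assumed size: reserve 16 MiB for the mapping


def mmap_demo():
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "pm_region.bin")

        # First "run": create the file, map it, and touch only two small areas.
        with open(path, "w+b") as f:
            f.truncate(REGION_SIZE)  # sets the file size without writing data
            with mmap.mmap(f.fileno(), REGION_SIZE) as region:
                region[0:5] = b"hello"
                region[REGION_SIZE - 5:REGION_SIZE] = b"world"
                region.flush()  # write modified pages back to the file

        # Second "run": map the same file again and read the data back.
        with open(path, "rb") as f:
            with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as region:
                head = bytes(region[0:5])
                tail = bytes(region[REGION_SIZE - 5:REGION_SIZE])
                return head, tail
```

The application reads and writes the region with plain slice operations, with no explicit read or write calls on the file in between, which is the property that makes the memory-mapped model attractive for fast persistent memory.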

Simply having access to the memory-mapped file is only part of the issue. Embedding references to memory within a file allows faster access to data across the virtual memory space. This assumes that the location of memory-mapped files remains consistent between program executions. That is possible with NPM, although there are reasons for using relative rather than absolute addressing within data contained in a memory-mapped file.
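One way to picture relative addressing is a linked list whose links are byte offsets from the start of the mapped region rather than absolute addresses, so the structure stays valid wherever the file happens to be mapped. The following Python sketch is an assumption-laden illustration, not part of NPM: the record layout and the names `HEADER`, `NODE`, `build_list`, `read_list`, and `offsets_demo` are all hypothetical.

```python
import mmap
import os
import struct
import tempfile

REGION_SIZE = 4096             # assumed size of the mapped region
HEADER = struct.Struct("<q")   # offset of the first node (0 means empty list)
NODE = struct.Struct("<qq")    # (value, offset of next node); 0 ends the list


def build_list(region, values):
    """Store values as a linked list whose links are offsets into region."""
    if HEADER.size + len(values) * NODE.size > len(region):
        raise ValueError("region too small for the requested list")
    HEADER.pack_into(region, 0, 0)
    link_pos = 0            # where the link to the next node must be written
    cursor = HEADER.size    # where the next node will be placed
    for value in values:
        NODE.pack_into(region, cursor, value, 0)
        struct.pack_into("<q", region, link_pos, cursor)  # patch previous link
        link_pos = cursor + 8   # the "next" field of the node just written
        cursor += NODE.size


def read_list(region):
    """Follow the offsets from the header and collect the stored values."""
    values = []
    (offset,) = HEADER.unpack_from(region, 0)
    while offset != 0:
        value, offset = NODE.unpack_from(region, offset)
        values.append(value)
    return values


def offsets_demo(values):
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "pm_list.bin")
        with open(path, "w+b") as f:
            f.truncate(REGION_SIZE)
            with mmap.mmap(f.fileno(), REGION_SIZE) as region:
                build_list(region, values)
                region.flush()
        # A new mapping may land at a different address; offsets still work.
        with open(path, "rb") as f:
            with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as region:
                return read_list(region)
```

Had the nodes stored absolute addresses instead, the second mapping would only be usable if the operating system placed it at exactly the same virtual address as the first.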

NPM allows multiple devices to be managed and combined. This means system managers can handle configuration independently of the applications while still providing the persistence and performance of the underlying PM devices. PM devices can even be implemented using distributed memory technologies like NVMe over Fabrics. Different devices will have their own latency, capacity, and performance characteristics, but these would not affect the logical execution of an application.

NPM is being used in server, enterprise, and cloud applications, but it is equally applicable to embedded applications. Adoption of SATA and SAS flash devices—and even NVMe—has been common in embedded designs, but NVDIMM usage is much more limited at this point. That being said, its use has significant benefits in space-constrained embedded applications, including smaller footprints, lower power requirements, and significantly higher performance.