Streamline Your Augmented-Reality System with an All-Programmable SoC

Research has shown that humans interact with the world primarily through vision, because we process visual images many times faster than information presented in other forms, such as written text. Augmented reality (AR), like its virtual-reality (VR) cousin, gives us a heightened perception of our surrounding environment. The major difference is that AR adds to, or augments, the natural world with virtual objects such as text or other visuals, equipping us to interact safely and more efficiently within our natural environment, whereas VR immerses us within a synthetically created environment.

Combinations of AR and VR are often described as presenting us with a mixed reality (MR) (Fig. 1). Many of us may have already used AR in our everyday lives without necessarily being aware, such as when we use our mobile devices for street-level navigation or to play AR games like Pokémon Go.

1. Combining augmented reality and virtual reality creates a mixed reality.

One of the best examples of AR and its applications is the head-up display (HUD). Among simpler AR applications, HUDs are used in aviation and automotive applications to make pertinent vehicle information available without glancing at the instrument cluster. AR applications with more advanced capabilities, including wearable technologies (often called smart AR), are predicted to be worth $2.3 billion by 2020 according to Tractica.

Augmented Reality Enhances Our Lives

AR is finding many applications and use cases that enhance our lives across the industrial, military, manufacturing, medical, and commercial sectors. Within the commercial sphere, social-media applications use AR to add biographical information and even to recognize individual people.

Many AR applications are based around the use of smart glasses worn by an operative. These smart glasses help to increase efficiency within the manufacturing environment by replacing manuals or by illustrating how to assemble piece parts (Fig. 2). In the medical field, smart glasses have the potential to share medical records as well as wound and injury details, thus giving on-scene emergency services the benefit of information that can later be made available to the ER.

2. Here’s an example of smart glasses used in an industrial setting.

A large parcel-delivery company is currently using AR in smart glasses to read the bar code on shipping labels. Having scanned the bar code, the glasses can communicate with the company’s servers using Wi-Fi infrastructure to determine the resultant destination for the package. With the destination known, the glasses can indicate where the parcel should be stacked for its ongoing shipment.

In addition to considering the end application, AR solutions involve a number of requirements, including performance, security, power, and future-proofing. Some of these can be competing, so designers must consider all of these requirements to arrive at the optimal AR system solution.

Empowering AR Systems to Succeed

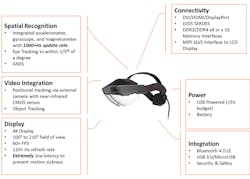

Complex AR systems require the ability to interface to, and process data from, multiple camera sensors in order to understand the surrounding environment. These sensors may also operate across different elements of the electromagnetic (EM) spectrum, such as infrared or near infrared. In addition, sensors may furnish information from outside the EM spectrum, providing inputs for detection of movement and rotation, as with MEMS accelerometers and gyroscopes, along with location data provided by Global Navigation Satellite Systems (GNSS) (Fig. 3). Embedded-vision systems that fuse data from several different sensor types like these are commonly known as heterogeneous sensor-fusion systems.
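To make the idea of fusing MEMS accelerometer and gyroscope data concrete, here is a minimal sketch of a complementary filter, one common and simple fusion technique. The article does not say which fusion algorithm an AR system would use, so the choice of filter, the blend factor `alpha`, the 100-Hz sample rate, and the sample values are all assumptions made for illustration.

```python
import math


def accel_tilt_deg(ax: float, az: float) -> float:
    """Tilt angle implied by the gravity vector, in degrees."""
    return math.degrees(math.atan2(ax, az))


def complementary_filter(angle_deg: float, gyro_rate_dps: float,
                         ax: float, az: float, dt: float,
                         alpha: float = 0.98) -> float:
    """Blend the integrated gyroscope rate with the accelerometer tilt.

    The gyroscope term tracks fast motion but drifts over time; the
    accelerometer term is noisy but drift-free. alpha is an assumed weight.
    """
    gyro_angle = angle_deg + gyro_rate_dps * dt
    return alpha * gyro_angle + (1.0 - alpha) * accel_tilt_deg(ax, az)


# Hypothetical scenario: the device is held still at a 10-degree tilt and
# the gyroscope reports a constant 0.5 deg/s bias even though nothing moves.
true_tilt = math.radians(10.0)
ax, az = math.sin(true_tilt), math.cos(true_tilt)

angle = 0.0
for _ in range(500):  # 5 seconds of samples at an assumed 100 Hz
    angle = complementary_filter(angle, 0.5, ax, az, dt=0.01)
```

In this made-up scenario the fused estimate settles within about a quarter of a degree of the true 10-degree tilt, whereas integrating the biased gyroscope alone would accumulate 2.5 degrees of drift over the same 5 seconds.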

AR systems also require high frame rates along with the ability to perform real-time analysis frame-by-frame to extract and process the information contained within each frame. Equipping systems with the processing capability to achieve these requirements becomes a driving decision factor in component selection.

3. Looking at the anatomy of an augmented-reality system, multiple sensors are used, such as MEMS accelerometers and gyroscopes.

An example of a single chip that can provide the processing cores for AR systems is Xilinx's All Programmable Zynq-7000 SoC or Zynq UltraScale+ MPSoC. These SoCs are themselves heterogeneous processing systems that combine ARM processors with high-performance programmable logic. Zynq UltraScale+ MPSoC, the next generation of the Zynq-7000 SoC, also incorporates an ARM Mali-400 GPU. In addition, certain family members contain a hardened video encoder that supports H.265, the High Efficiency Video Coding (HEVC) standard.

These complex devices enable designers to segment their system architectures, using the processors for real-time analytics and transferring traditional processor tasks to the ecosystem. Designers can then use the programmable logic for sensor interfaces and processing functions. This brings benefits such as:

- Parallel implementation of N image-processing pipelines as required by the application.

- Any-to-any connectivity—the ability to define and interface with any sensor, communication protocol, or display standard—allowing for flexibility and future upgrade paths.

Support for Embedded Vision and Machine Learning

To implement an image-processing pipeline and sensor-fusion algorithms, developers can leverage the reVISION acceleration stack, which supports both embedded-vision and machine-learning applications. Developed predominantly within the software-defined SoC (SDSoC) toolset environment, reVISION permits designers to use standard frameworks, such as OpenVX for cross-platform acceleration of vision processing, the OpenCV computer-vision library, and the Caffe machine-learning framework, to target both the processor system and programmable logic. The reVISION stack can accelerate a large number of OpenCV functions (including the core OpenVX functions) into the programmable logic.

Furthermore, reVISION supports implementation of the machine-learning inference engine within the programmable logic directly from the Caffe prototxt file. The ability to use industry-standard frameworks reduces development time: It minimizes the gap between the high-level system model and design completion while yielding a more responsive, power-efficient (pixel per watt) and flexible solution thanks to the processing system and programmable logic combination (Fig. 4).

4. Using industry-standard frameworks reduces development time.

Designers must also consider the unique aspects of AR systems. They’re not only required to interface with cameras and sensors that observe the surrounding environment and execute algorithms as needed by the application and use case, but they must also be capable of tracking users’ eyes, determining their gaze and, hence, where they’re looking.

This is typically accomplished by using additional cameras that observe the user's face and implementing an eye-tracking algorithm that allows the AR system to follow the user's gaze and determine the content to be delivered to the AR display. Delivering only the content relevant to where the user is looking makes efficient use of bandwidth and processing resources. Performing such detection and tracking can be a computationally intensive task, but it's possible to accelerate it using reVISION.
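One way to picture how a gaze estimate can save bandwidth is to select a region of interest around the gaze point and treat only that window as high-priority content. The sketch below is an assumption about how such a selection might look, not a description of reVISION or of any particular eye tracker; the names `Rect`, `gaze_roi`, and `pixel_fraction`, along with the frame and window sizes, are hypothetical.

```python
from typing import NamedTuple


class Rect(NamedTuple):
    x: int  # left edge, in pixels
    y: int  # top edge, in pixels
    w: int  # width, in pixels
    h: int  # height, in pixels


def gaze_roi(gaze_x: int, gaze_y: int, frame_w: int, frame_h: int,
             roi_w: int, roi_h: int) -> Rect:
    """Return a window centred on the gaze point, clamped to the frame."""
    roi_w = min(roi_w, frame_w)
    roi_h = min(roi_h, frame_h)
    x = min(max(gaze_x - roi_w // 2, 0), frame_w - roi_w)
    y = min(max(gaze_y - roi_h // 2, 0), frame_h - roi_h)
    return Rect(x, y, roi_w, roi_h)


def pixel_fraction(roi: Rect, frame_w: int, frame_h: int) -> float:
    """Share of the frame's pixels that fall inside the window."""
    return (roi.w * roi.h) / (frame_w * frame_h)


# Hypothetical 1280 x 720 display, with the user looking near the
# top-right corner and a 320 x 240 window of interest.
roi = gaze_roi(1200, 50, 1280, 720, 320, 240)
share = pixel_fraction(roi, 1280, 720)
```

Because the window is clamped rather than cropped, it keeps its full size even when the gaze point sits close to an edge of the display, which keeps downstream buffer sizes constant.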

Prioritizing Power for Portables

Most AR systems are also portable, untethered, and, in many instances, wearable, as is the case with smart glasses. This creates the unique challenge of implementing the processing required within a power-constrained environment. In this case, both the Zynq-7000 SoC and Zynq UltraScale+ MPSoC families rank in the top echelon in terms of performance per watt, according to Xilinx. They can further reduce power during operation by exercising different options, ranging from placing processors into standby mode, to be awoken by one of several sources, to powering down the programmable logic half of the device. By exercising these options when they detect that they're no longer being used, AR systems extend battery life.

During operation of the AR system, elements of the processor not currently being used can be clock-gated to reduce power consumption. Designers are able to achieve a very efficient power solution within the programmable logic element by following simple design rules, such as making efficient use of hard macros, carefully planning control signals, and considering intelligent clock gating for device regions not currently required. This provides a more power-efficient and responsive single-chip solution when compared with a CPU- or GPU-based approach.

A single-chip Zynq-7000 SoC or Zynq UltraScale+ MPSoC solution using reVISION to accelerate machine-learning and image-processing elements achieves between 6X (machine learning) and 42X (image processing) more frames per second per watt, with one-fifth the latency, compared with GPU-based solutions.
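For readers unfamiliar with the metric, frames per second per watt is simply throughput divided by power draw, and an "NX" improvement is the ratio of two such figures. The short sketch below works through that arithmetic. Every number in it is hypothetical and chosen only to make the division easy to follow; none is a measurement of any Xilinx device or GPU.

```python
def frames_per_second_per_watt(fps: float, watts: float) -> float:
    """Throughput normalised by power draw."""
    if watts <= 0:
        raise ValueError("power draw must be positive")
    return fps / watts


# Hypothetical figures, for illustration only.
baseline = frames_per_second_per_watt(fps=60.0, watts=12.0)   # 5 fps/W
candidate = frames_per_second_per_watt(fps=90.0, watts=3.0)   # 30 fps/W

# Ratio of the two efficiencies: the "NX" figure quoted in comparisons.
improvement = candidate / baseline
```

With these made-up inputs the ratio works out to 6, which shows that a large efficiency gain can come from lower power draw as much as from higher frame rate.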

Meeting Sensitive Security Demands

AR applications such as sharing patient medical records and manufacturing data call for a high level of security in both the information-assurance (IA) and threat-protection (TP) domains, especially as AR systems will be highly mobile and could be misplaced. IA requires that we can trust the information stored within the system along with information received and transmitted by the system.

For a comprehensive IA domain, designers can leverage the Zynq devices' secure-boot capabilities, which enable encryption and verification using the advanced encryption standard (AES), keyed-hash message authentication code (HMAC), and the RSA public-key cryptography algorithm. Once the device is correctly configured and running, developers can employ ARM TrustZone and hypervisors to implement orthogonal worlds, where one is secure and can't be accessed by the other.
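As a rough illustration of what keyed-hash message authentication provides, the sketch below uses Python's standard `hmac` module to tag a block of data and then detect tampering. It is not the Zynq secure-boot flow: the key, the payload, and the choice of SHA-256 as the underlying hash are all assumptions made for the example.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC tag over the message (SHA-256 is an assumed choice)."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign(key, message), tag)


# Hypothetical key and payload, for illustration only.
key = b"hypothetical-device-key"
image = b"boot image bytes"

tag = sign(key, image)
ok = verify(key, image, tag)                      # untouched payload
tampered_ok = verify(key, image + b"\x00", tag)   # one extra byte appended
```

The untouched payload verifies, while the modified one does not, because anyone without the key cannot produce a matching tag for altered data. Using `hmac.compare_digest` rather than `==` avoids leaking timing information during the comparison.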

When it comes to threat protection, designers could use the built-in Xilinx ADC (XADC) macro within the system to monitor supply voltages, currents, and temperatures, and detect any attempts to tamper with the AR system. Should a threatening event occur, the Zynq device has protective options ranging from logging the attempt to erasing secure data and preventing the AR system from connecting again to the supporting infrastructure.
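The monitoring idea can be sketched as a set of allowed windows for each measured quantity plus an escalating response. The example below is a software illustration only and does not use any XADC driver or register interface; the channel names, limits, and escalation policy are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Window:
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


# Hypothetical operating limits, not taken from any datasheet.
LIMITS = {
    "vccint_v": Window(0.95, 1.05),
    "temp_c": Window(-20.0, 85.0),
}


def out_of_range(sample: dict) -> list:
    """Names of the monitored channels whose reading is outside its window."""
    return sorted(name for name, window in LIMITS.items()
                  if not window.contains(sample[name]))


def respond(violations: list) -> str:
    """Assumed policy: log a single anomaly, erase secrets on several."""
    if not violations:
        return "ok"
    if len(violations) == 1:
        return "log"
    return "erase_secure_data"


normal = respond(out_of_range({"vccint_v": 1.00, "temp_c": 40.0}))
glitch = respond(out_of_range({"vccint_v": 0.80, "temp_c": 40.0}))
attack = respond(out_of_range({"vccint_v": 0.80, "temp_c": 120.0}))
```

A real design would decide how aggressively to escalate based on its threat model; the two-step policy here simply mirrors the range of responses described above, from logging the attempt to erasing secure data.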
