The next quantum leap in storage technologies is driving the need for faster storage fabrics to transport the resulting data surge. Such an acceleration in storage demand and solutions requires storage transports to provide better overall performance as well as the ability to guarantee Service Level Agreements (SLAs). Of course, the storage solution I’m speaking about is Non-Volatile Memory Express (NVMe) and its latest expansion into NVMe over Fabrics (NVMe-oF).

First some housekeeping. References to NVMe-oF and FC-NVMe are prevalent and cause confusion; these terms refer to two distinctly different entities. NVMe-oF is a term defining a set of transport protocols that utilize remote direct memory access (RDMA) to move data to/from NVMe storage systems. It’s also a term referencing the NVMe.org specification that defines the message passing and control parameters between the NVMe protocol and some transport protocols (RDMA). FC-NVMe is a term that describes the transport of NVMe traffic utilizing the Fibre Channel (FC) transport protocol; it also refers to the Fibre Channel standard specification defining the parameters to bind the Fibre Channel Protocol (FCP) to the NVMe storage solution.

The T11 organization created the new FC-NVMe standards specification that was published in August of 2017. This document defines the required NVMe Fibre Channel transport bindings to the NVMe storage protocol. Current FC-NVMe-2 standards development from the T11 will focus on areas such as sequence error correction, queue arbitration, and command cleanup.

The most prevalent storage protocol of today and yesterday is the Small Computer System Interface (SCSI). SCSI started in a similar fashion to NVMe, over PCI buses in local systems. It’s efficient, but compared to NVMe it’s limited in queuing and in stack simplicity. The newer NVMe protocol solves these issues by providing up to 64K queues and a smaller protocol stack that requires less copying of data during transactions.

NVMe can’t be talked about without mentioning solid-state disks (SSDs). One of the larger legacy latency issues associated with storage is the mechanical nature of disk drives and tape drives. SSD technologies have drastically reduced that storage latency. This latency reduction, coupled with the lower latency of the NVMe protocol, creates today’s best latency-optimized storage solution. But how do we share this ultra-fast storage with the world?

Let’s Network It

The old networking transport wars were won by the Ethernet forces, but they weren’t able to stamp out all other transport factions such as Fibre Channel. Fibre Channel survived because of its focus on storage and reliability. It provides the necessary performance thanks to its simpler protocol stack with a Zero-Copy feature, and it offers superior SLA ability thanks to its buffer-to-buffer flow-control feature.

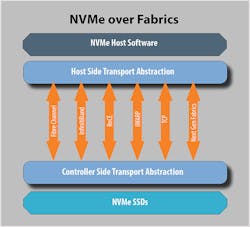

The speed aspects of Fibre Channel continue to play hopscotch with those of Ethernet, each jumping over the other to claim being the fastest protocol available. Fibre Channel is also well-suited as an NVMe fabric transport for its ability to allow both SCSI and NVMe traffic to run on the same fabric simultaneously. Competing forces are taking different approaches to solve this new problem. NVMe-oF also refers to RDMA transport technologies such as RoCEv2, iWARP, and InfiniBand. Each of these transports has its own advantages and disadvantages. There’s also work on NVMe-TCP.

The T11 has worked closely with the NVMe protocols to reduce the amount of excess messaging and parameters required to transport NVMe across Fibre Channel fabrics, while supporting all of the features in the NVMe-oF specification. Existing Gen5 (16G) and Gen6 (32G) storage-area networks (SANs) can run FC-NVMe over existing FC fabrics with minor upgrades and without disruption. This would allow you to map volumes onto both FC LUNs and NVMe namespaces over the same topology (see figure).

Block diagram of NVMe over Fabrics topology.

Fibre Channel specifications define how FC frames (FCID-28h), sequences, exchanges, and information units map to NVMe transactions for scatter-gather lists (SGLs), fast-path IU (RSP) and extended response IU (ERSP), and ultimately read and write operations on server memory locations. The host bus adapter (HBA) will hold an SGL of memory locations that it uses to rapidly transfer this data. The specific SGL descriptor type (05) is a transport SGL data block type that’s defined in the FC-NVMe specification. FCP DATA_IUs are used to transmit data using the SGL in this new technology.
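To make the scatter-gather idea concrete, here is a minimal Python sketch. It is purely illustrative: the names `SglEntry`, `gather`, and `scatter` are hypothetical, a `bytearray` stands in for server memory, and nothing here reflects the actual descriptor layout in the NVMe or FC-NVMe specifications. It only shows how a list of (location, length) pairs lets one contiguous payload map onto non-contiguous memory regions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SglEntry:
    """Hypothetical SGL entry: where a region starts and how long it is."""
    offset: int
    length: int


def _check(memory, entry):
    # Reject entries that fall outside the toy "memory" buffer.
    if entry.offset < 0 or entry.length < 0:
        raise ValueError("negative offset or length")
    if entry.offset + entry.length > len(memory):
        raise ValueError("SGL entry exceeds memory bounds")


def gather(memory, sgl):
    """Build one contiguous payload from scattered regions (a write to storage)."""
    payload = bytearray()
    for entry in sgl:
        _check(memory, entry)
        payload += memory[entry.offset:entry.offset + entry.length]
    return bytes(payload)


def scatter(memory, sgl, payload):
    """Place a contiguous payload into scattered regions (a read from storage)."""
    if len(payload) != sum(entry.length for entry in sgl):
        raise ValueError("payload size does not match SGL total length")
    position = 0
    for entry in sgl:
        _check(memory, entry)
        memory[entry.offset:entry.offset + entry.length] = (
            payload[position:position + entry.length]
        )
        position += entry.length


# Usage: two regions, listed out of address order, form one payload.
host_memory = bytearray(b"0123456789ABCDEF")
print(gather(host_memory, [SglEntry(10, 3), SglEntry(0, 2)]))  # b'ABC01'
```

The point of the sketch is that the payload never has to be copied into one staging buffer first; the list itself tells the adapter where each piece lives.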

Measurables

Today’s SAN solutions are graded based on their measured performance during controlled testing. Latency, throughput, and the number of input/output operations per second (IOPS) give a good idea of the capabilities of products. Latency is defined as the time between the arrival of the first bit of a frame and the time that same bit is transmitted. Throughput is defined as the maximum port speed multiplied by the maximum number of ports. IOPS is the number of input/output operations per second when performing a mix of read and write operations on a storage target.
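The three definitions above reduce to simple arithmetic. The following Python sketch restates them as functions; the function names and the sample numbers (a 48-port switch at 32 Gb/s, a 60-second test run) are made up for illustration and aren’t measurements of any product.

```python
def latency_ns(first_bit_in_ns, first_bit_out_ns):
    """Time from the first bit of a frame arriving to that same bit leaving."""
    return first_bit_out_ns - first_bit_in_ns


def aggregate_throughput_gbps(port_speed_gbps, port_count):
    """Maximum port speed multiplied by the maximum number of ports."""
    return port_speed_gbps * port_count


def iops(completed_operations, elapsed_seconds):
    """Completed read and write operations divided by the test duration."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return completed_operations / elapsed_seconds


# Illustrative values only.
print(latency_ns(1000, 1700))             # 700
print(aggregate_throughput_gbps(32, 48))  # 1536
print(iops(1_200_000, 60))                # 20000.0
```

Note that throughput by this definition is a ceiling derived from the port count and speed, not a measured transfer rate, which is why it’s reported alongside latency and IOPS rather than instead of them.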

NVMe across the PCI bus promises performance improvements over the SATA and SAS storage technologies of 2.5X to 4X for throughput, 40% to 50% lower latency, and up to 4X more IOPS. Adding a fabric between the host/initiator and the storage/target will affect performance. The amount of performance change with the advent of an NVMe over Fabrics solution will depend on the vendor product chosen and the fabric topology implemented.

One area that can be discussed is the basic workings of Fibre Channel and its inherent flow-control feature called “buffer to buffer credit.” This feature provides quality of data service (QoS) essential to meet customer SLA requirements. It will be essential for NVMe-oF fabrics to be able to provide lossless transport for customer storage needs. Other RDMA transport protocols will require the implementation of additional protocols to guarantee lossless QoS.
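A toy model helps show why credit-based flow control is lossless. In the Python sketch below, the class `CreditedLink` and its methods are hypothetical names; the only protocol detail borrowed from Fibre Channel is that the receiver hands a credit back (with an R_RDY) each time it frees a buffer. Real links negotiate credits at login and run in both directions, which this sketch ignores.

```python
from collections import deque


class CreditedLink:
    """Toy model of one direction of a link using buffer-to-buffer credits."""

    def __init__(self, bb_credit):
        self.capacity = bb_credit   # receive buffers advertised by the receiver
        self.credits = bb_credit    # credits currently held by the sender
        self.rx_buffers = deque()   # frames waiting in the receiver's buffers

    def send(self, frame):
        """Transmit only if a credit is available; return True if sent."""
        if self.credits == 0:
            return False            # sender waits; the frame is held, not dropped
        self.credits -= 1
        self.rx_buffers.append(frame)
        return True

    def receiver_drain(self):
        """Receiver frees one buffer and returns one credit to the sender."""
        if not self.rx_buffers:
            return None
        frame = self.rx_buffers.popleft()
        self.credits += 1           # models the R_RDY going back to the sender
        return frame


link = CreditedLink(bb_credit=2)
print(link.send("frame-a"))   # True
print(link.send("frame-b"))   # True
print(link.send("frame-c"))   # False: no credit left, sender must wait
print(link.receiver_drain())  # frame-a
print(link.send("frame-c"))   # True: a credit came back
```

Because the sender can never have more frames outstanding than the receiver has buffers, the receiver is never forced to discard a frame under congestion; the cost is that the sender stalls instead.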

Latency improvements will be more noticeable at the end points of a storage solution with the advent of scatter-gather lists containing locations and lengths for read and write requests. The switching fabric of a Fibre Channel FC-NVMe solution will remain relatively unchanged and add no further latency. This is due to FC switching fabrics being able to intelligently switch FCP as well as NVMe frames.

Today the Fibre Channel industry is working diligently to provide low-cost, lossless, performance-based storage solutions using FC as the transport of NVMe storage data. The basics of the transport protocol are established, and many demos have been given to prove those basics.

The NVMe.org Integrator List (IL) has taken the next steps forward in creating a set of interoperability and conformance tests required to meet the IL criteria. Programs like the NVMe.org IL serve to improve the quality of all participating products available to the storage industry. Group test events provide the opportunity to test and debug interoperability issues in real time. The next group test event will focus on the corner cases dealing with error correction being defined in the Fibre Channel T11 standards body.

Which will prevail, NVMe-oF or FC-NVMe technology? Time and the storage industry will make those decisions. Fibre Channel is well-positioned to benefit from the new NVMe storage technology. It’s a perfect storage technology to show off Fibre Channel’s performance and reliability. Fibre Channel requires less configuration than other transport technologies, and it has an installed base that won’t require truckload upgrades of existing fabrics. As the speed of transport protocols and their associated silicon increases, everyone stands to gain from the power of FC-NVMe and NVMe storage solutions.

Timothy Sheehan is Manager, Datacenter Technologies, at the University of New Hampshire InterOperability Lab (UNH-IOL).