This article is part of TechXchange: AI on the Edge

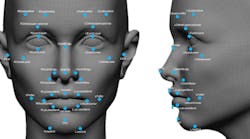

Facial recognition and voice control have landed, and they’re everywhere. Police officers are plucking offenders out of crowds of 60,000 or more; retail stores are pairing their high-definition displays with high-resolution cameras to monitor customers’ facial expressions; and, of course, smartphones are using facial recognition for user authentication.

The applications are myriad, yet facial recognition is essentially a form of advanced pattern recognition, which itself is being enabled by neural-network-based, deep-learning algorithms for artificial intelligence (AI). These are all of a class similar to the powerful algorithms used for autonomous vehicles and medical imaging, as well as simpler defect-detection applications on the factory floor or intruder detection in the home.

Those latter two systems are being trained to do more advanced defect detection and analysis on manufactured goods and consumer behavior patterns. Classical computer vision systems simply can’t perform at such levels.

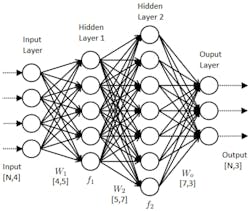

The primary reason deep learning has made such alarming advances is the combination of GPUs with artificial neural networks (ANNs) and their variants, such as convolutional neural networks (CNNs). Neural networks try to emulate the human brain but are essentially simple, interconnected processing elements that have multiple weighted inputs and a single output (Fig. 1). That output is fed to another hidden layer, and the process is repeated.

1. Artificial neural networks (ANNs) comprise multiple simple processing elements, each with weighted inputs and a single output. That single output forms the input to multiple elements at the next (hidden) layer. (Source: ViaSat)

In an image-processing example, an image is fed to the input. Subsequently, the first layer could perform edge detection, the second layer could do feature extraction (such as an ear and nose, or a STOP sign, or a type of defect), and the next layer could do Sobel edge detection, followed by contour detection at the next layer, and on it goes, depending on the application.
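To make the edge-detection step concrete, here is a minimal sketch of a Sobel filter in plain Python. It is an illustration only: the function names and the tiny test image are made up for this example, and the gradient magnitude uses the common |Gx| + |Gy| approximation. A trained CNN learns its own kernels rather than using fixed, hand-designed ones like these.

```python
# Standard 3x3 Sobel kernels for horizontal and vertical gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def convolve3x3(image, kernel):
    """Slide a 3x3 kernel over the interior pixels (no padding)."""
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(1, rows - 1):
        row = []
        for c in range(1, cols - 1):
            acc = 0
            for dr in range(3):
                for dc in range(3):
                    acc += kernel[dr][dc] * image[r + dr - 1][c + dc - 1]
            row.append(acc)
        out.append(row)
    return out


def sobel_magnitude(image):
    """Approximate gradient magnitude as |Gx| + |Gy| per pixel."""
    gx = convolve3x3(image, SOBEL_X)
    gy = convolve3x3(image, SOBEL_Y)
    return [[abs(x) + abs(y) for x, y in zip(row_x, row_y)]
            for row_x, row_y in zip(gx, gy)]


# Hypothetical 4x5 grayscale image: dark on the left, bright on the right.
image = [[0, 0, 0, 10, 10] for _ in range(4)]
print(sobel_magnitude(image))  # [[0, 40, 40], [0, 40, 40]]
```

The flat dark region produces zeros, while the pixels next to the dark-to-bright transition produce a strong response, which is the kind of signal a later layer could build contours and features from.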

The chain of layers through which the data is propagated and transformed is called the credit assignment path (CAP). Deep-learning systems have a relatively large CAP, or number of layers through which the data is transformed. However, “large” isn’t defined, so the boundary between shallow learning and deep learning also remains undefined.

Regardless of the number of layers, the formula for the output is deceptively simple:

Output = f2(f1(Input × W1) × W2) × W0

Given that an ANN can have hundreds of layers and thousands of nodes, the calculations grow rapidly. That’s where GPUs factor into the equation.
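As a rough sketch of that formula, the plain-Python example below pushes an input through two weighted layers and an output weighting. The activation functions f1 and f2 are assumed here to be ReLU, and the weights are arbitrary illustrative numbers; neither comes from a real model. The helper `count_macs` is likewise hypothetical and simply counts multiply-accumulate operations for fully connected layers.

```python
def matvec(vector, weights):
    """Multiply a row vector by a weight matrix.

    weights[i][j] is the weight from input i to node j.
    """
    n_out = len(weights[0])
    return [sum(vector[i] * weights[i][j] for i in range(len(vector)))
            for j in range(n_out)]


def relu(values):
    """Assumed activation function: pass positives, clamp negatives to 0."""
    return [max(0.0, v) for v in values]


def forward(inputs, w1, w2, w0, f1=relu, f2=relu):
    """Output = f2(f1(Input x W1) x W2) x W0."""
    hidden1 = f1(matvec(inputs, w1))
    hidden2 = f2(matvec(hidden1, w2))
    return matvec(hidden2, w0)


def count_macs(layer_sizes):
    """Multiply-accumulates for one pass through fully connected layers."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


# Illustrative weights only.
w1 = [[1.0, -1.0], [0.5, 1.0]]
w2 = [[1.0, 0.0], [-3.0, 1.0]]
w0 = [[2.0], [3.0]]

print(forward([1.0, 2.0], w1, w2, w0))   # [3.0]
print(count_macs([2, 2, 2, 1]))          # 10
print(count_macs([1000] * 101))          # 100000000
```

The toy network needs only 10 multiply-accumulates, but 100 fully connected layers of 1,000 nodes each already need 100 million per input, which is the kind of growth that favors parallel hardware.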

The Role of GPUs in Smart Everything

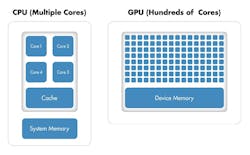

GPUs are the foundation of gaming platforms, because unlike CPUs, they’re specifically designed to process data in parallel using hundreds or thousands of simple, dedicated cores (Fig. 2). This is perfect for graphics processing, where the same processing function must be performed on large sets of data.

2. GPUs can scale to hundreds or thousands of simple processing elements that work in parallel to perform ANN-based deep learning. (Source: MathWorks)

GPUs execute this kind of highly parallelizable processing across many cores at once, whereas CPUs typically perform calculations in series. While adding cores and multithreading has made CPUs better at parallel data processing, the scalability of the GPU’s simple processing element makes it a natural fit for neural-network processing, and more recently for cryptocurrency mining.

Before a deep-learning algorithm can be embedded in a GPU for a given application, it must be trained and then optimized for performance, low power, and the smallest possible memory usage.

However, this training process requires lots of back-end processing, which is why Google came up with TensorFlow, an open-source framework for training that can use massive banks of GPUs to generate the trained model through iteration over tagged data. There are other frameworks, of course. Among the popular ones are Caffe, which is written in C++ (TensorFlow is most often driven from Python, although its core is also C++), as well as Theano, which also uses Python and is a direct competitor to TensorFlow, and Microsoft’s C++-based CNTK.

When looking for a framework, consider not only the language used, but also the simplicity of the interface; number of pre-trained models; how much programming is required after training; open source vs. proprietary framework; and how transferable the models are to other frameworks, as well as new architectures and processors.

Once generated, the final model is then transferred to the GPU, which now becomes the “inference engine.” They’re called inference engines instead of fact engines for a reason: The whole process is understood to infer, or point to, a highly probable outcome. Though the accuracy is improving, getting that accuracy to 100% in any application will remain the Holy Grail for many years to come.
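One common way to see why the result is an inference rather than a fact is to look at how a classifier’s raw output scores are typically turned into probabilities. The sketch below assumes a softmax output stage, which the article doesn’t specify; the scores and labels are invented for illustration.

```python
import math


def softmax(scores):
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def infer(scores, labels):
    """Return the most probable label and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


# Hypothetical output scores from a trained model for one image.
label, confidence = infer([2.0, 1.0, 0.1],
                          ["stop sign", "yield sign", "no sign"])
print(label, round(confidence, 3))  # stop sign 0.659
```

Even the winning label carries a probability below 1, so the application still has to decide how much confidence is enough before acting on it.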

Keep it Simple: Do IoT to AI on One Multimedia Platform

While AI and its application in vision processing are garnering lots of interest, designers understand that it’s but the tip of the spear. For applications ranging from factory displays to home and building automation and entertainment, to automobile infotainment and simple scanners at the edge of the network, image processing is just the start.

On the factory floor, the human-machine interface (HMI) is increasingly relying on high-resolution, interactive touchscreens with fast wireless or wired connectivity to a connected gateway that is also aggregating sensor data. The gateway then either performs analysis locally or sends the inputs to the cloud for deeper analysis or general feedback to a larger system.

Likewise, in the home, security and entertainment systems are combining vision processing and voice control, along with sensors for climate control, presence sensing, and remote surveillance. More and more, 4K HDR video (3840 × 2160 resolution) is a baseline for displays, both for TVs and for interactive touchscreen displays used for climate control and home monitoring. This has implications for devices ranging from set-top boxes to Internet of Things (IoT) sensors and cameras in terms of how much on-board processing and communications capability is required.
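A quick back-of-the-envelope calculation shows why 4K raises the bar. The resolution comes from the text; the frame rate (60 frames/s), bit depth (10 bits per channel), and three uncompressed color channels are assumptions chosen for illustration, and real systems rely on compression and chroma subsampling to bring the numbers down.

```python
def raw_video_rate(width, height, fps, bits_per_channel, channels=3):
    """Uncompressed video bit rate in bits per second."""
    return width * height * fps * bits_per_channel * channels


pixels_4k = 3840 * 2160
pixels_1080p = 1920 * 1080

print(pixels_4k)                  # 8294400 pixels per frame
print(pixels_4k // pixels_1080p)  # 4 times the pixels of 1080p

# Assumed: 60 frames/s, 10 bits per channel, 3 channels, no compression.
rate = raw_video_rate(3840, 2160, fps=60, bits_per_channel=10)
print(rate / 1e9)                 # about 14.93 Gbit/s
```

Under those assumptions, a single uncompressed stream approaches 15 Gbit/s, which is why decode hardware and memory bandwidth end up as first-order design concerns.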

Not to be left out is retail, where digital signage is combining high-definition displays and context- and customer-aware advertising, information, and useful wayfinding capability. Digital signage is also embedding cameras to analyze users’ expressions and behavior, as well as to match them to an online profile in what’s colloquially referred to within the industry as omni-touch, or omnipresence marketing.

Finally, autonomous vehicles have become both data consumers and generators, with infotainment and constant internal and external sensing for user comfort and safety, from climate monitoring to advanced driver-assistance systems (ADAS), along with various levels of autonomy.

This is what’s happening. Now, what’s a designer’s most appropriate response?

Design Requirements

Clearly, each of these applications has wildly varying processing, memory, I/O, communications, environmental tolerance, security, software, and real-time performance requirements. Digital-signage players and home video may need two or 4K HD streams, while a home automation controller and interface may only need a 1024 × 768 graphics touchscreen. An IoT device may require face recognition or just simple cameras, and either a Bluetooth connection or full Wi-Fi to connect to the cloud for instant response.

However, as speech recognition, voice control, gesture control, and high-end audio become default requirements in many applications, designers have to be able to quickly scale up and down the functionality, power, communications, and performance curve, while simultaneously reining in costs and development time.

While one size clearly doesn’t fit all, the smart route is to pick a well-supported platform that can come close. The goal is to find one that has multiple levels of processing capability; comes with GPUs for video and graphics; has high-end audio, fast memory, wireless and wired communications, and appropriate OS support; and offers a wide and active support ecosystem.

The goal is to avoid reinventing the wheel, and focus on your true value add, whether it be in additional hardware or software.

Another key design requirement is longevity. Once deployed, a system may be in the field for many years, so high tolerance of harsh environments is necessary, as is the ability to perform updates over time. This latter point implies that enough margin has been built into the design to allow for more advanced processing requirements as functions get added, while still ensuring low power consumption.

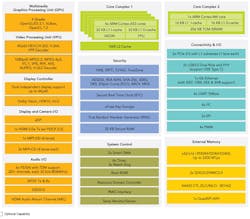

3. The i.MX 8M is an interesting solution to the problem of scaling and longevity for voice, audio, video, and AI applications from the home IoT device to high-end 4K HDR video. (Source: NXP Semiconductors)

A good example of a platform solution that can kickstart this approach is the i.MX 8M from NXP Semiconductors (Fig. 3). This is actually a family of processors that has up to four 1.5-GHz Arm Cortex-A53 cores plus a Cortex-M4 core. It’s part of a wide-ranging lineup of 32- and 64-bit solutions based on Arm technology.

In addition to its high performance and low power consumption, the i.MX 8M family has flexible memory options and high-speed connectivity interfaces. The processors also feature full 4K UltraHD resolution and HDR (Dolby Vision, HDR10 and HLG) video quality, as well as the highest levels of pro audio fidelity with up to 20 audio channels and DSD512 audio.

Importantly, the solution supports Android, Linux OS, and FreeRTOS and their ecosystems, and is also scalable down to two Arm Cortex-A53 cores. The VPU and other functionality can be removed to lower cost and power.

To help get a design off the ground quickly, the i.MX 8M is also supported by an evaluation kit (EVK), as well as a range of crossover processors. These high-performance MCUs solve specific problems without scaling to a full Linux machine. Included is the i.MX RT 1050 high-performance processor with real-time functionality.

Meeting the needs of a wide gamut of applications in terms of performance and functionality, while keeping costs and development time to a minimum, is a problem designers will face at some point. The trick is to learn one platform really well, but ensure it can scale as needs change, and that the company supporting it will be around 10 years from now.
