Typically, when you run a software program on your computer, mobile device or via a server in the cloud, you’re using volatile memory, or memory that doesn’t retain its contents when the power is off. When the code is finished with its tasks, the resulting data is usually handed off to non-volatile media, like a hard drive or solid-state drive (SSD). The data can live there until it needs to be pulled again for a given software process.

Imagine, though, that you could cut down on the lag, or latency, that happens with this handoff. You’d have the perfect blend of volatile memory speed and non-volatile persistence. In fact, this nascent class of storage media is called just that: Persistent Memory.

A number of competing technologies exist on the market. Companies are all trying to get the lowest latency possible out of the underlying media. The ultimate goal with persistent memory is to achieve the same or better latency than dynamic random-access memory (DRAM); then it can be a DRAM replacement.

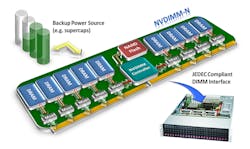

Persistent memory devices already exist, but they use a workaround: the persistent media is placed behind DRAM, which has the lowest latency. What the host sees is fast DRAM latency. The device makes that DRAM persistent by using Persistent Memory media as a backing store of sorts for the DRAM. So, even if the power fails while the host is busy rewriting or refreshing the DRAM, the device has enough power to persist what's in DRAM.

The devices shipping now that support this sort of persistent media are called non-volatile dual in-line memory modules, or NVDIMMs. While the main promise of persistent media is speed, it also can protect against power interruptions, allowing end users and processes to work with data even if the power is interrupted to a given device.

An NVDIMM-N is one type of persistent-media device.

There are currently two types of NVDIMM devices: NVDIMM-N and NVDIMM-P. NVDIMM-N operates at runtime with performance and capacity like those of a DRAM DIMM, but is made non-volatile through the use of NAND flash. It typically features a capacity of 4 to 32 GB and low latency in the tens of nanoseconds. NVDIMM-N is fully defined in established JEDEC standard specifications.

NVDIMM-P is an upcoming specification from JEDEC that will define a memory module interface that’s compatible with DRAM DIMM interfaces. It will also enable use of the capacity of fast non-volatile memory at speeds similar to those of today's DRAM DIMMs. It allows capacity per module to expand from gigabytes to terabytes, with low latency in the tens to hundreds of nanoseconds.

Paradigm Shift

Persistent Memory media is surely a sea change in our computing paradigm. A similar transition happened when SSDs came along. NAND (Not-And) flash devices were placed into enclosures with the same form factor as the previous spinning-disk technology and connected to computing devices in the same way. Computing devices used the same interface, Serial Attached SCSI (SAS) or Serial AT Attachment (SATA), to talk to them. Suddenly, all of the applications that ran on a hard drive worked with solid state, only a lot faster.

The problem was, however, that without changes to the software, these new media devices were too fast for the underlying computer architecture. The SAS and SATA interfaces, as well as the driver stack above them, had a latency limitation that capped the performance available in that form factor. A brand-new interface just for SSDs called NVMe, or NVM Express, had to be created. Now, devices can take full advantage of SSD performance.

NVMe SSDs work on a PCIe bus, so computing devices aren't restrained by interfaces designed for lower-performance hard-disk drives. In turn, new issues need to be resolved, like dissipating the heat from a bunch of NAND devices. Now a number of new form factors hold the promise of taking full advantage of solid-state storage.

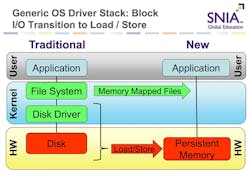

The same will likely happen with new persistent media, and this time as well, software changes are needed to get full performance from the new paradigm.

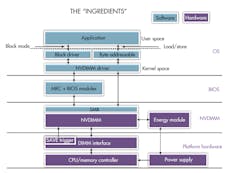

The “ingredients” of persistent media include platform hardware, OS and software.

Solving the Software

Software, from the operating system on up, needs to be rewritten to deal with the new, lower latency and with the fact that data no longer has to be written to slower non-volatile destinations. Many applications today assume that memory isn’t persistent. Therefore, to share data with another machine, another instance of the application, or a cluster, an application is already coded to write that data out to a block device. The app will write data to memory and use it in memory, but at some point, the data needs to be checkpointed and written out to some persistent media, typically an SSD or a hard drive.
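The checkpoint pattern described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `checkpoint` and `restore`, the JSON encoding, and the write-then-rename approach are choices made for this example, not something prescribed by SNIA or by any particular application.

```python
import json
import os
import tempfile


def checkpoint(state: dict, path: str) -> None:
    """Stage in-memory state out to a file on a block device (hypothetical helper)."""
    directory = os.path.dirname(os.path.abspath(path))
    # Write to a temporary file first so a crash never leaves a half-written checkpoint.
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    try:
        with os.fdopen(fd, "w") as tmp:
            json.dump(state, tmp)
            tmp.flush()             # push Python's buffer to the OS
            os.fsync(tmp.fileno())  # ask the OS to push the data to the drive
        os.replace(tmp_path, path)  # atomically swap in the new checkpoint
    except BaseException:
        os.unlink(tmp_path)
        raise


def restore(path: str) -> dict:
    """Reload the last checkpoint after a restart (hypothetical helper)."""
    with open(path) as f:
        return json.load(f)
```

Every checkpoint here serializes the state, copies it through the file system, and waits on the drive. That round trip is the staging step that persistent memory aims to remove.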

For software to take advantage of persistent memory, it will have to change its operations and recognize that when it’s reading and writing in memory, that's it. The data is already persistent, so code won’t have to stage it out to another device.

The operating system itself needs to understand that memory is persistent, too, so it can pass that persistent-memory model to the applications it runs. New features must also be added to programming languages themselves to accommodate and optimize for this new model.

SNIA Model

An NVM programming model developed by SNIA explains the technical issues involved. For example, there’s a command called flush. Flush is needed because some of your data might be in memory and some might be in a processor cache. If your data is still in the processor cache, it's not persistent, but if you flush it out to memory, then it can be persistent. Therefore, where an application once had to execute a checkpoint and send the data out to the SSD, now its checkpoint is just a flush.

That gets all of the data out of the cache and into the DRAM. If the power fails right then, the NVDIMM saves what's in DRAM to NAND flash memory, so the application's data still exists. When the application comes up again, what it thought was in memory is still there.
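As a rough illustration of the map-then-flush idea, the sketch below uses Python's standard `mmap` module on an ordinary file. This is an assumption-laden stand-in: a regular file on a drive is not persistent memory, `mmap.flush()` is only an analogy for the flush in the SNIA model, and the `bump_counter` name and 8-byte counter layout are made up for this example.

```python
import mmap
import os
import tempfile

REGION_SIZE = 4096  # size of the mapped region (arbitrary for this sketch)


def bump_counter(path: str) -> int:
    """Increment a counter that lives in a mapped region, then flush it."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        # First use: size the backing file; new bytes read back as zeros.
        if os.fstat(fd).st_size < REGION_SIZE:
            os.ftruncate(fd, REGION_SIZE)
        with mmap.mmap(fd, REGION_SIZE) as region:
            # Plain loads and stores on the mapping: no serialize-and-write step.
            value = int.from_bytes(region[:8], "little") + 1
            region[:8] = value.to_bytes(8, "little")
            # The flush is the whole checkpoint in this model.
            region.flush()
            return value
    finally:
        os.close(fd)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        counter_path = os.path.join(workdir, "counter.bin")
        print(bump_counter(counter_path))  # first run of the "application"
        print(bump_counter(counter_path))  # after a "restart", the old value is still there
```

The point of the sketch is the shape of the code: the application updates data where it lives and issues a single flush, rather than copying state out to a separate storage device.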

The bottom line is that your applications will run several orders of magnitude faster than if you’re writing out to SSDs. It will initially cost more to implement this new memory architecture. That might make some business people worry. Businesses will have to pay more, but they will get more performance, and maybe the improved performance will actually make it possible to charge more, which is usually a good business choice.

Persistent memory will change the way applications transition to a new stack.

Ultimately, it’s about making a tradeoff. Some things won’t change in an app, but others will need to be modified if they’re to take advantage of this performance. Thus, you could take some interim steps that will result in better performance without having to change anything.

Swapping out all of your SSDs for persistent-media devices will be very expensive, especially now, when the technology is new. Changing all of your software to take advantage of persistent media will also involve an outlay of capital, so you might find a middle path and just upgrade the devices and software that offer the best return on your investment.

What is SNIA?

The Storage Networking Industry Association (SNIA) is an organization made up of storage networking professionals. It provides a wide variety of whitepapers and educational documents, including the NVDIMM Cookbook: A Soup-to-Nuts Primer on Using NVDIMMs to Improve Your Storage Performance.

The association has a technical work group that’s developing specifications, white papers, and educational material around the NVM programming model. The Solid State Storage Initiative, which is a group meant to go out and promote adoption of solid-state and persistent memory, is aiding in that effort. The Initiative has a special interest group (SIG) that’s focused on NVDIMMs and persistent memory.

The same group has also done a number of BrightTALK webcasts and presented at professional conferences. It presents a substantial track on solid-state and persistent memory at the Storage Developer Conference every year in September.

The mission of the Persistent Memory and NVDIMM Special Interest Group (PM/NVDIMM SIG) is to educate on the types, benefits, value, and integration of persistent memory, as well as communicate the usage of the NVM programming model created to simplify integration of current and future persistent-memory technologies. It also hopes to influence and collaborate with middleware and application vendors while developing useful case studies, best practices, and vertical industry requirements around persistent memory. Furthermore, the PM/NVDIMM SIG hopes to coordinate with other industry standard groups to promote, synchronize, and communicate a common persistent-memory taxonomy.

All of that activity and energy is going into creating a future where persistent memory is the norm and persistent media is commonplace. SNIA has formed an alliance with a group called OpenFabrics Alliance (OFA), which focuses on low-latency fabric interconnects between CPUs and servers. Persistent memory gives you the ability to take an NVDIMM from a failed server and plug it into a new server so that everything is back online and available. That helps with data durability, but not with availability: while the NVDIMM is being swapped into a new server, the data is unavailable, which may mean the application is unavailable and customers aren't paying during that time.

Persistent memory over fabrics allows you to mirror the data that's written to local persistent memory over to remote persistent memory. That means if your server dies and can't be repaired, the application can use the data on a different server. Thus, your application is still available, the data is still available, and your customers are still paying.

That makes it so there’s no impact on the customer side. A slight hiccup could occur when an app finally determines that the server really is down and has to switch over to the secondary, but that happens pretty quickly, a lot faster than unplugging the NVDIMM and putting it in a different server.
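The mirroring and failover behavior described above can be modeled with a toy sketch. Everything in it is hypothetical: `Replica` and `MirroredRegion` are illustrative names, the "remote" copy is just another in-process byte array, and no real fabric, RDMA, or SNIA/OFA API is involved.

```python
class Replica:
    """Stand-in for one server's persistent-memory region (hypothetical)."""

    def __init__(self, size: int) -> None:
        self.data = bytearray(size)
        self.alive = True

    def _check(self, offset: int, length: int) -> None:
        if not self.alive:
            raise ConnectionError("replica is down")
        if offset < 0 or offset + length > len(self.data):
            raise ValueError("access outside the region")

    def store(self, offset: int, payload: bytes) -> None:
        self._check(offset, len(payload))
        self.data[offset:offset + len(payload)] = payload

    def load(self, offset: int, length: int) -> bytes:
        self._check(offset, length)
        return bytes(self.data[offset:offset + length])


class MirroredRegion:
    """Writes go to both copies; reads fail over if the primary is gone."""

    def __init__(self, primary: Replica, secondary: Replica) -> None:
        self.primary = primary
        self.secondary = secondary

    def write(self, offset: int, payload: bytes) -> None:
        # The write counts as done only once both copies hold the data.
        self.primary.store(offset, payload)
        self.secondary.store(offset, payload)

    def read(self, offset: int, length: int) -> bytes:
        try:
            return self.primary.load(offset, length)
        except ConnectionError:
            # The "slight hiccup": promote the mirror and retry the read.
            self.primary, self.secondary = self.secondary, self.primary
            return self.primary.load(offset, length)
```

A short usage example: create two replicas, write `b"order-42"` through a `MirroredRegion`, set the first replica's `alive` flag to `False`, and read again. The read is served from the mirror without anyone moving hardware between servers.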

Gazing into the Crystal Ball

In the distant future, it’s not hard to imagine how much faster your laptop could get to a specific task without the need to boot up. A laptop would pick up wherever it left off, with whatever windows you had open and your cursor wherever it was on the screen, all exactly the same as when you powered it off.

The cloud will benefit from the use of persistent memory, too. The new technology is going to make it so that any given machine can support many more clients. Businesses can defray the cost of that one server by being able to service a greater influx of customers. All a cloud provider gives you is a slice of a machine, and that slice is based on how fast things can get done and how fast the provider can take your data and put it on that server. If that data is already sitting in persistent memory, it's ready to run right away. The server can start generating income immediately since no time is spent loading your applications and data.

That will help businesses decrease overhead as well. Typically, with a normal boot, devices have to read in a little piece and then a bigger piece, start running the operating system, subsequently start running the applications, and, finally, present the prompt (or the web page). During that time, customers aren’t being charged; there’s no work happening. Persistent media can eliminate that downtime on every server. As a result, more applications can be run on every server, which will ultimately help defray the cost of switching over to a persistent-memory paradigm.

Ultimately, while persistent memory and its associated media are just coming into play, the technology itself will have far-reaching implications for our increasingly computerized and cloud-based world. Faster, durable, and mobile memory devices mean more effective computing abilities across applications in both business and private domains.

Companies, after some costly upgrades to both hardware and software, can begin to reap the benefits with more efficient systems, far less noticeable downtime or failure, and increased ability to serve more customers across a variety of domains. As we see the tech move into personal-use devices like laptops and possibly tablets and handheld devices, persistent memory is at the forefront of a new, more powerful way to manage our computing needs.

To learn more about the work that SNIA is doing around these topics, please visit our website at www.snia.org/pm.