
NVDIMM (non-volatile dual in-line memory module) is a new class of memory designed to let non-volatile memory connect to the DIMM bus traditionally populated by DRAM. Because NVDIMM is so new, it’s early in its lifecycle, with two variants in production and a third on the way. Proponents of NVDIMM are also still in the early days of understanding the diverse ways they can integrate the technology into their systems. As user ideas proliferate, they will spawn new NVDIMM architectures, further broadening NVDIMM’s application scope.

The newest NVDIMM in production combines flash memory for storage with a DRAM cache for faster access. While DRAM has extremely broad adoption in computer systems, it brings management and power challenges, such as refresh and the need to save its contents when power is lost. An emerging memory technology, magnetoresistive RAM (MRAM), is simpler to manage than DRAM, is designed to use less energy, and is itself non-volatile. Replacing the DRAM in an NVDIMM with MRAM will result in higher performance, lower power, easier management, and new ways of using NVDIMM. It could also inspire new architectures for future versions.

Overviews: NVDIMM and MRAM

There are two existing versions of NVDIMM (with one more in the standardization process):

- NVDIMM-F is the original, and it consists only of flash memory wrapped in a DDR4 interface.

- NVDIMM-N adds the DRAM cache, keeping the DDR4 interface. Reads and writes go through the DRAM, with cache changes being written back to flash per system policy. Because DRAM is volatile, its contents must be stored in the flash when power is shut off or fails. Some form of backup battery is needed to provide the energy for that transfer.

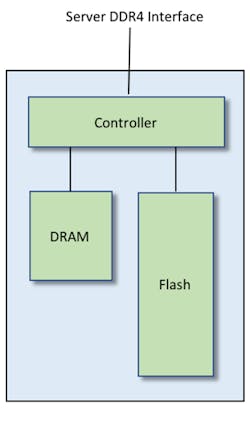

NVDIMM-P is a newer standard being formalized through the JEDEC organization. Intended to accommodate new types of memory, its first configuration will be monolithic, with subsequent variant versions to follow (Fig. 1).

1. A typical NVDIMM-N configuration combines flash and DRAM managed by a controller that moves data between the two during power transitions.

MRAM, meanwhile, is a newer non-volatile memory technology that uses very small magnetic elements as storage cells. Unlike flash, MRAM is byte-addressable, and it requires no special management such as the wear leveling that flash needs or the refresh that DRAM needs. And as companies like Spin Transfer Technologies innovate to bring this technology into the broad commercial sphere, MRAM will be able to compete with DRAM on cost.

Handling Power Failures

One of the major challenges of NVDIMM-N is the need to save the contents of the DRAM when power fails or is shut off. Even in a normal, planned shutdown, the cache must be saved before power is removed. Then, depending on how that save operation is done, the DRAM may need to be reloaded at power-up to restore the system to the state it was in when power was shut off.

Powering down a system is part of normal operation and can be planned for. But power failures come unpredictably, and multiple power on-off cycles may occur before full power is ultimately restored. This is typically handled by detecting the failure and then saving the cache to flash. During that save operation—which happens after system power is gone—a backup battery supplies the necessary energy. This adds some system-level requirements:

- Software is needed to manage the power transitions (including normal shutdown).

- Space in the flash may need to be allocated for cache storage.

- The battery itself takes precious space, has a limited lifetime, and introduces materials into the system that are regulated by the Restriction of Hazardous Substances (RoHS) directive.

- If a primary (non-rechargeable) battery is used, it must be replaced after some predictable time. If the battery is rechargeable, recharging circuitry is required, and both the battery and that circuitry need periodic testing.

- When capacitors or other energy-storage components are used instead, operation can become less predictable as those components age.

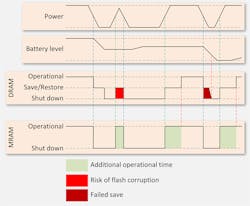

Repeated power cycles create yet other challenges. If power comes back up, causing the cache contents to be read back out of flash, and then fails again during that load process, the flash contents can be corrupted. Worse yet, each power cycle further drains the backup battery, and a rechargeable battery gets no time to fully recharge between cycles. After enough cycles, the battery is exhausted.

Because of this, some NVDIMMs include a power-cycling session in their testing or validation. Power is turned off and on every few milliseconds for a couple of hours to ensure that the system can handle sustained power-cycling events.
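To make the battery-depletion problem concrete, here is a minimal Python sketch, not a model of any real NVDIMM. It assumes a rechargeable backup battery that recharges at a fixed rate while main power is on, and a fixed energy cost for each battery-powered cache save. The class `BackupBattery`, the function `cycles_survived`, and every number used are hypothetical. A volatile DRAM cache must draw on the battery at every failure, while a non-volatile MRAM cache never does.

```python
from dataclasses import dataclass, field


@dataclass
class BackupBattery:
    """Hypothetical rechargeable backup source; all values are illustrative."""
    capacity_j: float   # usable energy when fully charged, in joules
    recharge_w: float   # recharge power available while main power is on, in watts
    charge_j: float = field(init=False)

    def __post_init__(self) -> None:
        self.charge_j = self.capacity_j  # start fully charged

    def recharge(self, seconds: float) -> None:
        self.charge_j = min(self.capacity_j, self.charge_j + self.recharge_w * seconds)

    def draw(self, joules: float) -> bool:
        """Spend energy on one cache save; return False if the charge is insufficient."""
        if joules > self.charge_j:
            return False
        self.charge_j -= joules
        return True


def cycles_survived(volatile_cache: bool, battery: BackupBattery,
                    save_energy_j: float, on_time_s: float, cycles: int) -> int:
    """Return how many power failures are handled before a cache save cannot finish.

    volatile_cache=True models a DRAM cache (NVDIMM-N): every failure needs a
    battery-powered save to flash. volatile_cache=False models an MRAM cache,
    which keeps its contents and never draws on the battery.
    """
    for n in range(cycles):
        battery.recharge(on_time_s)  # main power briefly returns
        if volatile_cache and not battery.draw(save_energy_j):
            return n  # this save could not complete: cache contents are lost
    return cycles


if __name__ == "__main__":
    # Power returns for only 5 ms between failures: far too short to recharge.
    args = dict(save_energy_j=10.0, on_time_s=0.005, cycles=10_000)
    print(cycles_survived(True, BackupBattery(50.0, 0.5), **args))   # prints 5
    print(cycles_survived(False, BackupBattery(50.0, 0.5), **args))  # prints 10000
```

With these made-up parameters, the DRAM-backed module cannot complete its sixth save, while the MRAM-backed module never needs the battery at all. Only the trend is meaningful; real save energies and recharge rates depend on the module.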

MRAM as Cache

Replacing the DRAM cache with an MRAM cache eliminates the challenges associated with power failures. MRAM’s non-volatile character means that, if power goes off, MRAM maintains its contents. There’s no need to save the cache contents to flash when power goes away, nor does the cache need to be reloaded when power returns, making power-up faster and safer.

This means that systems using MRAM in NVDIMMs will not require a backup battery to support the cache. And because the cache contents don’t have to be reloaded on power-up, there’s no risk of flash corruption from a subsequent failure (Fig. 2). Finally, the software stack no longer needs code for managing the cache-saving process, and the byte-addressability of MRAM further simplifies software.

2. A repeated power-fail scenario that can deplete an NVDIMM-N battery subsystem causes no failure with MRAM.

Note that if system design specifications call for writing the cache to flash on power-down for any reason, it’s still possible to do so even with MRAM. However, it’s no longer required as a fundamental characteristic of NVDIMM-N use.

NVDIMMs for Software-Defined Storage

Software-defined storage adds a layer of abstraction above traditional memory-mapping schemes. Instead of being defined by its physical address boundaries, a memory region is given a name; such a named region is referred to as a namespace.

In a software program, the memory region is referred to by the name instead of by memory bounds. An intervening controller manages where the namespace is allocated. In fact, for security purposes, a namespace could be relocated on each boot-up. This abstraction decouples the memory region as referred to in the program from the physical storage, allowing the storage to be changed or even interleaved without any source program changes.
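The indirection described above can be sketched as a small lookup layer. In this hypothetical Python `NamespaceController` (not a real NVDIMM or operating-system API), programs read and write by name and offset, and only the controller decides where each namespace sits in a flat `bytearray` standing in for physical memory. Calling `relocate()`, for example on each boot, moves every namespace to a new randomized address without changing what the program sees.

```python
import random


class NamespaceController:
    """Hypothetical controller mapping namespace names to physical extents.

    Programs address data as (namespace name, offset); only the controller
    knows which physical addresses back each namespace.
    """

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self._media = bytearray(capacity)   # stands in for the physical memory
        self._sizes: dict[str, int] = {}    # namespace name -> size in bytes
        self._base: dict[str, int] = {}     # namespace name -> physical start address
        self._rng = random.Random(seed)

    def create(self, name: str, size: int) -> None:
        if name in self._sizes:
            raise ValueError(f"namespace {name!r} already exists")
        if sum(self._sizes.values()) + size > len(self._media):
            raise MemoryError("not enough capacity for namespace")
        self._sizes[name] = size
        self.relocate()  # place the new namespace (and re-place the others)

    def relocate(self) -> None:
        """Move every namespace to a new random location, preserving contents."""
        snapshot = {n: self._contents(n) for n in self._sizes}
        order = list(self._sizes)
        self._rng.shuffle(order)
        slack = len(self._media) - sum(self._sizes.values())
        addr = self._rng.randint(0, slack)           # random starting offset
        self._media = bytearray(len(self._media))    # erase the old placement
        for n in order:
            self._base[n] = addr
            self._media[addr:addr + self._sizes[n]] = snapshot[n]
            addr += self._sizes[n]

    def _contents(self, name: str) -> bytes:
        base = self._base.get(name)
        if base is None:                             # newly created: zero-filled
            return bytes(self._sizes[name])
        return bytes(self._media[base:base + self._sizes[name]])

    def _check(self, name: str, offset: int, length: int) -> int:
        if offset < 0 or offset + length > self._sizes[name]:
            raise IndexError(f"access outside namespace {name!r}")
        return self._base[name] + offset

    def write(self, name: str, offset: int, data: bytes) -> None:
        start = self._check(name, offset, len(data))
        self._media[start:start + len(data)] = data

    def read(self, name: str, offset: int, length: int) -> bytes:
        start = self._check(name, offset, length)
        return bytes(self._media[start:start + length])

    def physical_base(self, name: str) -> int:
        """Exposed only to show relocation; programs would never need it."""
        return self._base[name]


if __name__ == "__main__":
    ctl = NamespaceController(capacity=4096, seed=1)
    ctl.create("keys", 256)
    ctl.create("data", 2048)
    ctl.write("data", 0, b"hello")
    before = ctl.physical_base("data")
    ctl.relocate()                      # e.g. on the next boot
    print(ctl.read("data", 0, 5))       # prints b'hello'
    print(before, ctl.physical_base("data"))
```

The program’s view, `("data", 0)`, never changes; only the physical base does. A real controller would also have to handle interleaving and persistence of the mapping itself, which this sketch ignores.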

These memory regions can be encrypted, which adds key management to the system requirements. The keys are typically stored in their own encrypted space. System robustness is enhanced if those keys are stored in a memory separate from the memory where the data itself is stored.

The keys are themselves encrypted, so if the key storage is compromised, the attacker still won’t gain access to the data. The root key used to encrypt the keys comes from the system, not from the NVDIMM. Therefore, even if someone gains access to the key storage (for example, by removing the NVDIMM from the system), the data remains secure.

In such a system, the key storage can be defined as one namespace; the data as a separate namespace. Data writes involve encryption; data reads involve two levels of decryption (key and data). These details are abstracted from the system through a controller. When the system needs to read data, for example, it simply requests a read. The controller then recovers and decrypts the required data key from the key storage, reads and decrypts the data, and then places it on the bus. The system is unaware of the details of how the data was obtained.
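The two-level read path can be illustrated with a short Python sketch of key wrapping (often called envelope encryption). Everything here is an assumption for illustration: `EncryptingController` is hypothetical, each region gets its own random data key, and `toy_encrypt`/`toy_decrypt` are a toy SHA-256 keystream cipher with no authentication, used only so the example needs nothing beyond the standard library. A real controller would use a vetted cipher such as AES. The key namespace holds data keys encrypted under the system’s root key; the data namespace holds regions encrypted under those data keys.

```python
import hashlib
import secrets


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256(key || nonce || counter) blocks. NOT a vetted cipher."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def toy_encrypt(key: bytes, plaintext: bytes) -> bytes:
    nonce = secrets.token_bytes(16)                  # fresh nonce per encryption
    ks = _keystream(key, nonce, len(plaintext))
    return nonce + bytes(p ^ k for p, k in zip(plaintext, ks))


def toy_decrypt(key: bytes, blob: bytes) -> bytes:
    nonce, body = blob[:16], blob[16:]
    ks = _keystream(key, nonce, len(body))
    return bytes(c ^ k for c, k in zip(body, ks))


class EncryptingController:
    """Hypothetical controller: wrapped data keys in one namespace (e.g. MRAM),
    encrypted regions in another (e.g. flash). The host only calls read/write."""

    def __init__(self, root_key: bytes) -> None:
        self._root_key = root_key                # supplied by the system, not stored on the module
        self.key_store: dict[str, bytes] = {}    # key namespace: data keys wrapped by the root key
        self.data_store: dict[str, bytes] = {}   # data namespace: regions encrypted by data keys

    def write(self, region: str, plaintext: bytes) -> None:
        if region not in self.key_store:
            new_key = secrets.token_bytes(32)
            self.key_store[region] = toy_encrypt(self._root_key, new_key)
        data_key = toy_decrypt(self._root_key, self.key_store[region])
        self.data_store[region] = toy_encrypt(data_key, plaintext)

    def read(self, region: str) -> bytes:
        data_key = toy_decrypt(self._root_key, self.key_store[region])  # level 1: unwrap the key
        return toy_decrypt(data_key, self.data_store[region])           # level 2: decrypt the data


if __name__ == "__main__":
    ctl = EncryptingController(root_key=secrets.token_bytes(32))
    ctl.write("accounts", b"balance=42")
    print(ctl.read("accounts"))  # prints b'balance=42'
```

The host only ever calls `write` and `read`; unwrapping the data key and decrypting the region happen inside the controller. A copy of both stores taken without the root key yields only unreadable bytes.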

An NVDIMM with MRAM as the cache works very well for this application, particularly if a new controller allows independent access to the MRAM and the flash rather than routing all flash accesses through the cache. The MRAM can then serve as the key-store namespace, and the flash becomes the data-storage namespace. Because the MRAM and flash are separate chips, a failure in one need not affect the other, which meets the goal of keeping the keys in a memory separate from the data.

MRAM Uses Less Energy

Replacing DRAM with MRAM also saves energy in several ways:

- DRAMs utilize a pre-charge mechanism for reading data. That pre-charging operation uses energy that’s not required for reading an MRAM.

- DRAMs need to be refreshed constantly. Refresh is performed by dedicated circuitry that keeps track of which memory rows need to be refreshed at each refresh event. That circuitry uses energy, as do the memory cells during refresh. MRAM requires no such circuitry, so that energy is saved.

- DRAMs must be read one line at a time. When multiple threads are served out of a single memory, accesses will appear to be relatively random. Therefore, most of the contents of each retrieved memory line won’t be used, since successive reads will most likely come from different regions in memory. MRAM, by contrast, is byte-addressable. Only those bytes needed are retrieved, making access faster and reducing energy requirements.

- Cache storage and recovery in response to power events (up, down, fail, and recover) take time and use energy—some of which is supplied by the backup battery. MRAM requires no such handling, which saves that energy.
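The third point above can be illustrated with a simple simulation. The sketch below is a rough Python model, not a DRAM timing simulator: it assumes a single bank with an open-row policy, 8 KiB rows, 64-byte reads, and uniformly random addresses over 1 GiB, all hypothetical parameters, and it reads the article’s “line” as the DRAM row that each activation fetches. It reports what fraction of the fetched bytes the reads actually use.

```python
import random


def row_buffer_utilization(num_reads: int, access_bytes: int = 64,
                           row_bytes: int = 8192, memory_bytes: int = 1 << 30,
                           seed: int = 0) -> float:
    """Fraction of bytes fetched into the row buffer that reads actually use.

    Simplified model: one bank, open-row policy. A read that hits the open row
    reuses it; any other read activates (fetches) a whole new row.
    """
    if num_reads <= 0:
        raise ValueError("num_reads must be positive")
    rng = random.Random(seed)
    open_row = -1                 # no row open yet
    rows_activated = 0
    for _ in range(num_reads):
        addr = rng.randrange(0, memory_bytes, access_bytes)  # random aligned address
        row = addr // row_bytes
        if row != open_row:       # row miss: a whole row must be fetched
            rows_activated += 1
            open_row = row
    bytes_used = num_reads * access_bytes
    bytes_fetched = rows_activated * row_bytes
    return bytes_used / bytes_fetched


if __name__ == "__main__":
    print(f"random access utilization: {row_buffer_utilization(100_000):.4f}")
```

Under these assumptions nearly every read opens a new row, so utilization comes out close to 64/8192, under 1%. Real memory controllers spread accesses across many banks and reorder requests, which this sketch ignores.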

MRAMs Create New NVDIMM Opportunities

NVDIMMs as defined today use DRAM as a cache, mediating access to the full flash storage. Even for today’s applications, the current architectures can benefit from swapping MRAM in place of the DRAM: power events no longer need to be handled, backup energy requirements drop, performance increases, and management software becomes simpler.

Meanwhile, new MRAM-based designs that permit direct access to both the MRAM and flash can open up new applications like key-encrypted key storage for accessing namespaces in the flash memory. This is but one variation; other architectures are likely to present benefits for different applications.

NVDIMM architects are faced with the opportunity to innovate as system designers envision yet new ways to use NVDIMM memories. Spin Transfer Technologies remains committed to working with NVDIMM creators to explore how this new memory class can further reduce system cost, decrease system power, and improve system performance.