This article is part of the TechXchange: Generating AI.

What you’ll learn:

- Generative AI accelerators aren’t capable of handling training and inferencing equally.

- GenAI accelerators consume enormous amounts of energy.

- Creating effective and efficient LLMs requires a unique skill set.

Lauro Rizzatti has turned his vast understanding of the semiconductor and verification markets to generative AI to better understand its benefits and limitations, something long overdue as myths and misconceptions multiply across the internet. Below he debunks 11 of those myths surrounding the technology.

1. Any computing engine, whether CPUs, GPUs, FPGAs, or custom ASICs, can accelerate GenAI.

Not true. CPUs lack the performance to accomplish the task. GPUs possess the nominal performance but suffer from low efficiency, which cuts the deliverable speed to a fraction of the nominal specification. FPGAs are less than ideal for the job.

Custom ASICs, when tailored to a specific task, are the only viable solution.

2. GenAI accelerators can handle both training and inferencing.

In principle, it’s possible to train a model and run inference on it with the same GenAI processor. The reality is that training and inferencing are two different tasks with unique attributes. While model training and inference share performance requirements, they differ in four other characteristics: memory, latency, power consumption, and cost (Table 1).

Each unique set of attributes leads to GenAI processors with rather different characteristics. Typically, model training is carried out on extensive computing farms built on vast arrays of state-of-the-art GPUs. They run for days, consume large amounts of electric power, and cost up to hundreds of millions of dollars.

The real problem resides with inference at the edge. Edge GenAI processors today can only execute models tailored to specific tasks. No edge GenAI hardware is able to run inference on the full GPT-4.

3. Custom GenAI accelerators trade off programmability for performance.

While this may be correct for some custom implementations, it’s not true for a practical solution. An ideal solution must be programmable to allow for in-field upgrading. AI in general and GenAI in particular are endeavors in constant evolution. Today’s cutting-edge AI algorithms will be obsolete tomorrow. Larger, more powerful algorithms will replace them. Expensive hardware solutions must be upgradable in the field for at least three years.

4. GenAI accelerators consume less energy than traditional computing resources.

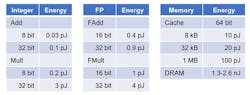

The reality is the opposite. Data processing consumes less energy than moving data. As numerically shown in a study from Stanford University led by Professor Mark Horowitz, power consumption in CMOS ICs is dominated by data movement, not data processing (Table 2).

Memory access consumes orders of magnitude more energy than basic digital logic. Adders and multipliers dissipate from less than one picojoule when using integer arithmetic to a few picojoules when processing floating-point arithmetic.

By contrast, the energy spent accessing data in cache jumps one order of magnitude to 20-100 picojoules, and up to three orders of magnitude to over 1,000 picojoules when data is accessed in DRAM. AI and GenAI processors are examples of designs dominated by data movement.
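To put those figures in perspective, here is a minimal Python sketch that splits the energy of a small workload into compute and data movement. The per-operation values are assumptions picked from within the ranges quoted above (they aren’t taken from the study’s table), and the workload and the `energy_breakdown` helper are hypothetical.

```python
# Per-operation energy in picojoules. Point values are ASSUMPTIONS chosen
# from within the ranges quoted in the text, not figures from the study.
ENERGY_PJ = {
    "int_op": 0.5,          # "less than one picojoule"
    "fp_op": 3.0,           # "a few picojoules"
    "cache_access": 50.0,   # within the 20-100 pJ range
    "dram_access": 1000.0,  # lower bound of "over 1,000 picojoules"
}


def energy_breakdown(num_ops, cache_accesses, dram_accesses, op="fp_op"):
    """Return (compute_pj, movement_pj, movement_share) for a workload."""
    compute = num_ops * ENERGY_PJ[op]
    movement = (cache_accesses * ENERGY_PJ["cache_access"]
                + dram_accesses * ENERGY_PJ["dram_access"])
    return compute, movement, movement / (compute + movement)


if __name__ == "__main__":
    # Hypothetical workload: 1,000 floating-point operations,
    # 1,000 cache accesses, and 100 accesses that go out to DRAM.
    compute, movement, share = energy_breakdown(1000, 1000, 100)
    print(f"compute:  {compute:,.0f} pJ")
    print(f"movement: {movement:,.0f} pJ")
    print(f"data movement share of total energy: {share:.1%}")
```

Even with only one in ten accesses reaching DRAM, data movement accounts for nearly all of the energy in this toy workload, which is the point the myth overlooks.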

5. Large language models (LLMs) are evolving with newer versions that are smaller than previous generations, since algorithm engineers learned how to reduce their dimensions without compromising functionality.

Not really. Since LLMs were created several years ago by a Google research team, they have constantly improved and, in the process, grown larger and larger. The trend continues and poses a challenge to the computing hardware. Attempting to alleviate the pressure on the hardware design community, software modeling teams design smaller models to address specific tasks in narrow applications.

6. Creating LLMs is just a software modeling endeavor; it requires no specific skills.

According to a panelist at the recent “2023 Efficient Generative AI Summit,” there are possibly 100 software designers in the world with the talent to design effective and efficient LLMs. Giving the panelist the benefit of the doubt, LLM development is an engineering endeavor as much as an artistic conception that requires a unique skill set not in abundance today.

7. A single LLM will define all use cases.

This may be every LLM development team’s dream, but the truth is that such a goal may never be achievable. An LLM usable for text generation and image/video/music creation across the entire universe of applications, including financial, legal, medical, scientific, industrial, commercial, educational fields, and more, would lead to a model of astronomical dimensions. It’s not practical to design and virtually impossible to train and deploy.

8. GenAI is effective in all languages.

As a general statement, a GenAI model is trained on a specific language and then deployed for inference in the same language environment. To date, the development language has been English, but versions in other languages, such as the major European and Asian languages, do exist.

In principle, it’s always possible to train and then deploy a model in any language, but the source data necessary to thoroughly train a model may be insufficient.

9. GenAI is unbiased and objective.

AI is built on a statistical, not deterministic, foundation. GenAI responses embed a degree of uncertainty. They ought to be accepted on the basis of being “probably” correct. They’re often correct and only infrequently wrong. The human overseeing the GenAI application should always evaluate the response with a critical mind.
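The statistical foundation can be illustrated with a minimal Python sketch of how a model might pick its next word: it samples from a probability distribution rather than computing a single guaranteed answer. The candidate words, the scores, and the `sample_token` helper below are all hypothetical, and real systems are far more elaborate.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities that sum to 1."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature=1.0, rng=random):
    """Draw one token at random, weighted by its softmax probability."""
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


if __name__ == "__main__":
    # Hypothetical candidates and scores for completing a sentence.
    tokens = ["Paris", "Lyon", "Rome"]
    logits = [2.0, 1.0, 0.1]
    for tok, p in zip(tokens, softmax(logits)):
        print(f"{tok}: {p:.3f}")
    # Repeated runs can return different words for the same prompt.
    print([sample_token(tokens, logits) for _ in range(10)])
```

The highest-scoring word is chosen most of the time, but not every time, which is why a response is best treated as probably correct rather than certain.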

10. GenAI can replace human creativity and innovation.

A resounding no. As stated in response to Myth #9, GenAI responses must be evaluated by a human with a deep knowledge of the specific field in which GenAI has been applied. Ignoring the assessment may lead to trouble, ultimately jeopardizing the institutions of an entire country, ruining education, and enabling plagiarism.

Still, GenAI has the potential to lift human productivity. A June 2023 report from McKinsey titled “The economic potential of generative AI” stated that generative AI could add up to $4.4 trillion annually to the global economy across 63 analyzed use cases. The estimate might double when adding the impact of embedding generative AI into software currently used for other tasks beyond those use cases.

11. GenAI is too new and too risky.

GenAI is indeed new, but not risky. It’s a misconception to believe that humanity will disappear because GenAI has gone off track. The concern stems from some “chatbots’ threats to destroy humans” reported by bloggers, researchers, and the like.

It’s important not to take emotional responses to AI too seriously. After all, as discussed in WIRED magazine, we’re capable of downplaying reactions to inanimate objects. Pinocchio, for example, tells us he wants to be a “real boy,” so why do we react with an emotional breakdown when a chatbot says she wants to marry us?
