New Cortex-A Targets Edge AI

What you’ll learn:

- What’s in Arm’s new edge compute platform?

- Quad-core Cortex-A320 handles many AI/ML tasks itself.

- Cortex-A320 boasts improved security.

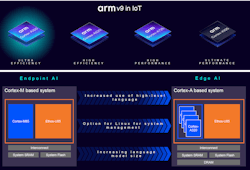

Arm’s low-end edge-computing solution combines the Cortex-M85 with an Ethos-U85 neural processing unit (NPU). The platform can handle a surprising range of artificial-intelligence and machine-learning (AI/ML) work, but some compute chores need a bit more horsepower. Enter the Cortex-A320 (Fig. 1).

Quad-Core Cortex-A320 Handles Many AI/ML Tasks Itself

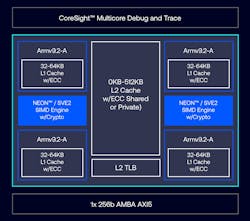

The Arm Cortex-A320 cores are designed for low power and high efficiency relative to the rest of the Cortex-A line. They’re based on the Armv9.2-A architecture. A typical quad-core Cortex-A320 layout adds cache and NEON/SVE2 SIMD engines with crypto support (Fig. 2). Multicore versions use Arm’s DSU-120T DynamIQ Shared Unit (DSU).

The Ethos-U85 NPU continues to do the heavy AI/ML lifting, but the Cortex-A320 handles AI/ML models better than the low-end Cortex-A35 or the Cortex-M85. Arm touts up to a 70% performance boost plus a 10X increase in AI/ML performance, and says the core is 50% more power-efficient than the Cortex-A520.

Dimosthenis Rossidis, Senior Product Manager, IoT Line of Business with Arm, notes that the Cortex-A320 gets some of its improvements from “a narrow fetch and decode datapath, densely banked L1 caches, a reduced-port integer register file, and other optimizations.” The cores have an 8-stage pipeline.

The Cortex-A320 supports NEON matrix-multiply instructions and the BFloat16 (BF16) floating-point format that’s popular with compact AI/ML models. It also handles Scalable Vector Extension 2 (SVE2) instructions.
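To make the data formats concrete, here’s a minimal Python sketch (not Arm code) of what BFloat16 is: the top 16 bits of an IEEE float32, keeping float32’s 8-bit exponent range but only 8 bits of significand precision (7 stored plus the implicit leading 1). The conversion uses round-to-nearest-even, one common choice. The sketch also emulates the arithmetic of an int8 matrix multiply-accumulate in the style of SMMLA from Arm’s I8MM extension, which per 128-bit segment multiplies a 2x8 int8 matrix by an 8x2 int8 matrix into a 2x2 block of int32 accumulators. We’re assuming the A320’s NEON matrix-multiply support includes an instruction of this kind; the function names are hypothetical, and register layout is not modeled.

```python
import struct

def float_to_bf16_bits(x: float) -> int:
    """Convert a value to a 16-bit bfloat16 pattern, rounding to nearest even."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]  # float32 bit pattern
    if (bits & 0x7F800000) == 0x7F800000 and (bits & 0x007FFFFF):
        # NaN: truncate and force the quiet bit so the result stays a NaN
        return (bits >> 16) | 0x0040
    # Round to nearest even on the 16 low bits being discarded
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF

def bf16_bits_to_float(b: int) -> float:
    """Widen a bfloat16 pattern back to float32 by appending 16 zero bits."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]

def smmla_block(acc, a, b):
    """Emulate one SMMLA-style segment (hypothetical helper).

    a:   2x8 int8 matrix (rows)
    b:   the 8x2 int8 operand stored as 2 rows of 8 (i.e. transposed)
    acc: 2x2 int32 accumulators, updated in place and returned
    """
    for i in range(2):
        for j in range(2):
            acc[i][j] += sum(a[i][k] * b[j][k] for k in range(8))
    return acc

print(hex(float_to_bf16_bits(1.0)))                           # 0x3f80
print(bf16_bits_to_float(float_to_bf16_bits(3.14159265)))     # 3.140625
print(smmla_block([[0, 0], [0, 0]], [[1] * 8, [2] * 8], [[1] * 8, [-1] * 8]))
```

The pi round trip shows the trade-off: BF16 keeps float32’s range (so values like 1e30 survive) while giving up precision, which compact AI/ML models usually tolerate.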


The combination of the Cortex-A320 and Ethos-U85 is designed to run large language models (LLMs) with more than one billion parameters locally.

Cortex-A320 Boasts Improved Security

Arm’s edge-AI platform also puts a strong emphasis on hardware security (Fig. 3). Features include Arm’s Memory Tagging Extension (MTE) and Pointer Authentication (PAC) with Branch Target Identification (BTI), which are designed to improve memory safety and control-flow integrity at the hardware level.

MTE uses 4-bit address tags and 4-bit memory tags. The address tag is stored in otherwise unused upper bits of a pointer, while each memory tag is associated with a 16-byte region of memory, known as a tag granule. If the address tag and the memory tag match, the access proceeds normally; otherwise, the mismatch is flagged as an error.
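The tag check can be modeled in a few lines. This Python sketch is a software illustration, not how MTE is actually programmed: it assumes the 4-bit logical tag sits in pointer bits 59:56 (in the top byte, which address translation ignores) and keeps one 4-bit allocation tag per 16-byte granule in a dictionary. tag_region() plays roughly the role of MTE’s IRG/STG instructions, check() stands in for the comparison the hardware performs on each load and store, and the TaggedMemory and TagMismatch names are hypothetical.

```python
TAG_SHIFT = 56                 # logical tag lives in pointer bits 59:56
ADDR_MASK = (1 << 56) - 1      # the top byte is ignored when forming the address
GRANULE = 16                   # bytes covered by one allocation tag

class TagMismatch(Exception):
    """Stands in for the tag-check fault the hardware would report."""

class TaggedMemory:
    def __init__(self):
        self.tags = {}  # granule index -> 4-bit allocation tag (untagged = 0)

    def tag_region(self, addr: int, size: int, tag: int) -> int:
        """Color every granule of [addr, addr + size) and return a tagged pointer."""
        assert addr % GRANULE == 0, "allocations are granule-aligned"
        first = addr // GRANULE
        last = (addr + size + GRANULE - 1) // GRANULE
        for g in range(first, last):
            self.tags[g] = tag & 0xF
        return (addr & ADDR_MASK) | ((tag & 0xF) << TAG_SHIFT)

    def check(self, ptr: int) -> int:
        """Compare the pointer tag with the memory tag; return the plain address."""
        addr = ptr & ADDR_MASK
        logical = (ptr >> TAG_SHIFT) & 0xF
        allocation = self.tags.get(addr // GRANULE, 0)
        if logical != allocation:
            raise TagMismatch(
                f"pointer tag {logical:#x} != memory tag {allocation:#x} at {addr:#x}")
        return addr

mem = TaggedMemory()
buf = mem.tag_region(0x1000, 32, tag=0x3)   # 32-byte buffer = two granules
mem.check(buf + 16)                          # in bounds: tags match
try:
    mem.check(buf + 32)                      # one granule past the end
except TagMismatch as err:
    print("overflow caught:", err)

mem.tag_region(0x1000, 32, tag=0x5)          # "free" retags the memory
try:
    mem.check(buf)                           # stale pointer still carries tag 3
except TagMismatch as err:
    print("use-after-free caught:", err)
```

Because memory is colored granule by granule, both an overflow into a differently tagged neighbor and a stale pointer after retagging trip the check. With only 16 tag values, an unrelated allocation can share a tag by chance, so detection is probabilistic rather than guaranteed.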

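PAC/BTI is designed to mitigate return- and jump-oriented programming attacks. PAC adds instructions that insert a cryptographic authentication code into the otherwise unused upper bits of a 64-bit pointer, such as a function’s return address, and verify it before the pointer is used. An attacker who overwrites the pointer can’t produce a valid code without the secret key, so the tampering is detected at runtime. BTI addresses indirect jumps and calls instead: in guarded memory pages, their targets must be marked with a BTI landing-pad instruction, or the branch faults. Compilers typically insert these instructions automatically (for example, with the GCC and Clang -mbranch-protection=standard option), so the features are largely transparent to application code.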
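A toy Python model of both mechanisms follows. It’s a sketch under loud assumptions: the code occupies pointer bits 48 to 63 of an assumed 48-bit virtual address (real PAC width and position depend on the address-space size and whether Top Byte Ignore is enabled), and truncated HMAC-SHA256 stands in for the hardware cipher (Arm’s architected QARMA or an implementation-defined algorithm). sign() and authenticate() loosely mirror PACIASP and AUTIASP, which sign and check the return address using the stack pointer as a modifier; indirect_branch() models the BTI landing-pad rule. All function names, the key, and the addresses are hypothetical.

```python
import hashlib
import hmac

PAC_SHIFT = 48                    # assumption: 48-bit virtual addresses
ADDR_MASK = (1 << PAC_SHIFT) - 1  # low bits hold the real address

class AuthFailure(Exception):
    """Stands in for the failure reported when a pointer fails authentication."""

class BranchTargetError(Exception):
    """Stands in for the BTI exception on an unmarked indirect-branch target."""

def _pac(key: bytes, addr: int, modifier: int) -> int:
    # Truncated HMAC-SHA256 is a stand-in for the hardware cipher: 16-bit code
    msg = addr.to_bytes(8, "little") + modifier.to_bytes(8, "little")
    return int.from_bytes(hmac.new(key, msg, hashlib.sha256).digest()[:2], "little")

def sign(ptr: int, modifier: int, key: bytes) -> int:
    """Like PACIASP: embed a code derived from pointer, modifier (e.g. SP), and key."""
    addr = ptr & ADDR_MASK
    return addr | (_pac(key, addr, modifier) << PAC_SHIFT)

def authenticate(signed: int, modifier: int, key: bytes) -> int:
    """Like AUTIASP: recompute the code and strip it, or fail on a mismatch."""
    addr = signed & ADDR_MASK
    if (signed >> PAC_SHIFT) != _pac(key, addr, modifier):
        raise AuthFailure(f"bad PAC on {signed:#018x}")
    return addr

def indirect_branch(target: int, landing_pads: set) -> int:
    """Model BTI: an indirect branch into guarded code must hit a landing pad."""
    if target not in landing_pads:
        raise BranchTargetError(f"{target:#x} is not a BTI landing pad")
    return target

key = bytes(range(16))                  # hypothetical per-process secret key
sp, ret = 0x7FFF_F000, 0x0040_1234      # hypothetical stack pointer, return address
signed = sign(ret, sp, key)
print(hex(authenticate(signed, sp, key)))           # 0x401234

# Attacker swaps in a gadget address but can't recompute the code; this fails
# unless the 16-bit code is guessed by chance (1 in 65,536).
forged = (signed & ~ADDR_MASK) | 0x0040_9999
try:
    authenticate(forged, sp, key)
except AuthFailure as err:
    print("ROP attempt caught:", err)

pads = {0x40_1000, 0x40_2000}           # addresses that start with a BTI instruction
indirect_branch(0x40_1000, pads)        # legitimate function entry
try:
    indirect_branch(0x40_1008, pads)    # jump into the middle of a function
except BranchTargetError as err:
    print("JOP attempt caught:", err)
```

Because the modifier is the stack pointer, a signed return address replayed into a different stack frame also fails authentication, which is part of what blunts return-oriented programming.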

The Cortex-A320 supports Secure EL2 (Exception Level 2), which enhances Arm’s TrustZone security isolation.