Advanced AI SoC Powers Self-Driving Car

Check out Electronic Design's coverage of CES 2024.

What you’ll learn:

- How Ambarella’s system improves radar sensing.

- How the CV3 family addresses automotive applications like self-driving cars.

- What’s inside the CV3 system-on-chip (SoC).

Advanced driver-assistance systems (ADAS) need high-performance computing engines to analyze the array of sensors around the car. The number of sensors and compute requirements continue to grow as self-driving cars put more demands on the hardware and software to provide a safe ride.

The Society of Automotive Engineers (SAE) specifies six levels of driving automation. Level 0 is fully manual, while Level 5 is fully autonomous. Many companies are working toward full autonomy, but we’re not quite there yet. Companies such as Ambarella are pushing that envelope, though.

I recently had a chance to check out Ambarella’s self-driving technology, riding along with Alberto Broggi, General Manager at VisLab srl and Ambarella (watch the video above). The team equipped a car with Ambarella’s CV3 chip and custom radar sensors plus additional sensors (Fig. 1). The self-driving software runs on the CV3, which incorporates multiple Arm cores and artificial-intelligence (AI) acceleration cores. As with most self-driving solutions today, this is a testbed that requires a live driver at the wheel to take over if necessary.

The CV3 sits in a system housed in the trunk of the car (Fig. 2). The chip itself is much smaller than the enclosure, which also holds other boards plus peripheral and network interfaces.

The standard digital cameras and radar sensors ring the car (Fig. 3). These are connected to the automotive-grade CV3. The 4D imaging radar is from Oculii, which was recently acquired by Ambarella.

Oculii’s virtual aperture imaging software enhances the angular resolution of the radar system to provide a 3D point cloud. This is accomplished with a much simpler antenna and transmitter/receiver system than would normally be required to provide comparable point-cloud resolution. It uses dynamic waveform generation that’s performed by AI models. The approach can scale to higher resolution.
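To make the idea of a radar point cloud concrete, here is a minimal Python sketch. It assumes each radar detection arrives as a range, an azimuth angle, an elevation angle, and a Doppler velocity, and it converts those into Cartesian points. The `Detection` and `Point` types and the function names are hypothetical, chosen for illustration only. This is not Oculii’s or Ambarella’s software, and it doesn’t model the virtual aperture or waveform techniques described above.

```python
import math
from typing import Iterable, List, NamedTuple


class Detection(NamedTuple):
    """One radar return in sensor-centric spherical coordinates (assumed layout)."""
    range_m: float        # distance to the target, in meters
    azimuth_rad: float    # horizontal angle, 0 = straight ahead
    elevation_rad: float  # vertical angle, 0 = level with the sensor
    doppler_mps: float    # radial velocity, in meters per second


class Point(NamedTuple):
    """One point of the cloud: Cartesian position plus the radial velocity."""
    x: float
    y: float
    z: float
    doppler_mps: float


def to_point(d: Detection) -> Point:
    """Convert a single spherical detection to a Cartesian point."""
    # Project the range onto the horizontal plane first.
    horizontal = d.range_m * math.cos(d.elevation_rad)
    return Point(
        x=horizontal * math.cos(d.azimuth_rad),  # forward
        y=horizontal * math.sin(d.azimuth_rad),  # sideways
        z=d.range_m * math.sin(d.elevation_rad),  # up
        doppler_mps=d.doppler_mps,
    )


def to_point_cloud(detections: Iterable[Detection]) -> List[Point]:
    """Convert every detection in a frame into a point cloud."""
    return [to_point(d) for d in detections]
```

In this sketch, finer angular resolution simply means the azimuth and elevation values are known more precisely, which places each point more accurately in space.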

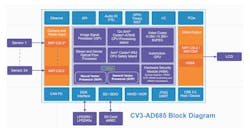

At the heart of the system is Ambarella’s ASIL-D-capable CV3-AD685, which includes a dozen 64-bit Arm Cortex-A78AE cores and an Arm Cortex-R52 for real-time support (Fig. 4). The two dozen MIPI CSI-2 sensors feed the image-signal-processing (ISP) unit. The ISP supports multi-exposure, line-interleaved high-dynamic-range (HDR) inputs. It can handle real-time multi-scale, multi-field-of-view (FoV) generation and includes a hardware dewarping engine.

There’s also a stereo and dense optical flow processor for advanced video monitoring. It addresses obstacle detection, terrain modeling and ego-motion from monocular video.

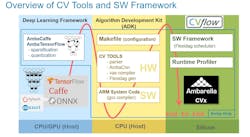

The Neural Vector Processor and the General Vector Processor accelerate a range of machine-learning (ML) models. Together, they make up CVflow, Ambarella’s AI/ML computer-vision processing system (Fig. 5). It specifically targets ADAS and self-driving car software chores.

The GPU is designed to handle surround-view rendering in addition to regular 3D graphics tasks. This enables a single system to handle user interaction as well as control and sensing work.

The processing is split into islands so that the safety-oriented island can take on ASIL D work while the other handles ASIL B work, which includes the video output. Error-correcting code (ECC) is used on all memory, including external DRAM, and a central error handling unit (CEHU) provides advanced error management.
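As a rough illustration of what ECC does, the Python sketch below implements the textbook Hamming(7,4) code, which stores 4 data bits in 7 bits and can correct any single flipped bit. The article doesn’t say which code the CV3 uses, and real memory ECC typically protects much wider words in hardware, so treat this as an assumption-laden toy example. The function names `encode` and `decode` are hypothetical.

```python
from typing import List, Tuple


def encode(nibble: int) -> List[int]:
    """Encode 4 data bits as a 7-bit Hamming codeword (positions 1..7)."""
    d = [(nibble >> i) & 1 for i in range(4)]  # d[0] is the least significant bit
    code = [0] * 8                             # index 0 is unused
    code[3], code[5], code[6], code[7] = d     # data bits go in non-power-of-two slots
    code[1] = code[3] ^ code[5] ^ code[7]      # parity over positions 1, 3, 5, 7
    code[2] = code[3] ^ code[6] ^ code[7]      # parity over positions 2, 3, 6, 7
    code[4] = code[5] ^ code[6] ^ code[7]      # parity over positions 4, 5, 6, 7
    return code[1:]


def decode(bits: List[int]) -> Tuple[int, int]:
    """Return (corrected data nibble, syndrome); syndrome 0 means no error seen."""
    code = [0] + list(bits)
    # XOR-ing the positions of all set bits yields 0 for a valid codeword,
    # or the position of the flipped bit after a single-bit error.
    syndrome = 0
    for pos in range(1, 8):
        if code[pos]:
            syndrome ^= pos
    if syndrome:
        code[syndrome] ^= 1                    # flip the faulty bit back
    d = [code[3], code[5], code[6], code[7]]
    nibble = sum(bit << i for i, bit in enumerate(d))
    return nibble, syndrome


# Usage: store a value, flip one bit to simulate a memory fault, then read it back.
stored = encode(0b1011)
stored[4] ^= 1                                 # corrupt position 5
value, where = decode(stored)
```

In the usage lines, `value` comes back as the original data and `where` reports the position that was repaired. Reporting a corrected fault is the kind of event an error-handling unit could log in a real system.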

The chip targets a range of applications, including driver monitoring systems (DMS) and multichannel electronic mirrors with blind-spot detection.