Neuromorphic NPU Sips Power to Handle Edge Machine-Learning Models

What you’ll learn:

- What are spiking neural networks (SNNs)?

- Why the Akida Pico neural processing unit (NPU) can use so little power to handle machine-learning models.

- Why neuromorphic computing is important to artificial intelligence.

Neuromorphic computing tries to mimic the behavior of biological neurons more closely by using spiking neural networks (SNNs), which take a different approach from the popular deep neural networks (DNNs). I talked with Steve Brightfield, Chief Marketing Officer at BrainChip, about the company’s ultra-low-power Akida Pico neural processing unit (NPU).

The Akida Pico NPU is a co-processor designed to pair with a low-power microcontroller, targeting IoT/edge-computing devices that can run for months or years on a battery or energy-harvesting sources. We’re talking about microwatts of power for artificial-intelligence and machine-learning (AI/ML) accelerated applications. It can handle applications like speech and audio processing without resorting to the cloud.
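To put “microwatts” in perspective, the sketch below estimates battery runtime from average power draw. The 225-mAh, 3-V coin cell and the power levels are assumptions chosen for illustration, not Akida Pico specifications, and the estimate ignores self-discharge and power-converter losses.

```python
# Back-of-the-envelope battery-life estimate for an always-on edge device.
# All numbers are hypothetical assumptions for illustration, not BrainChip
# specifications. Self-discharge and regulator losses are ignored.

HOURS_PER_DAY = 24.0


def battery_life_days(capacity_mah: float, voltage_v: float, avg_power_w: float) -> float:
    """Return runtime in days for a battery of the given capacity and nominal
    voltage driving a load with the given average power."""
    energy_wh = capacity_mah / 1000.0 * voltage_v  # stored energy in watt-hours
    hours = energy_wh / avg_power_w
    return hours / HOURS_PER_DAY


if __name__ == "__main__":
    # Assumed coin cell: 225 mAh at a nominal 3.0 V (about 0.675 Wh).
    for avg_power_uw in (10, 100, 1000, 10000):
        days = battery_life_days(225.0, 3.0, avg_power_uw * 1e-6)
        print(f"{avg_power_uw:>6} uW average -> {days:8.1f} days")
```

Under these assumptions, a 100-µW average load runs for roughly 281 days, while a 10-mW load drains the same cell in under three days. That gap is why microwatt-class inference matters for battery-powered and energy-harvesting designs.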

Ultra-Low-Power Akida Pico NPU for Edge Computing

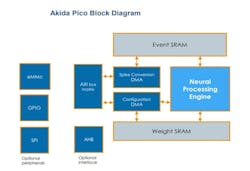

The Akida Pico is designed as a self-contained co-processor fed by the host (Fig. 1). It returns results to the host as a typical NPU would; the difference is how it handles the data. The next section provides more insight into neuromorphic computing and SNNs, but one of the key differences between SNNs and conventional DNNs is the use of spikes, or “pulses.”

Essentially, the Akida Pico front end converts data presented in the usual parallel form into spikes that are fed into the processing system. These spikes encode the changes in the data provided to the NPU. Some devices, such as Prophesee’s event-based Metavision image sensor, can provide the type of event stream the system is capable of processing without conversion.
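BrainChip didn’t detail how the Akida Pico front end generates spikes. A common generic technique is delta (“send-on-delta”) encoding, sketched below as an assumption: an event is emitted only when the input moves by at least a threshold, so a static signal produces no spikes at all. The function name and the threshold parameter are hypothetical.

```python
from typing import List, Tuple


def delta_encode(samples: List[float], threshold: float) -> List[Tuple[int, int]]:
    """Convert a sampled signal into (time_index, polarity) spike events.

    A spike is emitted whenever the signal has moved by at least `threshold`
    from the current reference level: +1 for an increase, -1 for a decrease.
    Unchanging input produces no events, and therefore no downstream work.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    if not samples:
        return []
    events: List[Tuple[int, int]] = []
    reference = samples[0]
    for t, x in enumerate(samples[1:], start=1):
        # A large jump can cross the threshold several times; emit one event per step.
        while x - reference >= threshold:
            reference += threshold
            events.append((t, +1))
        while reference - x >= threshold:
            reference -= threshold
            events.append((t, -1))
    return events
```

For example, `delta_encode([0.0, 0.0, 0.0, 1.0, 1.0, 0.5], 0.5)` yields `[(3, 1), (3, 1), (5, -1)]`. The flat stretches generate nothing, which is where an event-driven NPU avoids work.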

Regardless of the data source, the NPU ultimately outputs the model’s results. The model is trained like a conventional DNN, using large amounts of data to generate the weights employed within the model.

BrainChip’s MetaTF development environment is designed for developers who have TensorFlow models (Fig. 2). Its CNN2SNN tool converts convolutional neural networks (CNNs) into networks that can run on the Akida platform.

What is Neuromorphic Computing and a Spiking Neural Network?

We haven’t yet looked at the underlying neuromorphic computing approach because developers are typically more concerned with the AI/ML models. Spiking neural networks can’t handle every model, but neither can many other NPUs. The models an SNN can handle are developed, and often trained, using conventional tools.

CNN implementations are built from layers that specify matrix computations. At each layer, the inputs are combined with that layer’s weights to produce outputs that are processed by the next layer. The key point is that every layer performs its full computation for every input, which, computationally speaking, requires some heavy lifting. That’s why the work is typically done on an NPU or a GPU rather than a CPU. Optimizations that exploit sparsity, where weights are zero or very small, can accelerate computation without significantly degrading the results.
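The sketch below illustrates that cost with a single fully connected layer in plain Python, counting multiply-accumulate (MAC) operations so the work is visible. A dense layer performs one MAC per weight for every input; the optional zero-skipping path is a simple, generic example of a sparsity optimization. The function and parameter names are hypothetical, and real NPUs do this in hardware. Convolutional layers perform the same kind of MACs over sliding windows.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def dense_layer(x: List[float], weights: Matrix, skip_zeros: bool = False) -> Tuple[List[float], int]:
    """Compute y = W x for one fully connected layer.

    Returns the outputs and the number of multiply-accumulates (MACs) performed.
    With skip_zeros=False every weight is used for every input, as in a
    conventional dense layer. With skip_zeros=True, products involving a zero
    weight or a zero activation are skipped (a simple sparsity optimization).
    """
    y = [0.0] * len(weights)
    macs = 0
    for i, row in enumerate(weights):
        for j, w in enumerate(row):
            if skip_zeros and (w == 0.0 or x[j] == 0.0):
                continue  # the product would be zero, so skip it
            y[i] += w * x[j]
            macs += 1
    return y, macs


if __name__ == "__main__":
    x = [1.0, 0.0, 2.0]
    w = [[0.5, 0.0, 0.0],
         [0.0, 1.0, -1.0]]
    print(dense_layer(x, w))                   # all six MACs
    print(dense_layer(x, w, skip_zeros=True))  # same outputs, fewer MACs
```

Both calls produce the outputs `[0.5, -2.0]`, but the dense path spends six MACs while the zero-skipping path spends two. Exact zeros are skipped here; pruning “very small” weights would trade a little accuracy for more savings.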

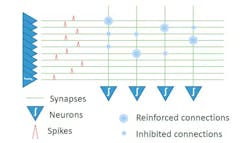

SNNs are quite different, although they use a similar layered structure (Fig. 3). In an SNN, incoming spikes trigger computation, whereas a CNN always performs computations at each point within the model, which makes CNNs more computationally intensive.
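The event-driven idea can be sketched with a minimal layer of integrate-and-fire neurons, a generic textbook model assumed here for illustration rather than BrainChip’s actual neuron design. Only inputs that spiked cause any work, so an idle time step costs nothing. The function name, the threshold, and the reset-to-zero behavior are illustrative choices.

```python
from typing import List, Tuple


def snn_step(spikes_in: List[int], weights: List[List[float]],
             potentials: List[float], threshold: float) -> Tuple[List[int], int]:
    """Advance a layer of integrate-and-fire neurons by one time step.

    spikes_in: indices of input neurons that fired during this step.
    weights[i][j]: connection weight from input j to output neuron i.
    potentials: membrane potential of each output neuron, updated in place.
    Returns the indices of output neurons that fired and the number of
    weight additions performed.
    """
    ops = 0
    # Event-driven: only inputs that spiked cause any work.
    for j in spikes_in:
        for i in range(len(potentials)):
            potentials[i] += weights[i][j]
            ops += 1
    fired: List[int] = []
    for i, v in enumerate(potentials):
        if v >= threshold:
            fired.append(i)
            potentials[i] = 0.0  # reset after firing
    return fired, ops


if __name__ == "__main__":
    w = [[0.6, 0.2, 0.0],
         [0.1, 0.9, 0.5]]
    v = [0.0, 0.0]
    for t, spikes in enumerate([[0], [], [0, 1]]):
        fired, ops = snn_step(spikes, w, v, threshold=1.0)
        print(f"step {t}: input spikes {spikes} -> fired {fired}, {ops} ops")
```

Over those three steps the layer performs 2, 0, and 4 additions, and both output neurons fire on the last step. A dense layer of the same 2×3 size would spend six MACs on every step, including the idle one.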

Scaling SNNs relies on some of the same techniques found in other neural-network implementations, including the use of weights of different sizes (bit widths). These optimizations must be weighed against their effect on the quality of the results.
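The precision trade-off can be illustrated with uniform symmetric quantization, a common generic technique assumed here for illustration; the article doesn’t say which scheme BrainChip’s tools use. Fewer bits shrink memory and arithmetic cost but enlarge the rounding error. The function names and sample weights are hypothetical.

```python
from typing import List


def quantize_symmetric(weights: List[float], bits: int) -> List[float]:
    """Uniform symmetric quantization of weights to signed `bits`-bit integers.

    Values are returned dequantized (integer level * scale) so the error
    against the original floats can be measured directly.
    """
    if bits < 2:
        raise ValueError("need at least 2 bits for signed symmetric quantization")
    qmax = 2 ** (bits - 1) - 1             # e.g. 127 for 8 bits, 7 for 4 bits
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0.0:
        return [0.0] * len(weights)
    scale = max_abs / qmax                 # step between representable levels
    return [max(-qmax, min(qmax, round(w / scale))) * scale for w in weights]


def max_abs_error(a: List[float], b: List[float]) -> float:
    """Largest absolute element-wise difference between two weight lists."""
    return max(abs(x - y) for x, y in zip(a, b))


if __name__ == "__main__":
    w = [0.9, -0.3, 0.05, -0.75, 0.5]
    for bits in (8, 4, 2):
        err = max_abs_error(w, quantize_symmetric(w, bits))
        print(f"{bits}-bit weights: max error {err:.4f}")
```

The maximum error grows as the bit width drops from 8 to 4 to 2. Whether that error matters depends on the model, which is why accuracy should be rechecked after reducing weight precision.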

Overall, SNNs fare very well compared to their counterparts in the applications suited to them, e.g., stream processing of audio and video data. The low-latency and low-power aspects of this type of NPU suit it for standalone applications that need to conserve power.

Developers can take advantage of BrainChip’s software tools and chip simulator to test their applications.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site.

You can visit my social media via these links:

- AltEmbedded on Electronic Design

- Bill Wong on Facebook

- @AltEmbedded on Twitter

- Bill Wong on LinkedIn

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master’s in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I do a bit of PHP programming for Drupal websites. I have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.