Semiconductor Test: Real-time adaptive test algorithm can safely reduce wafer testing time and cost

The testing of the semiconductor dies produced by a wafer fabrication plant involves a long series of operations requiring meticulous care. The time spent performing these tests markedly affects both a plant’s production throughput and production costs.

Seeing clear room for efficiency gains in the conventional approach to wafer testing, a team at ams, a manufacturer of mixed-signal and analog ICs, set out to devise a method that would substantially reduce test time while affecting the quality of the finished products as little as possible.

To do so, ams developed software that performs adaptive test simulations based on real test results derived from a test sample. After comparing the results of the simulated tests with the results of real tests, ams discovered that it could reduce test times by as much as 50%.

Detailed analysis shows that the new method lets very few defective components escape detection, so production quality is reduced only very slightly. This article describes the simulation method that ams devised and a statistical approach to reliably estimating the number of undetected defective parts that the simulated adaptive tests will pass.

Contrast with conventional die testing methods

Traditionally, all ICs in a wafer sort are subjected to the same list of tests, test limits, test flow, and test conditions during each back-end test insertion. If the wafer fabricator wants to refine the test procedure, the change must be made offline, after which the new test routine is applied uniformly to all subsequent dies. The advantage of this approach is that the units shipped to customers are known to be of extremely high quality. But it is a fixed and rigid process, and every modification or improvement requires human intervention.

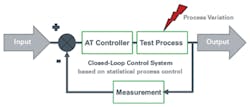

The concept explored by ams was to replace this fixed, uniform process with an adaptive, selective one. Figure 1 shows the process flow: a closed-loop control system is driven by a controlled statistical analysis of recent measurements. An adaptive test (AT) controller uses this analysis to predict the behavior of the device under test (DUT), and these predictions determine which test sets are applied to it. The algorithm is dynamically adapted for maximum cost effectiveness by tuning the closed-loop system in real time, that is, after every single measurement [1, 2].

Adaptive test algorithm

The adaptive testing method is built around an algorithm whose use is limited to the lifetime of a test program, which normally runs from the beginning to the end of a wafer sort. The algorithm is discarded at the end of each wafer sort, and a new one is built up in real time as the next wafer sort's test program runs.

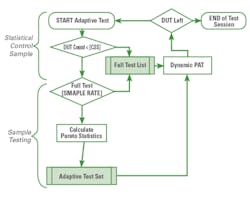

The construction of the adaptive algorithm is shown in Figure 2. Its principle is simple: the program tests the first n DUTs in the conventional way, performing all the specified tests on every device. The value of n is determined statistically: it is the number of units required to form a control sample (the control sample size, CSS) from which statistically significant conclusions can be drawn.
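The article does not say how n is calculated. As a purely illustrative assumption, the Python sketch below uses the zero-failure (success-run) binomial formula, a common way to size such a sample: n is the smallest number of fully tested DUTs for which a failure mode affecting at least a fraction `p_max` of dies would show up at least once with probability `confidence`. The function and parameter names are hypothetical.

```python
import math


def control_sample_size(p_max: float, confidence: float) -> int:
    """Smallest n such that (1 - p_max) ** n <= 1 - confidence.

    Hypothetical sizing rule (zero-failure binomial, or "success-run", formula):
    if a test's true per-DUT failure rate were p_max or higher, the chance of
    seeing zero failures in n fully tested DUTs would be at most 1 - confidence.
    Not necessarily the CSS rule used by ams.
    """
    if not (0.0 < p_max < 1.0 and 0.0 < confidence < 1.0):
        raise ValueError("p_max and confidence must lie strictly between 0 and 1")
    n = math.ceil(math.log(1.0 - confidence) / math.log1p(-p_max))
    # Correct for floating-point rounding right at the boundary.
    while (1.0 - p_max) ** n > 1.0 - confidence:
        n += 1
    while n > 1 and (1.0 - p_max) ** (n - 1) <= 1.0 - confidence:
        n -= 1
    return n


# Control sample needed to catch a 5% failure mode with 95% confidence.
print(control_sample_size(0.05, 0.95))  # 59
```

With `p_max = 0.05` and `confidence = 0.95` this gives the familiar 59 units; tightening `p_max` to 1% raises n to 299, which illustrates why rarer failure modes demand a larger control sample.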

After n DUTs have been tested, the algorithm calculates which tests a DUT is most likely to fail: these tests must be applied to every subsequent DUT.

All other tests may be omitted, potentially cutting test time dramatically. But they are not omitted for every DUT: a sample of DUTs continues to receive the otherwise omitted tests, to verify the assumption that those tests are unlikely to fail. A SAMPLE_RATE parameter in the algorithm determines how many DUTs are sampled in this way.

This raises the question: which tests are DUTs likely to fail? In practice, the rule for deciding whether a test may be omitted is very strict. Once any DUT in a sample fails a given test, that test may not be omitted for any subsequent DUT for the rest of the test session. Put another way, only tests that have never failed within the test session may be omitted.
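The flow described in the last three paragraphs can be sketched as a small controller. This is a minimal Python illustration, not ams's implementation: the class and method names are hypothetical, tests are modeled as callables that return True on pass, and `sample_rate` is assumed to mean that every `sample_rate`-th DUT after the control sample receives the full test list, which is only one possible reading of SAMPLE_RATE.

```python
from typing import Callable, Dict, List, Sequence, Set

# Hypothetical interface: a check takes a DUT index and returns True on pass.
CheckFn = Callable[[int], bool]


class AdaptiveTestController:
    """Sketch of the adaptive flow (names and sampling rule are illustrative).

    * The first `css` DUTs get the full test list (the control sample).
    * After that, only tests that have failed at least once in this session
      are mandatory; every `sample_rate`-th DUT still gets the full list.
    * Stop On Fail: a DUT is rejected at its first failing test.
    """

    def __init__(self, tests: Sequence[str], css: int, sample_rate: int) -> None:
        if css < 0 or sample_rate < 1:
            raise ValueError("css must be >= 0 and sample_rate >= 1")
        self.tests = list(tests)
        self.css = css
        self.sample_rate = sample_rate
        self.ever_failed: Set[str] = set()  # tests that may no longer be omitted
        self.duts_seen = 0

    def select_tests(self) -> List[str]:
        """Tests for the next DUT, kept in the original Test 1..N order."""
        if self.duts_seen < self.css:
            return list(self.tests)  # still building the control sample
        if (self.duts_seen - self.css) % self.sample_rate == 0:
            return list(self.tests)  # sample DUT: re-check the omitted tests
        return [t for t in self.tests if t in self.ever_failed]

    def test_dut(self, dut: int, run: Dict[str, CheckFn]) -> bool:
        """Run the selected tests with Stop On Fail; True means 'pass'."""
        selected = self.select_tests()
        self.duts_seen += 1
        for name in selected:
            if not run[name](dut):
                self.ever_failed.add(name)  # mandatory for the rest of the session
                return False
        return True


run = {
    "T1": lambda d: True,
    "T2": lambda d: d != 1,  # DUT 1 fails Test 2
    "T3": lambda d: True,
    "T4": lambda d: True,
}
ctrl = AdaptiveTestController(["T1", "T2", "T3", "T4"], css=3, sample_rate=4)
print([ctrl.test_dut(d, run) for d in range(5)])  # [True, False, True, True, True]
print(ctrl.select_tests())  # ['T2']
```

Keeping the selected tests in their original order preserves the Stop On Fail sequence, and the only state carried from one DUT to the next is the set of tests that have ever failed, which mirrors the strict omission rule.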

This approach meets the aim of improving test efficiency by testing only as much as is required to meet the outgoing quality target, given the incoming manufactured quality level [3]. Two wafers of the same product, one with a high yield and one with a lower yield, have the same quality requirement for the ICs shipped to a customer. The algorithm works because it automatically tests the lower-yield wafer more intensively than the higher-yield wafer: the more often tests fail, the more tests become mandatory and the fewer can be omitted.
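The yield dependence can be made concrete with a simple binomial calculation. Assuming, for illustration only, that each test fails independently on each DUT with a fixed probability p and ignoring Stop On Fail, the chance that a test has failed at least once after k DUTs is 1 - (1 - p)^k; summing over tests gives the expected number of tests that have become mandatory. The function name and the per-test probabilities are hypothetical.

```python
from typing import Sequence


def expected_mandatory_tests(fail_probs: Sequence[float], duts_tested: int) -> float:
    """Expected number of tests that have failed at least once after
    `duts_tested` DUTs, assuming test i fails independently on each DUT with
    probability fail_probs[i] and every test is actually executed
    (Stop On Fail ignored for simplicity)."""
    return sum(1.0 - (1.0 - p) ** duts_tested for p in fail_probs)


# Hypothetical wafers with four tests each, after 100 fully tested DUTs.
high_yield = expected_mandatory_tests([0.001] * 4, 100)
low_yield = expected_mandatory_tests([0.01] * 4, 100)
print(f"{high_yield:.2f} vs {low_yield:.2f}")  # 0.38 vs 2.54
```

With ten times the per-test failure rate, the lower-yield wafer is expected to have about 2.5 of its four tests made mandatory after 100 DUTs, against about 0.4 for the higher-yield wafer, so far fewer of its tests can be skipped.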

Test escape risk management

Having devised a workable method for reducing test time, ams then had to verify its effect on fault coverage. To do so, it created a statistical approach to estimating the undetected lot fraction defective passed by the adaptive testing method.

“Lot fraction defective” is a statistical term: it means the fraction of defective ICs in a given population (lot) of ICs. As described above, adaptive testing is a kind of statistical sampling procedure. If a wafer is subjected to only a portion of the tests normally specified for every die, the user cannot be 100% certain that all the faulty parts will be detected.

However, the number of defects to be expected in the whole population can be estimated from information on previously tested units. The uncertainty of this estimate can be quantified with an appropriate statistical confidence interval. The result can then be used to derive a calculated lot fraction defective against which the results of an adaptive test program can be compared.
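One standard way to attach such a confidence interval to an observed defect count is the exact (Clopper-Pearson) binomial bound. The sketch below is an illustrative choice, not necessarily the method ams used: it computes the one-sided upper bound on the fraction defective by bisecting on the binomial cumulative distribution, using only Python's standard library. The function names are hypothetical.

```python
import math


def binom_cdf(x: int, n: int, p: float) -> float:
    """P(X <= x) for X ~ Binomial(n, p), summed in log space; needs 0 < p < 1."""
    log_p, log_q = math.log(p), math.log1p(-p)
    log_n_fact = math.lgamma(n + 1)
    total = 0.0
    for k in range(x + 1):
        log_pmf = (log_n_fact - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                   + k * log_p + (n - k) * log_q)
        total += math.exp(log_pmf)
    return total


def upper_fraction_defective(defects: int, n: int, confidence: float = 0.95) -> float:
    """One-sided exact (Clopper-Pearson) upper confidence bound on the
    fraction defective, given `defects` failures among `n` tested units."""
    if n <= 0 or not 0 <= defects <= n or not 0.0 < confidence < 1.0:
        raise ValueError("need n > 0, 0 <= defects <= n and 0 < confidence < 1")
    if defects == n:
        return 1.0
    alpha = 1.0 - confidence
    lo, hi = 0.0, 1.0
    # binom_cdf(defects, n, p) falls monotonically as p grows, so bisect for
    # the p at which it equals alpha.
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if not 0.0 < mid < 1.0:
            break  # interval has collapsed to float precision
        if binom_cdf(defects, n, mid) > alpha:
            lo = mid
        else:
            hi = mid
    return hi


# Zero defects in 100 sampled units: bound is 1 - 0.05 ** (1 / 100), about 0.0295.
print(upper_fraction_defective(0, 100))
```

For zero observed defects the bound reduces to 1 - (1 - confidence)^(1/n), which at 95% confidence is close to the well-known "rule of three" approximation 3/n.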

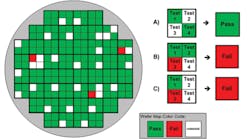

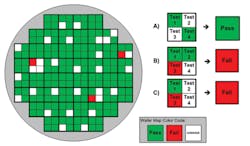

As an illustration of the practice of wafer testing, Figure 3 shows a simplified pass/fail wafer map of a conventional wafer sort, in which one square represents one DUT. Let’s assume that each DUT is normally subjected to a set of four tests. In a conventional test routine, these tests are always executed in sequence, from Test 1 to Test 4. As soon as a device fails any test, it is rejected as defective and the remaining tests are not performed on it. This is the so-called Stop On Fail (SOF) method. Only an IC that passes the entire test set is marked as good (green in the figure).

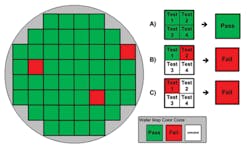

Figure 4. Wafer map with pass and fail information based on SOF strategy under adaptive test regime

Under the adaptive test regime shown in Figure 4, the criterion for passing or failing a device is the same as in conventional testing: once a subunit in a die has failed a test, the whole die is deemed defective. The difference is that an IC may be considered error-free even if not every subunit was tested, as die A in Figure 4 shows. Untested subunits may, of course, contain undetected faults.

The statistical principle ams uses to estimate the lot fraction defective is to use information about the tested subunits to estimate the probable number of defects among the untested subunits. The first assumption underlying this model is that subunits are statistically independent: the outcome of one subunit’s test does not depend in any way on the outcome of another’s. A further assumption is that any one subunit has the same chance of being faulty as any other.

Moreover, the number of faults is assumed to follow a binomial distribution, a probability model for sampling from an infinitely large population (or, equivalently, sampling with replacement). The statistical methods for deriving probabilities from the binomial model are covered in standard statistics texts. Applied to ams’s production output, the binomial model gave an encouraging result that led the developers to pursue the sampling approach to wafer testing described above.
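Under these assumptions, the expected number of escapes can be sketched as follows: for each test, the failure fraction observed on the subunits that were tested is applied to the subunits left untested on dies that passed. The record layout and function names are hypothetical, and this is a simplified reading of the approach, not ams’s exact estimator. A test that never failed contributes zero to the point estimate, which is why the estimate needs to be paired with an upper confidence bound on each test’s failure rate.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class OmissionStats:
    """Session statistics for one test (hypothetical record layout)."""
    executed: int         # subunits on which the test was actually run
    failed: int           # how many of those executions failed
    omitted_on_good: int  # dies passed as good on which this test was skipped


def _fail_fraction(s: OmissionStats) -> float:
    if s.executed <= 0:
        raise ValueError("no executions to estimate a failure rate from")
    return s.failed / s.executed


def expected_escapes(stats: Iterable[OmissionStats]) -> float:
    """Point estimate of undetected faulty subunits: each test's observed
    failure fraction times its number of untested subunits on passed dies."""
    return sum(_fail_fraction(s) * s.omitted_on_good for s in stats)


def prob_any_escape(stats: Iterable[OmissionStats]) -> float:
    """P(at least one escape), treating every untested subunit as an
    independent Bernoulli trial with its test's observed failure fraction."""
    p_none = 1.0
    for s in stats:
        p_none *= (1.0 - _fail_fraction(s)) ** s.omitted_on_good
    return 1.0 - p_none


stats = [
    OmissionStats(executed=1000, failed=2, omitted_on_good=500),
    OmissionStats(executed=1000, failed=0, omitted_on_good=800),
]
print(expected_escapes(stats))  # 1.0
print(prob_any_escape(stats))
```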

Verifying adaptive testing in practice

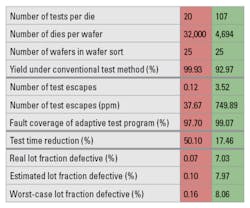

Table 1. Comparison of results from conventional testing and adaptive testing programs

Table 1 summarizes the results of two test programs run by ams on actual wafer sorts. Testing Product A with the adaptive test method, ams achieved a high fault coverage of 98% (that is, detecting 98% of the actual defective devices). There were on average 0.12 test escapes per wafer using the adaptive test method. The test time using the adaptive test method was half that of the conventional test method.
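The figures of merit in Table 1 reduce to simple ratios. The helper functions below are a hypothetical sketch: fault coverage is taken as the share of dies found defective by the full conventional program that the adaptive program also rejected, and the example counts are invented for illustration, not taken from Table 1.

```python
def fault_coverage(detected: int, escaped: int) -> float:
    """Share of truly defective dies (per the full conventional test) that the
    adaptive program also rejected; 1.0 by convention if none were defective."""
    total = detected + escaped
    return detected / total if total else 1.0


def escapes_ppm(escaped: int, shipped: int) -> float:
    """Test escapes per million shipped (passed) dies."""
    return 1e6 * escaped / shipped


def time_reduction(adaptive_time: float, conventional_time: float) -> float:
    """Fractional test-time saving of the adaptive program."""
    return 1.0 - adaptive_time / conventional_time


# Hypothetical counts, not ams data:
print(fault_coverage(49, 1))      # 0.98
print(escapes_ppm(3, 10_000))     # 300.0
print(time_reduction(5.0, 10.0))  # 0.5
```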

For Product E, for which a fault coverage of 99% was required, ams achieved a test time reduction of around 17%. The smaller reduction for Product E is consistent with its higher lot fraction defective: because more tests fail during the session, more of them become mandatory, and the algorithm dynamically reduces the number of omitted tests.

Interestingly, the table shows that the calculated value of the lot fraction defective closely matches the real value derived from the conventional test program and that it is a meaningful indicator of the risk of potential test escapes when implementing adaptive real-time testing.

Conclusion

The adaptive test methodology developed by ams has shown that intelligently omitting tests that a conventional test program would normally perform can reduce test time by up to 50%. At the same time, only a small number of defective components (test escapes, measured in ppm) go undetected by the adaptive test procedure, so the quality of the manufactured output is reduced only very slightly.

References

1. International Technology Roadmap for Semiconductors, 2011 Edition.

2. O’Neill, P. M., “Adaptive Test,” Avago Technologies, 2009.

3. Montgomery, D. C., Introduction to Statistical Quality Control, Fifth Edition, John Wiley & Sons, 2005.

About the author

Christian Streitwieser is a corporate test development engineer at ams and focuses on R&D as well as production testing projects. The Salzburg, Austria, native holds a master’s degree in biomedical engineering from the Technical University of Graz.