Ethernet Evolution Drives Parallel Changes in Test and Measurement

Ethernet is evolving at a rapid rate, and it’s important for readers in the related test-and-measurement space to understand the applications, drivers, and impacts of this evolution.

Over the next three years, new Ethernet standards will provide six new speeds of data transport, enabling those higher Ethernet speeds to be used in both existing and new applications. Existing applications that can take advantage of higher Ethernet speeds include high-performance computing and wireless access points, while new applications include vehicular Ethernet and the Internet of Things.

There’s a symbiotic relationship between designing electronic components for applications that use these new Ethernet standards and the testing and measurement tools that must evolve in parallel to assess component performance and compliance with those standards. Thus, T&M work must evolve in a separate-but-parallel universe.

In other words, the changes in technology that drive changes in the Ethernet ecosystem also drive changes in the way test-and-measurement tools operate and how they’re applied.

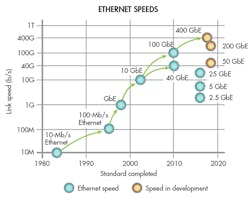

In its first 27 years, the IEEE 802.3 Working Group has standardized six rates of Ethernet: 10 and 100 megabits per second (Mb/s), 1 gigabit per second (Gb/s), 10 Gb/s, 40 Gb/s, and 100 Gb/s. As you read this article, six new speeds are simultaneously in development, targeting 2.5 Gb/s, 5 Gb/s, 25 Gb/s, 50 Gb/s, 200 Gb/s, and 400 Gb/s. All of these new networking speeds are anticipated to reach the market over the next three years.

Overall market success depends greatly on the ability to test and measure an Ethernet-related product’s adherence to standards and its ability to communicate successfully within and across multiple protocols, speeds, and platforms, and on keeping support calls to a minimum once a product is in clients’ or consumers’ hands. With Ethernet approaching global ubiquity, every player in the value chain relies on test-and-measurement tools.

Let’s look briefly at what’s driving these changes, how the technology that supports higher Ethernet speeds works, and how all of this impacts the Ethernet test-and-measurement space.

Changes in Signaling Technology

Until last year, optical and high-speed electrical signaling in Ethernet systems was based on non-return-to-zero (NRZ) modulation, which transmits each bit as one of two signal levels, a zero or a one. Now, as we jump from 25-Gb/s to 50-Gb/s signaling, we’re moving away from NRZ as the main modulation and toward four-level pulse-amplitude modulation (PAM4), which carries two bits per symbol. That shift affects 50 Gb/s, 100 Gb/s, 200 Gb/s, and 400 Gb/s, which will leverage 50-Gb/s-per-lane signaling. This will impact the semiconductors designed to the new Ethernet standards, as well as the T&M tools that will test those chips and the systems to which they provide logic.
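To make the difference concrete, here is a minimal Python sketch that compares NRZ with PAM4 (four-level pulse-amplitude modulation). The function names, the normalized level values, and the Gray-coded bit-to-level mapping are illustrative assumptions, not taken from any standard’s text or vendor tool. The point is only that PAM4 carries two bits per symbol, so the same bit stream needs half as many symbols.

```python
# Illustrative sketch only: normalized signal levels and a Gray-coded
# mapping are assumptions chosen for clarity.
NRZ_LEVELS = {0: -1, 1: +1}
PAM4_LEVELS = {(0, 0): -3, (0, 1): -1, (1, 1): +1, (1, 0): +3}


def nrz_encode(bits):
    """Map each bit to one of two levels (one bit per symbol)."""
    return [NRZ_LEVELS[b] for b in bits]


def pam4_encode(bits):
    """Map each pair of bits to one of four levels (two bits per symbol)."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [PAM4_LEVELS[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


bits = [0, 0, 0, 1, 1, 1, 1, 0]
nrz_symbols = nrz_encode(bits)
pam4_symbols = pam4_encode(bits)
print(len(nrz_symbols), nrz_symbols)    # 8 symbols for 8 bits
print(len(pam4_symbols), pam4_symbols)  # 4 symbols for the same 8 bits
```

Because four levels share the same voltage swing that NRZ divides into two, the spacing between adjacent levels shrinks, which is one reason test equipment has to change along with the signaling.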

Auto-negotiation, a physical-layer mechanism through which linked devices agree on how to talk to each other, is assuming greater importance in test equipment given the growing assortment of speed choices. So more smarts are being put into the signaling and the devices driving that signaling.
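The core idea of auto-negotiation can be sketched in a few lines of Python: each end advertises what it supports, and the link settles on the best option both share. This is a deliberately simplified model. The function name and the example speed sets are hypothetical, and real devices resolve the result through a priority table that also covers other capabilities, not a simple maximum.

```python
# Simplified model: speeds are in Mb/s, and "best" is assumed to mean
# "fastest". Real auto-negotiation uses a standardized priority table.
def resolve_speed(local_speeds, partner_speeds):
    """Return the highest speed both link partners advertise, or None."""
    common = set(local_speeds) & set(partner_speeds)
    return max(common) if common else None


switch_port = {1_000, 2_500, 5_000, 10_000}   # hypothetical multi-rate port
access_point = {100, 1_000, 2_500}            # hypothetical wireless AP

print(resolve_speed(switch_port, access_point))
```

With more speeds in play, a tester has more advertised combinations to exercise, including the case where the two ends share no common speed at all.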

People in the test-and-measurement space need to test the channel, which is whatever you’re transmitting across, whether it’s optics or copper. And they need to test the protocols that shape the data being sent to ensure that it’s sent at the proper speed and otherwise conforms to the standard.

These two worlds—the component development relying on new signaling technology, and the test-and-measurement space that ascertains that components and interfaces are properly and accurately exchanging signals—are highly related going forward.

With so much change coming in such a short time, the test-and-measurement tools must be there to support it. Otherwise, the components will be manufactured and integrated, but whether they’re working and interoperating and deployable is another question altogether. This affects everyone in the value chain, from those involved in component design, development, and deployment to the IT managers and data-center managers involved in putting networks together and ensuring they “work.” That is, they must function in a way whereby all of the technology is invisible (and this discussion irrelevant) to the end user.

What Do T&M Tools Do?

Test-and-measurement tools provide the ability at any point in time or place in the ecosystem to tap into a signal or a set of signals without affecting the signals under observation. Then they examine the transactions or the dialog on the wires in question, and compare those transactions against a specification or standard, whether it’s open or proprietary in nature.

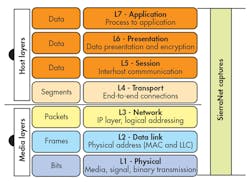

Test-and-measurement tools make it possible to look into Ethernet’s architecture from the physical layer through the operating-system driver layers and application layers to enable the development environment to effectively execute its role. Someone writing drivers for the Windows 10 operating system, for instance, has a driver base and knows how to write in the C programming language. But how is that driver actually affecting transactions once it’s out of the software space and traveling across myriad interconnects in the ecosystem?

As Ethernet continues to evolve new speeds, new functionalities, and new protocols over the wires, we need to create new tools to ensure standards compliance, interoperability, and functional operation in the market. In some cases, these are homegrown, proprietary tools (a test suite) that a company creates to assess its own product, say, a hard drive, to glean information on performance metrics and specifications such as mean time between failures, latencies, and access times: all of the things you see on a marketing spec sheet.

The Ethernet community has long adapted “homegrown” tools and generally available software tools in combination with taps and traffic generators to create test environments. Up to the currently deployed Ethernet speeds, these have proved sufficient.

As the Ethernet ecosystem creates protocols that support higher bit rates and longer link distances to enable new applications, a continuous evolution of both software and hardware test-and-measurement tools is required. Whether proprietary or open-source in nature, they must address the new challenges introduced by increasing speeds.

Different Types of Test Equipment

The basic categories for Ethernet test-and-measurement tools are physical-layer tools, logic tools, and software tools. Oscilloscopes are applied in the physical-layer space, ensuring the PHYs can function at line rate. Software utilities historically have been the primary tool of the Ethernet protocol engineer, and the evolution in this area continues onward.

Logic analyzers (LAs) have been used to capture and review the bit streams; however, they are general-purpose, cumbersome, and limited in the amount of data represented. The evolution of the LA is the protocol analyzer. These tools are purpose-built and tap into links, capturing signals and data transiting a specific communication channel.

Among the categories of Ethernet test-and-measurement tools, both the physical-layer tools and the logic analyzers remain largely unchanged except in how they capture higher-bit-rate data streams.

The real changes have been occurring within the protocol- and software-specific spaces. A whole host of new offerings are hitting the market. Guidance on how to select and integrate the right tools tends to be lacking, though.

Fabric-management utilities are designed to assess the overall health of the network under observation and alert the operator to performance degradation. The drawback of these tools is their inability to adequately determine root cause: they tell you that you have a problem, but not the nature of that problem.
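A rough Python sketch shows why this kind of monitoring stops short of root cause. The `PortSample` record, the `degraded_ports` function, the port names, and the error-ratio threshold are all hypothetical, chosen only to illustrate the pattern of flagging a symptom from counters.

```python
from dataclasses import dataclass


@dataclass
class PortSample:
    """Hypothetical per-port counters collected over one polling interval."""
    port: str
    frames: int
    errored_frames: int


def degraded_ports(samples, max_error_ratio=1e-6):
    """Return the ports whose errored-frame ratio exceeds the threshold."""
    alerts = []
    for s in samples:
        if s.frames and s.errored_frames / s.frames > max_error_ratio:
            alerts.append(s.port)
    return alerts


samples = [
    PortSample("port-1", frames=10_000_000, errored_frames=2),
    PortSample("port-2", frames=10_000_000, errored_frames=250),
]
print(degraded_ports(samples))
```

The output names the port that is misbehaving, but nothing in the counters says whether the cause is a marginal cable, a bad optic, or a protocol fault. That question is left to the physical-layer and protocol tools.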

When combined with other tools—be they physical-layer tools, logic, or protocol tools—software tools create a robust environment for both development engineers and IT data-center managers to understand what their technology is doing. In other words, how is the technology responding to different influences from various vendors in the same environment?

A protocol analyzer, however, includes both a software utility and a hardware platform designed specifically for its intended interface. The protocol analyzer’s challenge is to capture a signal at the native bit rate without affecting the signal, which is the role of a “probing” technology. Protocol-specific tools are designed to capture the traffic on the link under test, decode it into human-readable form, and represent that information on a screen for comparison with the pertinent IEEE specification(s) or standard(s).
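The “decode into readable form” step can be illustrated with a small Python sketch that turns the first 14 bytes of a captured Ethernet frame into labeled fields. It assumes the capture starts at the destination address (preamble already stripped) and treats the two-byte field as an EtherType. The function names, the short EtherType table, and the sample frame are illustrative, not the behavior of any particular analyzer.

```python
import struct

# Small, non-exhaustive lookup table used only for this illustration.
ETHERTYPES = {0x0800: "IPv4", 0x0806: "ARP", 0x86DD: "IPv6"}


def format_mac(raw):
    """Render six raw bytes as a colon-separated MAC address."""
    return ":".join(f"{b:02x}" for b in raw)


def decode_ethernet_header(frame):
    """Decode destination, source, and type from the start of a frame."""
    if len(frame) < 14:
        raise ValueError("frame shorter than the 14-byte Ethernet header")
    # Network byte order: 6-byte destination, 6-byte source, 2-byte type.
    dst, src, ethertype = struct.unpack("!6s6sH", frame[:14])
    return {
        "dst": format_mac(dst),
        "src": format_mac(src),
        "ethertype": ETHERTYPES.get(ethertype, f"0x{ethertype:04x}"),
        "payload_len": len(frame) - 14,
    }


# Hypothetical captured frame: broadcast destination, made-up source, ARP.
captured = bytes.fromhex("ffffffffffff" "001122334455" "0806") + bytes(46)
print(decode_ethernet_header(captured))
```

A real analyzer does this at line rate in purpose-built hardware and continues up through the higher-layer protocols, but the principle is the same: raw bits in, named fields out, ready to be checked against the specification.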

Probing methodologies, especially at today’s data rates, must be accurate and transparent in order to be effective. They’re designed to capture representative snapshots of the actual transactions. Thus, the user of a probing methodology needs to understand how the front-end probe of the analyzer treats the signal. Does the analyzer passively tap the link or actively re-drive the signal? If the signal being tested is inherently poor and the testing tool reconditions it for quality, it may change the dynamic of the link being tested.

The Feedback Loop

The test tool suite provides the foundation for lifecycle and iterative support of products in the market and those yet to come. Everyone favors fewer support calls and swift resolution to those that occur. Does the test environment offer the ability to effectively re-create the customer’s condition to diagnose the problem and, once a remedy is proposed, test the solution? Ideally, the validated solutions are returned to the design teams to be considered in the next iteration of a product, with the goal of reducing future support needs and improving the bottom line.

The Plugfest Test

The Ethernet Alliance is dedicated to the continued success and advancement of Ethernet technologies. We do this in a variety of ways, such as consensus building, education (in articles such as this one), and facilitating interoperability testing environments where member companies may validate their offerings.

To that end, the Ethernet Alliance sponsors “plugfests”: real-world, vendor-neutral events where organizations gather to ensure interoperability between equipment designed to the Ethernet standard, among other things, and where test-and-measurement tools are applied to assess component and network performance. This helps confirm that the specification, as written, took into account the ability to do test and measurement. It also helps confirm that the test-and-measurement people understand the specification well enough to devise the tools that will assess whether components, systems, and networks meet it.

Everyone in the Ethernet ecosystem has a stake in the development and use of effective test-and-measurement tools and, as Ethernet speeds increase for use in existing and new applications, it’s important to understand that T&M tools and their application are evolving as well. It’s often said, “the strength of a standard lies in one’s ability to validate it through measurement,” and that’s what’s happening in the Ethernet-related test-and-measurement space today.

David J. Rodgers is on the board of directors of the Ethernet Alliance and senior product marketing manager at Teledyne LeCroy.

About the Author

David J. Rodgers

Ethernet Alliance President and Events & Conferences Chair, Ethernet Alliance

David J. Rodgers is an industry professional with more than 35 years of experience, primarily focused on the test-and-measurement market. He is the Ethernet Alliance’s President and Events & Conferences Chair. His experience encompasses a comprehensive background in business and program management, and product development of serial protocol test-and-measurement solutions. He possesses wide-ranging serial communications protocol and interconnect validation experience. Most recently, he has concentrated on deploying and marketing a broad range of high-speed serial analysis test-and-measurement products.

David currently represents EXFO for High-Speed electro-optical Test Technologies including Ethernet and Fibre Channel in various protocol industry groups, including the IEEE and T11 standards bodies and the Ethernet Alliance and the Fibre Channel Industry Association. He’s an original member of the USB Implementers Forum and one of the pioneer marketers of USB protocol analyzers.