What you’ll learn:

- One size doesn’t fit all: Why image sensors can’t be selected based on a few physical parameters.

- Misconceptions surrounding pixel size, resolution, read noise, SNR, and more.

- Why industry collaboration will be key to powering tomorrow’s intelligent sensing era.

Today, many electronic devices, from the smartphone in your pocket to the electric vehicle you drive, contain anywhere from 3 to 10 image sensors that provide new features and power ever-more intelligent applications. Recent advances in semiconductor technology have revolutionized how we see and capture the world around us.

Image sensors are a big part of this sensor revolution. From drones and advanced driver-assistance systems (ADAS) to machine-vision and medical applications, image sensors have propelled wider use of image data across numerous market segments. The right choice of image sensor determines accurate inspection, depth sensing, object recognition, and tracking.

With the global image-sensor market set to deliver record revenues of around US$32.8 billion by 2027 at a CAGR of 8.4%, competition for advanced image sensors is increasing. Thus, it’s crucial for semiconductor suppliers such as onsemi to accelerate sensing innovation and challenge the status quo. As companies look to scope out sensor requirements for applications five years from now, several misperceptions in the market persist.

Read on as I debunk 11 image-sensor myths, from the hype around split-pixel designs and global shutter sensors for in-cabin systems to the assumption that sensors for human viewing can also be used for machine-vision applications.

1. Image sensors are based on digital logic and should follow Moore’s Law.

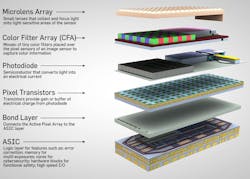

Many people think that image-sensor performance and cost should mimic the trends followed by digital devices like processors. Moore’s Law describes the pace at which transistors shrink, roughly doubling performance and halving cost every 18 to 24 months. Although image sensors contain digital logic that scales with technology, they also contain significant analog functions, as well as the pixels themselves, that don’t scale with the same trends.

While CMOS image sensors do have an increasing amount of digital logic that enables them to perform certain functions better, such as signal processing, the raw image quality is heavily dominated by several analog performance metrics. For example, quantum efficiency measures how efficiently incoming light is converted to signal data; analog-to-digital converter (ADC) performance affects frame rate and image quality; and noise metrics impact image quality.

In addition, the photodiodes in an image sensor can only capture the available light from the scene, so a smaller pixel will receive fewer photons. To produce an equally good image in low light, it must therefore deliver higher sensitivity per unit area and lower noise. Although Moore’s Law has been surprisingly accurate over decades, those dynamics simply don’t apply the same way to image sensors.
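The photon argument can be put into rough numbers with a simple shot-noise model. The sketch below is illustrative only: the function name `pixel_snr`, the photon flux, and the quantum-efficiency and read-noise values are assumptions chosen for the example, and the model ignores dark current and other noise sources.

```python
import math


def pixel_snr(photons_per_um2, pixel_pitch_um, quantum_efficiency, read_noise_e):
    """Estimate single-pixel SNR from photon shot noise plus read noise.

    Simplified model (assumption): dark current and fixed-pattern noise are ignored.
    """
    area_um2 = pixel_pitch_um ** 2
    # Electrons collected = photons hitting the pixel * conversion efficiency.
    signal_e = photons_per_um2 * area_um2 * quantum_efficiency
    # Shot-noise variance equals the signal; read noise adds in quadrature.
    noise_e = math.sqrt(signal_e + read_noise_e ** 2)
    return signal_e / noise_e


# Hypothetical low-light scene: 50 photons per square micron per exposure.
FLUX = 50.0

snr_large = pixel_snr(FLUX, 3.0, 0.60, 2.0)            # 3.0 um pixel
snr_small = pixel_snr(FLUX, 1.5, 0.60, 2.0)            # 1.5 um pixel, same analog performance
snr_small_improved = pixel_snr(FLUX, 1.5, 0.90, 1.0)   # 1.5 um pixel, higher QE, lower noise

for label, snr in [
    ("large", snr_large),
    ("small", snr_small),
    ("small, improved", snr_small_improved),
]:
    print(f"{label:16s} SNR = {snr:5.1f} ({20 * math.log10(snr):.1f} dB)")
```

In this model, the smaller pixel recovers part of the gap only by improving its analog characteristics, not through process scaling alone.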

2. Pixel size is the most important metric.

In the image sensor world, a common fallacy is that a larger pixel size correlates to better image quality. While pixel performance across different light conditions is important and larger pixel sizes have more area available to gather light, they don’t always result in better image quality. Image quality is the result of several factors, including the resolution and pixel-noise metrics.

For instance, a sensor with smaller pixels might achieve better results than a sensor with the same optical area, but with larger pixels. Small pixels tend to have lower dark signal nonuniformity (DSNU); at higher temperatures, the DSNU limits low-light performance. Therefore, a smaller pixel sensor can outperform one with larger pixels in some cases.

Whether for a commercial, consumer, or light-industrial device, camera size depends on the application. While pixel size is a factor, its importance often gets overplayed. In practice, it’s only one element of a mix of characteristics that needs to be considered. When we design image sensors at onsemi, the requirements of the target application determine the optimal balance of speed, sensitivity, and image-quality characteristics to achieve leadership performance.

3. Resolution is the main metric to consider when choosing an image sensor.

System engineers often equate higher resolution to image quality. While higher resolution can provide sharper edges and finer detail, it needs to be balanced with metrics such as speed/frame rate, sensor size, pixel performance, and sensor power. Where higher resolution is required, a smaller pixel can enable that kind of resolution while also keeping the lens and camera size manageable to meet camera cost and size goals.
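The link between resolution, pixel size, and camera size comes down to simple geometry. The following sketch assumes a hypothetical helper, `sensor_size_mm`, and example pixel pitches that are not taken from any particular product; it only shows how the active-array dimensions follow from pixel count and pitch.

```python
import math


def sensor_size_mm(h_pixels, v_pixels, pixel_pitch_um):
    """Return (width, height, diagonal) of the active pixel array in millimeters."""
    width_mm = h_pixels * pixel_pitch_um / 1000.0
    height_mm = v_pixels * pixel_pitch_um / 1000.0
    return width_mm, height_mm, math.hypot(width_mm, height_mm)


# Hypothetical comparison: the same 3840 x 2160 resolution at two pixel pitches.
for pitch_um in (3.0, 2.1):
    w, h, d = sensor_size_mm(3840, 2160, pitch_um)
    print(f"{pitch_um} um pitch: {w:.2f} x {h:.2f} mm, diagonal {d:.2f} mm")
```

Because the diagonal scales linearly with pixel pitch, a smaller pixel at the same resolution permits a proportionally smaller image circle, and therefore a smaller lens.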

Today, some customers jump to a conclusion about what resolution they need before analyzing all of the tradeoffs. An analysis should begin with the core requirements, including lens size or camera body size constraints, the system’s goal, and what sensor parameters would best address those issues. Such an analysis may create more sensor options to optimize the overall system, rather than narrowing selection by a physical parameter first and then considering only those options.

Many factors affect pixel performance under different lighting conditions, so while resolution is important, it’s not a standalone metric.

4. Power-supply design doesn’t affect image quality.

A common misconception is that if there’s a great sensor in place, one can save a few dimes by compromising on the power-supply design because it’s a different system component. This notion is flawed—noise from the power-supply components can show up as image artifacts, impairing the final image quality.

At the core, image sensors are analog devices that count photons, often in low-light conditions. If the supply voltage is “dirty”—i.e., having little spikes or voltage transients rather than a smoother voltage level—it can show up in the final image output. Though sensors are designed for the power-supply voltage to fluctuate within a tolerance range, if voltage spikes or sharp drops travel to the image sensor’s power pins, the image quality can degrade.

5. Average SNR is what “makes or breaks” the image quality.

Signal-to-noise ratio (SNR) is the average ratio of signal power to the noise power. But average SNR is less important than consistent and high SNR across all parts of an image, especially when it comes to varying light levels (shadows, bright sunshine, or a dark night).

While more light is better, it’s key for the SNR value to remain high across a range of dark and light areas throughout the image. For instance, in perception systems that use machine vision, objects may be missed or misclassified where the SNR is low.

Average SNR is often marketed as a priority metric for image sensors. Teams will quote performance statistics and cherry-pick parts of their image where the SNR is good, with the assumption that it reflects the overall image quality across all lighting conditions.

It’s important to look beyond the “average” metric. A large number of sensor areas with very high SNR can boost the average, while hiding critical areas that have very low SNR.
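A small numerical example shows how an average can hide a weak spot. The per-region SNR values, the 20-dB usability threshold, and the `snr_report` helper below are all hypothetical; they only illustrate the arithmetic.

```python
import statistics


def snr_report(region_snr_db, min_usable_db):
    """Summarize per-region SNR: the mean, the worst region, and regions below a threshold."""
    weak = [i for i, snr in enumerate(region_snr_db) if snr < min_usable_db]
    return {
        "mean_db": statistics.fmean(region_snr_db),
        "worst_db": min(region_snr_db),
        "weak_regions": weak,
    }


# Hypothetical SNR values (dB) for eight regions of one frame.
# Region 5 stands in for a critical area with very low SNR.
regions_db = [42.0, 41.0, 40.0, 43.0, 39.0, 12.0, 41.0, 40.0]
report = snr_report(regions_db, min_usable_db=20.0)
print(report)
```

Here the mean stays well above the threshold even though one region falls far below it, which is why the worst-case value deserves as much attention as the average.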

For example, in an automotive use case, transition points between shorter and longer exposures can create low SNR areas: ADAS systems or self-parking systems could have lower performance and viewing systems could lose color fidelity or have brightness levels that look unnatural. Super-exposure pixel technology can address these “hidden” challenges.

6. Split-pixel designs offer a good balance of tradeoffs.

There are better solutions to the problem that split-pixel designs are meant to address. Certain market players pursue the architectural concept of “split-pixel designs” for their image sensors because they can avoid the challenge of creating more capacity for the photodiode to collect electrons before it “fills up” for high-dynamic-range (HDR) imaging.

In split-pixel designs, the area dedicated to one pixel is divided into two parts: a big pixel covers most of the area and a small pixel covers a much smaller portion of the area. The big pixel collects light more quickly and, thus, saturates in bright conditions. On the other hand, the small pixel can be exposed for a longer time and not saturate because less area is exposed. This creates more dynamic range, the large pixel working in low-light conditions and the small pixel in bright conditions.

One downside is that less pixel area is available in low-light conditions. Another is that significant aliasing or color bleed between photodiodes can occur because of the chief ray angle of the lens interacting with the two different-sized pixels in the array.

At onsemi, we take a different approach, adding a special area connected to the pixel where the signal or charge can overflow. Think of this as a small cup that overflows into a bigger bucket. The signal in the small cup is easy to read with very high accuracy (excellent low-light performance), and the bigger bucket holds everything that overflowed (extending the dynamic range).

With this approach, the entire pixel area is available for low-light conditions and doesn’t saturate in brighter areas, retaining critical features like color information and sharpness. onsemi’s Super-Exposure technology enhances image quality across high dynamic range scenes for human and machine vision.
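The cup-and-bucket analogy can be expressed as a toy model. This is only a sketch of the analogy, not a description of how onsemi's pixel is actually implemented; the function `overflow_pixel` and the capacities are assumptions made for illustration.

```python
def overflow_pixel(generated_e, cup_capacity_e, bucket_capacity_e):
    """Split photo-generated charge between a small 'cup' and an overflow 'bucket'.

    Toy model (assumption): charge fills the cup first, and any excess spills
    into the bucket until the bucket is also full.
    """
    cup_e = min(generated_e, cup_capacity_e)
    bucket_e = min(max(generated_e - cup_capacity_e, 0), bucket_capacity_e)
    return cup_e, bucket_e


# Hypothetical capacities, in electrons.
CUP_E = 10_000
BUCKET_E = 200_000

for scene, electrons in [("dim", 500), ("bright", 50_000), ("very bright", 500_000)]:
    cup, bucket = overflow_pixel(electrons, CUP_E, BUCKET_E)
    print(f"{scene:12s} cup={cup:>7} e-  bucket={bucket:>7} e-  total={cup + bucket:>7} e-")
```

In the dim case, all of the charge stays in the cup, where it can be read precisely. In the bright case, the bucket preserves signal that a cup-only pixel would have clipped.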

7. Image sensors are always difficult to synchronize in a multi-camera system.

Contrary to popular belief, if the sensors have the right features and good application notes, several options exist to make them more closely synchronized. Synchronization ensures that multiple image sensors are sampling the scene at the same time.

Whether it’s a stereo-camera system for the front-facing ADAS or a surround-view camera that gives you a bird’s-eye view, it’s essential for all cameras to capture the image at the same time (in real time). Effectively, the cameras should act as one camera with different fields of view. This enables moving objects to be captured at the same place in the scene, resulting in fewer artifacts and the correct depth from stereo cameras.

Many sensors have input triggers to enable stereo or multi-camera systems to synchronize images, especially in ADAS, where machine vision plays a big role. The triggers can be hardware- or software-based.

A single hardware trigger using pulse-width modulation (PWM) can control all cameras in a multi-camera system. This ensures that each camera image will be synchronized. Some vendors attempt to avoid the added cost of the PWM control module by using a software trigger, but software triggers can suffer from latency issues. Various software schemes are employed to overcome these challenges, which is where the “synchronization is difficult” myth comes from.

8. Human-viewing sensors work well for machine vision.

Not always. As viewing cameras become more popular in the automotive industry, automakers are keen to add machine-vision sensors for features such as rear emergency braking or object collision warnings.

However, many don’t want an extra camera added for every single function and instead prefer a single camera to capture both types of images needed. More cameras increase system costs, and it’s challenging to integrate them into the vehicle design because they take up space and the lenses must be pointed in the right directions.

For machine-vision applications, though, image quality is critical due to the increasing sophistication of features like ADAS. Having reliable image quality across all use cases and lighting conditions in such applications is integral for the functionality to be available and effective.

An example is the emergency braking or object collision warning feature in a car. If the choice is made to use a single, typical human-viewing sensor, you may be compromising the performance of the safety system.

If a human-viewing system performs well most of the time but occasionally underperforms under certain conditions, the user will readily notice the discrepancy and judge its effectiveness. A safety feature, by contrast, may not alert the driver that it’s functioning at reduced effectiveness unless it experiences a system error.

Machine-vision sensors are tuned differently and have different figures of merit. The same image input that’s fed into a machine-vision algorithm can’t be used in human-viewing applications. Machine-vision algorithms respond to mathematical differences in the pixel data, whereas humans have a nonlinear visual response to brightness and color changes.
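The difference between a linear, numerical view of pixel data and a nonlinear visual response can be illustrated with a gamma curve. The sketch below uses a plain power-law gamma of 2.2, a common approximation of display encoding, as a stand-in for nonlinear perception; that choice and the sample pixel values are assumptions for this example.

```python
def gamma_encode(linear, gamma=2.2):
    """Map a linear intensity in [0, 1] to a display-style nonlinear value."""
    return linear ** (1.0 / gamma)


# Two pairs of linear pixel values with the same numerical difference (0.05).
dark_pair = (0.05, 0.10)
bright_pair = (0.85, 0.90)

dark_step = gamma_encode(dark_pair[1]) - gamma_encode(dark_pair[0])
bright_step = gamma_encode(bright_pair[1]) - gamma_encode(bright_pair[0])

print("linear step: 0.05 in both cases")
print(f"encoded step in shadows:    {dark_step:.3f}")
print(f"encoded step in highlights: {bright_step:.3f}")
```

An algorithm working on linear data treats both steps as equal, while the encoded values meant for display weight the shadow step far more heavily.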

To cover both human and machine viewing requirements, a sensor must be designed with both applications in mind. On that front, onsemi has applied its experience in developing image sensors for automotive machine vision and adapted that technology to cover both feature sets.

9. Machine-vision sensors also work well in human-viewing applications.

Not necessarily. As with the previous myth, it’s important to understand that even if a machine-vision sensor performs exceptionally in all use cases, it’s not designed to produce the same results for human-viewing applications. Human- and machine-vision sensors have two very different sets of priorities and expectations.

As more cameras are added to vehicles, automakers and OEMs want more features out of each camera. While rearview cameras are mandated in many countries, new systems are adding an “object detection” capability, such as an ADAS feature that enables rear emergency braking, to this “viewing” use case.

It’s crucial that the sensor and camera are designed for both use cases (sensing and viewing). The sensor requires the capability to produce two output images from a single “frame”—one optimized for human viewing and the second for machine vision. Typically, the machine-vision image would be sent to the vision processor with minimal processing and the human image would be processed through an image signal processor to make it ready to display on a screen.

10. Read noise is really important.

For most modern sensors, the read noise is low enough that other parameters will dominate. Whether it’s a perception or a viewing system, for many applications at typical operating temperatures the DSNU will limit low-light performance.

DSNU is a fixed-pattern noise in the image that’s caused by the average dark current in each pixel differing from that of the other pixels. Modern sensors correct the average dark current in the output, but they can’t correct this pixel-by-pixel variation from that average. DSNU is highly dependent on the junction temperature of the sensor silicon.
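A short simulation shows why subtracting the average dark level leaves the pixel-to-pixel pattern behind. Everything here is a hedged sketch: the Gaussian spread of dark signal, the helper names, and especially the rule of thumb that dark current doubles every 7°C are assumptions for illustration, since the actual doubling interval depends on the sensor.

```python
import random
import statistics


def dark_frame(num_pixels, mean_dark_e, nonuniformity, seed=0):
    """Simulate per-pixel dark signal (electrons) with a fixed pixel-to-pixel spread."""
    rng = random.Random(seed)
    sigma = nonuniformity * mean_dark_e
    return [max(0.0, rng.gauss(mean_dark_e, sigma)) for _ in range(num_pixels)]


def subtract_average(frame):
    """Remove the average dark level, as a global dark-level correction would."""
    avg = statistics.fmean(frame)
    return [p - avg for p in frame]


def scale_with_temperature(frame, delta_t_c, doubling_interval_c=7.0):
    """Scale dark signal for a temperature rise (assumed doubling interval)."""
    factor = 2.0 ** (delta_t_c / doubling_interval_c)
    return [p * factor for p in frame]


cool = dark_frame(10_000, mean_dark_e=10.0, nonuniformity=0.2)
hot = scale_with_temperature(cool, delta_t_c=35.0)

# The residual spread after average subtraction is the DSNU in this model.
dsnu_cool = statistics.pstdev(subtract_average(cool))
dsnu_hot = statistics.pstdev(subtract_average(hot))
print(f"DSNU cool: {dsnu_cool:.2f} e-, DSNU hot: {dsnu_hot:.2f} e-")
```

The corrected frames have zero mean in both cases, yet the residual pattern grows with temperature in step with the dark current itself.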

11. Global shutter systems are required for in-cabin systems.

There’s a certain market sentiment that in-cabin systems need a global shutter sensor. Global shutter systems expose all pixels in the image simultaneously and stop all of the pixels from gathering light at the same time—as if a mechanical shutter is used to physically block the light.

The sensor captures the value of each pixel and then stores it in a memory space within the pixel. That memory must be shielded from light because many systems today don’t have a mechanical shutter due to cost, complexity, and reliability issues. In this case, the readout can be a little slower, and its efficiency varies, because all of the data can’t be read at the same time; it’s streamed through an interface to the vision processor.

Global shutter system sensors are often used for driver-monitoring applications and considered a necessity. However, rolling shutter sensors work well for occupant monitoring because they’re more cost-effective for a given resolution and are capable of higher dynamic range.

In a driver-monitoring system, the IR LED intensity or sensor exposure time can be adjusted to properly expose the driver’s eyes. However, to image all of the occupants in the cabin, more dynamic range is needed because some passengers might be in the shade while others might be in the direct sunlight.

Also, occupant-monitoring systems don’t need to stop ultra-fast motion, unlike driver-monitoring systems that measure how fast an eyelid moves to better detect a drowsy driver. New sensors and AI software may eventually enable more driver-monitoring functions with fast rolling shutter sensors as well. All of the in-cabin functions could then be addressed with a single system and a single sensor technology, delivering greater functionality at lower cost than multiple separate systems.
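To gauge how fast a rolling shutter needs to be, it helps to estimate the time offset between the first and last rows, since a rolling shutter sensor starts exposing its rows one after another rather than all at once. The row count and line time below are hypothetical, and `rolling_shutter_skew_ms` is an illustrative helper rather than a vendor formula.

```python
def rolling_shutter_skew_ms(num_rows, line_time_us):
    """Time offset (ms) between the exposure start of the first and last rows."""
    return (num_rows - 1) * line_time_us / 1000.0


# Hypothetical sensor: 1080 rows with a 15-microsecond line time.
skew_ms = rolling_shutter_skew_ms(num_rows=1080, line_time_us=15.0)
print(f"Top-to-bottom skew: {skew_ms:.3f} ms")

# Halving the line time (a faster readout) halves the skew.
fast_skew_ms = rolling_shutter_skew_ms(num_rows=1080, line_time_us=7.5)
print(f"With faster readout: {fast_skew_ms:.3f} ms")
```

Motion that is slow relative to this skew, such as a passenger shifting in a seat, is captured with little distortion, while faster motion calls for a shorter line time or a global shutter.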

About the Author

Geoff Ballew

Senior Director of Marketing, Intelligent Sensing Group, onsemi

Geoff Ballew leads the Group Marketing for the Intelligent Sensing Group (ISG) at onsemi. The ISG division offers a broad portfolio of sensing products optimized for automotive, industrial, and commercial markets with a focus on Intelligent Sensing applications, where sensor data enables market-changing machine-vision and AI capabilities.

Prior to onsemi, Mr. Ballew ran product lines for high-performance computing, high-performance graphics, and automotive processors at NVIDIA and learned valuable lessons at very small startup companies in Silicon Valley.