Build an Efficient, Advanced ADAS for a Safer Driving Experience


The objective of all drivers is to drive safely to reduce accidents. The question is: “How are we going to do it?”

New automobiles today are equipped with an increasing number of sensors, processors, radars, and cameras that support advanced driver-assistance systems (ADAS). On that front, companies like Texas Instruments (TI) can help with the design of adaptable, advanced ADAS for a safer, more automated driving experience.

To deliver on these ambitions, driver-assistance systems must quickly, securely, and accurately process massive amounts of data and communicate clearly with the driver. Fostering a human connection to driving through ADAS communications is an important step toward reducing risk factors on the road (Fig. 1). To enhance safety, ADAS circuitry must therefore work much as the central nervous system does when it guides the body's everyday movements.

TI's Ethernet PHY, Controller Area Network (CAN), FPD-Link, and PCIe communications technologies can increase vehicle safety while reducing system size and cost, optimizing high-speed data transfer, and meeting International Organization for Standardization (ISO) 26262 functional-safety requirements.

Vision Perception

Much of the progress in vehicle sensing has come from cameras. Tesla, for example, equips its cars with eight surround cameras that provide 360 degrees of visibility around the car at up to 250 meters of range. The car processes its camera data, identifies important elements of its surroundings, and fuses them with data from other sensors.

Vision-based perception technology can be used in ADAS applications to interpret the environment and provide information to drivers, helping to move toward a collision-free future. In fact, in the world of autonomous vehicles, computer vision is often referred to as “perception.” That’s because cameras often are the primary sensor used by a vehicle to perceive its environment (such as a front-view camera for collision avoidance and pedestrian recognition, a rear-view camera for backup protection, and surround-view cameras for parking assistance).

In the last few years, deep neural networks have emerged as the dominant approach to processing camera video and images. These networks learn from data. To teach a deep neural network what a stop sign looks like, for example, engineers feed it many labeled images of stop signs. Gradually, it learns to recognize one.
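The learn-from-examples idea can be illustrated at toy scale. The sketch below is not a deep neural network and not how SVNet or any production system works: it trains a single logistic neuron with gradient descent on made-up data, where the hypothetical features `redness` and `octagonality` stand in for what a real network would learn directly from pixels.

```python
import math
import random

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train(samples, labels, epochs=2000, lr=0.5, seed=0):
    """Fit one logistic neuron with stochastic gradient descent on log loss."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in samples[0]]  # small random starting weights
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # derivative of log loss with respect to the neuron's input
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def predict(w, b, x) -> bool:
    """True means the neuron thinks the input is a stop sign."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5

# Hypothetical (redness, octagonality) pairs; label 1 = stop sign, 0 = something else.
samples = [(0.9, 0.8), (0.8, 0.9), (0.95, 0.85),
           (0.1, 0.2), (0.2, 0.1), (0.9, 0.1), (0.1, 0.9)]
labels = [1, 1, 1, 0, 0, 0, 0]

w, b = train(samples, labels)
print(predict(w, b, (0.85, 0.9)))  # unseen red octagon
print(predict(w, b, (0.1, 0.1)))   # unseen, neither red nor octagonal
```

Real perception networks replace the two hand-picked features with millions of learned parameters operating on raw images, but the loop is the same in spirit: predict, measure the error against the label, and nudge the weights.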

Anyone wondering what deep learning could do for ADAS object recognition and classification should look at StradVision's SVNet. It runs on the high-performance TDA4AL-Q1 system-on-chip (SoC) and uses camera data to recognize and classify objects.

SVNet is a lightweight solution built on compact, deep-neural-network (DNN)-based machine-learning algorithms that mimic the brain's information processing. These algorithms can minimize the computation required per frame, which reduces the memory and power needed for that computation and maximizes efficiency.
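Per-frame cost matters because the processor pays it for every frame from every camera. A rough budget is operations per frame × frame rate × number of cameras. The figures below (5 billion operations per frame, 30 frames/s, eight cameras) are illustrative assumptions, not SVNet or TDA4AL-Q1 specifications.

```python
def sustained_ops_per_second(ops_per_frame: float, fps: float, cameras: int) -> float:
    """Rough compute budget: each camera delivers `fps` frames, each costing `ops_per_frame`."""
    if ops_per_frame < 0 or fps < 0 or cameras < 0:
        raise ValueError("inputs must be non-negative")
    return ops_per_frame * fps * cameras

# Hypothetical numbers for illustration only.
baseline = sustained_ops_per_second(5e9, 30, 8)
# Halving the per-frame cost halves the sustained load at the same frame rate and camera count.
compact = sustained_ops_per_second(2.5e9, 30, 8)
print(f"baseline: {baseline / 1e12:.1f} TOPS, compact: {compact / 1e12:.1f} TOPS")
```

Because the load scales linearly with per-frame cost, any saving per frame carries straight through to sustained compute, and with it to memory traffic and power.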

Delivering Current Beyond 100 A to an ADAS Processor

ADAS isn't just about sensor technology. Moving toward higher levels of autonomy requires more real-time computing power to handle higher resolutions and respond quickly. Embedding features such as artificial intelligence further increases the need for more powerful, and more power-hungry, ADAS SoC processors.

Meeting these needs requires ECUs that can deliver more than 100 A to the processor. The design challenges that come with higher power include achieving efficiency on high-current rails, controlling thermal performance and load transients at full load, and meeting functional-safety requirements.

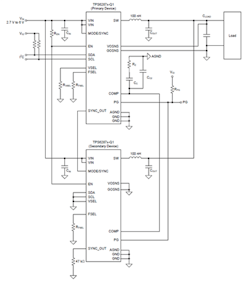

The TI TPS62876-Q1 buck converter helps designers address currents beyond 30 A with a novel stackability feature (Fig. 2). Stacked, these converters reach the high currents needed to power an SoC such as the TDA4VH-Q1 multicore processor.
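A back-of-the-envelope sizing shows why stacking matters for a rail above 100 A. Assuming ideal current sharing and taking the 30-A per-device figure above at face value (the datasheet governs the actual rating and how many devices may be stacked), the device count is the load current divided by the usable current per device, rounded up. The `derating` factor is a hypothetical design margin, not a TI recommendation.

```python
import math

def devices_needed(load_current_a: float, device_rating_a: float, derating: float = 1.0) -> int:
    """Smallest number of equally sharing converters whose combined usable current covers the load."""
    if load_current_a <= 0 or device_rating_a <= 0:
        raise ValueError("currents must be positive")
    if not 0 < derating <= 1:
        raise ValueError("derating must be in (0, 1]")
    usable_per_device = device_rating_a * derating
    return math.ceil(load_current_a / usable_per_device)

print(devices_needed(100, 30))       # 100 / 30 = 3.33, so 4 devices
print(devices_needed(110, 30, 0.8))  # 110 / 24 = 4.58, so 5 devices
```

Rounding up, rather than to the nearest integer, keeps the stack from running every phase above its usable rating.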

Stacking uses a daisy-chain connection. The primary device manages a single compensation network, POWERGOOD pin, ENABLE pin, and I2C interface for the whole stack.

For optimal current sharing, all devices in the stack must have the same current rating and be programmed to the same switching frequency and the same current level. Stacking these devices not only helps power the core of next-generation ADAS SoCs, but also improves thermal performance by spreading the load current, and the resulting heat, across several devices. It increases efficiency as well.
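The thermal benefit of sharing follows from conduction loss. If N devices split a load current I equally and each phase has the same effective resistance R, each carries I/N, and the total conduction loss is N·(I/N)²·R = I²R/N. Four phases therefore dissipate a quarter of what one phase would, spread over four packages. The sketch below assumes ideal sharing and counts conduction loss only (no switching or gate-drive loss). The `PhaseSettings` fields, the switching-frequency values, and the 1-mΩ resistance are hypothetical placeholders for the matched settings described above, not TPS62876-Q1 register names or specifications.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PhaseSettings:
    # Hypothetical fields mirroring the settings that must match across the stack.
    current_rating_a: float
    switching_freq_khz: float
    current_level_a: float

def mismatched_phases(stack: list[PhaseSettings]) -> list[int]:
    """Return indices of devices whose settings differ from the primary (index 0)."""
    if not stack:
        raise ValueError("stack is empty")
    primary = stack[0]
    return [i for i, phase in enumerate(stack) if phase != primary]

def conduction_loss_w(total_current_a: float, n_phases: int, r_phase_ohm: float) -> float:
    """Total I^2 * R loss with the load split equally across n_phases identical phases."""
    if n_phases < 1:
        raise ValueError("need at least one phase")
    i_phase = total_current_a / n_phases
    return n_phases * i_phase ** 2 * r_phase_ohm

stack = [PhaseSettings(30, 1500, 30) for _ in range(4)]
stack[2] = PhaseSettings(30, 2250, 30)  # one device left at a different switching frequency
print(mismatched_phases(stack))          # [2]
print(conduction_loss_w(100, 1, 0.001))  # single phase
print(conduction_loss_w(100, 4, 0.001))  # four phases: one quarter of the single-phase loss
```

Comparing every device against the primary mirrors the daisy-chain arrangement, in which the primary sets the reference for the rest of the stack.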

Summary

Fully self-driving cars may still be a thing of the future, but the safety impact of automation is already being felt. ADAS technology enables cars to act much as a driver would, sensing weather conditions and detecting objects on the road, and to make decisions in real time to improve safety. TI's portfolio of analog and embedded processing products and technologies gives automotive engineers tools that can fuel vehicle innovation.

TI's interactive system block diagrams guide you through the company's catalog of integrated circuits, reference designs, and supporting content to help you design efficient ADAS functionality for any vehicle.