Whether it’s storage, computation, or bandwidth, there’s never enough. Still, digital trends show continued improvements across the board. Designers will be able to get more memory and processing muscle for their current requirements at lower cost, all while using less power.

More Storage

There will be plenty of action in storage this year. DDR4 will move from the labs to production. It doubles speed and triples density while cutting power by about 20%. The new crop of processors will demand DDR4 memory, especially for high-end servers where there never seems to be enough RAM or speed. DDR3 will still be the main memory for embedded applications.
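The speed claim is easier to see as peak module bandwidth. A standard DIMM has a 64-bit (8-byte) data bus, so peak bandwidth is simply the transfer rate times 8 bytes. The sketch below assumes the illustrative speed grades DDR3-1600 and DDR4-3200; parts shipping early in DDR4's life may run at lower grades.

```python
# Peak theoretical bandwidth of a standard DIMM.
# Assumes a 64-bit data bus; ECC bits and protocol overhead are not counted.
BUS_WIDTH_BYTES = 8

def peak_bandwidth_gbytes(mega_transfers_per_s: int) -> float:
    """Return peak bandwidth in Gbytes/s (10**9 bytes/s)."""
    return mega_transfers_per_s * 1e6 * BUS_WIDTH_BYTES / 1e9

ddr3 = peak_bandwidth_gbytes(1600)   # DDR3-1600 (illustrative grade)
ddr4 = peak_bandwidth_gbytes(3200)   # DDR4-3200 (illustrative grade)
print(f"DDR3-1600: {ddr3:.1f} Gbytes/s")
print(f"DDR4-3200: {ddr4:.1f} Gbytes/s")
print(f"ratio: {ddr4 / ddr3:.1f}x")
```

Sustained bandwidth is always lower than this peak because of refresh, bank conflicts, and command overhead.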

On the horizon is stacked memory like the Hybrid Memory Cube (see “Hybrid Memory Cube Shows New Direction For High-Performance Storage” at electronicdesign.com). It significantly improves bandwidth and capacity, but its high-speed serial links require a different memory controller interface, and DDR4 is where the current crop of processors is headed. The memory bus is the last bastion of parallel interfaces on processors these days, as other interfaces have moved to high-speed serial links like PCI Express.

DRAM dual-inline memory modules (DIMMs) may find themselves sharing space with flash-based DIMMs such as those from Diablo Technologies, whose Memory Channel Storage DIMMs plug into DDR3 sockets to allow terabytes of flash memory to be placed on the processor’s memory channel (see “Memory Channel Storage Puts SSD Next To CPU” at electronicdesign.com).

These DIMMs target enterprise platforms, but they are interesting for embedded applications as well. On the enterprise side, the challenge is the high demand for more RAM, but many applications also benefit from large amounts of non-volatile storage. Flash on the memory channel eliminates the overhead and bandwidth limitations of PCI Express.

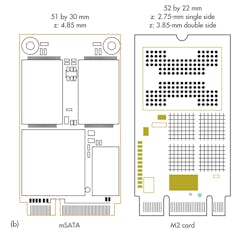

PCI Express will deliver more flash bandwidth and capacity. Standards like NVM Express (NVMe), SATA Express, and SCSI Express will be found on boards, on expansion cards, and in disk-drive form factors (see “Non-Volatile DIMMs And NVMe Spice Up The Flash Memory Summit” at electronicdesign.com). New form factors like the m.2 Next Generation Form Factor (NGFF) are designed to provide high-speed, high-capacity, replaceable storage options for mobile and embedded platforms (Fig. 1). The m.2 NGFF competes with existing mSATA modules, but its modules come in SATA or PCI Express versions that differ by the number of notches in the edge connector.

Flash memory densities continue to grow. Even enterprise systems have been moving from more expensive single-level cell (SLC) flash to multilevel cell (MLC) and triple-level cell (TLC) NAND flash storage. The problem is that error rates rise with density while write endurance (the number of program/erase cycles a cell can withstand) decreases. Memory controllers fill the gap with stronger error-correction techniques such as low-density parity check (LDPC) codes (see “Flash Controllers Get Better Efficiency With Low-Density Parity Check (LDPC)” at electronicdesign.com). LDPC requires more computational resources and can introduce more latency, so fast and efficient controller operation will be a necessity. On the plus side, the controllers hide the complexity of error correction, wear leveling, and other flash memory management chores.
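Production flash controllers typically use soft-decision LDPC decoders with large, carefully designed parity-check matrices. As a much simpler illustration of the idea, the sketch below runs a Gallager-style hard-decision bit-flipping decoder against a tiny parity-check matrix. The matrix `H` is an arbitrary toy example (the vertex-edge incidence matrix of the complete graph K4), not one used by any real controller.

```python
# Toy Gallager-style hard-decision bit-flipping decoder (illustrative only).
# Every bit sits in exactly two checks, and no two bits share more than one
# check, so any single-bit error gets more "votes" than any other bit.
H = [
    [1, 1, 1, 0, 0, 0],
    [1, 0, 0, 1, 1, 0],
    [0, 1, 0, 1, 0, 1],
    [0, 0, 1, 0, 1, 1],
]

def syndrome(word):
    """Return one parity result per check (0 = satisfied)."""
    return [sum(h * b for h, b in zip(row, word)) % 2 for row in H]

def bit_flip_decode(received, max_iters=10):
    word = list(received)
    for _ in range(max_iters):
        s = syndrome(word)
        if not any(s):
            return word, True          # all checks satisfied
        # Count how many failing checks each bit participates in.
        votes = [sum(s[i] for i in range(len(H)) if H[i][j])
                 for j in range(len(word))]
        worst = max(votes)
        # Flip every bit that sits in the largest number of failing checks.
        word = [b ^ 1 if v == worst else b for b, v in zip(word, votes)]
    return word, not any(syndrome(word))

codeword = [1, 1, 0, 1, 0, 0]           # satisfies all four checks
received = codeword.copy()
received[4] ^= 1                        # inject a single bit error
decoded, ok = bit_flip_decode(received)
print(decoded, ok)                      # [1, 1, 0, 1, 0, 0] True
```

Each iteration is a full pass over the checks, which hints at why LDPC decoding costs more computation and latency than simpler codes, especially once real matrices run to thousands of bits.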

Another interesting deviation from the norm is Seagate’s Kinetic hard drive, which is accessed directly over Ethernet (see “Object Oriented Disk Drive Goes Direct To Ethernet” at electronicdesign.com). The Kinetic forgoes the conventional SATA or SAS block-mode interface and replaces it with a pair of 1-Gbit/s Ethernet interfaces and an object-oriented key/value protocol. It targets “big data” enterprise applications that already use network-based storage. The drive handles data management of the variable-size objects and hides the underlying block architecture. This differs from iSCSI, which still exposes blocks over the network, and it avoids the file-system hierarchy and overhead of a NAS device.
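To show the difference between a block interface and an object interface, here is a minimal, hypothetical model of a drive that accepts variable-size key/value objects and internally maps them onto fixed-size blocks. The class and method names (`ObjectDrive`, `put`, `get`, `delete`) and the 4-Kbyte block size are assumptions for illustration; they are not Seagate's Kinetic protocol or API.

```python
BLOCK_SIZE = 4096  # hypothetical internal block size

class ObjectDrive:
    """Toy object store: clients see keys and values, never block numbers."""

    def __init__(self, num_blocks: int):
        self.blocks = [b""] * num_blocks
        self.free = list(range(num_blocks))
        self.index = {}  # key -> (list of block numbers, object length)

    def put(self, key: bytes, value: bytes) -> None:
        needed = max(1, -(-len(value) // BLOCK_SIZE))  # ceiling division
        reclaimable = len(self.index[key][0]) if key in self.index else 0
        if needed > len(self.free) + reclaimable:
            raise OSError("drive full")
        if key in self.index:
            self.delete(key)  # simple overwrite: release the old blocks first
        allocated = [self.free.pop() for _ in range(needed)]
        for i, blk in enumerate(allocated):
            self.blocks[blk] = value[i * BLOCK_SIZE:(i + 1) * BLOCK_SIZE]
        self.index[key] = (allocated, len(value))

    def get(self, key: bytes) -> bytes:
        allocated, length = self.index[key]
        return b"".join(self.blocks[b] for b in allocated)[:length]

    def delete(self, key: bytes) -> None:
        allocated, _ = self.index.pop(key)
        self.free.extend(allocated)

drive = ObjectDrive(num_blocks=16)
drive.put(b"sensor/2014-01-01", b"x" * 10000)   # spans 3 internal blocks
print(len(drive.get(b"sensor/2014-01-01")))     # 10000
print(len(drive.free))                          # 13
```

The host only ever names keys; allocation, layout, and free-space tracking stay inside the drive, which is the point of moving the object layer onto the device.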

More Computation

Storage isn’t the only place where there will be a lot of action this year. Changes in processing platforms will be just as dramatic as technologies and products that were announced in 2013 are delivered in 2014.

AMD’s Accelerated Processing Unit (APU) multicore chips that implement the Heterogeneous System Architecture (HSA) will be generally available (see “Heterogeneous System Architecture Changes CPU/GPU Software” at electronicdesign.com). AMD APUs are inside the Microsoft Xbox One and the Sony PlayStation 4, but those are custom chips aimed at gaming; the PlayStation 4, for instance, pairs its APU with GDDR5 memory. The other APUs initially will support DDR3.

The HSA programming model, which unifies the GPU and CPU virtual memory spaces, will have a major software impact. The architecture is not processor-specific, and Arm, which offers both CPU and GPU cores, is also a member of the HSA Foundation.

Standalone GPUs are disappearing from most low- to mid-range PCs and tablets, which have moved to integrated GPUs like those in AMD’s APUs, Intel’s integrated graphics, and Arm’s Mali. That still leaves discrete GPUs for high-end gaming and high-performance computing. Nvidia’s Kepler GPUs can communicate with other GPUs and devices over PCI Express, and even through Ethernet adapters, without CPU intervention (see “GPU Architecture Improves Embedded Application Support” at electronicdesign.com).

Apple had its own 64-bit Arm part in 2013, but the flood of 64-bit Arm Cortex-A50 processors will appear in 2014 (see “64-Bit And Real-Time Architectures At Arm TechCon” at electronicdesign.com). AMD is revving up a multicore, Arm Cortex-A50 system-on-chip (SoC) that will have its SeaMicro Freedom Fabric interface built in.

Of course, AMD will challenge Intel’s Core and Xeon processor lines, which have dominated the PC and server space. Intel is making a big push into software-defined networking (SDN) and network function virtualization (NFV) with combinations like its “Coleto Creek” chipset and Xeon E5-2600 processors. The chipset accelerates network cryptography and compression chores.

High-performance computing will get more bang from chips like Intel’s Xeon Phi and GPUs from Nvidia, AMD, and Arm. General-purpose GPUs (GPGPUs) will continue to grow in performance, but programming remains a challenge (see “GPGPU Boosts Graphics Processing In Harsh Environments” at electronicdesign.com). Not all algorithms can be accelerated using GPUs; the biggest gains come from highly data-parallel workloads.
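One standard way to quantify why some algorithms gain little from a GPU is Amdahl's law: if only a fraction p of the run time can be parallelized and that part is sped up by a factor s, the overall speedup is 1 / ((1 - p) + p/s). The fractions and the 100x accelerator factor below are illustrative assumptions, not measurements of any particular GPU.

```python
def amdahl_speedup(parallel_fraction: float, accel_factor: float) -> float:
    """Overall speedup when only part of the work is accelerated."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / accel_factor)

# Hypothetical workloads, each offloaded to a 100x faster accelerator.
for p in (0.50, 0.90, 0.99):
    print(f"parallel fraction {p:.2f}: {amdahl_speedup(p, 100):.1f}x")
```

Even with a 100x accelerator, a workload that is only half parallel barely doubles in speed, because the serial half still runs on the CPU.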

Users needing even more hardware acceleration for high-performance computing may choose FPGAs over GPUs. Xilinx and Altera FPGA modules can plug into CPU sockets in a multisocket environment using Intel’s QuickPath Interconnect (QPI). Combine this with Altera’s OpenCL software development kit (SDK), which eases FPGA development, for some interesting hardware acceleration projects.

Multicore solutions that sit a notch or two lower on the performance scale, like Intel’s 64-bit, eight-core Atom C2000 SoC, are targeting microservers (see “8-Core Atom Expands Intel’s Server Strategy” at electronicdesign.com). Of course, they will see competition from a plethora of Arm-based solutions. These high-performance SoCs are ideal for a range of embedded applications as well.

Intel’s new Quark is a low-end, single-core, 32-bit x86 SoC designed for use in Internet of Things (IoT) gateways (see “How Many Quarks Does It Take To Make An IoT?” at electronicdesign.com). Quark will target mobile applications as well, but it too must compete with the Arm and MIPS SoCs already entrenched in this space.

Arm’s Cortex-A series now includes the Cortex-A7, A9, A12, and A15, based on the ARMv7-A architecture, versus the ARMv8 architecture used in the 64-bit Cortex-A50 family. The Cortex-A7 can be paired with a larger core such as the Cortex-A15 in a big.LITTLE combination (see “Little Core Shares Big Core Architecture” at electronicdesign.com). Another change this year is the availability of big.LITTLE scheduling support that allows all cores, large or small, to be used at the same time.
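With scheduling that uses all cores at once, the operating system decides per thread whether to run on a big or a LITTLE core instead of switching the whole system between clusters. The sketch below shows load-based placement with hysteresis, assuming hypothetical up/down migration thresholds and a per-task load value between 0 and 1; real schedulers use tracked load averages and more elaborate policies.

```python
UP_THRESHOLD = 0.75    # hypothetical: move to a big core above this load
DOWN_THRESHOLD = 0.25  # hypothetical: move back to a LITTLE core below this

def place(task_load: float, current: str) -> str:
    """Return 'big' or 'LITTLE' for one task, with hysteresis."""
    if current == "LITTLE" and task_load > UP_THRESHOLD:
        return "big"
    if current == "big" and task_load < DOWN_THRESHOLD:
        return "LITTLE"
    return current

# Hypothetical tasks: name -> (recent load, cluster it currently runs on).
tasks = {"ui": (0.9, "LITTLE"), "audio": (0.1, "big"), "sync": (0.5, "LITTLE")}
placement = {name: place(load, cur) for name, (load, cur) in tasks.items()}
print(placement)   # {'ui': 'big', 'audio': 'LITTLE', 'sync': 'LITTLE'}
```

The gap between the two thresholds keeps a task with middling load from bouncing between clusters, and because placement is per task, big and LITTLE cores can be busy simultaneously.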

Security hardware will be more widely available in chipset and SoC solutions. Secure boot, key management and storage, and other security-related features are becoming standard on more parts.
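The core idea behind secure boot is that each stage verifies the next image before handing over control. The sketch below assumes a hypothetical table of expected SHA-256 digests held in one-time-programmable storage. Production secure boot usually verifies an asymmetric signature (RSA or ECDSA, for example) against a public key anchored in ROM or fuses; this simplified digest comparison stands in for that step.

```python
import hashlib
import hmac

# Hypothetical boot images and the digests "fused" at manufacturing time.
bootloader = b"stage-1 bootloader image"
kernel = b"stage-2 OS kernel image"
TRUSTED_DIGESTS = {
    "bootloader": hashlib.sha256(bootloader).digest(),
    "kernel": hashlib.sha256(kernel).digest(),
}

def verify_stage(name: str, image: bytes) -> bool:
    """Compare the image digest with the trusted value in constant time."""
    expected = TRUSTED_DIGESTS.get(name)
    if expected is None:
        return False
    return hmac.compare_digest(hashlib.sha256(image).digest(), expected)

def boot(chain):
    """Walk the boot chain and stop at the first stage that fails to verify."""
    for name, image in chain:
        if not verify_stage(name, image):
            return f"halt: {name} failed verification"
    return "boot ok"

print(boot([("bootloader", bootloader), ("kernel", kernel)]))         # boot ok
print(boot([("bootloader", bootloader), ("kernel", kernel + b"!")]))  # halt: kernel failed verification
```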

More Micros

The 32-bit Arm Cortex-M and Cortex-R families are giving competing microcontroller architectures, including 8- and 16-bit platforms, a run for their money. The 32-bit solutions continue to press down into these spaces while also providing a high-end growth path. More microcontroller vendors that had different 8- and 16-bit parts have adopted the Cortex-M0+. At this point, vendors with dissimilar 8-, 16-, and 32-bit offerings have unified their peripheral options and software development tools to make migration between platforms practical and fairly seamless, though not totally transparent.

CPU/DSP combinations remain popular for providing acceleration and programming flexibility. These combinations have also gone multicore, mixing multiple CPU cores with microcontroller cores. For example, the Texas Instruments OMAP 543x has a pair of Cortex-A15 cores and a pair of Cortex-M4 cores. Mixing cores has a number of advantages, especially when the power and frequency of each core can be controlled independently.
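Independent control pays off because dynamic CMOS power scales roughly as P ≈ α·C·V²·f. Lowering a lightly loaded core's supply voltage along with its clock therefore saves far more than cutting frequency alone. The capacitance, voltages, and frequencies below are illustrative assumptions, not OMAP 543x operating points.

```python
def dynamic_power(c_eff: float, volts: float, freq_hz: float,
                  activity: float = 1.0) -> float:
    """Approximate dynamic switching power in watts: activity * C * V^2 * f."""
    return activity * c_eff * volts ** 2 * freq_hz

C_EFF = 1e-9  # hypothetical effective switched capacitance, farads

full = dynamic_power(C_EFF, 1.2, 1.5e9)     # full speed
half_f = dynamic_power(C_EFF, 1.2, 0.75e9)  # frequency scaling only
dvfs = dynamic_power(C_EFF, 0.9, 0.75e9)    # voltage and frequency scaled
print(f"full: {full:.2f} W, half f: {half_f:.2f} W, DVFS: {dvfs:.2f} W")
```

Halving the clock halves dynamic power, but also dropping the voltage from 1.2 V to 0.9 V cuts it to roughly 28% of full-speed power in this example. Per-core control lets a busy core stay at full speed while its neighbors run at such reduced points.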

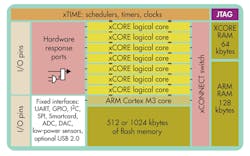

XMOS’s xCORE-XA has one Cortex-M3 core and seven xCORE DSP cores (Fig. 2). XMOS worked with Silicon Labs to blend Silicon Labs’ EFM32 Gecko Cortex-M3 technology with XMOS’s xCORE soft peripherals (see “Micro Mixes Hard USB With Soft Peripherals” at electronicdesign.com). Some XMOS systems can handle real-time Ethernet protocols like EtherCAT in software. The xCORE cores share 64 kbytes of RAM, while the Cortex-M3 has its own 128 kbytes of RAM. They share peripherals and up to 1 Mbyte of flash memory, and the xCONNECT switch links the cores.

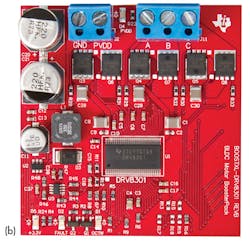

Specialization has been the hallmark of the microcontroller industry, with hundreds of SKUs across many product families. This will continue in niches such as electric power metering and motor control. For instance, the Texas Instruments C2000 InstaSPIN-FOC (field-oriented control) delivers self-profiling control of sensorless three-phase synchronous or asynchronous motors (see “Self Profiling, Sensorless, FOC System Revolutionizes Motor Control” at electronicdesign.com). TI’s latest C2000 InstaSPIN-FOC LaunchPad board works with the DRV8301 motor drive BoosterPack (Fig. 3). The DRV8301 gate driver can sink 2.3 A or source 1.7 A to drive the BoosterPack’s NexFET MOSFETs, and it has built-in sense amplifiers. A buck converter provides power to the C2000 board.
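At the heart of any field-oriented controller are the Clarke and Park transforms, which turn measured phase currents into two DC-like quantities in the rotor's reference frame: d (flux-producing) and q (torque-producing). The sketch below is the textbook amplitude-invariant form, assuming balanced currents (ia + ib + ic = 0) and a known rotor angle `theta`. It is not TI's InstaSPIN-FOC code; in a sensorless system, an observer estimates that angle.

```python
import math

def clarke(ia: float, ib: float) -> tuple[float, float]:
    """Amplitude-invariant Clarke transform, assuming ia + ib + ic = 0."""
    alpha = ia
    beta = (ia + 2.0 * ib) / math.sqrt(3.0)
    return alpha, beta

def park(alpha: float, beta: float, theta: float) -> tuple[float, float]:
    """Rotate the stationary alpha/beta frame into the rotor d/q frame."""
    d = alpha * math.cos(theta) + beta * math.sin(theta)
    q = -alpha * math.sin(theta) + beta * math.cos(theta)
    return d, q

# Balanced 1-A currents whose vector leads the rotor angle by 90 degrees.
theta = math.radians(30.0)      # rotor electrical angle (assumed known)
phase = theta + math.pi / 2     # current vector angle
ia = math.cos(phase)
ib = math.cos(phase - 2 * math.pi / 3)
d, q = park(*clarke(ia, ib), theta)
print(f"d = {d:.3f} A, q = {q:.3f} A")
```

With the current vector 90 degrees ahead of the rotor, all of it lands on the q axis (d ≈ 0, q ≈ 1 A), which is the maximum-torque-per-amp condition a FOC loop regulates toward with simple PI controllers on d and q.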

Still, there is a move toward smarter peripherals that reduce the plethora of chip types. At one extreme is FPGA-like programmability from Cypress Semiconductor, which links configurable analog and digital peripherals to microcontroller cores that currently include the M8C, 8051, Cortex-M0, and Cortex-M3. At the other end are microcontrollers whose serial ports can be configured for everything from UART to SPI or I2C communication. Many chips also have peripheral communication networks that allow chains in which a comparator output triggers an analog-to-digital converter (ADC) that sends its results via a UART, all without CPU intervention. In fact, the CPU may be asleep, minimizing power usage.
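The comparator-to-ADC-to-UART chain can be modeled as a small event-routing table. The sketch below is a hypothetical simulation, not any vendor's register map or driver API: peripherals post events, a routing table decides which peripheral acts next, and only events with no hardware route would wake the CPU.

```python
from collections import deque

# Hypothetical routing table: event source -> peripheral that consumes it.
ROUTES = {"COMP0_RISE": "ADC0", "ADC0_DONE": "UART0"}

class Peripherals:
    def __init__(self, sample_value: int):
        self.sample_value = sample_value
        self.adc_result = None
        self.uart_tx = []
        self.cpu_wakeups = 0

    def run(self, first_event: str) -> None:
        """Propagate events through the routing table."""
        events = deque([first_event])
        while events:
            target = ROUTES.get(events.popleft())
            if target is None:
                self.cpu_wakeups += 1                 # no hardware route: interrupt the CPU
            elif target == "ADC0":
                self.adc_result = self.sample_value   # conversion completes
                events.append("ADC0_DONE")
            elif target == "UART0":
                self.uart_tx.append(self.adc_result)  # result shifted out

chip = Peripherals(sample_value=512)
chip.run("COMP0_RISE")
print(chip.uart_tx, chip.cpu_wakeups)   # [512] 0
```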

More Interconnects

There will be fewer surprises but lots of action in high-speed serial interconnects. These components are based on well-established standards with long-term roadmaps, and most are between generational transitions. For example, PCI Express Gen 3 is out in force. It will be found on most x86 motherboards and on high-end Arm platforms. Older or lower-end products may use Gen 2, which interoperates with Gen 3 because the standard is backward compatible.
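Gen 3 roughly doubles usable throughput over Gen 2 even though the signaling rate rises only from 5 to 8 Gtransfers/s, because it replaces 8b/10b encoding with the much leaner 128b/130b scheme. The per-lane arithmetic, ignoring packet and protocol overhead:

```python
def lane_throughput_gbytes(gtransfers: float, payload_bits: int,
                           line_bits: int) -> float:
    """Usable Gbytes/s per lane per direction after line encoding."""
    return gtransfers * payload_bits / line_bits / 8

gen2 = lane_throughput_gbytes(5.0, 8, 10)     # 8b/10b encoding
gen3 = lane_throughput_gbytes(8.0, 128, 130)  # 128b/130b encoding
print(f"Gen 2: {gen2:.3f} Gbytes/s per lane, x16: {16 * gen2:.1f} Gbytes/s")
print(f"Gen 3: {gen3:.3f} Gbytes/s per lane, x16: {16 * gen3:.1f} Gbytes/s")
```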

Low-pin-count MIPI M-PHY systems may be used to deliver a few new protocols this year. Standards now include USB and PCI Express over M-PHY. M-PHY tends to be found on mobile devices where low pin counts and low power requirements are the norm.

New form factors will highlight PCI Express Gen 3 including the m.2, which targets high-density SSD-based (solid-state disk) storage. The m.2 will find a home in mobile devices like laptops and tablets as well as embedded applications. The stick comes in different lengths for more storage using a common socket. The OCuLink PCI Express cables will be available as well to provide cost-effective box-to-box connections. OCuLink will use copper or fiber.

Fiber is also showing up on the display side, where Intel’s Thunderbolt 2 runs at 20 Gbits/s. That bandwidth is needed to handle raw 4K video. An active cable hides the copper/fiber choice from the device, but fiber allows cable lengths on the order of 100 meters. VGA and DVI connectors will still be around, but HDMI and DisplayPort are needed for 4K video. The usual copper cables will work in most cases, though fiber is available as an option.
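A quick check of the raw 4K figure, assuming 3840 × 2160 pixels at 60 frames/s and 24 bits per pixel, with blanking intervals and link-encoding overhead ignored:

```python
def raw_video_gbits(width: int, height: int, fps: int,
                    bits_per_pixel: int) -> float:
    """Uncompressed active-pixel data rate in Gbits/s (no blanking, no encoding)."""
    return width * height * fps * bits_per_pixel / 1e9

uhd60 = raw_video_gbits(3840, 2160, 60, 24)
print(f"4K at 60 frames/s: {uhd60:.2f} Gbits/s")   # 4K at 60 frames/s: 11.94 Gbits/s
```

That already exceeds a single 10-Gbit/s channel of the original Thunderbolt, which is why Thunderbolt 2 combines its two channels into one 20-Gbit/s link.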

Don’t expect any changes for USB 3.0 except a flood of products now that there are plenty of hosts to plug them into. Storage will no longer be the only device class demanding the throughput and full-duplex operation that USB 3.0 offers. USB-based displays will be more prevalent, as will USB 3.0-based docking stations and monitors.

What will be different this year is USB power management and delivery, as defined by the USB Power Delivery specification. Systems will be able to push as much as 100 W (20 V at 5 A) through the cable. The connection will be negotiated intelligently at both ends, so the amount of power will depend on the capabilities of the cable, host, and device.
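Conceptually, the delivered power is capped by the weakest of the three parties. A minimal sketch with hypothetical capability values; the `Capability` class and `negotiated_watts` function are illustrative names, and treating an ordinary cable as limited to 3 A is an assumption of this sketch:

```python
from dataclasses import dataclass

@dataclass
class Capability:
    max_volts: float
    max_amps: float

def negotiated_watts(source: Capability, sink: Capability,
                     cable_amps: float) -> float:
    """Power limited by the lowest voltage and current any party supports."""
    volts = min(source.max_volts, sink.max_volts)
    amps = min(source.max_amps, sink.max_amps, cable_amps)
    return volts * amps

host = Capability(max_volts=20.0, max_amps=5.0)    # hypothetical 100-W source
laptop = Capability(max_volts=20.0, max_amps=5.0)  # hypothetical 100-W sink
print(negotiated_watts(host, laptop, cable_amps=5.0))  # 100.0
print(negotiated_watts(host, laptop, cable_amps=3.0))  # 60.0
```

Real Power Delivery negotiation picks from a list of discrete voltage/current offers rather than taking a simple minimum, but the outcome is the same in spirit: the cable, host, and device all have a say.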

Keep an eye out for the proposed USB 3.1 Type-C connector, which will be orientation-agnostic. Current USB connectors only work when they’re properly oriented. The specification won’t be finalized until the middle of the year, so new products won’t appear until 2015.

InfiniBand and Serial RapidIO are well entrenched. InfiniBand’s 14-Gbit/s-per-lane FDR (fourteen data rate) is pushing the limits of PCI Express, which is usually found on the other end of the interface. The 26-Gbit/s enhanced data rate (EDR) is on the horizon. Serial RapidIO Gen 3 runs at 10 Gbits/s per lane, with 25 Gbits/s in the next-generation roadmap.
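To see why FDR strains PCI Express, compare a common 4x FDR port with a PCI Express Gen 3 x8 slot. FDR signals at 14.0625 Gbits/s per lane with 64b/66b encoding, and PCI Express Gen 3 at 8 Gtransfers/s with 128b/130b encoding. The 4x and x8 widths are typical configurations chosen for illustration, and protocol overhead is ignored.

```python
def usable_gbits(lane_gbaud: float, lanes: int, payload_bits: int,
                 line_bits: int) -> float:
    """Aggregate data rate after line encoding, in Gbits/s per direction."""
    return lane_gbaud * lanes * payload_bits / line_bits

fdr_4x = usable_gbits(14.0625, 4, 64, 66)   # InfiniBand FDR, 64b/66b
pcie3_x8 = usable_gbits(8.0, 8, 128, 130)   # PCI Express Gen 3, 128b/130b
print(f"FDR 4x: {fdr_4x:.1f} Gbits/s, PCIe Gen 3 x8: {pcie3_x8:.1f} Gbits/s")
```

The FDR port consumes most of the slot's usable bandwidth, leaving little headroom for EDR without wider or faster PCI Express links.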

The 12-Gbit/s SAS interface will be a boon to the enterprise as adapters and motherboards that handle the higher bandwidth become available. Moving flash storage to PCI Express, and even to the processor’s memory channel, does not eliminate the need for banks of hard-disk and flash drives, especially for enterprises delivering cloud-based computing and storage.