Interconnecting Multi-Camera Systems in Mobile and Mobile-Influenced Designs


Engineers are intrigued and challenged by the many new opportunities to deploy multi-camera imaging systems for mobile-related applications. Today, the market is ready for these applications, with vendors already demonstrating the possibilities multiple cameras can bring to enhance image quality and expand use cases for cameras in smartphones, tablets, video-game devices, and automobiles.

Multiple-camera systems can be challenging to implement, though, requiring not only the integration of sensors and application processors, but also the use of mathematical models, algorithms, and heuristics to add intelligence to visual information. This article examines the integration of multi-camera systems in mobile and mobile-influenced designs, and illustrates how the MIPI CSI-2 system architecture provides mission-critical transport that’s ideally suited for real-time streaming applications on multiple platforms.

MIPI CSI-2: A Mature Interface for Complex Implementations

Designers can employ embedded-device interface protocols and physical-layer technologies to simplify the integration of multi-camera systems in mobile and mobile-influenced products.

MIPI CSI, developed by members of the MIPI Alliance Camera Working Group, is used to interconnect cameras and application processors in virtually every smartphone built today. Available since 2005, MIPI CSI has been a pioneer of high-performance imaging for mobile and mobile-influenced applications. It offers an easy-to-use, robust, scalable, low-power, high-speed interface that provides bandwidth capable of supporting a wide range of imaging solutions, including 4K video.

MIPI CSI-2, currently available as v1.3, is a protocol layer that operates over either of two physical-layer specifications from the MIPI Alliance: MIPI C-PHY or MIPI D-PHY. As an example, the conduit can carry 4K video at 60 frames per second (fps) with 12 bits per pixel (bpp). Depending on the configuration, MIPI CSI-2 conduits range from 80 Mb/s to 22 Gb/s with scalable lanes.
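The 4K example above can be checked with simple arithmetic. The sketch below assumes "4K" means 3840 × 2160 pixels and counts only the raw pixel payload, ignoring blanking and protocol overhead. The function names and the 2.5-Gb/s per-lane rate are illustrative assumptions, not values taken from this article.

```python
import math


def payload_rate_bps(width, height, fps, bits_per_pixel):
    """Raw pixel payload in bits per second (no blanking, no protocol overhead)."""
    return width * height * fps * bits_per_pixel


def lanes_needed(rate_bps, per_lane_bps):
    """Smallest whole number of lanes whose combined rate covers rate_bps."""
    return math.ceil(rate_bps / per_lane_bps)


# Assumption: "4K" is taken as 3840 x 2160 (UHD).
rate = payload_rate_bps(3840, 2160, 60, 12)
print(f"{rate / 1e9:.2f} Gb/s")  # 5.97 Gb/s

# Assumption: a hypothetical per-lane rate of 2.5 Gb/s.
print(lanes_needed(rate, 2.5e9))  # 3
```

At roughly 6 Gb/s of payload, this example sits well inside the 80-Mb/s to 22-Gb/s range quoted above, which is why lane count can be scaled to fit the sensor.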

Updates Expand MIPI CSI-2 Use Cases and Capabilities

Traditionally, MIPI CSI-2 system conduits have been used to connect relatively small form-factor mobile devices, where the distance from the camera sensor to the application processor is less than 15 inches. MIPI CSI-2 v2.0, which will likely see application adoption in 2016, expands the scope of features and capabilities for IoT appliances, wearables, and automotive applications. Its key benefits include:

• Support for longer trace lengths.

• Support for 32 virtual channels over MIPI C-PHY and 16 virtual channels over MIPI D-PHY, which facilitates transport of multiple imaging and metadata streams on a platform.

• A substantial increase in the number of MIPI CSI-2 image, radar, and sonar sensors that an imaging conduit can connect to an image signal processor (ISP) in an application processor.

• Improved transport efficiency over MIPI C-PHY/MIPI D-PHY, which reduces the number of wires, the toggle rate, and power consumption.

• Relief from electrical-overstress and current-leakage issues with newer process nodes.

• High-dynamic-range (HDR-16 and HDR-20) capabilities that vastly improve intra-scene dynamic range for applications such as collision avoidance in automotive advanced driver-assistance systems (ADAS).

• Scrambling to reduce power spectral-density emissions for MIPI C-PHY embedded clock and data, and MIPI D-PHY data.
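To illustrate what virtual channels provide, the following minimal sketch separates interleaved traffic on one link into per-source streams. It is not the CSI-2 packet format: the `(vc_id, payload)` tuples, the `demux` function, and the sample payloads are hypothetical, and only the channel counts (32 and 16) come from the list above.

```python
from collections import defaultdict

# Channel counts as stated in the article for CSI-2 v2.0.
VC_COUNT = {"C-PHY": 32, "D-PHY": 16}


def vc_bits(phy):
    """Number of ID bits needed to address every virtual channel on this PHY."""
    return (VC_COUNT[phy] - 1).bit_length()


def demux(packets, phy):
    """Group payloads by virtual channel ID.

    `packets` is a hypothetical iterable of (vc_id, payload) tuples that
    stands in for interleaved traffic arriving on a single link.
    """
    limit = VC_COUNT[phy]
    streams = defaultdict(list)
    for vc_id, payload in packets:
        if not 0 <= vc_id < limit:
            raise ValueError(f"virtual channel {vc_id} not available on {phy}")
        streams[vc_id].append(payload)
    return dict(streams)


packets = [(0, b"cam-line-0"), (1, b"radar-0"), (0, b"cam-line-1"), (17, b"meta")]
print(vc_bits("C-PHY"), vc_bits("D-PHY"))  # 5 4
print(sorted(demux(packets, "C-PHY")))     # [0, 1, 17]
```

In this example, channel 17 is valid with the 32-channel C-PHY count but would be rejected with the 16-channel D-PHY count.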

Typical Imaging Capabilities for Multi-Camera Designs

Multi-camera systems will be used to enable current and near-term imaging features, such as gesture recognition, depth perception, and multiple focal point views, for a variety of mobile and mobile-influenced use cases.

Gesture recognition, for example, typically requires two camera sensors installed in very close proximity to one another, along with analytical software tools, to detect and understand motion. It is already available in smartphones, tablets, and video games, and it is evolving for in-car applications that let drivers use hand movements to interact with steering-wheel or dashboard controls.

Stereoscopic depth perception uses two cameras to enable 3D capture. As an example, enterprises use 3D face recognition as biometric authentication to secure highly sensitive and classified data. Stereoscopic depth perception is also employed in detailed topographic contour-mapping applications and line-of-sight landings by drones.
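One common textbook model for recovering depth from two cameras, not necessarily the method used in any particular product, assumes rectified pinhole cameras. Depth is then Z = f · B / d, where f is the focal length in pixels, B is the baseline between the cameras, and d is the disparity in pixels. The function name and the numbers below are illustrative assumptions.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in meters for a rectified stereo pair: Z = f * B / d.

    focal_px     -- focal length expressed in pixels
    baseline_m   -- distance between the two camera centers, in meters
    disparity_px -- horizontal shift of the same point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point at finite depth")
    return focal_px * baseline_m / disparity_px


# Hypothetical values: 1000-px focal length, 2-cm baseline, 10-px disparity.
print(depth_from_disparity(1000.0, 0.02, 10.0))  # 2.0
```

Because depth is inversely proportional to disparity, a short baseline (as in a phone) limits the range over which depth can be resolved, while a wider baseline extends it.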

Multifocal sensor fusion, which requires topologies of three or more MIPI CSI-2 sensors, is readily found in automotive ADAS applications. As ADAS transitions toward automation, and eventually toward automobiles and commercial transport vehicles that function with complete autonomy, the MIPI CSI-2 system architecture is evolving to meet the imaging-performance needs.

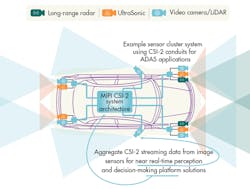

For instance, advanced sensing and perception ADAS solutions with radar (far-field), camera (mid-field), and ultrasound (near-field) may use three MIPI CSI-2 conduits for near-real-time processing and decision-making (see figure). The next-generation MIPI CSI-2 v2.0 with high-dynamic-range (HDR-16 and HDR-20) capabilities, extended virtual channels, and other features further enables comprehensive architectural pathways that can lead to fully autonomous vehicles.
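As a rough guide to what the HDR modes mean for intra-scene contrast, the ideal dynamic range of a linear N-bit pixel value is 20 · log10(2^N) dB. The sketch below assumes that HDR-16 and HDR-20 correspond to 16-bit and 20-bit linear pixel values. That mapping is an assumption made for illustration, and real sensors fall short of the ideal because of noise.

```python
import math


def ideal_dynamic_range_db(bits):
    """Ideal dynamic range of a linear N-bit value: 20 * log10(2 ** bits)."""
    return 20 * math.log10(2 ** bits)


# Assumption: 12-bit is shown only as a baseline for comparison.
for bits in (12, 16, 20):
    print(f"{bits}-bit: {ideal_dynamic_range_db(bits):.1f} dB")
# 12-bit: 72.2 dB
# 16-bit: 96.3 dB
# 20-bit: 120.4 dB
```

Each additional bit adds about 6 dB, so moving from 12 to 20 bits widens the ideal range by roughly 48 dB.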

The “bokeh” effect in photography (the creative and contrasting treatment of out-of-focus and in-focus content in an image) is achievable in mobile devices that use dual MIPI CSI-2 cameras. This brings to mobile devices professional photography capabilities that were previously limited to considerably larger and heavier single-lens reflex (SLR) cameras.

Sensor Fusion

A number of forward-looking use cases require sensor fusion to combine pixel content from traditional camera sensors with metadata from other devices, such as ambient light sensors (ALS), MEMS gyroscopes, and voice coil motors (VCMs). MIPI CSI-2 v2.0 incorporates the forthcoming MIPI I3C v1.0 interface specification to improve camera-command interface performance and to support always-on, always-aware imaging.

Meeting Tomorrow’s Design Needs with MIPI CSI-2

The MIPI CSI-2 technology stack helps simplify implementation of increasingly complex imaging applications in mobile and mobile-influenced designs. The ability to integrate multiple cameras addresses the market’s needs for gesture recognition, depth perception, multi-focal-point images, and other effects. What’s more, MIPI CSI-2 will soon have the ability to extend the physical length of the connections to address more use cases and form factors, opening up more opportunities for product innovation.

Because MIPI CSI-2 has already achieved widespread adoption in the mobile industry, companies that have invested in infrastructure to build camera-enabled products will be able to conveniently extend the use of the technology to new multi-camera applications and market segments. Vendors will be able to extend their product lines with the same tools and systems they’ve used previously, which reduces integration complexities and minimizes R&D costs. Teams will not need to navigate a new learning curve, allowing them to get to market more quickly with new multi-camera designs.

Richard Sproul is MIPI Alliance IP architect and digital IP architect/principal design engineer at Cadence Design Systems, and Haran Thanigasalam is MIPI Alliance Camera Working Group chairman and senior platform architect at Intel.
