Make the most of your test time: Think before you write code, and surround yourself with smart data-mining tools.

Test-time optimization is a critical step in developing any test program because it minimizes costs and maximizes margins. But with test programs becoming increasingly complex, especially in the SOC arena, and now using a wide variety of modules and resources, it has become very difficult to track down, identify, and fix inefficient code sections.

Program Design

Three main constraints shape the design of a test program:

• Time-to-market pressure: the time actually available to develop the program.

• Tester resources: the constraints of working with a given tester configuration.

• Throughput: the minimum productivity you expect.

Because time to market is critical to maximizing margins during the product ramp-up phase, any strategy that helps develop test programs faster is welcome. It is now very common to design programs in modules and to leverage libraries shared with other test programs.

But as with any project, there are specific pluses and minuses. The benefits of using modules include faster program design and reusable components. The drawbacks are slower code and hidden inefficiencies that are hard to find because they are spread over different modules.

Test-Time Reduction

As a consequence, test-time reduction (TTR) usually is an afterthought. The first priority is to make the test program run with an acceptable yield. As production volumes increase, optimization sessions are performed to identify and trim the “fat” and rewrite sections in a more efficient way.

Since there are many ways to optimize a test program, diagnostic tools must be smart enough to automatically identify optimization opportunities and propose action items. This saves significant time because the potential gain of TTR is known in advance, and only techniques that can lead to significant results are investigated.
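To illustrate what knowing the potential gain in advance can mean in practice, here is a minimal Python sketch. It assumes the diagnostic tool can attach an estimated saving ratio to each flagged opportunity and then keeps only those worth investigating. The `Candidate` class, the `worth_investigating()` helper, the 1% threshold, and the block and action names are all hypothetical; they do not come from any particular profiler.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A hypothetical optimization opportunity flagged by a diagnostic tool."""
    block: str               # test block where the opportunity was found
    technique: str           # proposed action item
    current_ms: float        # measured time of the affected code, in ms
    est_saving_ratio: float  # assumed fraction of that time the action removes

    @property
    def est_gain_ms(self) -> float:
        """Estimated test-time reduction if the action is applied."""
        return self.current_ms * self.est_saving_ratio


def worth_investigating(candidates, total_test_ms, min_share=0.01):
    """Keep only candidates whose estimated gain is at least `min_share`
    of the total test time, sorted from largest to smallest gain."""
    threshold = min_share * total_test_ms
    selected = [c for c in candidates if c.est_gain_ms >= threshold]
    return sorted(selected, key=lambda c: c.est_gain_ms, reverse=True)


if __name__ == "__main__":
    # Hypothetical blocks and actions, for illustration only.
    flagged = [
        Candidate("dc_leakage", "replace fixed waits with polling", 120.0, 0.5),
        Candidate("scan", "merge pattern bursts", 300.0, 0.1),
        Candidate("trim", "cache calibration results", 8.0, 0.9),
    ]
    for c in worth_investigating(flagged, total_test_ms=1000.0):
        print(f"{c.block}: {c.technique} (~{c.est_gain_ms:.1f} ms)")
```

With a 1,000 ms total and the default 1% threshold, the trim entry (about 7 ms of estimated gain) is filtered out, so only the two larger opportunities would be investigated.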

Many possibilities are available to make a test program run faster without reducing the coverage (Table 1). But because engineers are not all gurus, the results of test-time optimization sessions can vary significantly depending on the individual talents and tools at hand.

Most companies do their best to reduce the gap between senior and junior test engineers by sharing knowledge, implementing best practices, and leveraging tools. Any tool that shortens the learning curve helps the company become more competitive.

Profiling Tools

Experience and surveys show that in any process optimization, an engineer spends 80% of the time collecting, filtering, and manipulating data and only 20% analyzing it and taking corrective action. These numbers make it clear that there is a significant opportunity to save valuable time and energy, provided engineers have the proper tools and solutions.

These software solutions are called profilers. They range from tools that simply dump raw data to much more advanced solutions that provide timing detail for every elementary test instruction, along with diagnostic reports that automatically identify potential improvements within test sections, all from an interactive environment (Figure 1).

To make the most of the engineer’s time, a good profiler must:

• Display test-time information at any level, from test blocks down to a single instruction.

• Allow information to be analyzed from different angles; for example, show the time spent in a test block or the time spent on a tester resource.

• Provide a flexible, graphical, explorer-like environment for intuitive navigation.

• Automatically identify test blocks that can be optimized and recommend actions to take.

• Be easy to use to minimize the learning curve.
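The first two requirements (any level of detail, several angles) come down to aggregating the same raw timing records along different keys. Here is a minimal Python sketch, assuming the tester can log one record per executed instruction with its test block, tester resource, and duration. The record layout and the `total_by()` and `drill_down()` names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TimingRecord:
    block: str        # test block the instruction belongs to
    instruction: str  # high-level test instruction
    resource: str     # tester resource used (e.g. digital, DC, analog)
    ms: float         # measured execution time in milliseconds


def total_by(records, key):
    """Sum execution time grouped by one attribute: 'block',
    'instruction', or 'resource'. Largest contributors come first."""
    totals = defaultdict(float)
    for r in records:
        totals[getattr(r, key)] += r.ms
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


def drill_down(records, block):
    """Per-instruction view of a single test block."""
    return total_by([r for r in records if r.block == block], "instruction")
```

The same list of records answers "which block is slowest?", "which resource is busiest?", and "what is slow inside this block?", which is the kind of navigation from different angles described in the list above.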

Table 1. Revisit code and write algorithms differently

Considering that a typical test program is made up of 10,000 to 100,000 high-level instructions, the same data challenge applies to test-time analysis. The key data is hidden among thousands of instructions, which makes the profiler’s job more complex than simply reporting tables of test times. The tool must filter the data and extract only the pertinent information.
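One simple way to extract only the pertinent information is a Pareto cut: rank instructions by measured time and keep the fewest that together account for most of the total. The sketch below assumes per-instruction times are already available as a dictionary. The `pareto_filter()` name and the 80% default share are illustrative choices, not a method described by the author.

```python
def pareto_filter(times_ms, share=0.8):
    """Return the fewest (instruction, time) pairs whose cumulative time
    reaches `share` of the total, largest contributors first.

    times_ms: mapping of instruction id -> measured time in ms.
    """
    total = sum(times_ms.values())
    if total <= 0:
        return []
    ranked = sorted(times_ms.items(), key=lambda kv: kv[1], reverse=True)
    selected, running = [], 0.0
    for name, ms in ranked:
        if running >= share * total:
            break  # enough of the total is already covered
        selected.append((name, ms))
        running += ms
    return selected
```

Sorting even 100,000 entries is cheap, and the result is a short ranked list instead of a full table of test times.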

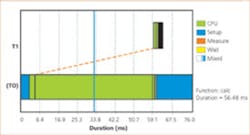

Case Study: Multithreads

Consider a situation where device testing uses multithreading to make use of CPU idle time. To measure the efficiency of the test program, you might assume that it is enough to compare the test time in multithread mode with the test time in nonmultithread mode, where threads are serialized. This may or may not be correct; it all depends on how the tester actually implements multithreading.

Considering the time it takes to put multithreading in place and debug it, some situations like this one are not worth the effort. Here the value of the profiler is clear: it helps you avoid wasting time on the wrong priorities and focus only on what will pay off.
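One way to frame the multithreading question, assuming each thread’s time can be measured on its own, is to place the measured wall-clock time between two reference points: the serialized time (all threads run back to back) and an ideal time equal to the longest thread, which assumes perfect overlap and no shared tester resources. The `overlap_report()` helper below is a hypothetical sketch of that comparison, not a feature of any specific tester.

```python
def overlap_report(thread_ms, measured_wall_ms):
    """Compare a measured multithreaded run against two reference points.

    thread_ms: time each thread takes when run alone (serialized mode).
    measured_wall_ms: wall-clock time measured in multithread mode.
    """
    serialized = sum(thread_ms)  # all threads run back to back
    ideal = max(thread_ms)       # assumed perfect overlap: longest thread dominates
    achievable = serialized - ideal
    realized = serialized - measured_wall_ms
    return {
        "serialized_ms": serialized,
        "ideal_ms": ideal,
        "saving_vs_serialized_ms": realized,
        # Fraction of the theoretically available overlap actually obtained.
        "overlap_efficiency": realized / achievable if achievable > 0 else 0.0,
    }
```

For threads of 40, 30, and 30 ms measured at 85 ms, the run saves 15 ms against the serialized baseline, but that is only 25% of the 60 ms of overlap that was theoretically available, a hint that the tester may be serializing more work than expected.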

Smarter Tools Needed

When the key information is hidden in large amounts of data, you need more than a profiler. You want software with agents that extract the key information for you. Such tools are expected to be smart and propose decisions for you rather than dump reports and let you find your way. This is exactly what data-mining tools are for.

In today’s market, test engineers are squeezed by time and need to deliver test programs at a faster pace than before. They no longer have the time they want to complete their mission. As a result, they need tools that go the extra mile, tools that help them focus on the right actions and implement the right strategies.

Conclusion

Test-program optimization is now under such delivery constraints that conventional profiling tools fall short of what is needed. A new generation of smarter software is sought: tools that collect, filter, and then interpret data to give you a solution.

About the Author

Philippe Lejeune is the founder, CEO, and CTO of Galaxy Semiconductor. He has been in the semiconductor test industry since 1987 and obtained four patents that apply to test-time reduction. Galaxy Semiconductor, Galway Technology Center, Mervue Business Park, Galway, Ireland, 011 353 (0) 91 730 762, e-mail: [email protected]

FOR MORE INFORMATION on optimizing test time, enter this rsleads URL: www.rsleads.com/310ee-176


Published by EE-Evaluation Engineering

All contents © 2003 Nelson Publishing Inc.

No reprint, distribution, or reuse in any medium is permitted without the express written consent of the publisher.

October 2003