For a developer perusing the datasheets of the latest microcontrollers, it’s easy to assume that efficient use of CPU resources, including memory and clock cycles, is, at most, a minor concern with today’s hardware. The latest 32-bit MCUs offer Flash and RAM allocations that were unheard of in the embedded space just a short time ago, and their CPUs are often clocked at speeds once reserved for desktop PCs.

However, as anyone with recent experience developing a product for the IoT knows, these advances in hardware have not occurred in a vacuum; they have been accompanied by pronounced changes in end-user expectations and design requirements. Accordingly, it is perhaps more important now than ever for developers to ensure that their software runs with the utmost efficiency and that their own time is spent in an efficient manner.

The software running on modern embedded systems tends to come from a variety of sources. Code written by application developers is often combined with off-the-shelf software components from an RTOS (real-time operating system) provider, and these components may, in turn, utilize driver code originally offered by a semiconductor company. Each piece of code can be written to optimize efficiency, but this article will focus on efficiency within off-the-shelf software components. Two components in particular will serve as the foundation for the examination of resource efficiency given herein: a real-time kernel and a transactional file system.

A Real-Time Kernel: The Heart of an Efficient System

A real-time kernel is the centerpiece of the software running in many of today’s embedded systems. In simple terms, a kernel is a scheduler; developers who write application code for a kernel-based system divide that code into tasks, and the kernel is responsible for scheduling the tasks. A kernel, then, is an alternative to the infinite loop in main() that often serves as the primary scheduling mechanism in bare-metal embedded systems.

Using a real-time kernel delivers major benefits, including improved efficiency. Developers who choose to base their application code on a kernel can optimize the use of processor resources in their system while achieving more efficient use of their own time. Not all kernels are created equal, however, and efficiency gains are not guaranteed as a result of simply deciding to adopt a kernel for a new project.

A key area where kernels may differ, and where CPU resources can be used with widely varying degrees of efficiency, is scheduling. By offering an intelligent scheduling mechanism that allows tasks to run in response to events, a kernel helps developers achieve efficiency gains over an infinite loop, in which tasks (in other words, functions) are executed in a fixed sequence. The exact efficiency of a kernel-based application depends, in part, on how its scheduler is implemented. A kernel’s scheduler, which is simply the code responsible for deciding when each task should run, is ultimately overhead, and this overhead must not nullify the benefits gained by moving away from a bare-metal system.
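To make the contrast concrete, the toy model below counts how many task functions each approach enters. It is a sketch, not kernel code: the `pending` event flags, `run_task()`, and both dispatch functions are hypothetical, and a real kernel would track ready tasks in a dedicated data structure and let tasks block on semaphores or queues rather than scan a flag array.

```c
#include <stdbool.h>
#include <stddef.h>

#define NUM_TASKS 3u

/* Hypothetical per-task event flags; in a real system ISRs would set them. */
static bool pending[NUM_TASKS];
/* Number of times each task function has been entered. */
static unsigned calls[NUM_TASKS];

static void run_task(size_t i)
{
    calls[i]++;            /* entering the function costs CPU time...  */
    if (pending[i]) {      /* ...even when there is nothing to do      */
        pending[i] = false;
        /* task-specific work would go here */
    }
}

/* One pass of a bare-metal super-loop: every task, in a fixed order. */
void superloop_pass(void)
{
    for (size_t i = 0u; i < NUM_TASKS; i++) {
        run_task(i);
    }
}

/* One pass of an event-driven dispatcher: only tasks with a pending event. */
void event_driven_pass(void)
{
    for (size_t i = 0u; i < NUM_TASKS; i++) {
        if (pending[i]) {
            run_task(i);
        }
    }
}
```

With one event pending, a super-loop pass still enters all three functions, while the event-driven pass enters only the one with work to do.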

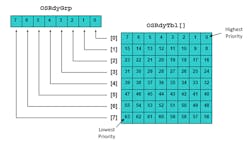

Figure 1. In the µC/OS-II scheduler, each task priority is represented by a bit in an array.

Typically, in a real-time kernel, scheduling is priority-based, meaning that application developers assign priorities (which are oftentimes numbers) to their tasks, and the kernel favors the higher-priority tasks when making scheduling decisions. Under this scheme, the kernel must maintain some type of data structure that tracks the priorities of an application’s different tasks along with the current state of each of those tasks. An example, taken from Micrium’s µC/OS-II kernel, is shown in Figure 1.

Within OSRdyTbl[], the 8-entry array (of eight-bit elements) shown here, each bit represents a different task priority, with the least-significant bit in the first element corresponding to the highest priority and the most-significant bit in the last element signifying the lowest priority. The array’s bit values reflect task state: A value of 1 is used if the task at the associated priority is ready and a 0 is used if the task is not ready.
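The bit layout can be expressed with two small helpers. This is a minimal sketch assuming 64 priority levels (0 is the highest), so the row index is the priority divided by eight and the bit position within the row is the remainder; the helper names are hypothetical, and `uint8_t` stands in for µC/OS-II’s `INT8U`.

```c
#include <stdint.h>

static uint8_t OSRdyTbl[8];   /* 8 rows x 8 bits = 64 priorities */

/* Mark the task at priority prio (0..63) as ready: set its bit. */
void rdy_tbl_set(uint8_t prio)
{
    OSRdyTbl[prio >> 3] |= (uint8_t)(1u << (prio & 0x07u));
}

/* Mark the task at priority prio (0..63) as not ready: clear its bit. */
void rdy_tbl_clear(uint8_t prio)
{
    OSRdyTbl[prio >> 3] &= (uint8_t)~(1u << (prio & 0x07u));
}
```

For example, priority 10 lives in row 1 (10 / 8) at bit 2 (10 mod 8).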

Accompanying OSRdyTbl[] as part of µC/OS-II’s scheduler is the single eight-bit variable shown in the figure, OSRdyGrp. Each bit in this variable represents an entire row, or element, in the array: a 1 bit indicates that the corresponding row has at least one ready task, while a 0 bit means that none of the row’s tasks are ready. By scanning first OSRdyGrp and then OSRdyTbl[] using the code shown in the listing below, µC/OS-II can determine the highest-priority task that is ready to run at any given time. As the listing indicates, this operation is highly efficient, requiring just two lines of C code.

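```c
y             = OSUnMapTbl[OSRdyGrp];                          /* row holding the highest-priority ready task */
OSPrioHighRdy = (INT8U)((y << 3u) + OSUnMapTbl[OSRdyTbl[y]]);  /* row * 8 + bit position within that row     */
```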

Scheduling can be accomplished with just two lines of C code in µC/OS-II.
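The two-line listing relies on `OSUnMapTbl[]`, a 256-entry lookup table that µC/OS-II provides as a precomputed constant array; each entry holds the position of the lowest-numbered set bit in its index. The self-contained sketch below assumes 64 priority levels and, rather than reproducing the constant table, builds an equivalent one at start-up. The helper names `unmap_init()`, `make_ready()`, and `highest_ready()` are hypothetical.

```c
#include <stdint.h>

static uint8_t OSUnMapTbl[256];  /* index -> position of its lowest set bit      */
static uint8_t OSRdyGrp;          /* bit y set => row y has at least one ready task */
static uint8_t OSRdyTbl[8];       /* bit x of row y => priority y*8 + x is ready    */

/* Build the lookup table at run time (µC/OS-II ships it as a const array). */
void unmap_init(void)
{
    OSUnMapTbl[0] = 0u;  /* no bit set; not consulted in µC/OS-II since the idle task is always ready */
    for (unsigned i = 1u; i < 256u; i++) {
        uint8_t pos = 0u;
        while (((i >> pos) & 1u) == 0u) {
            pos++;
        }
        OSUnMapTbl[i] = pos;
    }
}

/* Mark priority prio (0..63) ready in both the group byte and the table. */
void make_ready(uint8_t prio)
{
    OSRdyGrp            |= (uint8_t)(1u << (prio >> 3));
    OSRdyTbl[prio >> 3] |= (uint8_t)(1u << (prio & 0x07u));
}

/* The two-line scheduler lookup from the listing above. */
uint8_t highest_ready(void)
{
    uint8_t y = OSUnMapTbl[OSRdyGrp];
    return (uint8_t)((y << 3u) + OSUnMapTbl[OSRdyTbl[y]]);
}
```

Because both lookups are table reads, the cost of finding the highest-priority ready task is constant regardless of how many tasks are ready.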

Of course, compact, efficient code is only one of the characteristics developers should seek in a kernel. Because most new MCUs offer relatively high Flash-to-RAM ratios, making RAM the scarcer resource, it is also important for developers to consider the data side of a kernel’s footprint. As is true for the kernel’s scheduler, excessive overhead here, in the form of a bloated RAM footprint, can diminish the benefits that multitasking application code normally brings.

There are two approaches a kernel can take to allocate the basic resources needed for multitasking: responsibility for allocating these resources can be left to application code, or the kernel itself can handle the allocation. There will inevitably be certain variables and data structures in any kernel that, because they are essential to the implementation of multitasking services, reside entirely within the confines of the kernel. However, for data structures like the task control blocks (TCBs) used to record the status of each task, or even for the stacks that store CPU register values during context switches, kernel providers can choose to allocate internally or rely on application code.

Either approach, if implemented with flexibility as a goal, can yield an efficient kernel. Deferring allocation of resources to application code is, perhaps, the approach that gives developers the most flexibility, since it leaves the door open for static or dynamic allocation schemes. Micrium’s µC/OS-III takes this approach, letting application developers decide how best to allocate their TCBs and stacks. However, forcing resource allocation within the kernel can be an equally efficient approach, provided that there are means for configuring the quantities of allocated resources, as is the case for TCBs in Micrium’s µC/OS-II. Ultimately, application developers need a way to eliminate unused resources from a system’s memory footprint.
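The two allocation strategies can be contrasted in a few lines. The sketch below is hypothetical: `tcb_t`, `task_create_app()`, `task_create_pool()`, and the `CFG_MAX_TASKS` setting are invented for illustration and are not the µC/OS-II or µC/OS-III API. The first function uses a TCB and stack that the application passes in; the second, loosely mirroring the µC/OS-II arrangement described above, takes the TCB from a kernel-internal pool whose size is fixed at compile time while the stack still comes from the application.

```c
#include <stddef.h>
#include <stdint.h>

#define CFG_MAX_TASKS 4u   /* hypothetical compile-time pool size */

typedef struct {
    uint32_t *stk_base;    /* lowest address of the task's stack */
    size_t    stk_words;   /* stack size in 32-bit words         */
    uint8_t   prio;
    uint8_t   in_use;
} tcb_t;

/* Approach 1: the application supplies the TCB and the stack, so it can
 * choose static or dynamic allocation and pays only for what it uses. */
int task_create_app(tcb_t *tcb, uint32_t *stk, size_t words, uint8_t prio)
{
    if (tcb == NULL || stk == NULL || words == 0u) {
        return -1;
    }
    tcb->stk_base  = stk;
    tcb->stk_words = words;
    tcb->prio      = prio;
    tcb->in_use    = 1u;
    return 0;
}

/* Approach 2: the kernel owns a pool of TCBs. Unused entries still occupy
 * RAM, so CFG_MAX_TASKS must be tuned to what the application needs. */
static tcb_t tcb_pool[CFG_MAX_TASKS];

tcb_t *task_create_pool(uint32_t *stk, size_t words, uint8_t prio)
{
    for (size_t i = 0u; i < CFG_MAX_TASKS; i++) {
        if (tcb_pool[i].in_use == 0u) {
            return (task_create_app(&tcb_pool[i], stk, words, prio) == 0)
                   ? &tcb_pool[i] : NULL;
        }
    }
    return NULL;  /* pool exhausted: CFG_MAX_TASKS is too small */
}
```

In the pooled version, an application that needs only two tasks still carries `CFG_MAX_TASKS` TCBs in RAM unless the setting is lowered, which is why a configurable quantity matters.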

File System Efficiency

Most devices need to store data and logs, either temporarily before uploading them to the cloud or more permanently. Any code designed for that purpose is a file system, whether it is written and tested by the developer or provided as part of the RTOS solution. The file system also presents opportunities for efficiency, ranging from the simple (how many memory buffers to reserve) to the complex (whether to support the complete set of POSIX operations).

Developers should start with their requirements for data storage. Will that data be operated on in the field, or merely stored and later transmitted? How many things will be measured? Should their data be kept separate or combined? Is the data stored until the device is later collected, or will it be transmitted to the cloud? How reliable is the media, and is the design completely safe from power failure?

To start with, some RTOSes provide a FAT-like file system, which includes code to perform I/O with a standard media format, including folders and files. Generally, such a file system isn’t very customizable, and it rarely protects against data loss during a power failure. Another option is Datalight’s Reliance Edge, which uses transaction points to provide a power-fail-safe environment. Of particular interest here is how its flexible design contributes to efficiency.

Reliance Edge lets developers customize its storage options. In the minimal use case, referred to as “File System Essentials”, no folders or even file names are used; data is stored in numbered inodes. The number of these inodes is fixed at compile time, but their sizes are not predetermined: one “file” can contain more data than the others, and the media is full only when the combined size of all the “files” reaches its capacity. Files can also be freely read, written, and truncated.
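The space-sharing behavior described above, a compile-time count of numbered files drawing on one pool of space, can be modeled in a few lines. This is a toy, RAM-only accounting sketch for illustration: it is not the Reliance Edge File System Essentials API, it stores no data and has no transactions, and the names `fse_write()`, `fse_truncate()`, `FSE_FILE_COUNT`, and `FSE_CAPACITY` are hypothetical.

```c
#include <stdint.h>

#define FSE_FILE_COUNT 4u      /* hypothetical: number of files fixed at compile time */
#define FSE_CAPACITY   1024u   /* hypothetical: bytes available on the media          */

static uint32_t file_size[FSE_FILE_COUNT];   /* per-file sizes are not predetermined */

static uint32_t total_used(void)
{
    uint32_t sum = 0u;
    for (uint32_t i = 0u; i < FSE_FILE_COUNT; i++) {
        sum += file_size[i];
    }
    return sum;
}

/* Account for writing len bytes at offset into file fnum. The write fails
 * only when the combined size of all files would exceed the capacity.
 * (Any gap before offset is counted as used in this toy model.) */
int fse_write(uint32_t fnum, uint32_t offset, uint32_t len)
{
    if (fnum >= FSE_FILE_COUNT || len > UINT32_MAX - offset) {
        return -1;
    }
    uint32_t end  = offset + len;
    uint32_t grow = (end > file_size[fnum]) ? end - file_size[fnum] : 0u;
    if (total_used() + grow > FSE_CAPACITY) {
        return -1;                     /* media full */
    }
    if (grow > 0u) {
        file_size[fnum] = end;
    }
    return 0;
}

/* Set file fnum to new_size bytes, releasing space when it shrinks. */
int fse_truncate(uint32_t fnum, uint32_t new_size)
{
    if (fnum >= FSE_FILE_COUNT) {
        return -1;
    }
    if (new_size > file_size[fnum] &&
        total_used() + (new_size - file_size[fnum]) > FSE_CAPACITY) {
        return -1;
    }
    file_size[fnum] = new_size;
    return 0;
}
```

For instance, file 0 may take 900 of the 1024 bytes on its own; a later write elsewhere fails only when the total would overflow, and truncating file 0 frees space for the others.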

Figure 2. Comparing the FAT-style file system and Reliance Edge.

In contrast, a FAT-style file system dedicates blocks of the media to two file allocation tables (Fig. 2). For each user data file, a file name and metadata are allocated, and the name may be quite large in order to support long file names. If subfolders are used, they also take space for metadata and long file names. All of this leaves less space on the media for the gathered user data.
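Some rough arithmetic shows where that space goes. In the FAT format, every directory entry is 32 bytes, and a long file name is stored in additional entries holding 13 characters each, alongside the file’s 8.3 short-name entry; on FAT32, each cluster also has a 4-byte entry in every copy of the allocation table. The helper names below are hypothetical, and the figures ignore rounding to whole sectors and the cluster that each subdirectory occupies.

```c
#include <stdint.h>

/* Directory bytes consumed by one file whose long name has name_len
 * characters: ceil(name_len / 13) long-name entries plus one 8.3 entry,
 * each 32 bytes. Assumes a long name is stored (true for any name that
 * does not fit the 8.3 format). */
uint32_t fat_dir_bytes(uint32_t name_len)
{
    uint32_t lfn_entries = (name_len + 12u) / 13u;
    return 32u * (lfn_entries + 1u);
}

/* Bytes occupied by num_fats copies of a FAT32 table that covers
 * `clusters` data clusters: 4 bytes per entry, plus the two reserved
 * entries at the start of the table. */
uint64_t fat32_table_bytes(uint64_t clusters, uint32_t num_fats)
{
    return (uint64_t)num_fats * 4u * (clusters + 2u);
}
```

For example, a 20-character name such as `glucose_readings.csv` costs three directory entries (96 bytes), and a 1 GiB FAT32 volume with 4 KiB clusters (262,144 clusters) spends roughly 2 MiB on its two FAT copies.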

For larger designs, a more POSIX-like environment is also an option. Here, file names, folders, and file system metadata (such as attributes, along with date and time) are configurable options. This can be a very good choice when an application that expects a POSIX interface is ported from another design. Ultimately, the choice of file system features should follow directly from the use case; tailoring the file system to the application’s actual requirements is by far the most resource-efficient solution.

Viewing Efficiency Holistically

Resource-usage concerns aside, efficiency has, for years, been front and center in the minds of developers shopping for a kernel, file system, and other software modules. That is because the rationale used to justify adoption of such modules is often that writing equivalent code from scratch would be a waste of time. In other words, application developers’ time is most efficiently spent writing applications, as opposed to slogging through tens of thousands of lines of infrastructure code.

However, just as kernel and file system use does not, in and of itself, guarantee efficient handling of CPU resources, the decision to bring such modules into a new project does not automatically ensure developers’ time will be spent in the most efficient manner. In order to truly allow users to focus on application-level code, an embedded software module must have an intuitive interface, and, importantly, that interface must be thoroughly documented. In the absence of helpful documentation, developers can burn through weeks of time resolving issues that, in hindsight, prove to be cases of misused functions.

Unfortunately, even well-documented code can unnecessarily consume development time if it doesn’t reliably function as it is described. This is why, in addition to demanding comprehensive documentation, developers should seek evidence of reliability—such as past certifications or test results—when selecting software for a new project. Practically every software module sounds reliable in promotional literature, but only a subset of modules provide credible proof that they run as well as advertised. Datalight’s Reliance Edge, for example, is accompanied by source code for a variety of different tests that allow application developers to confirm the file system is running reliably in a particular development environment.

Example: Developing an IoT Medical Device with Maximum Efficiency

What type of development environment might be present in an IoT project? Given the rapidly growing demand for connectivity in embedded devices, it’s impossible to identify one particular combination of hardware, software, and tool-chain that defines the space. It’s similarly challenging to find a single end-product that fully represents the range of possibilities in IoT. Nonetheless, discussions in this field can certainly benefit from concrete examples.

A product that helps to illustrate some of the challenges faced by IoT developers, and that, just a few years ago, would not even have been considered a connected device, is the blood-glucose meter. One of the key characteristics of this product is volume: blood-glucose meters are often produced in quantities of millions, and they tend to be sold below cost or even given away. Accordingly, the pressure to reduce BOM cost and minimize development time for these meters is intense. It’s not as though developing one of these devices is trivial, though. In fact, the feature list for a new blood-glucose meter is likely to include a color display, data logging capabilities, and cloud connectivity.

Facing such a complex list of requirements, a development team responsible for a blood-glucose meter would certainly want to leverage the multi-tasking capabilities of a kernel. Optimizing the kernel’s memory footprint would probably be one of the team’s top concerns, since the low-cost MCUs typical of high-volume products tend to have stingy Flash and RAM allocations.

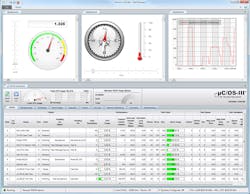

A key step in reducing the footprint would be to remove any kernel resources—such as TCBs—not needed by application code. It would also be helpful to eliminate waste within the stacks needed by the application’s various kernel-managed tasks. A tool like Micrium’s µC/Probe, a screenshot of which is shown in Figure 3, could be used to achieve this objective. µC/Probe offers insight into a kernel-based application’s stack and heap use, enabling developers to easily identify and correct inefficiencies.
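Stack-usage figures of this kind are commonly obtained by filling each task stack with a known pattern when the task is created and later counting how many words remain untouched. The sketch below shows that generic technique; it assumes a stack that grows downward from the top of the array, and the pattern value and function names are hypothetical rather than taken from µC/Probe or any µC/OS release.

```c
#include <stddef.h>
#include <stdint.h>

#define STK_FILL 0xDEADBEEFu   /* hypothetical fill pattern */

/* Fill the entire stack with the pattern before the task first runs. */
void stk_fill(uint32_t *stk, size_t words)
{
    for (size_t i = 0u; i < words; i++) {
        stk[i] = STK_FILL;
    }
}

/* Count words never overwritten, scanning up from the bottom (lowest
 * address) of a downward-growing stack toward the deepest use seen. */
size_t stk_free_words(const uint32_t *stk, size_t words)
{
    size_t n = 0u;
    while (n < words && stk[n] == STK_FILL) {
        n++;
    }
    return n;
}
```

A task that still shows, say, 200 of 256 words free after its worst-case paths have been exercised is a candidate for a smaller stack, although some margin should remain because the pattern reveals only the deepest use observed so far.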

Figure 3. µC/Probe provides run-time access to a system’s data, including kernel statistics.

When implementing the blood-glucose meter’s data logging functionality, the meter’s development team would benefit from the capabilities of a file system. Here, as with the kernel, the use of an off-the-shelf software module would relieve the team of the burden of developing infrastructure code and would thus contribute to a much shorter and more cost-effective development cycle. Processor resource usage, as one of the system’s overarching constraints, would inevitably need to be considered during development of the data logging code, so the use of a highly efficient transactional file system would be ideal. With a file system solution like Reliance Edge, the development team could easily scale back services to a bare minimum in order to leave as much memory as possible for the application.

Conclusion

While every embedded system presents its own unique demands, many of the approaches suitable for maximizing efficiency in a blood-glucose meter could easily be utilized in the development of other types of devices. Reuse of components has long been recognized as a best practice for software development, and much of the infrastructure code needed for a blood-glucose meter, including a real-time kernel and file system, could serve as the foundation for other devices with few changes beyond the replacement of a small amount of low-level code.

By choosing quality off-the-shelf components as the foundation for their projects, development teams can ensure the efficient use of their own resources, as well as those of their embedded hardware, and can focus on writing innovative application code to make their work stand out amongst the deluge of products the IoT is already beginning to deliver.

Tom Denholm is Technical Product Manager at Datalight.